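Here is roughly what needs to be done.

We will create resources of kinds such as Prometheus, Alertmanager, and ServiceMonitor.

My approach is simply to open a file in vi:

    vi Prometheus.yaml

Paste in the content you need, then save and quit with :wq.

Running ls should now show the YAML file you just created.

Then apply it:

    kubectl apply -f Prometheus.yaml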

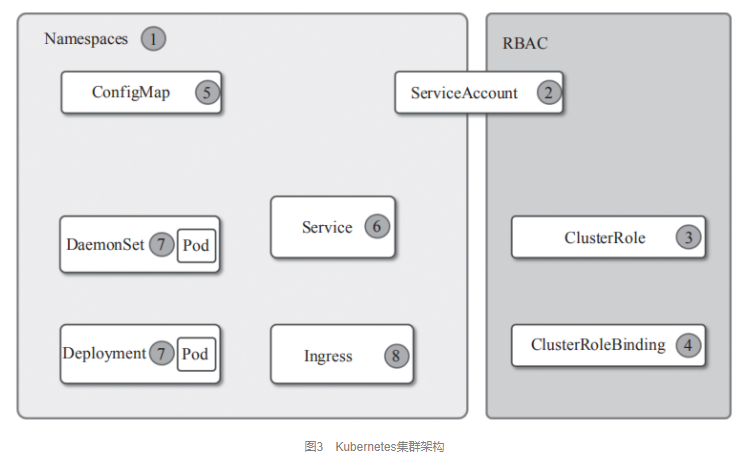

This sequence diagram is really well drawn.

Source: https://dbaplus.cn/news-134-3247-1.html

    kind: Namespace
    apiVersion: v1
    metadata:
      name: yc
    spec:
      finalizers:
      - kubernetes

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/version: v0.44.1
      name: prometheus-operator-yc
      namespace: yc

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/version: v0.44.1
      name: prometheus-operator-yc
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus-operator
    subjects:
    - kind: ServiceAccount
      # must match the name of the ServiceAccount the Deployment runs as
      name: prometheus-operator-yc
      namespace: yc

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/version: v0.44.1
      name: prometheus-operator
      namespace: yc
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/component: controller
          app.kubernetes.io/name: prometheus-operator
      template:
        metadata:
          labels:
            app.kubernetes.io/component: controller
            app.kubernetes.io/name: prometheus-operator
            app.kubernetes.io/version: v0.44.1
        spec:
          containers:
          - args:
            - --kubelet-service=kube-system/kubelet
            - --prometheus-config-reloader=quay.io/prometheus-operator/prometheus-config-reloader:v0.44.1
            image: quay.io/prometheus-operator/prometheus-operator:v0.44.1
            name: prometheus-operator
            ports:
            - containerPort: 8080
              name: http
            resources:
              limits:
                cpu: 200m
                memory: 200Mi
              requests:
                cpu: 100m
                memory: 100Mi
            securityContext:
              allowPrivilegeEscalation: false
          - args:
            - --logtostderr
            - --secure-listen-address=:8443
            - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
            - --upstream=http://127.0.0.1:8080/
            image: quay.io/brancz/kube-rbac-proxy:v0.8.0
            name: kube-rbac-proxy
            ports:
            - containerPort: 8443
              name: https
            securityContext:
              runAsGroup: 65532
              runAsNonRoot: true
              runAsUser: 65532
          nodeSelector:
            beta.kubernetes.io/os: linux
          securityContext:
            runAsNonRoot: true
            runAsUser: 65534
          serviceAccountName: prometheus-operator-yc

    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/version: v0.44.1
      name: prometheus-operator
      namespace: yc
    spec:
      clusterIP: None
      ports:
      - name: https
        port: 8443
        targetPort: https
      selector:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator

This creates:

ServiceAccount: prometheus-operator-yc

ClusterRoleBinding: prometheus-operator-yc

That is why the Deployment contains this line:

    serviceAccountName: prometheus-operator-yc
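
The naming relationship described here can be checked mechanically. Below is a minimal Python sketch, assuming the manifests are reduced to plain dictionaries that keep only the fields that have to agree. The dictionaries and the helper `is_bound` are hypothetical and exist only for illustration: the Deployment's `serviceAccountName` has to name the ServiceAccount that was created, and a ClusterRoleBinding subject has to reference that same name and namespace for the permissions to reach the operator.

```python
# Simplified stand-ins for the manifests; only the fields that must agree are kept.
service_account = {"name": "prometheus-operator-yc", "namespace": "yc"}
deployment = {"namespace": "yc", "serviceAccountName": "prometheus-operator-yc"}
binding_subjects = [
    {"kind": "ServiceAccount", "name": "prometheus-operator-yc", "namespace": "yc"},
]


def is_bound(account, subjects):
    """Return True if any subject refers to this ServiceAccount by name and namespace."""
    return any(
        s["kind"] == "ServiceAccount"
        and s["name"] == account["name"]
        and s["namespace"] == account["namespace"]
        for s in subjects
    )


# The Deployment must run as the ServiceAccount that was created ...
uses_account = (
    deployment["serviceAccountName"] == service_account["name"]
    and deployment["namespace"] == service_account["namespace"]
)
# ... and the binding must point at that same account.
print(uses_account, is_bound(service_account, binding_subjects))
```

If a subject carried a different name, for example `prometheus-operator`, `is_bound` would return False. In a real cluster that kind of mismatch typically shows up as permission errors in the operator's logs.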

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: alertmanager-main
      namespace: yc

    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: alertmanager-main
      namespace: yc
    stringData:
      alertmanager.yaml: |-
        "global":
          "resolve_timeout": "5m"
          "slack_api_url": "https://hooks.slack.com/services/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"
        "receivers":
        - "name": "slack-notifications"
          "slack_configs":
          - "channel": "456"
        "route":
          "receiver": "slack-notifications"
          "repeat_interval": "12h"
    type: Opaque

    ---
    apiVersion: monitoring.coreos.com/v1
    kind: Alertmanager
    metadata:
      labels:
        alertmanager: main
      name: main
      namespace: yc
    spec:
      # replace <your-host> with your own hostname
      externalUrl: https://<your-host>/alertmanager-test-yc
      image: quay.io/prometheus/alertmanager:v0.21.0
      nodeSelector:
        kubernetes.io/os: linux
      replicas: 3
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      serviceAccountName: alertmanager-main
      version: v0.21.0

    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        alertmanager: main
      name: alertmanager-main
      namespace: yc
    spec:
      ports:
      - name: web
        port: 9093
        targetPort: web
      selector:
        alertmanager: main
        app: alertmanager
      sessionAffinity: ClientIP

    ---
    kind: Ingress
    apiVersion: networking.k8s.io/v1beta1
    metadata:
      name: alertmanager
      namespace: yc
      annotations:
        appgw.ingress.kubernetes.io/backend-path-prefix: /
        appgw.ingress.kubernetes.io/connection-draining: 'true'
        appgw.ingress.kubernetes.io/connection-draining-timeout: '30'
        appgw.ingress.kubernetes.io/cookie-based-affinity: 'true'
        appgw.ingress.kubernetes.io/ssl-redirect: 'true'
        cert-manager.io/cluster-issuer: letsencrypt-production
        kubernetes.io/ingress.allow-http: 'false'
        kubernetes.io/ingress.class: azure/application-gateway
    spec:
      tls:
      - hosts:
        - <your-host>                  # fill in your own hostname
        secretName: <your-tls-secret>  # fill in; remember to create this Secret
      rules:
      - host: <your-host>              # fill in your own hostname
        http:
          paths:
          - path: /alertmanager-test-yc/*
            pathType: ImplementationSpecific
            backend:
              serviceName: alertmanager-main
              servicePort: 9093

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus-k8s-test
      namespace: yc

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus-k8s-test-yc
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - nonResourceURLs:
      - /metrics
      verbs:
      - get

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus-k8s-test-yc
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus-k8s-test-yc
    subjects:
    - kind: ServiceAccount
      name: prometheus-k8s-test
      namespace: yc

    ---
    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: yc
    spec:
      # replace <your-host> with your own hostname
      externalUrl: https://<your-host>/prometheus-test-yc
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: yc
          port: web
      image: quay.io/prometheus/prometheus:v2.22.1
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      probeNamespaceSelector: {}
      probeSelector: {}
      replicas: 2
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      serviceAccountName: prometheus-k8s-test
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.22.1

    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        prometheus: k8s
      name: prometheus-k8s-test
      namespace: yc
    spec:
      ports:
      - name: web
        port: 9090
        targetPort: web
      selector:
        app: prometheus
        prometheus: k8s
      sessionAffinity: ClientIP

    ---
    kind: Ingress
    apiVersion: networking.k8s.io/v1beta1
    metadata:
      name: prometheus
      namespace: yc
      annotations:
        appgw.ingress.kubernetes.io/backend-path-prefix: /
        appgw.ingress.kubernetes.io/connection-draining: 'true'
        appgw.ingress.kubernetes.io/connection-draining-timeout: '30'
        appgw.ingress.kubernetes.io/cookie-based-affinity: 'true'
        appgw.ingress.kubernetes.io/ssl-redirect: 'true'
        cert-manager.io/cluster-issuer: letsencrypt-production
        kubernetes.io/ingress.allow-http: 'false'
        kubernetes.io/ingress.class: azure/application-gateway
    spec:
      tls:
      - hosts:
        - <your-host>                  # fill in your own hostname
        secretName: <your-tls-secret>  # fill in your own Secret name
      rules:
      - host: <your-host>              # fill in your own hostname
        http:
          paths:
          - path: /prometheus-test-yc/*
            pathType: ImplementationSpecific
            backend:
              serviceName: prometheus-k8s-test
              servicePort: 9090

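To make testing easier, I recommend temporarily changing

    serviceMonitorSelector: {}

to

    serviceMonitorSelector:
      matchLabels:
        k8s-app: yc

If you have followed my steps this far, congratulations. Now go edit the YAML file that showed up in ls.

First, delete the existing Prometheus resource:

    kubectl delete Prometheus k8s -n yc

Then edit the file:

    vi Prometheus.yaml

Press a to enter insert mode, make the change, press Esc, and type :wq to save and quit. Then run kubectl apply -f Prometheus.yaml again to recreate the resource.

After that, Prometheus will only pick up ServiceMonitors labeled k8s-app: yc.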

The ServiceMonitors are as follows:

    ---
    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: prometheus
      name: prometheus
      namespace: yc
    spec:
      endpoints:
      - interval: 30s
        port: web
      selector:
        matchLabels:
          prometheus: k8s

    ---
    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        k8s-app: yc
      name: alertmanager
      namespace: yc
    spec:
      endpoints:
      - interval: 30s
        port: web
      selector:
        matchLabels:
          alertmanager: main

    ---
    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/version: v0.44.1
        k8s-app: yc
      name: prometheus-operator
      namespace: yc
    spec:
      endpoints:
      - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
        honorLabels: true
        port: https
        scheme: https
        tlsConfig:
          insecureSkipVerify: true
      selector:
        matchLabels:
          app.kubernetes.io/component: controller
          app.kubernetes.io/name: prometheus-operator
          app.kubernetes.io/version: v0.44.1
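
To see which of the three ServiceMonitors above a `serviceMonitorSelector` with `matchLabels: k8s-app: yc` would pick up, here is a minimal Python sketch of matchLabels semantics: every key/value pair in the selector must appear in the object's labels. The helper `matches` and the `service_monitors` dictionary are illustrative only and are not part of the Prometheus Operator; the labels are copied from the manifests in this post.

```python
def matches(match_labels, labels):
    """Return True when every key/value pair in match_labels is present in labels."""
    return all(labels.get(key) == value for key, value in match_labels.items())


# metadata.labels of each ServiceMonitor, keyed by metadata.name
service_monitors = {
    "prometheus": {"k8s-app": "prometheus"},
    "alertmanager": {"k8s-app": "yc"},
    "prometheus-operator": {
        "app.kubernetes.io/component": "controller",
        "app.kubernetes.io/name": "prometheus-operator",
        "app.kubernetes.io/version": "v0.44.1",
        "k8s-app": "yc",
    },
}

narrow = {"k8s-app": "yc"}  # the selector recommended for testing
everything = {}             # the original empty selector

selected_narrow = sorted(
    name for name, labels in service_monitors.items() if matches(narrow, labels)
)
selected_all = sorted(
    name for name, labels in service_monitors.items() if matches(everything, labels)
)

print(selected_narrow)  # ['alertmanager', 'prometheus-operator']
print(selected_all)     # ['alertmanager', 'prometheus', 'prometheus-operator']
```

With the empty selector all three match. The narrower selector skips the prometheus ServiceMonitor because its label is k8s-app: prometheus.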

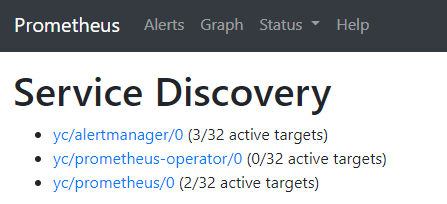

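You should then see something like the screenshot above.

That is roughly it for the setup. Testing will be covered in the next post. Thanks!

A Secret containing a .gz file also gets generated. You can look inside it like this:

    kubectl get secret -n yc prometheus-k8s -o json | jq -r '.data."prometheus.yaml.gz"' | base64 -d | gzip -d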
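
The same decoding can be done in Python. This is a minimal sketch, assuming you already have the base64 string stored under `.data."prometheus.yaml.gz"`. Since no cluster is involved here, the example builds a sample value itself; the sample config text and the function name `decode_secret_value` are made up for illustration.

```python
import base64
import gzip


def decode_secret_value(b64_value: str) -> str:
    """Undo the two layers: base64 (Secret encoding), then gzip (the .gz payload)."""
    return gzip.decompress(base64.b64decode(b64_value)).decode("utf-8")


# Build a stand-in for the Secret's data field: gzip first, then base64.
sample_config = "global:\n  scrape_interval: 30s\n"
encoded = base64.b64encode(gzip.compress(sample_config.encode("utf-8"))).decode("ascii")

print(decode_secret_value(encoded))
```

The order matters: base64 has to be removed before gzip, mirroring `base64 -d | gzip -d` in the shell pipeline.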