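Picking up from Day 27, today's goal is to get the ARC runner to execute a container job in a job container. Having worked through the careful write-up in this article, I feel the odds of success are very high.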

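The one difference is that the article's author ran the experiment on a production-grade Kubernetes cluster, whereas I'm playing in the sandbox with Minikube, so I'm very likely to hit a few pitfalls.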

According to the article, ARC currently supports Dind (Docker-in-Docker mode) and actions/runner-container-hooks (Kubernetes mode). Since I'm already on Kubernetes, I'll try Kubernetes mode and leave Docker-in-Docker for when I have spare time.

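Create a file named runner-scale-set-values.yaml with the content below, which breaks down into three main blocks (I recommend rereading the official documentation to learn the details):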

    containerMode:
      type: "kubernetes"
      # Kubernetes mode relies on persistent volumes to share job details between the runner pod and the container job pod.
      # To use Kubernetes mode, you must do one of the following.
      # - Create persistent volumes available for the runner pods to claim.
      # - Use a solution to automatically provision persistent volumes on demand.
      kubernetesModeWorkVolumeClaim:
        accessModes: ["ReadWriteOnce"]
        storageClassName: "standard" # this setting is a deep pitfall
        resources:
          requests:
            storage: 1Gi
    template:
      spec:
        # Solve 'Access to the path /home/runner/_work/_tool is denied' error
        # You may see this error if you're using Kubernetes mode with persistent volumes. This error occurs if the runner container is running with a non-root user and is causing a permissions mismatch with the mounted volume.
        # You can work around the issue by using `initContainers` to change the mounted volume's ownership, as follows.
        # see 'https://docs.github.com/en/actions/hosting-your-own-runners/managing-self-hosted-runners-with-actions-runner-controller/troubleshooting-actions-runner-controller-errors#error-access-to-the-path-homerunner_work_tool-is-denied'
        initContainers:
          - name: kube-init
            image: ghcr.io/actions/actions-runner:latest
            command: ["sudo", "chown", "-R", "1001:1001", "/home/runner/_work"]
            volumeMounts:
              - name: work
                mountPath: /home/runner/_work
        # Allow jobs without a job container to run
        containers:
          - name: runner
            image: ghcr.io/actions/actions-runner:latest
            command: ["/home/runner/run.sh"]
            env:
              - name: ACTIONS_RUNNER_REQUIRE_JOB_CONTAINER
                value: "false"
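
Since `storageClassName` has to name a storage class that actually exists in the cluster, it may help to check what the cluster offers before upgrading. The following is a minimal sketch, not part of the original setup: the helper name `default_storage_class` is hypothetical, and it assumes that `kubectl get storageclass` marks the default class with `(default)` right after its name.

```shell
# Hypothetical helper: read `kubectl get storageclass` output on stdin and
# print the name of every storage class marked "(default)".
default_storage_class() {
  # NR > 1 skips the header row; the marker shows up as the second field.
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Usage against a live cluster (requires kubectl and cluster access):
#   kubectl get storageclass | default_storage_class
```

Whatever name it prints is a reasonable first candidate for `storageClassName`; any other class listed by `kubectl get storageclass` should also work as long as it has a working provisioner.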

Update the existing arc-runner-set:

    user@host:~$ vi runner-scale-set-values.yaml
    user@host:~$ INSTALLATION_NAME="arc-runner-set"
    NAMESPACE="arc-runners"
    GITHUB_CONFIG_URL="https://github.com/<full URL of the repo>"
    GITHUB_PAT="pre-defined-secret"
    helm upgrade "${INSTALLATION_NAME}" \
        --namespace "${NAMESPACE}" \
        --set githubConfigUrl="${GITHUB_CONFIG_URL}" \
        --set githubConfigSecret="${GITHUB_PAT}" \
        --set maxRunners=10 \
        --set minRunners=2 \
        -f runner-scale-set-values.yaml \
        oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set
    Pulled: ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set:0.6.1
    Digest: sha256:d0a4e067e15a2c616c6c2d049e98d9dc8e8aadb11ac6625cd01ee3ca30db8caa
    false
    Release "arc-runner-set" has been upgraded. Happy Helming!
    NAME: arc-runner-set
    LAST DEPLOYED: Fri Sep 29 15:42:32 2023
    NAMESPACE: arc-runners
    STATUS: deployed
    REVISION: 2
    TEST SUITE: None
    NOTES:
    Thank you for installing gha-runner-scale-set.

    Your release is named arc-runner-set.

    user@host:~$ kubectl -n arc-runners get pvc
    NAME                                     STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    arc-runner-set-rvg27-runner-lp7jv-work   Pending                                      managed        5m51s
    arc-runner-set-rvg27-runner-st95l-work   Pending                                      managed        5m51s
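
When claims sit in `Pending` like this, it can be handy to pull out just the unbound ones and then read their events. A small sketch under stated assumptions: `unbound_pvcs` is a hypothetical helper name, and it relies on the default column layout of `kubectl get pvc --no-headers`, where the first column is the claim name and the second is its status.

```shell
# Hypothetical helper: read `kubectl get pvc --no-headers` output on stdin
# and print the name of every claim whose STATUS column is not "Bound".
unbound_pvcs() {
  # NF > 0 ignores blank lines.
  awk 'NF > 0 && $2 != "Bound" { print $1 }'
}

# Usage against a live cluster (requires kubectl and cluster access):
#   kubectl -n arc-runners get pvc --no-headers | unbound_pvcs
#   kubectl -n arc-runners describe pvc <claim-name>   # then read the Events section
```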

Each newly created runner requests a PVC. These PVCs all obtained a volume, their status switched to 'Bound', and a 'ProvisioningSucceeded' event appeared:

    user@host:~$ kubectl -n arc-runners get pvc
    NAME                                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    arc-runner-set-mvght-runner-64g4l-work   Bound    pvc-ae602a7c-4c83-4de7-a73f-8fc3f71dc75f   1Gi        RWO            standard       8m41s
    arc-runner-set-mvght-runner-kxg5q-work   Bound    pvc-fb9669f8-894d-49e9-bb6f-63b838ed5d91   1Gi        RWO            standard       9m52s

    user@host:~$ kubectl describe pvc -A
    Name:          arc-runner-set-mvght-runner-2t7t6-work
    Namespace:     arc-runners
    StorageClass:  standard
    Status:        Bound
    Volume:        pvc-b9132329-9819-419d-8a5c-69f489636639
    Labels:        <none>
    Annotations:   pv.kubernetes.io/bind-completed: yes
                   pv.kubernetes.io/bound-by-controller: yes
                   volume.beta.kubernetes.io/storage-provisioner: k8s.io/minikube-hostpath
                   volume.kubernetes.io/storage-provisioner: k8s.io/minikube-hostpath
    Finalizers:    [kubernetes.io/pvc-protection]
    Capacity:      1Gi
    Access Modes:  RWO
    VolumeMode:    Filesystem
    Used By:       <none>
    Events:
      Type    Reason                 Age  From                                                                    Message
      ----    ------                 ---- ----                                                                    -------
      Normal  Provisioning           1s   k8s.io/minikube-hostpath_minikube_4579bf80-8497-42e4-809a-98381a3db90b  External provisioner is provisioning volume for claim "arc-runners/arc-runner-set-mvght-runner-2t7t6-work"
      Normal  ExternalProvisioning   1s   persistentvolume-controller                                             waiting for a volume to be created, either by external provisioner "k8s.io/minikube-hostpath" or manually created by system administrator
      Normal  ProvisioningSucceeded  1s   k8s.io/minikube-hostpath_minikube_4579bf80-8497-42e4-809a-98381a3db90b  Successfully provisioned volume pvc-b9132329-9819-419d-8a5c-69f489636639

    Name:          arc-runner-set-mvght-runner-shxdf-work
    Namespace:     arc-runners
    StorageClass:  standard
    Status:        Bound
    Volume:        pvc-f6fdbf1f-602a-42f8-b2ff-573093dc53ad
    Labels:        <none>
    Annotations:   pv.kubernetes.io/bind-completed: yes
                   pv.kubernetes.io/bound-by-controller: yes
                   volume.beta.kubernetes.io/storage-provisioner: k8s.io/minikube-hostpath
                   volume.kubernetes.io/storage-provisioner: k8s.io/minikube-hostpath
    Finalizers:    [kubernetes.io/pvc-protection]
    Capacity:      1Gi
    Access Modes:  RWO
    VolumeMode:    Filesystem
    Used By:       <none>
    Events:
      Type    Reason                 Age              From                                                                    Message
      ----    ------                 ----             ----                                                                    -------
      Normal  ExternalProvisioning   1s (x2 over 1s)  persistentvolume-controller                                             waiting for a volume to be created, either by external provisioner "k8s.io/minikube-hostpath" or manually created by system administrator
      Normal  Provisioning           1s               k8s.io/minikube-hostpath_minikube_4579bf80-8497-42e4-809a-98381a3db90b  External provisioner is provisioning volume for claim "arc-runners/arc-runner-set-mvght-runner-shxdf-work"
      Normal  ProvisioningSucceeded  1s               k8s.io/minikube-hostpath_minikube_4579bf80-8497-42e4-809a-98381a3db90b  Successfully provisioned volume pvc-f6fdbf1f-602a-42f8-b2ff-573093dc53ad

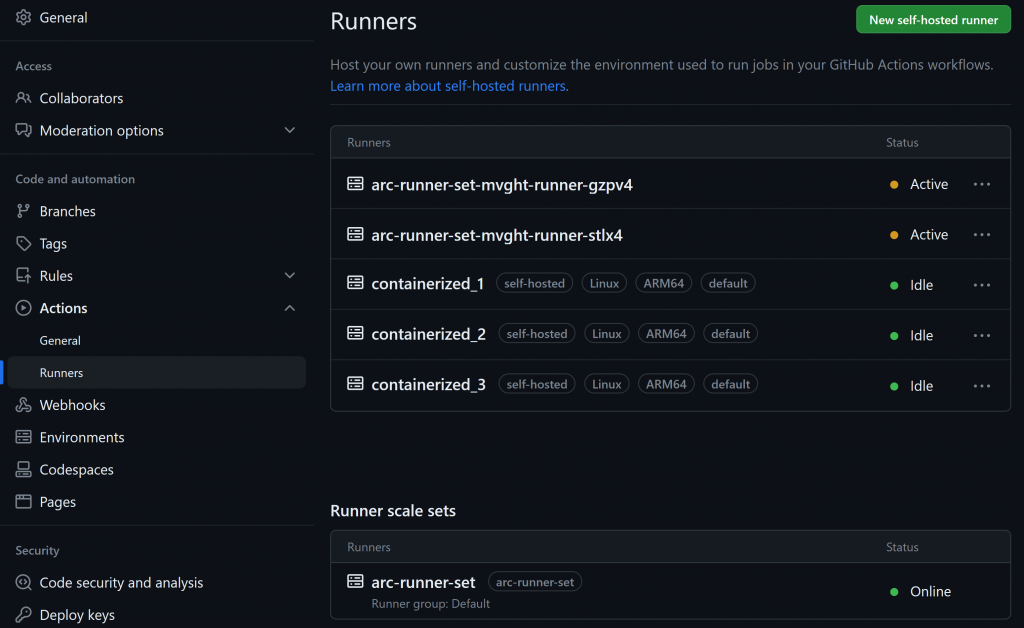

Over on GitHub, the runners that arc-runner-set created automatically have finally switched to Active and are running the workflow:

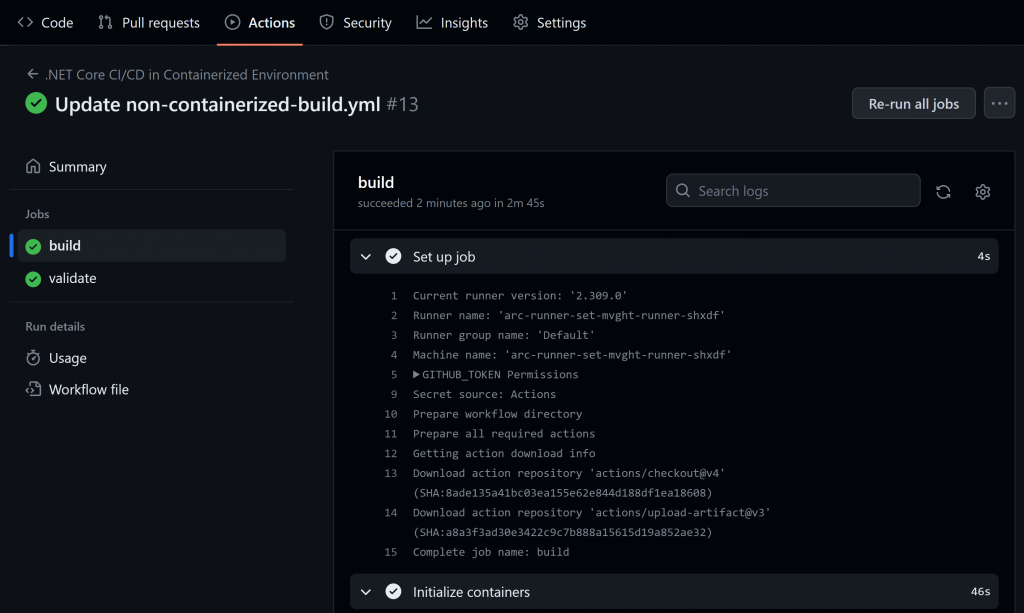

Even more moving, it actually finished the containerized build job. This is the first time I have run a workflow to completion inside a container on a self-hosted runner.
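
For reference, a container job is simply a job that declares a `container:` key. The sketch below writes a hypothetical workflow file of that shape; the file name, workflow name, job name, image, and steps are all assumptions rather than the workflow actually used here. The one value taken from this setup is `runs-on`, which is set to the Helm installation name `arc-runner-set`.

```shell
# Write a hypothetical workflow whose steps run inside a job container.
mkdir -p .github/workflows
cat > .github/workflows/container-job.yml <<'EOF'
name: container-job-demo
on: workflow_dispatch
jobs:
  build:
    # Assumption: the runner scale set is installed as "arc-runner-set"
    runs-on: arc-runner-set
    # The job container; in Kubernetes mode it runs in its own pod
    container:
      image: node:20
    steps:
      - uses: actions/checkout@v4
      - run: node --version
EOF
```

Because the values file sets `ACTIONS_RUNNER_REQUIRE_JOB_CONTAINER` to "false", a job without the `container:` key should also be accepted by these runners.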

I'm exhausted. I did pick up some Kubernetes knowledge, but my company doesn't have a Kubernetes cluster at all.

Conclusion 1: using ARC at my company looks like a non-starter for now, haha

Conclusion 2: my Mid-Autumn Festival holiday was both fulfilling and empty 🤣