網上各位大神好,我是程式新手!跟著"Python大數據特訓班"的範例打了程式,明明都照著做,但就是跑不出書上的結果,希望有大神能指導一下哪裡出了問題QQ

目前我在想是不是網頁的連結錯誤?

import requests,os

from bs4 import BeautifulSoup

from urllib.request import urlopen

url='https://running.biji.co/index.php?q=album&act=photo_list&album_id=30668&cid=5791&type=album&subtitle=第三屆埔里跑 Puli Power 山城派對馬拉松 - 向善橋(約34K)'

html=requests.get(url)

soup=BeautifulSoup(html.text,'html.parser')

title=soup.find('h1',{'class':'album-title flex-1'}).text.strip()

url='https://running.biji.co/index.php?pop=ajax&func=album&fename=load_more_photos_in_listing_computer'

payload={'type':'ablum','rows':'0','need_rows':'20','cid':'5791','album_id':'30668'}

html=requests.post(url,data=payload)

soup = BeautifulSoup(html.text,'html.parser')

images_dir=title + "/"

if not os.path.exists(images_dir):

os.mkdir(images_dir)

photos = soup.select('.photo_img')

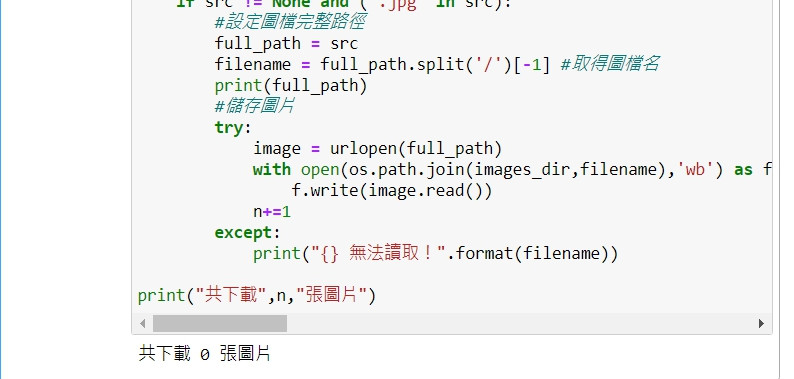

n=0

for photo in photos:

src=photo['src']

if src != None and ('.jpg' in src):

full_path = src

filename = full_path.split('/')[-1]

print(full_path)

try:

image = urlopen(full_path)

with open(os.path.join(images_dir,filename),'wb') as f:

f.write(image.read())

n+=1

except:

print("{} 無法讀取!".format(filename))

print("共下載",n,"張圖片")

--我的結果顯示--

--書上要的結果--

麻煩大家了〒▽〒

其實用第一個網址就可以抓到了

可以直接用第8行的soup直接抓圖片

soup=BeautifulSoup(html.text,'html.parser')

photos = soup.select('.photo_img')

...

兩個get的方法

下面兩個進入的網址都是一樣的只是第二個方法是用 params 傳入資料

方法一

url='https://running.biji.co/index.php?q=album&act=photo_list&album_id=30668'

html = requests.get(url)

方法二

url='https://running.biji.co/index.php'

payload={'q':'album', 'act':'photo_list', 'album_id':'30668'}

html=requests.get(url,params=payload)

在

payload={'q':'album', 'act':'photo_list', 'album_id':'30668'}

這部分最少只需要給q, act, album_id的參數就可以進入那個網頁

import requests,os

from bs4 import BeautifulSoup

from urllib.request import urlopen

url='https://running.biji.co/index.php?q=album&act=photo_list&album_id=30668&cid=5791&type=album&subtitle=第三屆埔里跑 Puli Power 山城派對馬拉松 - 向善橋(約34K)'

html=requests.get(url)

soup=BeautifulSoup(html.text,'html.parser')

title=soup.find('h1',{'class':'album-title flex-1'}).text.strip()

# url='https://running.biji.co/index.php?func=album&fename=load_more_photos_in_listing_computer'

# payload={'type':'ablum', 'rows':'0','need_rows':'20','cid':'5791','album_id':'30668'}

url='https://running.biji.co/index.php'

payload={'q':'album', 'act':'photo_list', 'album_id':'30668'}

html=requests.get(url,params=payload)

soup = BeautifulSoup(html.text,'html.parser')

images_dir=title + "/"

if not os.path.exists(images_dir):

os.mkdir(images_dir)

photos = soup.select('.photo_img')

n=0

for photo in photos:

src=photo['src']

if src != None and ('.jpg' in src):

full_path = src

filename = full_path.split('/')[-1]

print(full_path)

try:

image = urlopen(full_path)

with open(os.path.join(images_dir,filename),'wb') as f:

f.write(image.read())

n+=1

except:

print("{} 無法讀取!".format(filename))

print("共下載",n,"張圖片")

謝謝大神的幫忙!(終於破新手可以回覆了QQ)

現在還遇到一個問題是要設定最大可以下載幾張相片,但是不管設定載幾張,相簿都只有20張,請問要怎麼解呢?

...

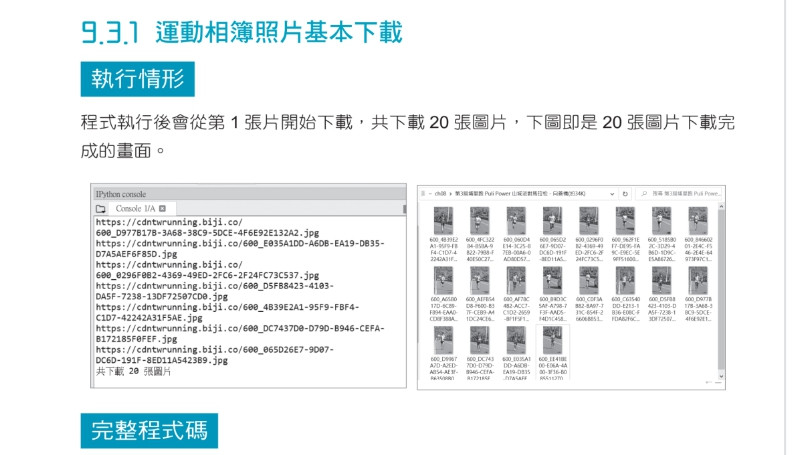

#第3屆埔里跑 Puli Power 山城派對馬拉松 - 向善橋(約34K)

url='https://running.biji.co/index.php?q=album&act=photo_list&album_id=30668&cid=5791&type=album&subtitle=第三屆埔里跑 Puli Power 山城派對馬拉松 - 向善橋(約34K)'

html=requests.get(url)

soup=BeautifulSoup(html.text,'html.parser')

title=soup.find('h1',{'class':'album-title flex-1'}).text.strip()+"_全部相片"

#url='https://running.biji.co/index.php?pop=ajax&func=album&fename=load_more_photos_in_listing_computer'

n=0

for i in range(200):

payload={'type':'ablum','rows':str(i*20),'need_rows':'20','cid':'5791','album_id':'30668'}

html=requests.post(url,data=payload)

#在回憶頁面中找出所有照片連結

soup = BeautifulSoup(html.text,'html.parser')

#以標題建立目錄儲存圖片

images_dir=title + "/"

if not os.path.exists(images_dir):

os.mkdir(images_dir)

#處理所有 <img> 標籤

photos = soup.select('.photo_img')

for photo in photos:

#讀取 scr 屬性內容

src=photo['src']

#讀取 .jpg 檔

if src != None and ('.jpg' in src):

#設定圖檔完整路徑

full_path = src

filename = full_path.split('/')[-1] #取得圖檔名

print(full_path)

#儲存圖片

try:

image = urlopen(full_path)

with open(os.path.join(images_dir,filename),'wb') as f:

f.write(image.read())

n+=1

print("n=",n)

if n>=30: #最多下載30張

break #跳出迴圈

except:

print("{} 無法讀取!".format(filename))

if n>=30: #最多下載30張

break #跳出迴圈

print("共下載",n,"張圖片")

...