Let's proceed to the 2nd lesson of Machine Learning Infrastructure.

If you think this topic is quite boring, please bear with me.

We will create and configure deep neural network models with Google Cloud ML, then use the Google Cloud ML Engine to make predictions using your trained models.

Create a deep neural network machine learning model

Copy the pre-configured Python model code from your storage bucket into local storage in your lab VM:

gsutil cp gs://${BUCKET}/flights/chapter9/linear-model.tar.gz ~

Untar the files:

cd ~

tar -zxvf linear-model.tar.gz

Change to the tensorflow directory:

cd ~/tensorflow

Edit model.py to configure the code to use a Deep Neural Network machine learning model:

nano -w ~/tensorflow/flights/trainer/model.py

Insert the following code below the linear_model function, above the definition for the serving_input_fn:

def create_embed(sparse_col):
    dim = 10 # default
    if hasattr(sparse_col, 'bucket_size'):
        nbins = sparse_col.bucket_size
        if nbins is not None:
            dim = 1 + int(round(np.log2(nbins)))
    return tflayers.embedding_column(sparse_col, dimension=dim)
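To get a feel for the sizing rule inside create_embed, here is a small sketch that reproduces only the arithmetic, without TensorFlow. The helper name embedding_dim is my own and is not part of model.py; it assumes the rule is exactly 1 + round(log2(bucket_size)), with 10 as the fallback when no bucket size is known.

```python
import math


def embedding_dim(bucket_size=None, default=10):
    """Mirror the sizing rule used in create_embed (hypothetical helper).

    Falls back to `default` when the column has no known bucket size.
    """
    if bucket_size is None:
        return default
    return 1 + int(round(math.log2(bucket_size)))


if __name__ == "__main__":
    # Print the embedding width for a few example bucket sizes.
    for nbins in (5, 100, 1000):
        print(nbins, embedding_dim(nbins))
```

The point of the rule is that the embedding width grows with the logarithm of the number of buckets, so even a column with many buckets gets a fairly small dense representation.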

Now add a function definition to create the deep neural network model.

Add the following below the create_embed function you just added:

def dnn_model(output_dir):
    real, sparse = get_features()
    all = {}
    all.update(real)

    # create embeddings of the sparse columns
    embed = {
        colname: create_embed(col)
        for colname, col in sparse.items()
    }
    all.update(embed)

    estimator = tflearn.DNNClassifier(
        model_dir=output_dir,
        feature_columns=all.values(),
        hidden_units=[64, 16, 4])
    estimator = tf.contrib.estimator.add_metrics(estimator, my_rmse)
    return estimator
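If it helps to picture what dnn_model feeds into the network, the sketch below estimates the width of the input layer and the shape of each hidden layer's weight matrix. Everything here is illustrative: the column names and bucket sizes are made up (the real ones come from get_features()), and it assumes each real-valued column contributes a single number while each embedded column contributes as many numbers as its embedding dimension.

```python
import math

# Hypothetical features, for illustration only.
REAL_COLUMNS = ["dep_delay", "taxiout", "distance"]
SPARSE_BUCKETS = {"carrier": 1000, "origin": 1000, "dest": 1000}


def embedding_dim(bucket_size):
    # Same sizing rule as create_embed: 1 + round(log2(bucket_size)).
    return 1 + int(round(math.log2(bucket_size)))


def dnn_input_width(real_columns, sparse_buckets):
    # One value per real column plus one embedding vector per sparse column.
    return len(real_columns) + sum(
        embedding_dim(n) for n in sparse_buckets.values())


def layer_shapes(input_width, hidden_units):
    # Pair each layer's input width with its output width.
    widths = [input_width] + list(hidden_units)
    return list(zip(widths[:-1], widths[1:]))


if __name__ == "__main__":
    width = dnn_input_width(REAL_COLUMNS, SPARSE_BUCKETS)
    print(width)
    print(layer_shapes(width, [64, 16, 4]))
```

With hidden_units=[64, 16, 4] the network narrows step by step, so most of the weights sit in the first layer, right after the embeddings.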

Page down to the run_experiment function at the end of the file. You need to reconfigure the experiment to call the deep neural network estimator function instead of the linear classifier function.

Replace the line estimator = linear_model(output_dir) with:

#estimator = linear_model(output_dir)

estimator = dnn_model(output_dir)

Save model.py.

Now set some environment variables to point to Cloud Storage buckets for the source and output locations for your data and model:

export REGION=us-central1

export OUTPUT_DIR=gs://${BUCKET}/flights/chapter9/output

export DATA_DIR=gs://${BUCKET}/flights/chapter8/output

Create a job name so that you can identify the job later, then change to the working directory:

export JOBNAME=dnn_flights_$(date -u +%y%m%d_%H%M%S)

cd ~/tensorflow
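In case you want to build the same kind of job name from a script instead of the shell, here is a rough Python equivalent of the date -u +%y%m%d_%H%M%S pattern. The function name make_job_name is my own invention, not something from the lab.

```python
from datetime import datetime, timezone


def make_job_name(prefix="dnn_flights", now=None):
    """Build a name like dnn_flights_240102_030405 from a UTC timestamp."""
    if now is None:
        now = datetime.now(timezone.utc)
    return "{}_{}".format(prefix, now.strftime("%y%m%d_%H%M%S"))


if __name__ == "__main__":
    print(make_job_name())
```

Putting the timestamp in the name means every submission gets a different job name, so you can tell the runs apart in the job list.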

Now submit the training job. The parameters for your own trainer, such as the locations of the training and evaluation data, go after all of the gcloud parameters, following the bare -- separator:

gcloud ai-platform jobs submit training $JOBNAME \
  --module-name=trainer.task \
  --package-path=$(pwd)/flights/trainer \
  --job-dir=$OUTPUT_DIR \
  --staging-bucket=gs://$BUCKET \
  --region=$REGION \
  --scale-tier=STANDARD_1 \
  --runtime-version=1.15 \
  -- \
  --output_dir=$OUTPUT_DIR \
  --traindata $DATA_DIR/train* --evaldata $DATA_DIR/test*
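Everything after the bare -- is handed to the trainer module rather than interpreted by gcloud. The lab does not show trainer/task.py, so the following is only a guess at how such a module could read those flags with argparse. The function name parse_trainer_args and the bucket my-bucket are placeholders I made up.

```python
import argparse


def parse_trainer_args(argv):
    """Parse the custom flags passed after the bare -- (illustrative only)."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--traindata", required=True,
                        help="Path or pattern for the training data")
    parser.add_argument("--evaldata", required=True,
                        help="Path or pattern for the evaluation data")
    parser.add_argument("--output_dir", required=True,
                        help="Where to write checkpoints and the model")
    # The service may also forward --job-dir; accept it so parsing does not fail.
    parser.add_argument("--job-dir", default=None)
    # Ignore any flags this sketch does not know about.
    args, _unknown = parser.parse_known_args(argv)
    return args


if __name__ == "__main__":
    args = parse_trainer_args([
        "--output_dir=gs://my-bucket/flights/chapter9/output",
        "--traindata", "gs://my-bucket/flights/chapter8/output/train*",
        "--evaldata", "gs://my-bucket/flights/chapter8/output/test*",
    ])
    print(args.traindata)
    print(args.evaldata)
```

Note that both --flag=value and --flag value forms work with argparse, which is why the command above can mix the two styles.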

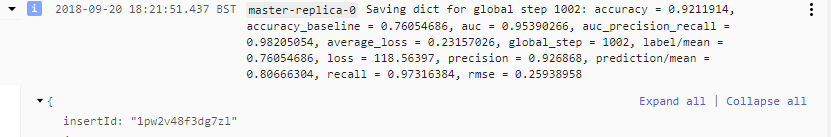

Then you will see the full list of analysis metrics.

This lesson is quite long, so I will split it into 4 parts.

A long article makes it easy to get distracted.

So let's continue tomorrow~