TGIF!!! Thank God It's Friday!!!

But I believe some of you, like me, need to work tomorrow...

Before our long holiday next week, we just need to "suffer" one more day...

FIGHTING!!!

OK~ Let's continue with the 2nd part~

Add a wide and deep neural network model

You will now extend the model with additional features that associate airports with broad geographic zones and, from those zones, derive simplified air traffic corridors.

You start by creating location buckets for an n*n grid covering the USA and then assigning each departure and arrival airport to its grid location.
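To make the bucketing concrete, here is a small NumPy sketch of what the latitude/longitude bucketization does, using the same boundaries that wide_and_deep_model builds with np.linspace. The helper name grid_cell is hypothetical, np.digitize stands in for tflayers.bucketized_column (both put a value equal to a boundary into the upper bucket), and the airport coordinates are approximate, for illustration only.

```python
import numpy as np

def grid_cell(lat, lon, nbuckets=5):
    """Map a (lat, lon) pair to a (lat_bucket, lon_bucket) grid cell.

    Hypothetical helper mirroring the boundaries used in wide_and_deep_model.
    nbuckets boundaries produce nbuckets + 1 buckets: values below the first
    boundary go to bucket 0, values at or above the last go to bucket nbuckets.
    """
    latbuckets = np.linspace(20.0, 50.0, nbuckets)    # [20, 27.5, 35, 42.5, 50]
    lonbuckets = np.linspace(-120.0, -70.0, nbuckets)  # [-120, -107.5, -95, -82.5, -70]
    return int(np.digitize(lat, latbuckets)), int(np.digitize(lon, lonbuckets))

# Approximate airport coordinates, for illustration only
print(grid_cell(33.64, -84.43))  # ATL -> (2, 3)
print(grid_cell(41.98, -87.90))  # ORD -> (3, 3)
```

Note that with nbuckets=5 each axis actually has 6 buckets, so the grid has 36 cells, while the model hashes each location cross into nbuckets*nbuckets = 25 buckets; some cells therefore share a hash bucket.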

You can also create additional features with a technique called feature crossing, which combines existing features into new ones that may be more informative together than separately.

In this case, you cross the departure and arrival grid locations to approximate air traffic corridors, and you cross the origin and destination airport IDs so that each airport pair becomes a feature in the model.
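Conceptually, a hashed feature cross turns a combination of categorical values into a single bucket id. The sketch below illustrates the idea with a hypothetical cross_feature helper; it uses md5 purely to get a deterministic hash, whereas TensorFlow's crossed_column uses its own fingerprint hashing, so the bucket ids will not match TensorFlow's.

```python
import hashlib

def cross_feature(values, hash_bucket_size):
    """Hash a combination of categorical values into one of hash_bucket_size buckets.

    Hypothetical illustration only: TensorFlow uses a different hash function.
    """
    # Join the values in order, so (A, B) and (B, A) are different keys
    key = "_X_".join(str(v) for v in values)
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % hash_bucket_size

nbuckets = 5
# Crossing two locations that are each hashed into nbuckets*nbuckets buckets gives
# up to (nbuckets*nbuckets)**2 == nbuckets**4 corridors, which is why the model
# code uses nbuckets ** 4 as the size of the dep_arr cross.
corridor = cross_feature([(2, 3), (3, 3)], nbuckets ** 4)  # departure cell -> arrival cell
route = cross_feature(["ATL", "ORD"], 1000)                # origin -> destination airport IDs
print(corridor, route)
```

Because the join is ordered, a flight from ATL to ORD and one from ORD to ATL are different keys, which matches the idea of a directional corridor.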

Enter the following command to edit model.py again:

nano -w ~/tensorflow/flights/trainer/model.py

Add the following two functions above the linear_model function to implement a wide and deep neural network model:

    # Relies on get_features(), create_embed() and my_rmse from the earlier steps,
    # and on np, tf, tflayers and tflearn being imported at the top of model.py.
    def parse_hidden_units(s):
        # '64,32' -> [64, 32]: one entry per hidden layer
        return [int(item) for item in s.split(',')]

    def wide_and_deep_model(output_dir, nbuckets=5, hidden_units='64,32', learning_rate=0.01):
        real, sparse = get_features()

        # lat/lon cols can be discretized to "air traffic corridors"
        latbuckets = np.linspace(20.0, 50.0, nbuckets).tolist()
        lonbuckets = np.linspace(-120.0, -70.0, nbuckets).tolist()
        disc = {}
        disc.update({
            'd_{}'.format(key): tflayers.bucketized_column(real[key], latbuckets)
            for key in ['dep_lat', 'arr_lat']
        })
        disc.update({
            'd_{}'.format(key): tflayers.bucketized_column(real[key], lonbuckets)
            for key in ['dep_lon', 'arr_lon']
        })

        # cross columns that make sense in combination
        sparse['dep_loc'] = tflayers.crossed_column(
            [disc['d_dep_lat'], disc['d_dep_lon']], nbuckets * nbuckets)
        sparse['arr_loc'] = tflayers.crossed_column(
            [disc['d_arr_lat'], disc['d_arr_lon']], nbuckets * nbuckets)
        sparse['dep_arr'] = tflayers.crossed_column(
            [sparse['dep_loc'], sparse['arr_loc']], nbuckets ** 4)
        sparse['ori_dest'] = tflayers.crossed_column(
            [sparse['origin'], sparse['dest']], hash_bucket_size=1000)

        # create embeddings of all the sparse columns
        embed = {
            colname: create_embed(col)
            for colname, col in sparse.items()
        }
        real.update(embed)

        # learning_rate is only used by the commented-out optimizer lines
        #lin_opt = tf.train.FtrlOptimizer(learning_rate=learning_rate)
        #l_rate = learning_rate * 0.25
        #dnn_opt = tf.train.AdagradOptimizer(learning_rate=l_rate)
        estimator = tflearn.DNNLinearCombinedClassifier(
            model_dir=output_dir,
            linear_feature_columns=sparse.values(),
            dnn_feature_columns=real.values(),
            dnn_hidden_units=parse_hidden_units(hidden_units))
            #linear_optimizer=lin_opt,
            #dnn_optimizer=dnn_opt)
        estimator = tf.contrib.estimator.add_metrics(estimator, my_rmse)
        return estimator
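If it helps to see what "wide and deep" means numerically, here is a toy NumPy sketch of the forward pass, under the assumption (which matches my understanding of DNNLinearCombinedClassifier) that the linear part and the DNN part each produce a logit and the two logits are summed before the sigmoid. The helpers init_params and predict_proba, the random untrained weights and the input sizes are all hypothetical; in the real model the wide part sees the sparse and crossed columns and the deep part sees the real-valued columns plus their embeddings.

```python
import numpy as np

def parse_hidden_units(s):
    # Same helper as in model.py: '64,32' -> [64, 32]
    return [int(item) for item in s.split(',')]

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_wide, n_deep, hidden_units, seed=0):
    """Random (untrained) weights for a toy wide-and-deep binary classifier."""
    rng = np.random.default_rng(seed)
    wide = (rng.normal(size=n_wide) * 0.1, 0.0)  # linear weights and bias
    sizes = [n_deep] + parse_hidden_units(hidden_units) + [1]
    deep = [(rng.normal(size=(a, b)) * 0.1, np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]
    return wide, deep

def predict_proba(x_wide, x_deep, wide, deep):
    # Wide part: a linear model over sparse (one-hot / crossed) features
    w, b = wide
    linear_logit = x_wide @ w + b
    # Deep part: ReLU hidden layers over dense inputs, then a single output unit
    h = x_deep
    for W, bias in deep[:-1]:
        h = relu(h @ W + bias)
    W_out, b_out = deep[-1]
    dnn_logit = (h @ W_out + b_out)[:, 0]
    # The two logits are added before the sigmoid, so both parts feed one prediction
    return sigmoid(linear_logit + dnn_logit)

# Two example rows: 25 one-hot columns for the wide part, 8 dense inputs for the deep part
x_wide = np.zeros((2, 25))
x_wide[0, 3] = 1.0
x_wide[1, 7] = 1.0
x_deep = np.random.default_rng(1).normal(size=(2, 8))
wide, deep = init_params(n_wide=25, n_deep=8, hidden_units='64,32')
p = predict_proba(x_wide, x_deep, wide, deep)
print(p)  # two probabilities between 0 and 1 (weights are untrained)
```

As far as I know, the TF 1.x estimator already defaults to an FTRL optimizer for the linear part and Adagrad for the DNN part; the commented-out lines in wide_and_deep_model would only set those optimizers explicitly with custom learning rates.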

Page down to the run_experiment function at the end of the file. You need to reconfigure the experiment to call the wide and deep estimator function instead of the deep neural network (DNN) estimator function.

Replace the line estimator = dnn_model(output_dir) with:

    # estimator = linear_model(output_dir)
    # estimator = dnn_model(output_dir)
    estimator = wide_and_deep_model(output_dir, 5, '64,32', 0.01)

Save model.py.

Modify the OUTPUT_DIR environment variable to point to a new location for this model run:

export OUTPUT_DIR=gs://${BUCKET}/flights/chapter9/output2

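Create a new job name so you can identify the job, and change to the working directory (the parent of flights/trainer, because the submit command uses $(pwd)/flights/trainer):

cd ~/tensorflow

export JOBNAME=wide_and_deep_flights_$(date -u +%y%m%d_%H%M%S)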
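The job name is just a prefix plus a UTC timestamp. Here is a Python sketch of the same naming scheme, with a validity check based on my understanding (an assumption, please confirm against the AI Platform docs) that job names must start with a letter and contain only letters, digits and underscores, which would explain why the date format uses underscores rather than hyphens. make_job_name and JOB_NAME_RE are hypothetical names.

```python
import re
from datetime import datetime, timezone

# Assumption: AI Platform job names start with a letter and use only letters, digits and '_'
JOB_NAME_RE = re.compile(r"^[A-Za-z][A-Za-z0-9_]*$")

def make_job_name(prefix, now=None):
    """Python equivalent of: ${prefix}_$(date -u +%y%m%d_%H%M%S)"""
    if now is None:
        now = datetime.now(timezone.utc)
    name = f"{prefix}_{now.strftime('%y%m%d_%H%M%S')}"
    if not JOB_NAME_RE.match(name):
        raise ValueError(f"invalid job name: {name}")
    return name

print(make_job_name("wide_and_deep_flights"))
```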

Submit the AI Platform training job for the new model:

    gcloud ai-platform jobs submit training $JOBNAME \
        --module-name=trainer.task \
        --package-path=$(pwd)/flights/trainer \
        --job-dir=$OUTPUT_DIR \
        --staging-bucket=gs://$BUCKET \
        --region=$REGION \
        --scale-tier=STANDARD_1 \
        --runtime-version=1.15 \
        -- \
        --output_dir=$OUTPUT_DIR \
        --traindata $DATA_DIR/train* --evaldata $DATA_DIR/test*
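In this command, the flags before the bare `--` configure the gcloud job itself, and everything after it is forwarded unchanged to trainer.task. The sketch below is a hypothetical illustration of how a trainer could parse those forwarded arguments with argparse; it is not the actual task.py. It also accepts --job-dir on the assumption that AI Platform passes that value to the trainer too. If $DATA_DIR is a gs:// path (as I assume from part 1), Cloud Shell's bash normally finds no local match for `train*` and passes the pattern through unexpanded, so the trainer has to expand it itself.

```python
import argparse

def parse_trainer_args(argv):
    """Hypothetical sketch: how trainer/task.py might parse the arguments
    that gcloud forwards after the bare '--'."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--output_dir", required=True)
    parser.add_argument("--traindata", required=True)  # e.g. a gs://.../train* pattern
    parser.add_argument("--evaldata", required=True)
    # Assumption: AI Platform also forwards --job-dir to the trainer, so accept it
    parser.add_argument("--job-dir", default=None)
    return parser.parse_args(argv)

# Placeholder bucket and paths, for illustration only
args = parse_trainer_args([
    "--output_dir=gs://my-bucket/flights/chapter9/output2",
    "--traindata", "gs://my-bucket/data/train*",
    "--evaldata", "gs://my-bucket/data/test*",
])
print(args.output_dir, args.traindata, args.evaldata)
```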

Monitor the job while it goes through the training process.

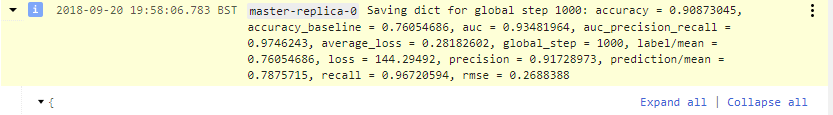

You will see a large number of events, but towards the end of the job run there will be one whose description starts with "Saving dict for global step ...". That event lists the evaluation metrics computed at that step.
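If you copy that log line out of the job logs, a small parser makes the metrics easier to compare between runs. This sketch assumes the TensorFlow Estimator format "Saving dict for global step N: key = value, key = value, ..." (keys sorted, separated by ", "), which is how I understand TF 1.x logs it; parse_saving_dict is a hypothetical helper, and the sample line below uses made-up values, not results from this job.

```python
import re

def parse_saving_dict(line):
    """Extract the global step and metrics from a TF Estimator
    'Saving dict for global step N: k1 = v1, k2 = v2, ...' log line."""
    m = re.search(r"Saving dict for global step (\d+): (.*)", line)
    if m is None:
        return None
    step = int(m.group(1))
    metrics = {}
    for item in m.group(2).split(", "):
        key, sep, value = item.partition(" = ")
        if not sep:
            continue  # skip fragments that are not "key = value"
        try:
            metrics[key.strip()] = float(value)
        except ValueError:
            metrics[key.strip()] = value.strip()  # keep non-numeric values as text
    return step, metrics

# Made-up sample line, for illustration only
sample = "Saving dict for global step 500: accuracy = 0.5, global_step = 500, loss = 0.25"
print(parse_saving_dict(sample))
```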

We will continue with the 3rd part of this topic tomorrow.

Have a nice weekend! (Don't be sad if you need to work tomorrow...)
