Morning: Python machine learning packages and data analysis

Using tensorflow.keras

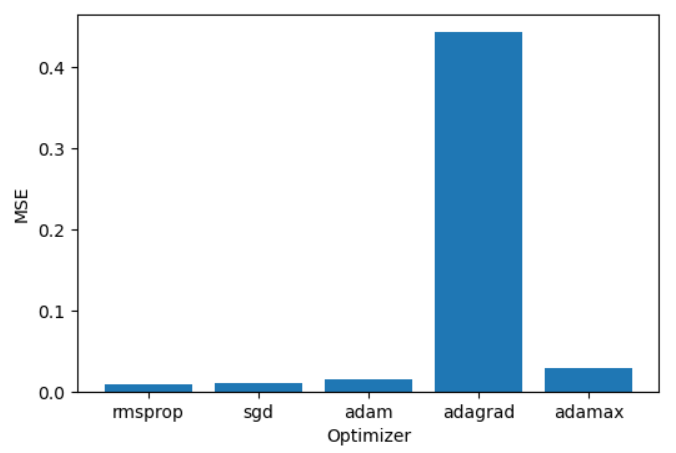

Testing how different optimizers and batch sizes affect the results

optimizer = ['rmsprop', 'sgd', 'adam', 'adagrad', 'adamax']

Batch_size = [8, 16, 32, 64]

Loss_test = []

MAE_test = []

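# Apply each optimizer in turn (batch size stays at 8 in this loop; Batch_size is not used here)

for opt in optimizer:

    # Restore the saved initial weights so every optimizer starts from the same point
    Admission_Model.set_weights(Admission_Model_weights)

    Admission_Model.compile(optimizer = opt, loss = 'mse', metrics = ['mae'])

    Admission_Model_History = Admission_Model.fit(X_train, y_train, epochs = 20, batch_size = 8, verbose = 0,
                                                  validation_data = (X_test, y_test))

    # evaluate returns [loss, mae] because metrics = ['mae'] was passed to compile
    Result = Admission_Model.evaluate(X_test, y_test)

    Loss_test.append(Result[0])

    MAE_test.append(Result[1])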

import matplotlib.pyplot as plt

plt.figure(dpi = 100)

plt.bar(range(len(optimizer)), Loss_test)

plt.xticks(range(len(optimizer)), optimizer)

plt.xlabel('Optimizer')

plt.ylabel('MSE')

plt.show()
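The loop above varies only the optimizer while `batch_size` stays at 8, so the `Batch_size` list is never used. A minimal sketch of sweeping both settings follows. The helpers `sweep` and `best_setting` and the `run_fn` callback are hypothetical names, not part of Keras; `run_fn(opt, bs)` is assumed to reset the weights, compile, fit, and return the `(loss, mae)` pair from `evaluate`. The stand-in `fake_run` only demonstrates the bookkeeping and does not produce real measurements.

```python
from itertools import product

def sweep(optimizers, batch_sizes, run_fn):
    """Call run_fn(opt, bs) for every combination and collect the results."""
    results = {}
    for opt, bs in product(optimizers, batch_sizes):
        results[(opt, bs)] = run_fn(opt, bs)
    return results

def best_setting(results):
    """Return the (optimizer, batch_size) key with the lowest loss."""
    return min(results, key=lambda k: results[k][0])

# Placeholder only: the numbers are derived from the arguments,
# they are NOT training results.
def fake_run(opt, bs):
    return (len(opt) + bs / 100.0, 0.0)

optimizers = ['rmsprop', 'sgd', 'adam', 'adagrad', 'adamax']
batch_sizes = [8, 16, 32, 64]

results = sweep(optimizers, batch_sizes, fake_run)
print(len(results))           # one entry per (optimizer, batch size) pair
print(best_setting(results))  # key with the smallest first element
```

With Keras, `run_fn` would wrap the `set_weights` / `compile` / `fit` / `evaluate` sequence from the loop, passing `batch_size = bs` to `fit`.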

Afternoon: A first look at PyTorch and deep learning

######################### step1: load data (generate) ############

import torch

import torch.nn as nn

import matplotlib.pyplot as plt

import numpy as np

from sklearn import datasets

def scatter_plot():

    plt.scatter(X[Y==0,0], X[Y==0,1]) # points with label 0: feature 1 vs. feature 2

    plt.scatter(X[Y==1,0], X[Y==1,1]) # points with label 1: feature 1 vs. feature 2

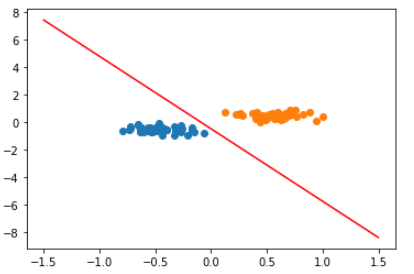

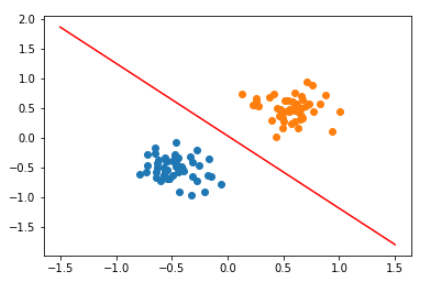

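def plot_fit(model=0):

    # model==0 means no trained model yet: draw a hand-picked line instead
    if model==0:

        w1=-7

        w2=5

        b=0

    else:

        # read the weights and the bias out of the single linear layer
        [w,b]=model.parameters()

        [w1,w2]=w.view(2)

        w1=w1.detach().item()

        w2=w2.detach().item()

        b=b[0].detach().item()

    # decision boundary: w1*x1 + w2*x2 + b = 0, solved for x2
    x1=np.array([-1.5,1.5])

    x2=(w1*x1+b)/(-1*w2)

    scatter_plot()

    plt.plot(x1,x2,'r')

    plt.show()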
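`plot_fit` draws the line where the linear part of the model is zero, w1*x1 + w2*x2 + b = 0, which is also where the sigmoid output equals 0.5. Solving for x2 gives the expression assigned to `x2` in the function. A small check in plain Python, using the default values w1=-7, w2=5, b=0; the helper name `boundary_x2` is made up for this sketch:

```python
def boundary_x2(w1, w2, b, x1):
    """Solve w1*x1 + w2*x2 + b = 0 for x2 (requires w2 != 0)."""
    return (w1 * x1 + b) / (-1 * w2)

w1, w2, b = -7, 5, 0
for x1 in (-1.5, 1.5):
    x2 = boundary_x2(w1, w2, b, x1)
    # A point on the boundary should give a residual of (numerically) zero
    residual = w1 * x1 + w2 * x2 + b
    print(x1, x2, residual)
```

The two x1 values are the same endpoints that `plot_fit` uses, so the printed pairs are the two ends of the red line.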

# generate data

n_samples=100

centers=[[-0.5,-0.5],[0.5,0.5]]

X,Y=datasets.make_blobs(n_samples=n_samples,random_state=1,centers=centers,cluster_std=0.2)

print("X=",X.shape,"Y=",Y.shape)

########################### step2: preprocessing X,Y ############

tensorX=torch.Tensor(X)

tensorY=torch.Tensor(Y.reshape(len(X),1))

print(tensorX.shape,tensorY.shape)

print(type(tensorX))

plot_fit(0)

########################### step3: build model ############

class LogRegModel(nn.Module):

    def __init__(self,numIn,numOut):

        super(LogRegModel,self).__init__()

        self.layer1=torch.nn.Linear(numIn,numOut)

    def forward(self,x):

        x=self.layer1(x)

        yPre=torch.sigmoid(x)

        return yPre

model=LogRegModel(2,1)

print(model)

plot_fit(model)

#print("########### parameters=",w,b)

criterion= nn.BCELoss()

optimizer=torch.optim.SGD(model.parameters(),lr=0.01)#advance 2:lr=0.0001
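`nn.BCELoss` with its default mean reduction averages -[y*log(p) + (1-y)*log(1-p)] over the samples, where p is the sigmoid output of the model. A plain-Python sketch of the same formula follows, without PyTorch and without the clamping of the log terms that PyTorch applies for numerical safety. `sigmoid` and `bce` are illustrative helper names.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def bce(probs, targets):
    """Mean binary cross-entropy; probs must lie strictly between 0 and 1."""
    total = 0.0
    for p, y in zip(probs, targets):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)

# An undecided model (p = 0.5 everywhere) scores ln 2, about 0.693
print(bce([sigmoid(0.0), sigmoid(0.0)], [1, 0]))

# Confident and correct predictions give a smaller loss
print(bce([0.9, 0.1], [1, 0]))
```

The ln 2 value is a useful reference point: a training loss that stays near 0.693 means the model is doing no better than guessing 0.5 for every sample.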

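############################ step4: train model ############

# Seeding here does not change the weights that were already initialized when
# model was created above; seed before LogRegModel(2,1) for a reproducible start
torch.manual_seed(1)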

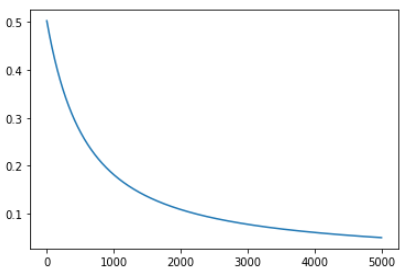

epochs=5000

losses=[]

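for e in range(epochs):

    preY=model(tensorX) # forward pass; calling the module runs forward()

    loss=criterion(preY,tensorY)

    losses.append(loss.item()) # store a plain float so the loss curve can be plotted

    optimizer.zero_grad() # clear the gradients left over from the previous step

    loss.backward() # backpropagate to compute new gradients

    optimizer.step() # update the weights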

plt.plot(range(epochs),losses)

plt.show()
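Each pass of the training loop does a forward pass, computes the loss, clears old gradients, backpropagates, and takes one SGD step. To show what `loss.backward()` and `optimizer.step()` amount to for this particular model, here is a sketch of the same full-batch update written by hand in plain Python. It relies on the standard result that, for a sigmoid output with binary cross-entropy, the gradient of the loss with respect to the pre-activation is (p - y). The four-point dataset, the learning rate of 0.1, the zero initialization, and the function names are all made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(X, Y, lr=0.1, epochs=200):
    """Full-batch gradient descent for 2-feature logistic regression."""
    w1, w2, b = 0.0, 0.0, 0.0  # assumed start; PyTorch initializes randomly
    n = len(X)
    losses = []
    for _ in range(epochs):
        # forward pass: predicted probability for every sample
        P = [sigmoid(w1 * x1 + w2 * x2 + b) for x1, x2 in X]
        # mean binary cross-entropy
        loss = -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                    for p, y in zip(P, Y)) / n
        losses.append(loss)
        # "backward": gradient of the mean loss w.r.t. each parameter
        g1 = sum((p - y) * x[0] for p, y, x in zip(P, Y, X)) / n
        g2 = sum((p - y) * x[1] for p, y, x in zip(P, Y, X)) / n
        gb = sum(p - y for p, y in zip(P, Y)) / n
        # "step": move against the gradient
        w1 -= lr * g1
        w2 -= lr * g2
        b -= lr * gb
    return (w1, w2, b), losses

# Toy data laid out like the two blobs: class 0 lower-left, class 1 upper-right
X = [(-0.5, -0.5), (-0.4, -0.6), (0.5, 0.5), (0.6, 0.4)]
Y = [0, 0, 1, 1]

params, losses = train(X, Y)
print(losses[0], losses[-1])  # the loss should fall from its starting value
print(params)
```

Because the parameters are plain floats here, there is nothing to zero out between steps. In PyTorch, gradients accumulate in `.grad` across calls to `backward()`, which is why the loop calls `optimizer.zero_grad()` each iteration.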

###### plot boundary

plot_fit(model)

# step5: evaluate model (draw)
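Step 5 is left as a heading in these notes. One common way to evaluate a binary classifier like this one, offered here as an assumption rather than what the course used, is to threshold the predicted probabilities at 0.5 and report accuracy. The helper `accuracy` is a hypothetical name, and the probabilities below are toy values, not model output.

```python
def accuracy(probs, labels, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    preds = [1 if p >= threshold else 0 for p in probs]
    correct = sum(int(p == y) for p, y in zip(preds, labels))
    return correct / len(labels)

probs = [0.1, 0.8, 0.6, 0.3]   # toy probabilities
labels = [0, 1, 0, 0]          # toy ground truth
print(accuracy(probs, labels))  # 0.75 (the third sample is misclassified)
```

With the PyTorch model, the probabilities would come from `model(tensorX)` evaluated inside a `torch.no_grad()` block, then flattened to a list before being passed in.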