Morning: Python machine learning packages and data analysis

Today is CNN practice again.

import os
import cv2

parent_directory = './AfricanWildlife'
X = []
y = []

for folders in os.listdir(parent_directory):
    filepath = os.path.join(parent_directory, folders)
    if not os.path.isdir(filepath):
        continue
    # Use the folder's position in the directory listing as the class label
    Category_index = os.listdir(parent_directory).index(folders)
    print(Category_index)
    for files in os.listdir(filepath):
        if files.endswith('jpg'):
            filename = os.path.join(filepath, files)
            image = cv2.imread(filename)
            if image is None:  # cv2.imread returns None when a file cannot be decoded
                continue
            image_resized = cv2.resize(image, (128,128))
            X.append(image_resized)
            y.append(Category_index)

import numpy as np

# Scale pixel values to the range [0, 1]
X = np.array(X).astype(np.float32)/255
y = np.array(y)
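The loop above takes each label from the folder's position in `os.listdir`. That order is not guaranteed, and stray files in the parent folder also take up positions. Below is a minimal sketch of one alternative, assuming the same one-folder-per-class layout. `build_label_map` and `demo_label_map` are hypothetical helper names, and the folder names in the demo are made up for illustration.

```python
import os
import tempfile


def build_label_map(parent_directory):
    """Map each class folder name to a stable integer index (hypothetical helper)."""
    class_names = sorted(
        name
        for name in os.listdir(parent_directory)
        if os.path.isdir(os.path.join(parent_directory, name))
    )
    return {name: index for index, name in enumerate(class_names)}


def demo_label_map():
    """Build a throwaway folder tree and return its label map."""
    with tempfile.TemporaryDirectory() as root:
        # Illustrative folder names only; the real dataset layout may differ.
        for name in ("zebra", "buffalo", "rhino", "elephant"):
            os.mkdir(os.path.join(root, name))
        # A stray file must not consume a class index.
        with open(os.path.join(root, "README.txt"), "w") as handle:
            handle.write("not a class folder")
        return build_label_map(root)
```

Sorting the folder names keeps the labels identical across runs and machines, which matters when reloading a saved model later.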

# Split into training and test sets
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=1)

# Build the network
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, Dense, MaxPooling2D, Flatten

AW_Model = Sequential()
AW_Model.add(Conv2D(64,(5,5), activation='relu', input_shape=(128,128,3)))
AW_Model.add(MaxPooling2D(2))
AW_Model.add(Conv2D(32,(5,5), activation='relu'))
AW_Model.add(MaxPooling2D(2))
AW_Model.add(Conv2D(16,(5,5), activation='relu'))
AW_Model.add(MaxPooling2D(2))
AW_Model.add(Flatten())
AW_Model.add(Dense(32, activation='relu'))
# 4 output units, one per class
AW_Model.add(Dense(4, activation='softmax'))

AW_Model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])
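To see how much the three conv/pool stages shrink a 128x128 image before `Flatten`, the sizes can be worked out by hand. This is a rough sketch that assumes the Keras defaults used above (`padding='valid'`, convolution stride 1, pooling stride equal to the pool size). The helper names are made up for this note.

```python
def conv_valid(size, kernel, stride=1):
    """Output side length of a 'valid' convolution."""
    return (size - kernel) // stride + 1


def pool_valid(size, pool):
    """Output side length of 'valid' max pooling with stride == pool size."""
    return (size - pool) // pool + 1


def flattened_features(input_size=128, kernel=5, pool=2, filters=(64, 32, 16)):
    """Return (final side length, number of values entering the first Dense layer)."""
    size = input_size
    for _ in filters:
        size = conv_valid(size, kernel)  # 128 -> 124, 62 -> 58, 29 -> 25
        size = pool_valid(size, pool)    # 124 -> 62, 58 -> 29, 25 -> 12
    return size, size * size * filters[-1]
```

Under those assumptions `flattened_features()` gives a 12x12 map with 16 channels, so 2304 values reach `Dense(32)`.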

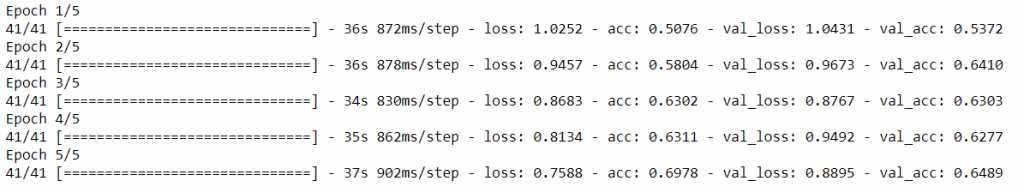

AW_Model.fit(X_train, y_train, epochs=5, batch_size=28, validation_data=(X_test, y_test))

The results are not ideal; the hyperparameters or the network architecture probably still need tuning.

Afternoon: A first look at PyTorch and deep learning

######################### step1: load data (generate) ############
import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np
from torchvision import datasets, transforms
from torchsummary import summary

t1=transforms.Resize((28,28))
t2=transforms.ToTensor()
t3=transforms.Normalize((0.5,),(0.5,))
transform=transforms.Compose([t1,t2,t3])

train_data= datasets.MNIST(root='./data',train=True, download=True, transform=transform)
validate_data=datasets.MNIST(root='./data',train=False, download=True, transform=transform)
print(len(train_data),type(train_data))
print(len(validate_data),type(validate_data))

train_loader=torch.utils.data.DataLoader(train_data, batch_size=100,shuffle=True)
validation_loader=torch.utils.data.DataLoader(validate_data,batch_size=100,shuffle=False)
print(len(train_loader),type(train_loader))
print(len(validation_loader),type(validation_loader))
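`len()` of a `DataLoader` is the number of batches, not the number of samples. A small sketch of that arithmetic, assuming the standard MNIST split of 60000 training and 10000 test images. `num_batches` is a hypothetical helper, not a PyTorch function.

```python
import math


def num_batches(num_samples, batch_size, drop_last=False):
    """Number of batches a loader yields per epoch for the given settings."""
    if drop_last:
        # An incomplete final batch is discarded.
        return num_samples // batch_size
    # An incomplete final batch is still yielded.
    return math.ceil(num_samples / batch_size)
```

With `batch_size=100` this gives 600 training batches and 100 validation batches, which is what the two `print` calls above should report.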

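def im_convert(tensor):
    image = tensor.clone().detach().numpy()
    # (C, H, W) -> (H, W, C), the layout matplotlib expects
    image = image.transpose(1, 2, 0)
    # Undo Normalize((0.5,), (0.5,)); broadcasting also expands the single channel to 3
    image = image * np.array((0.5, 0.5, 0.5)) + np.array((0.5, 0.5, 0.5))
    image = image.clip(0, 1)
    return image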
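`Normalize((0.5,), (0.5,))` maps pixel values from [0, 1] to [-1, 1], and `im_convert` reverses that before plotting. A NumPy-only sketch of the round trip, with made-up helper names, to check the arithmetic without loading MNIST:

```python
import numpy as np


def normalize(pixels, mean=0.5, std=0.5):
    """Same formula as transforms.Normalize: (x - mean) / std."""
    return (pixels - mean) / std


def denormalize(pixels, mean=0.5, std=0.5):
    """Inverse of normalize, clipped to the displayable range [0, 1]."""
    return np.clip(pixels * std + mean, 0.0, 1.0)


def to_displayable(chw_image):
    """Mirror im_convert for a NumPy array shaped (1, H, W)."""
    hwc = chw_image.transpose(1, 2, 0)   # (1, H, W) -> (H, W, 1)
    # Multiplying by a length-3 vector broadcasts the single channel to 3.
    return denormalize(hwc * np.ones(3))
```

A normalized value of 0 corresponds to mid-grey (0.5) after `denormalize`.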

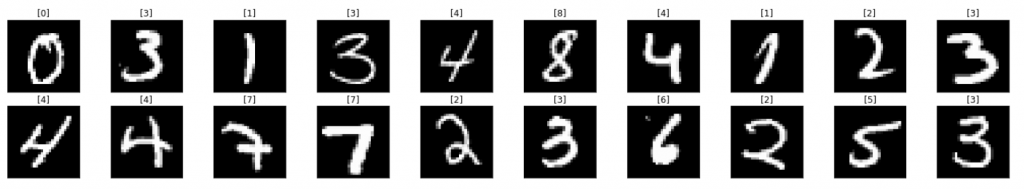

dataiter = iter(train_loader)
# Use the built-in next(); the iterator's .next() method is gone in recent PyTorch releases
images, labels = next(dataiter)

fig = plt.figure(figsize=(25, 4))
for idx in np.arange(20):
    ax = fig.add_subplot(2, 10, idx+1, xticks=[], yticks=[])
    plt.imshow(im_convert(images[idx]))
    ax.set_title(str(labels[idx].item()))

########################### step3: build model ############

class myDNN(nn.Module):
    def __init__(self,numIn,numH1,numH2,numOut):
        super(myDNN,self).__init__()
        self.layer1=torch.nn.Linear(numIn,numH1)
        self.layer2=torch.nn.Linear(numH1,numH2)
        self.layer3=torch.nn.Linear(numH2,numOut)

    def forward(self,x):
        x=F.relu(self.layer1(x))
        x=F.relu(self.layer2(x))
        # Despite the name, these are raw logits; nn.CrossEntropyLoss applies log-softmax itself
        yProb=self.layer3(x)
        return yProb

model=myDNN(784,256,64,10)

print(model)
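As a sanity check on `print(model)`, the trainable parameter count of a stack of `Linear` layers can be computed by hand: each layer has `in * out` weights plus `out` biases. A small sketch with hypothetical helper names:

```python
def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features


def mlp_params(sizes):
    """Total parameters for consecutive layer sizes, e.g. [784, 256, 64, 10]."""
    return sum(linear_params(a, b) for a, b in zip(sizes, sizes[1:]))
```

For `myDNN(784,256,64,10)` this comes to 200960 + 16448 + 650 = 218058 parameters.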

#backward path

criterion= nn.CrossEntropyLoss()

optimizer=torch.optim.Adam(model.parameters(),lr=0.0001)#advance 2:lr=0.0001
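`nn.CrossEntropyLoss` expects raw logits and integer class labels. It applies log-softmax and then averages the negative log-likelihood over the batch. The pure-Python sketch below reproduces that calculation for intuition only. The function names are made up here, and this is not how PyTorch implements it internally.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one list of logits."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(v - peak) for v in logits))
    return [v - log_sum for v in logits]


def cross_entropy(batch_logits, targets):
    """Mean negative log-likelihood of the target class over a batch."""
    losses = [-log_softmax(row)[t] for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)
```

An untrained 10-class model that outputs equal logits has a loss of ln(10), roughly 2.30, which is a useful reference point for the first epoch's training loss.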

############################ step4: training model############
epochs = 15
running_loss_history = []
running_corrects_history = []
val_running_loss_history = []
val_running_corrects_history = []

for e in range(epochs):
    running_loss = 0.0
    running_corrects = 0.0
    val_running_loss = 0.0
    val_running_corrects = 0.0

    for inputs, labels in train_loader:
        # Flatten each 1x28x28 image into a 784-element vector
        inputs = inputs.view(inputs.shape[0], -1)
        outputs = model(inputs)
        loss = criterion(outputs, labels)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        _, preds = torch.max(outputs, 1)
        running_loss += loss.item()
        running_corrects += torch.sum(preds == labels.data)
    else:
        # for/else: this block runs once after all training batches are done
        with torch.no_grad():
            for val_inputs, val_labels in validation_loader:
                val_inputs = val_inputs.view(val_inputs.shape[0], -1)
                val_outputs = model(val_inputs)
                val_loss = criterion(val_outputs, val_labels)

                _, val_preds = torch.max(val_outputs, 1)
                val_running_loss += val_loss.item()
                val_running_corrects += torch.sum(val_preds == val_labels.data)

        epoch_loss = running_loss/len(train_loader)
        # Average number of correct predictions per batch; with batch_size=100 this reads as a percentage
        epoch_acc = running_corrects.float()/ len(train_loader)
        running_loss_history.append(epoch_loss)
        running_corrects_history.append(epoch_acc)

        val_epoch_loss = val_running_loss/len(validation_loader)
        val_epoch_acc = val_running_corrects.float()/ len(validation_loader)
        val_running_loss_history.append(val_epoch_loss)
        val_running_corrects_history.append(val_epoch_acc)

        print('epoch :', (e+1))
        print('training loss: {:.4f}, acc {:.4f} '.format(epoch_loss, epoch_acc.item()))
        print('validation loss: {:.4f}, validation acc {:.4f} '.format(val_epoch_loss, val_epoch_acc.item()))
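The `acc` printed above is the number of correct predictions divided by the number of batches. It only reads as a percentage because every batch holds exactly 100 samples. A small sketch contrasting that with a per-sample accuracy; the function names and the numbers in the usage note are made up for illustration.

```python
def accuracy_per_batch_average(correct_per_batch):
    """What the loop above computes: mean correct count per batch."""
    return sum(correct_per_batch) / len(correct_per_batch)


def accuracy_per_sample(correct_per_batch, batch_sizes):
    """Fraction of all samples classified correctly, independent of batch size."""
    return sum(correct_per_batch) / sum(batch_sizes)
```

For example, with correct counts `[97, 95, 40]` and batch sizes `[100, 100, 50]`, the per-sample accuracy is 232/250 = 0.928, while the per-batch average is about 77.3 and no longer matches a percentage. Dividing by `len(train_loader.dataset)` in the training loop would give the per-sample figure.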