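Left to itself, the Kubernetes scheduler generally favors the node with the most free resources, so pods tend to get spread across the nodes.

So what do you do when your environment needs a pod to land on a specific node?

Read on~~

First, check which labels the nodes already have: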

    kubectl get nodes --show-labels
    NAME                           STATUS   ROLES                  AGE     VERSION   LABELS
    k8s-playground-control-plane   Ready    control-plane,master   4m45s   v1.21.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-playground-control-plane,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=
    k8s-playground-worker          Ready    <none>                 4m16s   v1.21.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-playground-worker,kubernetes.io/os=linux
    k8s-playground-worker2         Ready    <none>                 4m17s   v1.21.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-playground-worker2,kubernetes.io/os=linux

Give worker the label use=high-vm and worker2 the label use=backend-only:

    kubectl label nodes k8s-playground-worker use=high-vm
    node/k8s-playground-worker labeled
    kubectl label nodes k8s-playground-worker2 use=backend-only
    node/k8s-playground-worker2 labeled

Check that both labels were actually added:

    kubectl get nodes --show-labels
    k8s-playground-worker    Ready   <none>   6m10s   v1.21.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-playground-worker,kubernetes.io/os=linux,use=high-vm
    k8s-playground-worker2   Ready   <none>   6m11s   v1.21.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-playground-worker2,kubernetes.io/os=linux,use=backend-only

Now my two nodes carry different labels. I deploy the same redis as in the earlier post, without any placement settings yet.

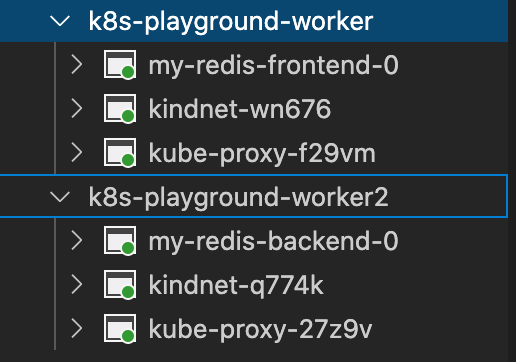

As the screenshot shows, the two pods were scheduled onto different nodes.

Next, I deploy two more redis instances onto the node labeled use=high-vm:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: my-redis-backend1
      labels:
        app.kubernetes.io/name: my-redis
        env: dev
        user: backend
    spec:
      serviceName: my-redis-backend1
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: my-redis
      template:
        metadata:
          labels:
            app.kubernetes.io/name: my-redis
            env: dev
            user: backend
        spec:
          containers:
            - name: my-redis-backend1
              image: "redis:latest"
              imagePullPolicy: IfNotPresent
              args: ["--appendonly", "yes", "--save", "600", "1"]
              ports:
                - name: redis
                  containerPort: 6379
                  protocol: TCP
              volumeMounts:
                - name: data
                  mountPath: /data
              resources: {}
          nodeSelector: # add this: only nodes labeled use=high-vm are eligible
            use: high-vm
      volumeClaimTemplates:
        - metadata:
            name: data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: my-redis-frontend1
      labels:
        app.kubernetes.io/name: my-redis
        env: dev
        user: frontend
    spec:
      serviceName: my-redis-frontend1
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: my-redis
      template:
        metadata:
          labels:
            app.kubernetes.io/name: my-redis
            env: dev
            user: frontend
        spec:
          containers:
            - name: my-redis-frontend1
              image: "redis:latest"
              imagePullPolicy: IfNotPresent
              args: ["--appendonly", "yes", "--save", "600", "1"]
              ports:
                - name: redis
                  containerPort: 6379
                  protocol: TCP
              volumeMounts:
                - name: data
                  mountPath: /data
              resources: {}
          nodeSelector: # add this: only nodes labeled use=high-vm are eligible
            use: high-vm
      volumeClaimTemplates:
        - metadata:
            name: data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi

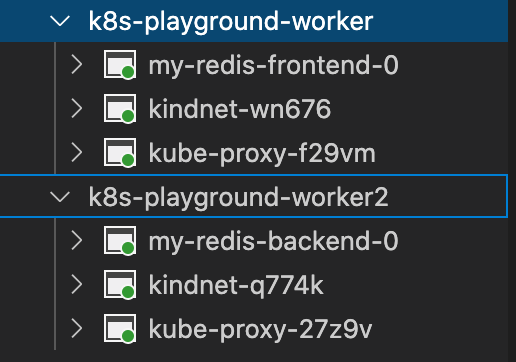

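Let's confirm that both ended up on the node labeled use=high-vm (for example with kubectl get pods -o wide, which lists the node for each pod).

The screenshot shows that the two new redis pods were both deployed onto the worker node.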
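To make the matching rule behind nodeSelector concrete, here is a minimal Python sketch. It is a simplified model, not the scheduler's real code: the function names are made up for illustration, only the custom use label from the nodes above is modelled, and taints and resource checks are ignored. It shows that a node is eligible only when it carries every key/value pair listed in nodeSelector.

```python
from typing import Dict, List

Labels = Dict[str, str]

# Custom labels as set with "kubectl label" above (built-in labels omitted).
NODES: Dict[str, Labels] = {
    "k8s-playground-control-plane": {},
    "k8s-playground-worker": {"use": "high-vm"},
    "k8s-playground-worker2": {"use": "backend-only"},
}


def matches_node_selector(node_labels: Labels, node_selector: Labels) -> bool:
    """Hypothetical helper: every nodeSelector pair must be present on the node."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(node_selector: Labels) -> List[str]:
    """Return the names of the nodes whose labels satisfy the nodeSelector."""
    return [
        name
        for name, labels in NODES.items()
        if matches_node_selector(labels, node_selector)
    ]


print(eligible_nodes({"use": "high-vm"}))  # ['k8s-playground-worker']
```

With a selector that no node satisfies, the list is empty, which corresponds to a pod that cannot be scheduled and stays Pending.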

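Affinity and anti-affinity are exactly opposite behaviors, so I'll explain using affinity only.

Node affinity is similar to the Node Selector described above, but a Node Selector can only match exact label key/value pairs; it cannot express conditions such as operators or preferences.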
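As a rough illustration of what "conditions" means here, the sketch below models how node affinity matchExpressions could be evaluated. It is an assumption-laden simplification: the helper names are invented, only the operators In, NotIn, Exists and DoesNotExist are modelled (Gt and Lt are left out), and it is not the scheduler's actual implementation. Expressions inside one term must all match, while the terms in nodeSelectorTerms are alternatives.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches_expression(labels: Labels, expr: dict) -> bool:
    """Evaluate a single matchExpressions entry against a node's labels."""
    key, operator = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not modelled in this sketch: {operator}")


def matches_node_selector_terms(labels: Labels, terms: List[dict]) -> bool:
    """Terms are ORed together; the expressions inside one term are ANDed."""
    for term in terms:
        expressions = term.get("matchExpressions", [])
        # An empty term is treated as matching nothing.
        if expressions and all(matches_expression(labels, e) for e in expressions):
            return True
    return False


worker = {"use": "high-vm"}
terms = [{"matchExpressions": [
    {"key": "use", "operator": "In", "values": ["high-vm", "backend-only"]},
]}]
print(matches_node_selector_terms(worker, terms))  # True
```

A plain nodeSelector corresponds to the special case where every condition is an exact key=value match.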

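Node affinity has two modes for setting conditions:

requiredDuringSchedulingIgnoredDuringExecution: the node must match the conditions. If no node matches, the pod is not scheduled and stays Pending.

preferredDuringSchedulingIgnoredDuringExecution: if a node matches the conditions, the pod is preferably deployed there; if no node matches, the pod can still be deployed.

I'll use the same YAML as above to illustrate: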

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: my-redis-backend1
      labels:
        app.kubernetes.io/name: my-redis
        env: dev
        user: backend
    spec:
      serviceName: my-redis-backend1
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: my-redis
      template:
        metadata:
          labels:
            app.kubernetes.io/name: my-redis
            env: dev
            user: backend
        spec:
          containers:
            - name: my-redis-backend1
              image: "redis:latest"
              imagePullPolicy: IfNotPresent
              args: ["--appendonly", "yes", "--save", "600", "1"]
              ports:
                - name: redis
                  containerPort: 6379
                  protocol: TCP
              volumeMounts:
                - name: data
                  mountPath: /data
              resources: {}
          affinity: # affinity settings
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution: # the node must match this condition
                nodeSelectorTerms:
                  - matchExpressions: # condition: use=backend-only
                      - key: use
                        operator: In
                        values:
                          - "backend-only"
      volumeClaimTemplates:
        - metadata:
            name: data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: my-redis-frontend1
      labels:
        app.kubernetes.io/name: my-redis
        env: dev
        user: frontend
    spec:
      serviceName: my-redis-frontend1
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: my-redis
      template:
        metadata:
          labels:
            app.kubernetes.io/name: my-redis
            env: dev
            user: frontend
        spec:
          containers:
            - name: my-redis-frontend1
              image: "redis:latest"
              imagePullPolicy: IfNotPresent
              args: ["--appendonly", "yes", "--save", "600", "1"]
              ports:
                - name: redis
                  containerPort: 6379
                  protocol: TCP
              volumeMounts:
                - name: data
                  mountPath: /data
              resources: {}
          affinity:
            nodeAffinity:
              preferredDuringSchedulingIgnoredDuringExecution: # prefer a matching node if one exists
                - weight: 70 # weight of 70
                  preference:
                    matchExpressions:
                      - key: use
                        operator: In
                        values:
                          - "high-vm"
                - weight: 30 # weight of 30
                  preference:
                    matchExpressions:
                      - key: use
                        operator: In
                        values:
                          - "backend-only"
      volumeClaimTemplates:
        - metadata:
            name: data
          spec:
            accessModes: ["ReadWriteOnce"]
            resources:
              requests:
                storage: 1Gi
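To show how the two weights in the preferred rule could play out, here is a small Python sketch. It assumes a simplified model: for each node, the weights of all matching preference terms are added up, and only the In operator is modelled. The helper names are hypothetical, and the real scheduler combines this sum with its other scoring criteria, so a higher number here is a preference, not a guarantee.

```python
from typing import Dict, List

Labels = Dict[str, str]

# Custom labels of the three nodes used in this article.
NODES: Dict[str, Labels] = {
    "k8s-playground-control-plane": {},
    "k8s-playground-worker": {"use": "high-vm"},
    "k8s-playground-worker2": {"use": "backend-only"},
}

# The preferred rules from my-redis-frontend1 above.
PREFERRED: List[dict] = [
    {"weight": 70, "preference": {"matchExpressions": [
        {"key": "use", "operator": "In", "values": ["high-vm"]}]}},
    {"weight": 30, "preference": {"matchExpressions": [
        {"key": "use", "operator": "In", "values": ["backend-only"]}]}},
]


def term_matches(labels: Labels, term: dict) -> bool:
    """True if every expression of the term matches (only 'In' is modelled)."""
    expressions = term.get("matchExpressions", [])
    if not expressions:
        return False
    return all(
        e["operator"] == "In" and labels.get(e["key"]) in e["values"]
        for e in expressions
    )


def preference_score(labels: Labels, preferred: List[dict]) -> int:
    """Sum the weights of all preference terms that the node satisfies."""
    return sum(p["weight"] for p in preferred if term_matches(labels, p["preference"]))


scores = {name: preference_score(labels, PREFERRED) for name, labels in NODES.items()}
print(scores)
# {'k8s-playground-control-plane': 0, 'k8s-playground-worker': 70, 'k8s-playground-worker2': 30}
```

In this model the worker node (use=high-vm) is favored, worker2 comes second, and a node with neither label still remains a valid fallback, which is the difference from the required mode.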

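Pod affinity, put simply, is a bond between pods(?): if your deployment needs one pod to always be paired up with another pod as a couple that must not be split apart, you can implement that with pod affinity.

Change the original nodeAffinity to podAffinity: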

    affinity:
      podAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
                - key: app
                  operator: In
                  values:
                    - test-pod-Affinity

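With this, the pod will go looking for its one true love(?), that is, a node that is already running a pod labeled app=test-pod-Affinity.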
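The role of topologyKey is easier to see in a sketch. The Python below is a simplified model under stated assumptions: the function name is invented, the running pod called target-pod and its placement on worker2 are hypothetical, only the In operator is modelled, and namespaces and other scheduler checks are ignored. A node is a candidate when its topologyKey label has the same value as a node that already runs a matching pod.

```python
from typing import Dict, List

Labels = Dict[str, str]

# Each node carries the built-in kubernetes.io/hostname label.
NODES: Dict[str, Labels] = {
    "k8s-playground-worker": {"kubernetes.io/hostname": "k8s-playground-worker"},
    "k8s-playground-worker2": {"kubernetes.io/hostname": "k8s-playground-worker2"},
}

# Hypothetical pods that are already running, for illustration only.
RUNNING_PODS: List[dict] = [
    {"name": "target-pod", "node": "k8s-playground-worker2",
     "labels": {"app": "test-pod-Affinity"}},
    {"name": "other-pod", "node": "k8s-playground-worker",
     "labels": {"app": "something-else"}},
]


def pod_affinity_candidates(
    nodes: Dict[str, Labels],
    running_pods: List[dict],
    topology_key: str,
    selector_key: str,
    selector_values: List[str],
) -> List[str]:
    """Nodes sharing a topology domain with at least one matching running pod."""
    domains = set()
    for pod in running_pods:
        if pod["labels"].get(selector_key) in selector_values:
            domain = nodes[pod["node"]].get(topology_key)
            if domain is not None:
                domains.add(domain)
    return [name for name, labels in nodes.items()
            if labels.get(topology_key) in domains]


print(pod_affinity_candidates(
    NODES, RUNNING_PODS, "kubernetes.io/hostname", "app", ["test-pod-Affinity"]
))  # ['k8s-playground-worker2']
```

Because the topologyKey is kubernetes.io/hostname, each node is its own domain, so the pod has to share a node with its partner. A coarser key, such as a zone label, would only require both pods to be in the same zone.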

If you need more information, check the sites below:

Official site

亲和与反亲和调度 (Affinity and anti-affinity scheduling)