Continuing from the code in the previous post.

```python
import torch

### GPU
# device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')
device = torch.device('cpu')
print(device)
```

My own computer's GPU doesn't have enough memory, so I trained on the CPU instead (it took about two days).

```python
import numpy as np

### Function to calculate accuracy
def accuracy(preds, trues):
    ### Converting preds to 0 or 1
    preds = [1 if preds[i] >= 0.5 else 0 for i in range(len(preds))]

    ### Calculating accuracy by comparing predictions with true labels
    acc = [1 if preds[i] == trues[i] else 0 for i in range(len(preds))]

    ### Summing over all correct predictions
    acc = np.sum(acc) / len(preds)

    return (acc * 100)
```

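This function applies a threshold of 0.5 to the predicted probabilities, turning each one into a binary class (0 or 1). It then compares these classes with the true labels and returns the accuracy as a percentage.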
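The same thresholding can also be done without Python loops. Below is a minimal NumPy sketch. The name `accuracy_vectorized` is hypothetical, and the inputs are assumed to be plain arrays or lists; a PyTorch tensor would first go through `.detach().cpu().numpy()`.

```python
import numpy as np

def accuracy_vectorized(preds, trues, threshold=0.5):
    """Percentage of thresholded predictions that match the true labels."""
    preds = np.asarray(preds, dtype=float).reshape(-1)  # [N, 1] -> [N]
    trues = np.asarray(trues).reshape(-1)
    pred_labels = (preds >= threshold).astype(int)       # probabilities -> 0/1
    return float(np.mean(pred_labels == trues) * 100)

# Thresholded predictions are [1, 0, 1, 0]; 3 of 4 match the labels
print(accuracy_vectorized([0.9, 0.2, 0.6, 0.4], [1, 0, 0, 0]))  # 75.0
```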

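1. Resetting gradients: `optimizer.zero_grad()` clears the gradients left over from the previous iteration. PyTorch accumulates gradients into each parameter's `.grad` on every `backward()` call. Without this reset, every update would use the sum of the gradients from all earlier batches. (Deliberately delaying the reset for a few batches is the "gradient accumulation" trick, which emulates a larger batch when GPU memory is limited.)

2. Forward: `preds = model(images)` passes the batch through the model (forward propagation).

3. Calculating loss: `_loss = criterion(preds, labels)` computes the loss.

4. Backward: `_loss.backward()` runs back propagation from the loss and computes the gradients.

5. Update: `optimizer.step()` updates the model parameters using those gradients (one gradient-descent step, performed here by Adam).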

source: https://meetonfriday.com/posts/18392404/
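The five steps can be traced in a framework-free sketch. It trains a toy logistic-regression model in NumPy, and each step is labelled with the PyTorch call it stands in for. The toy data, the learning rate and the plain gradient-descent update are assumptions for illustration; the post itself uses ResNet-50 with Adam.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce(p, y, eps=1e-7):
    # Mean binary cross-entropy; clipping avoids log(0)
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

rng = np.random.default_rng(0)
X = rng.normal(size=(64, 2))                 # toy inputs
y = (X[:, 0] + X[:, 1] > 0).astype(float)    # toy labels: 1 if x0 + x1 > 0

w = np.zeros(2)        # model parameters
b = 0.0
grad_w = np.zeros(2)   # gradient buffers, playing the role of param.grad
grad_b = 0.0
lr = 0.5
losses = []

for step in range(100):
    # 1. Reset gradients (optimizer.zero_grad())
    grad_w[:] = 0.0
    grad_b = 0.0
    # 2. Forward (model(images))
    p = sigmoid(X @ w + b)
    # 3. Loss (criterion(preds, labels))
    losses.append(bce(p, y))
    # 4. Backward (_loss.backward()): for sigmoid + mean BCE, dL/dz = (p - y) / N.
    #    "+=" mimics how PyTorch accumulates into .grad, which is why step 1 exists.
    dz = (p - y) / len(y)
    grad_w += X.T @ dz
    grad_b += dz.sum()
    # 5. Update (optimizer.step()): plain gradient descent here, Adam in the post
    w -= lr * grad_w
    b -= lr * grad_b

train_acc = float(np.mean((sigmoid(X @ w + b) >= 0.5) == y) * 100)
print(losses[0], losses[-1], train_acc)
```

The first loss is ln 2, because all-zero parameters predict 0.5 for every sample.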

What happens in each training epoch:

```python
import time

import numpy as np

### Function - One Epoch Train
def train_one_epoch(train_data_loader):
    ### Local Parameters
    epoch_loss = []
    epoch_acc = []
    start_time = time.time()

    ### Iterating over data loader
    for images, labels in train_data_loader:
        # Loading images and labels to device
        images = images.to(device)
        labels = labels.to(device)
        labels = labels.reshape((labels.shape[0], 1))  # [N, 1] - to match with preds shape

        # Resetting gradients
        optimizer.zero_grad()

        # Forward
        preds = model(images)

        # Calculating loss
        _loss = criterion(preds, labels)
        loss = _loss.item()
        epoch_loss.append(loss)

        # Calculating accuracy
        acc = accuracy(preds, labels)
        epoch_acc.append(acc)

        # Backward and update
        _loss.backward()
        optimizer.step()

    ### Overall Epoch Results
    end_time = time.time()
    total_time = end_time - start_time

    ### Acc and Loss
    epoch_loss = np.mean(epoch_loss)
    epoch_acc = np.mean(epoch_acc)

    ### Storing results to logs
    train_logs["loss"].append(epoch_loss)
    train_logs["accuracy"].append(epoch_acc)
    train_logs["time"].append(total_time)

    return epoch_loss, epoch_acc, total_time
```
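`train_one_epoch` reports the epoch loss and accuracy as `np.mean` over per-batch values, which gives every batch equal weight. A smaller final batch therefore counts as much as a full one; this happens when the dataset size isn't a multiple of the batch size and `drop_last` is off. The difference is usually small, but a sample-weighted average is easy to sketch. The `batch_sizes` list and the loss values below are hypothetical; in the real loop the list could be filled with `images.shape[0]`.

```python
def weighted_epoch_mean(batch_values, batch_sizes):
    """Average per-batch metrics weighted by the number of samples in each batch."""
    total = sum(value * size for value, size in zip(batch_values, batch_sizes))
    return total / sum(batch_sizes)

# Hypothetical per-batch losses: two full batches of 32 and a final batch of 4
batch_losses = [0.20, 0.10, 0.40]
batch_sizes = [32, 32, 4]

unweighted = sum(batch_losses) / len(batch_losses)   # what np.mean gives
weighted = weighted_epoch_mean(batch_losses, batch_sizes)
print(round(unweighted, 4), round(weighted, 4))  # 0.2333 0.1647
```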

Validation after each epoch. Whenever the validation accuracy beats the best so far, the model is saved as `resnet50_best.pth`:

```python
import time

import numpy as np
import torch

### Function - One Epoch Valid
def val_one_epoch(val_data_loader, best_val_acc):
    ### Local Parameters
    epoch_loss = []
    epoch_acc = []
    start_time = time.time()

    # Evaluation mode: BatchNorm uses its running statistics instead of batch statistics
    model.eval()

    ### Iterating over data loader (no gradients needed, which also saves memory)
    with torch.no_grad():
        for images, labels in val_data_loader:
            # Loading images and labels to device
            images = images.to(device)
            labels = labels.to(device)
            labels = labels.reshape((labels.shape[0], 1))  # [N, 1] - to match with preds shape

            # Forward
            preds = model(images)

            # Calculating loss
            _loss = criterion(preds, labels)
            loss = _loss.item()
            epoch_loss.append(loss)

            # Calculating accuracy
            acc = accuracy(preds, labels)
            epoch_acc.append(acc)

    # Switch back to training mode for the next training epoch
    model.train()

    ### Overall Epoch Results
    end_time = time.time()
    total_time = end_time - start_time

    ### Acc and Loss
    epoch_loss = np.mean(epoch_loss)
    epoch_acc = np.mean(epoch_acc)

    ### Storing results to logs
    val_logs["loss"].append(epoch_loss)
    val_logs["accuracy"].append(epoch_acc)
    val_logs["time"].append(total_time)

    ### Saving best model (highest validation accuracy so far)
    if epoch_acc > best_val_acc:
        best_val_acc = epoch_acc
        torch.save(model.state_dict(), "resnet50_best.pth")

    return epoch_loss, epoch_acc, total_time, best_val_acc
```

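On Kaggle (Python 3.7) I loaded the pretrained ResNet-50 with the older `pretrained` argument:

```python
from torchvision.models import resnet50

model = resnet50(pretrained = True)
```

Locally I used Python 3.9.7. The deciding factor is really the torchvision version, not the Python version: from torchvision 0.13 onward, `pretrained` is deprecated in favor of the `weights` argument.

```python
from torchvision.models import resnet50, ResNet50_Weights

model = resnet50(weights=ResNet50_Weights.DEFAULT)
```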

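Next, replace the classifier head with a `Sequential` block and set up the optimizer, learning-rate scheduler, loss function and logs for training: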

```python
import torch
import torch.nn as nn

# Modifying Head - classifier: 2048 ResNet-50 features -> 1 probability
model.fc = nn.Sequential(
    nn.Linear(2048, 1, bias = True),
    nn.Sigmoid()
)

# Optimizer
optimizer = torch.optim.Adam(model.parameters(), lr = 0.0001)

# Learning Rate Scheduler
# Decays the learning rate of each parameter group by gamma every step_size epochs
lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size = 5, gamma = 0.5)
```
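StepLR multiplies the learning rate by `gamma` every `step_size` epochs. For this configuration that gives lr = 0.0001 × 0.5^⌊epoch / 5⌋ for a 0-based epoch index, provided `lr_scheduler.step()` is called once at the end of each epoch. Without that call the rate stays at 0.0001. Below is a small sketch of the closed form; `step_lr` is a hypothetical helper, not part of PyTorch.

```python
def step_lr(base_lr, epoch, step_size=5, gamma=0.5):
    """Learning rate used during a 0-based `epoch` under StepLR,
    assuming scheduler.step() is called once after every epoch."""
    return base_lr * gamma ** (epoch // step_size)

for epoch in range(20):
    print("Epoch {}: lr = {:.2e}".format(epoch + 1, step_lr(1e-4, epoch)))
```

With 20 epochs this yields:

- 1e-4 for epochs 1-5
- 5e-5 for epochs 6-10
- 2.5e-5 for epochs 11-15
- 1.25e-5 for epochs 16-20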

```python
# Loss Function
criterion = nn.BCELoss()  # binary cross-entropy loss

# Logs - Helpful for plotting after training finishes
train_logs = {"loss" : [], "accuracy" : [], "time" : []}
val_logs = {"loss" : [], "accuracy" : [], "time" : []}

# Loading model to device
model.to(device)

# No of epochs
epochs = 20
```
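`nn.BCELoss` expects probabilities, which is why the new head ends with `Sigmoid`. With its default `reduction='mean'`, it averages `-[y*log(p) + (1-y)*log(1-p)]` over the batch. Below is a plain-Python sketch of that formula. The `eps` clipping is an assumption made for the sketch; PyTorch instead clamps the log outputs at -100. As an aside, the PyTorch docs describe `nn.BCEWithLogitsLoss` on raw logits (without the final `Sigmoid`) as the more numerically stable combination.

```python
import math

def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over a batch of probabilities in (0, 1)."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)

print(bce_loss([0.9], [1]))            # -ln(0.9) ≈ 0.1054
print(bce_loss([0.9, 0.2], [1, 0]))    # mean of -ln(0.9) and -ln(0.8)
```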

### Training and Validation xD

best_val_acc = 0

for epoch in range(epochs):

###Training

loss, acc, _time = train_one_epoch(train_data_loader)

#Print Epoch Details

print("\nTraining")

print("Epoch {}".format(epoch+1))

print("Loss : {}".format(round(loss, 4)))

print("Acc : {}".format(round(acc, 4)))

print("Time : {}".format(round(_time, 4)))

###Validation

loss, acc, _time, best_val_acc = val_one_epoch(val_data_loader, best_val_acc)

#Print Epoch Details

print("\nValidating")

print("Epoch {}".format(epoch+1))

print("Loss : {}".format(round(loss, 4)))

print("Acc : {}".format(round(acc, 4)))

print("Time : {}".format(round(_time, 4)))

"

Training

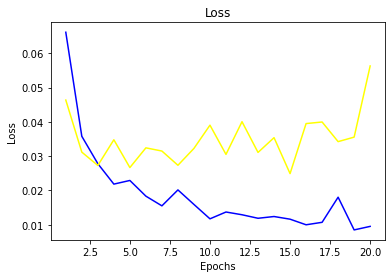

Epoch 1

Loss : 0.0662

Acc : 97.8296

Time : 5253.6753

Validating

Epoch 1

Loss : 0.0464

Acc : 98.3216

Time : 736.903

Training

Epoch 2

Loss : 0.0358

Acc : 98.7948

Time : 4882.465

Validating

Epoch 2

Loss : 0.0312

Acc : 98.7852

Time : 638.738

Training

Epoch 3

Loss : 0.0278

Acc : 98.9814

Time : 4885.1015

Validating

Epoch 3

Loss : 0.0273

Acc : 98.913

Time : 629.354

Training

Epoch 4

Loss : 0.0218

Acc : 99.2854

Time : 4925.169

Validating

Epoch 4

Loss : 0.0348

Acc : 98.6733

Time : 632.915

Training

Epoch 5

Loss : 0.0229

Acc : 99.1841

Time : 5086.6376

Validating

Epoch 5

Loss : 0.0267

Acc : 98.8811

Time : 591.848

Training

Epoch 6

Loss : 0.0183

Acc : 99.3494

Time : 4841.4826

Validating

Epoch 6

Loss : 0.0324

Acc : 98.8811

Time : 628.952

Training

Epoch 7

Loss : 0.0155

Acc : 99.4294

Time : 4824.0096

Validating

Epoch 7

Loss : 0.0315

Acc : 98.7532

Time : 615.423

Training

Epoch 8

Loss : 0.0202

Acc : 99.3113

Time : 4763.0

Validating

Epoch 8

Loss : 0.0273

Acc : 98.8811

Time : 613.794

Training

Epoch 9

Loss : 0.0159

Acc : 99.4294

Time : 4748.983

Validating

Epoch 9

Loss : 0.0323

Acc : 98.913

Time : 615.81

Training

Epoch 10

Loss : 0.0117

Acc : 99.6054

Time : 4762.96

Validating

Epoch 10

Loss : 0.039

Acc : 98.4974

Time : 615.812

Training

Epoch 11

Loss : 0.0137

Acc : 99.5787

Time : 4756.948

Validating

Epoch 11

Loss : 0.0305

Acc : 99.0249

Time : 613.552

Training

Epoch 12

Loss : 0.0129

Acc : 99.5627

Time : 4765.72

Validating

Epoch 12

Loss : 0.0401

Acc : 98.5934

Time : 615.958

Training

Epoch 13

Loss : 0.0119

Acc : 99.6054

Time : 4779.163

Validating

Epoch 13

Loss : 0.0311

Acc : 98.913

Time : 613.923

Training

Epoch 14

Loss : 0.0124

Acc : 99.5627

Time : 6105.9822

Validating

Epoch 14

Loss : 0.0354

Acc : 98.8331

Time : 912.4271

Training

Epoch 15

Loss : 0.0116

Acc : 99.6054

Time : 6537.8869

Validating

Epoch 15

Loss : 0.0249

Acc : 99.009

Time : 918.9861

Training

Epoch 16

Loss : 0.01

Acc : 99.728

Time : 6638.2821

Validating

Epoch 16

Loss : 0.0395

Acc : 98.5934

Time : 824.718

Training

Epoch 17

Loss : 0.0107

Acc : 99.632

Time : 6624.546

Validating

Epoch 17

Loss : 0.04

Acc : 98.6893

Time : 934.2406

Training

Epoch 18

Loss : 0.018

Acc : 99.5094

Time : 5312.4133

Validating

Epoch 18

Loss : 0.0342

Acc : 98.7276

Time : 621.479

Training

Epoch 19

Loss : 0.0085

Acc : 99.7014

Time : 5512.1889

Validating

Epoch 19

Loss : 0.0356

Acc : 98.7852

Time : 647.4116

Training

Epoch 20

Loss : 0.0095

Acc : 99.6907

Time : 6281.2117

Validating

Epoch 20

Loss : 0.0563

Acc : 98.7372

Time : 790.0679

"

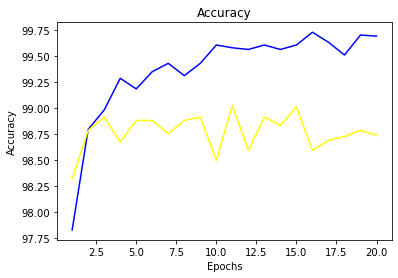
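`val_one_epoch` only overwrites `resnet50_best.pth` when validation accuracy strictly improves, so the saved checkpoint comes from a single epoch. The sketch below reads the logged numbers, copied by hand from the training output above. It finds which epoch the checkpoint came from, compares that with the epoch of lowest validation loss, and totals the time measured inside the two epoch functions.

```python
# Per-epoch results copied from the training output above (epochs 1-20)
val_acc = [98.3216, 98.7852, 98.913, 98.6733, 98.8811, 98.8811, 98.7532, 98.8811, 98.913, 98.4974,
           99.0249, 98.5934, 98.913, 98.8331, 99.009, 98.5934, 98.6893, 98.7276, 98.7852, 98.7372]
val_loss = [0.0464, 0.0312, 0.0273, 0.0348, 0.0267, 0.0324, 0.0315, 0.0273, 0.0323, 0.039,
            0.0305, 0.0401, 0.0311, 0.0354, 0.0249, 0.0395, 0.04, 0.0342, 0.0356, 0.0563]
train_time = [5253.6753, 4882.465, 4885.1015, 4925.169, 5086.6376, 4841.4826, 4824.0096, 4763.0,
              4748.983, 4762.96, 4756.948, 4765.72, 4779.163, 6105.9822, 6537.8869, 6638.2821,
              6624.546, 5312.4133, 5512.1889, 6281.2117]
val_time = [736.903, 638.738, 629.354, 632.915, 591.848, 628.952, 615.423, 613.794, 615.81, 615.812,
            613.552, 615.958, 613.923, 912.4271, 918.9861, 824.718, 934.2406, 621.479, 647.4116, 790.0679]

# Saving happens only on a strict improvement, so the checkpoint is from the first best epoch
best_acc_epoch = max(range(len(val_acc)), key=lambda i: val_acc[i]) + 1
lowest_loss_epoch = min(range(len(val_loss)), key=lambda i: val_loss[i]) + 1
total_hours = (sum(train_time) + sum(val_time)) / 3600

print("Checkpoint epoch (best val acc):", best_acc_epoch)   # 11
print("Lowest val loss epoch:", lowest_loss_epoch)           # 15
print("Measured train + val time: {:.1f} h".format(total_hours))  # 33.4 h
```

Training loss keeps falling to below 0.01, while validation loss climbs to 0.0563 by epoch 20. This suggests the later epochs mostly fit the training set more tightly.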

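```python
import matplotlib.pyplot as plt
import numpy as np

### Plotting Results
epoch_axis = np.arange(1, len(train_logs["loss"]) + 1)  # 1..20, follows the number of logged epochs

# Loss
plt.title("Loss")
plt.plot(epoch_axis, train_logs["loss"], color = 'blue', label = "Train")
plt.plot(epoch_axis, val_logs["loss"], color = 'yellow', label = "Validation")
plt.xlabel("Epochs")
plt.ylabel("Loss")
plt.legend()
plt.show()

# Accuracy
plt.title("Accuracy")
plt.plot(epoch_axis, train_logs["accuracy"], color = 'blue', label = "Train")
plt.plot(epoch_axis, val_logs["accuracy"], color = 'yellow', label = "Validation")
plt.xlabel("Epochs")
plt.ylabel("Accuracy (%)")
plt.legend()
plt.show()
```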