Implementing a Convolutional Neural Network Model in PyTorch

- MNIST handwritten digit data

import torch
import torch.nn as nn
import torch.utils.data as Data
import torchvision
import matplotlib.pyplot as plt

torch.manual_seed(1)   # fix the random seed so runs are reproducible

EPOCH = 10             # number of passes over the training set
BATCH_SIZE = 50
LR = 0.001             # learning rate
DOWNLOAD_MNIST = True  # can be set to False once ./mnist/ already holds the data

train_data = torchvision.datasets.MNIST(
    root='./mnist/',
    train=True,
    transform=torchvision.transforms.ToTensor(),  # PIL image -> float tensor in [0, 1]
    download=DOWNLOAD_MNIST,
)

# Each mini-batch of images has shape (50, 1, 28, 28)
train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

test_data = torchvision.datasets.MNIST(root='./mnist/', train=False)

# Evaluate on the first 1000 test samples only.
# .data / .targets replace the deprecated .test_data / .test_labels attributes.
test_x = torch.unsqueeze(test_data.data, dim=1).type(torch.FloatTensor)[:1000] / 255.
test_y = test_data.targets[:1000]
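The test-set preparation above (add a channel dimension, convert to float, divide by 255) can be tried without downloading anything. The following is a minimal sketch, assuming a small random `uint8` tensor named `fake_images` as a hypothetical stand-in for the raw MNIST pixel array; it is not part of the original code.

```python
import torch

# Hypothetical stand-in for raw MNIST pixels: 4 grayscale images, uint8 in [0, 255]
fake_images = torch.randint(0, 256, (4, 28, 28), dtype=torch.uint8)

# Add a channel dimension, convert to float, and rescale to [0, 1]
x = torch.unsqueeze(fake_images, dim=1).type(torch.FloatTensor) / 255.

print(x.shape)  # torch.Size([4, 1, 28, 28])
print(x.dtype)  # torch.float32
```

The resulting layout, (batch, channel, height, width), is what `nn.Conv2d` expects as input.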

- CNN model

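class CNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Sequential(       # input: (1, 28, 28)
            nn.Conv2d(
                in_channels=1,
                out_channels=16,
                kernel_size=5,
                stride=1,
                padding=2,                # (kernel_size - 1) / 2 keeps the 28x28 size
            ),                            # -> (16, 28, 28)
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),  # -> (16, 14, 14)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, 5, 1, 2),   # -> (32, 14, 14)
            nn.ReLU(),
            nn.MaxPool2d(2),              # -> (32, 7, 7)
        )
        self.out = nn.Linear(32 * 7 * 7, 10)  # 10 digit classes

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)         # flatten to (batch_size, 32 * 7 * 7)
        output = self.out(x)
        return output, x                  # logits, plus flattened features for visualization


cnn = CNN()
print(cnn)  # print the network architecture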
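The input size `32 * 7 * 7` of the final linear layer follows from the standard output-size formula for convolution and pooling layers, `(size + 2 * padding - kernel_size) // stride + 1`. A small sketch of that arithmetic is shown here; the helper name `conv_out` is hypothetical and chosen only for illustration.

```python
def conv_out(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel_size) // stride + 1


size = 28                                                  # MNIST images are 28x28
size = conv_out(size, kernel_size=5, stride=1, padding=2)  # conv1 convolution -> 28
size = conv_out(size, kernel_size=2, stride=2)             # conv1 max pooling -> 14
size = conv_out(size, kernel_size=5, stride=1, padding=2)  # conv2 convolution -> 14
size = conv_out(size, kernel_size=2, stride=2)             # conv2 max pooling -> 7

flat_features = 32 * size * size                           # 32 output channels
print(size, flat_features)  # 7 1568
```

The pooling calls use `stride=2` because `nn.MaxPool2d` defaults its stride to the kernel size.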

- Training

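optimizer = torch.optim.Adam(cnn.parameters(), lr=LR)  # optimize all CNN parameters
loss_func = nn.CrossEntropyLoss()                      # takes raw logits and integer labels

from matplotlib import cm  # colormaps used by plot_with_labels
# scikit-learn is optional; t-SNE is only used to visualize the last layer
try:
    from sklearn.manifold import TSNE
    HAS_SK = True
except ImportError:
    HAS_SK = False
    print('Please install sklearn for layer visualization')

def plot_with_labels(lowDWeights, labels):
    plt.cla()
    X, Y = lowDWeights[:, 0], lowDWeights[:, 1]
    for x, y, s in zip(X, Y, labels):
        c = cm.rainbow(int(255 * s / 9))  # one color per digit 0-9
        plt.text(x, y, s, backgroundcolor=c, fontsize=9)
    plt.xlim(X.min(), X.max())
    plt.ylim(Y.min(), Y.max())
    plt.title('Visualize last layer')
    plt.show()
    plt.pause(0.01)

plt.ion()  # interactive mode, so plt.show() does not block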

for epoch in range(EPOCH):
    for step, (b_x, b_y) in enumerate(train_loader):
        output = cnn(b_x)[0]           # forward pass; keep only the logits
        loss = loss_func(output, b_y)
        optimizer.zero_grad()          # clear gradients from the previous step
        loss.backward()                # backpropagate
        optimizer.step()               # update the parameters

        if step % 50 == 0:
            with torch.no_grad():      # no gradients are needed for evaluation
                test_output, last_layer = cnn(test_x)
            pred_y = torch.max(test_output, 1)[1]  # index of the largest logit
            accuracy = (pred_y == test_y).float().mean().item()
            print('Epoch: ', epoch, '| train loss: %.4f' % loss.item(), '| test accuracy: %.2f' % accuracy)

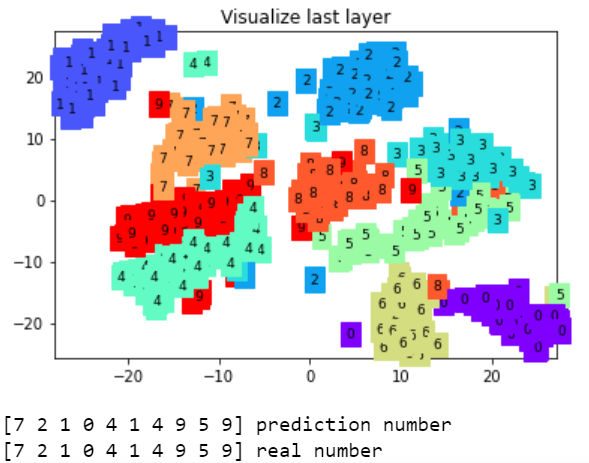

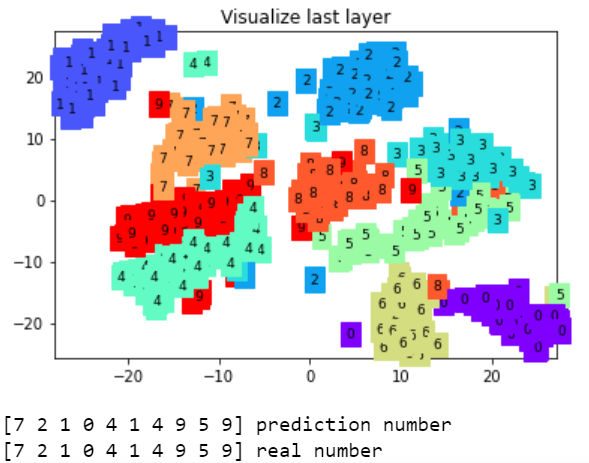
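The training loop feeds the network's raw outputs directly into `nn.CrossEntropyLoss`, and the model has no softmax layer. That works because this loss applies log-softmax internally. A minimal sketch that checks the equivalence, using made-up `logits` and `targets` (both hypothetical, not taken from the training run):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(5, 10)              # hypothetical raw outputs: 5 samples, 10 classes
targets = torch.tensor([3, 1, 4, 1, 5])  # hypothetical integer class labels

loss_a = nn.CrossEntropyLoss()(logits, targets)
loss_b = F.nll_loss(F.log_softmax(logits, dim=1), targets)

print(torch.allclose(loss_a, loss_b))  # True
```

Adding an explicit softmax before this loss would therefore apply the normalization twice.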

- Visualizing the training result

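if HAS_SK:
    # Project the flattened features from the last evaluation onto 2-D with t-SNE.
    # Note: newer scikit-learn releases rename the n_iter argument to max_iter.
    tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)
    plot_only = 500  # plot only the first 500 test samples
    low_dim_embs = tsne.fit_transform(last_layer.detach().numpy()[:plot_only, :])
    labels = test_y.numpy()[:plot_only]
    plot_with_labels(low_dim_embs, labels)

plt.ioff()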

- Take ten samples to check whether the predictions are correct

test_output, _ = cnn(test_x[:10])
pred_y = torch.max(test_output, 1)[1].numpy()  # predicted digit = index of the largest logit
print(pred_y, 'prediction number')
print(test_y[:10].numpy(), 'real number')
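The expression `torch.max(test_output, 1)[1]` used above returns, for each row, the index of the largest logit, which is the predicted class. A small sketch with hand-written values (the `logits` and `labels` tensors are hypothetical) shows that it matches `torch.argmax` and how an accuracy follows from it:

```python
import torch

# Hypothetical logits for 3 samples and 4 classes
logits = torch.tensor([[0.1, 2.0, -1.0, 0.3],
                       [1.5, 0.2, 0.1, 0.0],
                       [0.0, 0.1, 0.2, 3.0]])
labels = torch.tensor([1, 0, 2])  # hypothetical true classes

pred = torch.max(logits, 1)[1]                         # indices of the row-wise maxima
same = torch.equal(pred, torch.argmax(logits, dim=1))  # both give the same indices
accuracy = (pred == labels).float().mean().item()      # fraction of correct predictions

print(pred.tolist(), same, round(accuracy, 2))  # [1, 0, 3] True 0.67
```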

References: