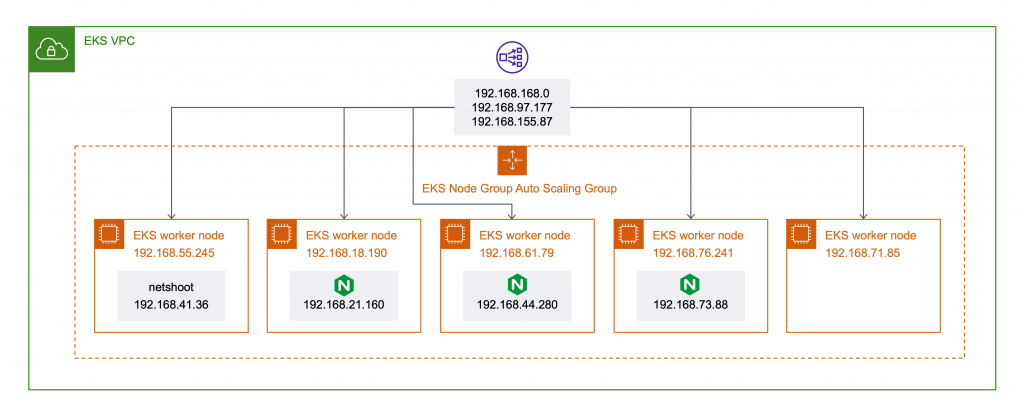

Before we begin, we need to understand how an NLB registers EKS worker nodes into a Target Group in an EKS environment. First, inspect the Kubernetes Service with kubectl.

$ kubectl -n nginx-demo describe svc

Name: service-nginx-demo

Namespace: nginx-demo

Labels: <none>

Annotations: service.beta.kubernetes.io/aws-load-balancer-internal: true

service.beta.kubernetes.io/aws-load-balancer-type: nlb

Selector: app=nginx-demo

Type: LoadBalancer

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.100.135.233

IPs: 10.100.135.233

LoadBalancer Ingress: a0ac38093315243b1a67d275a4379ca6-c09bd862a6e56fc5.elb.eu-west-1.amazonaws.com

Port: <unset> 80/TCP

TargetPort: 80/TCP

NodePort: <unset> 30161/TCP

Endpoints: 192.168.21.160:80,192.168.44.248:80,192.168.73.88:80

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

The output shows that the LoadBalancer Ingress endpoint is the NLB's FQDN and that the Service is exposed through NodePort 30161. Next, look at the Target Group associated with this NLB.

$ aws elbv2 describe-target-groups --load-balancer-arn arn:aws:elasticloadbalancing:eu-west-1:111111111111:loadbalancer/net/a0ac38093315243b1a67d275a4379ca6/c09bd862a6e56fc5

{

"TargetGroups": [

{

"TargetGroupArn": "arn:aws:elasticloadbalancing:eu-west-1:111111111111:targetgroup/k8s-nginxdem-servicen-f2b581224e/2c5a6b0de24b622d",

"TargetGroupName": "k8s-nginxdem-servicen-f2b581224e",

"Protocol": "TCP",

"Port": 30161,

"VpcId": "vpc-0b150640c72b722db",

"HealthCheckProtocol": "TCP",

"HealthCheckPort": "traffic-port",

"HealthCheckEnabled": true,

"HealthCheckIntervalSeconds": 30,

"HealthCheckTimeoutSeconds": 10,

"HealthyThresholdCount": 3,

"UnhealthyThresholdCount": 3,

"LoadBalancerArns": [

"arn:aws:elasticloadbalancing:eu-west-1:111111111111:loadbalancer/net/a0ac38093315243b1a67d275a4379ca6/c09bd862a6e56fc5"

],

"TargetType": "instance",

"IpAddressType": "ipv4"

}

]

}

$ aws elbv2 describe-target-group-attributes --target-group-arn arn:aws:elasticloadbalancing:eu-west-1:111111111111:targetgroup/k8s-nginxdem-servicen-f2b581224e/2c5a6b0de24b622d

{

"Attributes": [

{

"Key": "proxy_protocol_v2.enabled",

"Value": "false"

},

{

"Key": "preserve_client_ip.enabled",

"Value": "true"

},

{

"Key": "stickiness.enabled",

"Value": "false"

},

{

"Key": "deregistration_delay.timeout_seconds",

"Value": "300"

},

{

"Key": "stickiness.type",

"Value": "source_ip"

},

{

"Key": "deregistration_delay.connection_termination.enabled",

"Value": "false"

}

]

}

$ aws elbv2 describe-target-health --target-group-arn arn:aws:elasticloadbalancing:eu-west-1:111111111111:targetgroup/k8s-nginxdem-servicen-f2b581224e/2c5a6b0de24b622d --query 'TargetHealthDescriptions[].Target'

[

{

"Id": "i-05cb2efcde4228b34",

"Port": 30161

},

{

"Id": "i-03846c730abe852f4",

"Port": 30161

},

{

"Id": "i-073bba202e9f0119f",

"Port": 30161

},

{

"Id": "i-06bdd562d4fc42c2b",

"Port": 30161

},

{

"Id": "i-020fc35362114539d",

"Port": 30161

}

]

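Putting these outputs together: a native Kubernetes NodePort Service [1] exposes the Service port on every worker node. The AWS ELB integration builds on this by using an instance-type target group and registering all EKS worker nodes in the same Target Group, with NodePort 30161 serving as the externally facing port.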
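
As a rough illustration of the registration described above, the following Python sketch pairs each worker node with the Service's NodePort, which is the shape of the describe-target-health output. The helper name `build_instance_targets` is hypothetical and not part of any AWS or Kubernetes API; the instance IDs and port are copied from the output above.

```python
from typing import Dict, List, Union


def build_instance_targets(
    instance_ids: List[str], node_port: int
) -> List[Dict[str, Union[str, int]]]:
    """Pair every worker node with the same NodePort (hypothetical helper)."""
    return [{"Id": instance_id, "Port": node_port} for instance_id in instance_ids]


# Instance IDs and NodePort taken from the command output above.
WORKER_NODES = [
    "i-05cb2efcde4228b34",
    "i-03846c730abe852f4",
    "i-073bba202e9f0119f",
    "i-06bdd562d4fc42c2b",
    "i-020fc35362114539d",
]

targets = build_instance_targets(WORKER_NODES, 30161)
for target in targets:
    print(target["Id"], target["Port"])
```

Note that every node is a target, including nodes that run no Nginx Pod and the node that runs the client Pod.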

In addition, an instance-type target group has the client IP preservation [2] target group attribute enabled by default, so the NLB keeps the original source IP when it forwards traffic to the backend.

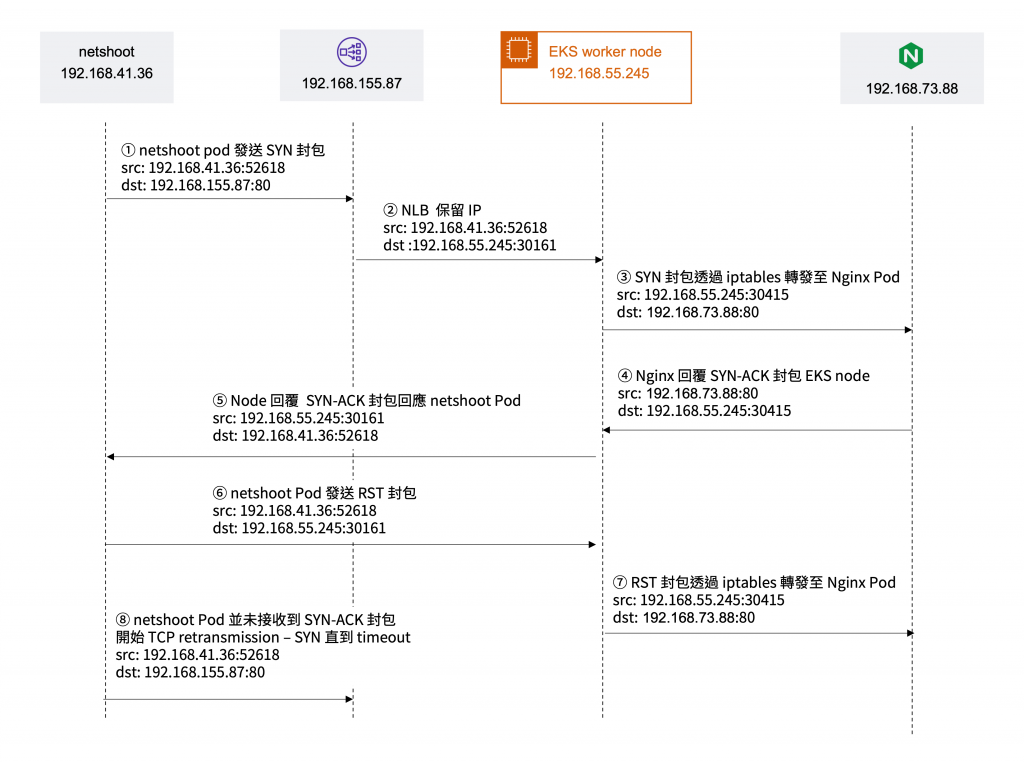

To see what actually happens when the problem occurs, we log in to the worker node running the netshoot Pod and capture packets:

[ec2-user@ip-192-168-55-245 ~]$ sudo tcpdump -i any -w packets.pcap

At the same time, use kubectl to access the NLB endpoint from the netshoot Pod until the timeout is reproduced.

$ kubectl exec -it netshoot -- curl a0ac38093315243b1a67d275a4379ca6-c09bd862a6e56fc5.elb.eu-west-1.amazonaws.com

## Intermittent timeout

The netshoot Pod sends a SYN packet to the NLB to open a connection but never receives a SYN-ACK, and then retransmits the SYN several times (TCP Retransmission).

$ tshark -Y "tcp.stream eq 28" -r ./21-packets.pcap

791 5.163175 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056305444 TSecr=0 WS=128

792 5.163189 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056305444 TSecr=0 WS=128

865 6.179489 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056306460 TSecr=0 WS=128

866 6.179521 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056306460 TSecr=0 WS=128

969 8.195495 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056308476 TSecr=0 WS=128

970 8.195526 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056308476 TSecr=0 WS=128

1317 12.355496 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056312636 TSecr=0 WS=128

1318 12.355531 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056312636 TSecr=0 WS=128

1513 20.547509 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056320828 TSecr=0 WS=128

1514 20.547539 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056320828 TSecr=0 WS=128

2011 36.675486 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056336956 TSecr=0 WS=128

2012 36.675513 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056336956 TSecr=0 WS=128

2942 69.699492 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056369980 TSecr=0 WS=128

2943 69.699517 192.168.41.36 → 192.168.155.87 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8961 SACK_PERM TSval=1056369980 TSecr=0 WS=128
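
The spacing of the retransmissions above can be checked with a few lines of Python. This is only a sketch: the timestamps are copied from the first frame of each SYN pair in the tshark output, and the helper name `retransmission_gaps` is made up for illustration.

```python
from typing import List

# Capture timestamps (seconds) of the initial SYN and each retransmission,
# taken from the first frame of every pair in the tshark output above.
SYN_TIMES = [5.163175, 6.179489, 8.195495, 12.355496, 20.547509, 36.675486, 69.699492]


def retransmission_gaps(times: List[float]) -> List[float]:
    """Return the delay between each consecutive pair of SYN packets."""
    return [round(later - earlier, 3) for earlier, later in zip(times, times[1:])]


gaps = retransmission_gaps(SYN_TIMES)
print(gaps)
```

The gaps come out at roughly 1, 2, 4, 8, 16 and 33 seconds, which is consistent with the exponential backoff TCP stacks typically apply to unanswered SYN packets. From the client's point of view, no valid reply ever arrives.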

The worker node sends a SYN packet to the Nginx Pod to open a connection, and the Nginx Pod replies with a SYN-ACK, but the node then sends an RST that tears the connection down.

$ tshark -Y "tcp.stream eq 30" -r ./21-packets.pcap

794 5.163637 192.168.55.245 → 192.168.73.88 TCP 76 3857268289 30415 → 80 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056305444 TSecr=0 WS=128

795 5.164058 192.168.73.88 → 192.168.55.245 TCP 76 1733300389 80 → 30415 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=3379462264 TSecr=1056305444 WS=128

798 5.164076 192.168.55.245 → 192.168.73.88 TCP 56 3857268290 30415 → 80 [RST] Seq=1 Win=0 Len=0

The connection between the Pod and port 30161 on the node:

$ tshark -Y "tcp.stream eq 29" -r ./21-packets.pcap

793 5.163613 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056305444 TSecr=0 WS=128

796 5.164064 192.168.55.245 → 192.168.41.36 TCP 76 1733300389 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=3379462264 TSecr=1056305444 WS=128

797 5.164072 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

867 6.179681 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056306460 TSecr=0 WS=128

870 6.179880 192.168.55.245 → 192.168.41.36 TCP 76 2085595241 [TCP Previous segment not captured] [TCP Port numbers reused] 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=1771920452 TSecr=1056306460 WS=128

871 6.179892 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

971 8.195685 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056308476 TSecr=0 WS=128

974 8.196124 192.168.55.245 → 192.168.41.36 TCP 76 3152640874 [TCP Previous segment not captured] [TCP Port numbers reused] 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=690311064 TSecr=1056308476 WS=128

975 8.196135 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

1319 12.355696 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056312636 TSecr=0 WS=128

1322 12.356171 192.168.55.245 → 192.168.41.36 TCP 76 444079951 [TCP Previous segment not captured] [TCP Port numbers reused] 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=690315224 TSecr=1056312636 WS=128

1323 12.356184 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

1515 20.547698 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056320828 TSecr=0 WS=128

1518 20.548168 192.168.55.245 → 192.168.41.36 TCP 76 1956884196 [TCP Previous segment not captured] [TCP Port numbers reused] 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=690323416 TSecr=1056320828 WS=128

1519 20.548187 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

2013 36.675694 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056336956 TSecr=0 WS=128

2016 36.675910 192.168.55.245 → 192.168.41.36 TCP 76 825667838 [TCP Retransmission] [TCP Port numbers reused] 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=1771950948 TSecr=1056336956 WS=128

2017 36.675924 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

2944 69.699679 192.168.41.36 → 192.168.55.245 TCP 76 3857268289 [TCP Retransmission] [TCP Port numbers reused] 52618 → 30161 [SYN] Seq=0 Win=62727 Len=0 MSS=8645 SACK_PERM TSval=1056369980 TSecr=0 WS=128

2947 69.699948 192.168.55.245 → 192.168.41.36 TCP 76 202589517 [TCP Retransmission] [TCP Port numbers reused] 30161 → 52618 [SYN, ACK] Seq=0 Ack=1 Win=62643 Len=0 MSS=8961 SACK_PERM TSval=1771983972 TSecr=1056369980 WS=128

2948 69.699961 192.168.41.36 → 192.168.55.245 TCP 56 3857268290 52618 → 30161 [RST] Seq=1 Win=0 Len=0

Summarizing the flow above:

Use the iptables-save command to inspect the corresponding iptables rules:

[ec2-user@ip-192-168-55-245 ~]$ sudo iptables-save | grep "service-nginx-demo"

-A KUBE-NODEPORTS -p tcp -m comment --comment "nginx-demo/service-nginx-demo" -m tcp --dport 30161 -j KUBE-SVC-WGW5ZPXP7HZEBG5V

-A KUBE-SEP-4S7NMWHTQECMLTDA -s 192.168.21.160/32 -m comment --comment "nginx-demo/service-nginx-demo" -j KUBE-MARK-MASQ

-A KUBE-SEP-4S7NMWHTQECMLTDA -p tcp -m comment --comment "nginx-demo/service-nginx-demo" -m tcp -j DNAT --to-destination 192.168.21.160:80

-A KUBE-SEP-I2YR57KE2BLSY73L -s 192.168.44.248/32 -m comment --comment "nginx-demo/service-nginx-demo" -j KUBE-MARK-MASQ

-A KUBE-SEP-I2YR57KE2BLSY73L -p tcp -m comment --comment "nginx-demo/service-nginx-demo" -m tcp -j DNAT --to-destination 192.168.44.248:80

-A KUBE-SEP-RRH7WDK5LM6YECXZ -s 192.168.73.88/32 -m comment --comment "nginx-demo/service-nginx-demo" -j KUBE-MARK-MASQ

-A KUBE-SEP-RRH7WDK5LM6YECXZ -p tcp -m comment --comment "nginx-demo/service-nginx-demo" -m tcp -j DNAT --to-destination 192.168.73.88:80

-A KUBE-SERVICES -d 10.100.135.233/32 -p tcp -m comment --comment "nginx-demo/service-nginx-demo cluster IP" -m tcp --dport 80 -j KUBE-SVC-WGW5ZPXP7HZEBG5V

-A KUBE-SVC-WGW5ZPXP7HZEBG5V -p tcp -m comment --comment "nginx-demo/service-nginx-demo" -m tcp --dport 30161 -j KUBE-MARK-MASQ

-A KUBE-SVC-WGW5ZPXP7HZEBG5V -m comment --comment "nginx-demo/service-nginx-demo" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-4S7NMWHTQECMLTDA

-A KUBE-SVC-WGW5ZPXP7HZEBG5V -m comment --comment "nginx-demo/service-nginx-demo" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-I2YR57KE2BLSY73L

-A KUBE-SVC-WGW5ZPXP7HZEBG5V -m comment --comment "nginx-demo/service-nginx-demo" -j KUBE-SEP-RRH7WDK5LM6YECXZ
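
The probabilities in the KUBE-SVC chain above (0.333, 0.5, then an unconditional rule) look uneven, but the rules are evaluated in order, so each one only sees traffic that earlier rules did not take. The Python sketch below works through that arithmetic; `effective_probabilities` is a hypothetical helper, not part of kube-proxy.

```python
from typing import List


def effective_probabilities(rule_probabilities: List[float]) -> List[float]:
    """Overall share of traffic per rule when rules are tried in sequence.

    A rule is only evaluated if every earlier rule did not match.
    """
    remaining = 1.0
    shares = []
    for probability in rule_probabilities:
        shares.append(remaining * probability)
        remaining *= 1.0 - probability
    return shares


# Values from the KUBE-SVC-WGW5ZPXP7HZEBG5V chain above. The last rule has no
# statistic match, so it always matches (probability 1.0).
CHAIN = [0.33333333349, 0.50000000000, 1.0]
ENDPOINTS = ["192.168.21.160:80", "192.168.44.248:80", "192.168.73.88:80"]

shares = effective_probabilities(CHAIN)
for endpoint, share in zip(ENDPOINTS, shares):
    print(f"{endpoint}: {share:.4f}")
```

Each of the three endpoints ends up with about one third of new connections, regardless of which node received the NodePort traffic.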

The node's routing table (ip route):

[ec2-user@ip-192-168-55-245 ~]$ ip route

default via 192.168.32.1 dev eth0

169.254.169.254 dev eth0

192.168.32.0/19 dev eth0 proto kernel scope link src 192.168.55.245

192.168.41.36 dev enie348bef9edc scope link

192.168.49.56 dev eni3c1ae1f0141 scope link
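
To read the routing table above, the following Python sketch performs a simplified longest-prefix match over the same entries. It is an approximation that ignores policy routing rules (ip rule) and route metrics, and the `lookup_device` helper is hypothetical.

```python
import ipaddress
from typing import List, Tuple

# (prefix, device) pairs transcribed from the ip route output above.
ROUTES: List[Tuple[str, str]] = [
    ("0.0.0.0/0", "eth0"),  # default via 192.168.32.1
    ("169.254.169.254/32", "eth0"),
    ("192.168.32.0/19", "eth0"),
    ("192.168.41.36/32", "enie348bef9edc"),
    ("192.168.49.56/32", "eni3c1ae1f0141"),
]


def lookup_device(destination: str) -> str:
    """Return the device of the most specific route covering the destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), device)
        for prefix, device in ROUTES
        if address in ipaddress.ip_network(prefix)
    ]
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(lookup_device("192.168.41.36"))   # netshoot Pod
print(lookup_device("192.168.155.87"))  # NLB address seen in the capture
```

Under these assumptions, traffic addressed to the netshoot Pod (192.168.41.36) is delivered over its local host route rather than leaving through eth0, while traffic to the NLB address falls through to the default route.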

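The NLB documentation [4] notes that hairpinning [5] or loopback is not supported when client IP preservation is enabled. In other words, when a client is itself one of the backends in the NLB target group and its request is forwarded by the NLB back to itself, the connection times out.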
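
One way to read the captures in light of this limitation is to compare connection 4-tuples. The Python sketch below is only a model, not a packet-level simulation: the addresses and ports are copied from the tshark output, while the `Packet` type and `reply_matches` helper are hypothetical.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def reply_matches(sent: Packet, reply: Packet) -> bool:
    """A reply belongs to a connection only if it mirrors the sent 4-tuple."""
    return (reply.src_ip, reply.src_port, reply.dst_ip, reply.dst_port) == (
        sent.dst_ip,
        sent.dst_port,
        sent.src_ip,
        sent.src_port,
    )


# SYN sent by the netshoot Pod to the NLB (tcp.stream 28).
syn_to_nlb = Packet("192.168.41.36", 52618, "192.168.155.87", 80)

# SYN-ACK that actually reached the Pod, sourced from the node's NodePort
# (tcp.stream 29). The client IP was preserved, so the reply went straight
# back to the Pod instead of returning through the NLB.
syn_ack_from_node = Packet("192.168.55.245", 30161, "192.168.41.36", 52618)

# SYN-ACK the Pod would need to see in order to complete the handshake.
syn_ack_from_nlb = Packet("192.168.155.87", 80, "192.168.41.36", 52618)

print(reply_matches(syn_to_nlb, syn_ack_from_node))
print(reply_matches(syn_to_nlb, syn_ack_from_nlb))
```

The first check fails and the second succeeds. That is in line with the Pod answering the node's SYN-ACK with an RST while it keeps retransmitting the original SYN to the NLB.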