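Remember yesterday's packstack parameter --os-neutron-ml2-mechanism-drivers=ovn? When OVN is used as Neutron's mechanism driver, tenant networks natively support the geneve type. When instances on a geneve tenant network talk to each other across nodes, their traffic is encapsulated with Geneve and carried through a tunnel. Today let's look at how a Geneve tenant network behaves.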

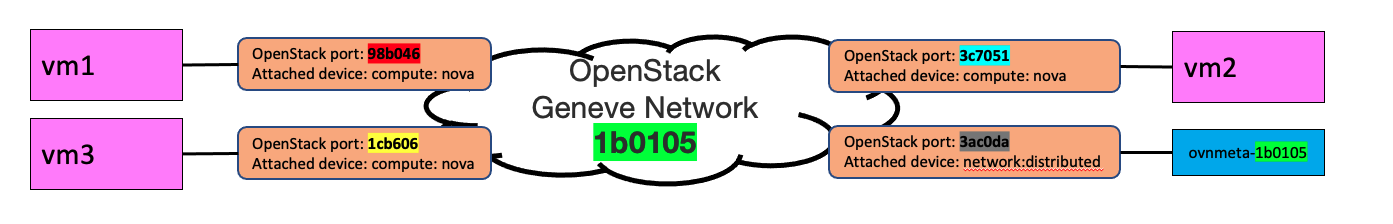

First, let's look at the OpenStack network we will experiment with today. We will put three VMs on the same Geneve network and examine the connectivity between them.

```shell
openstack network create --provider-network-type geneve --provider-segment 123 n1
openstack subnet create --subnet-range 172.16.100.0/24 --network n1 n1subnet
```

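Creating a geneve-type network differs from creating a local-type network: you must use --provider-segment to specify the VNI (Virtual Network Identifier) that the Geneve network will use. The VNI is a 24-bit field, so its maximum value is 2^24 - 1 (16,777,215).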
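The VNI is a 24-bit field, so a quick range check before `openstack network create` can catch a bad `--provider-segment` value early. The following is only an illustrative sketch. `is_valid_vni` is a hypothetical helper. The lower bound of 1 is an assumption based on the range Neutron usually accepts for Geneve segments and was not verified in this setup.

```shell
# Largest value that fits in the 24-bit Geneve VNI field: 2^24 - 1.
MAX_VNI=$(( (1 << 24) - 1 ))   # 16777215
# Assumed lower bound (Neutron usually does not accept 0 for Geneve segments).
MIN_VNI=1

# is_valid_vni: hypothetical helper; succeeds if $1 is a decimal integer
# between MIN_VNI and MAX_VNI.
is_valid_vni() {
    case "$1" in
        ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
    esac
    [ "$1" -ge "$MIN_VNI" ] && [ "$1" -le "$MAX_VNI" ]
}

SEGMENT=123
if is_valid_vni "$SEGMENT"; then
    echo "VNI $SEGMENT is in range $MIN_VNI..$MAX_VNI"
    # openstack network create --provider-network-type geneve --provider-segment "$SEGMENT" n1
fi
```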

```shell
openstack server group create --policy affinity odd_affinity
openstack server group create --policy anti-affinity odd_anti_affinity
```

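The two server groups are meant to place the three VMs we are about to create so that vm_1 and vm_3 share one compute node and vm_2 lands on the other. This lets us compare the traffic paths for same-node and cross-node communication. An anti-affinity group only keeps its own members apart. Because vm_2 is the only member of odd_anti_affinity, the scheduler does not strictly guarantee that it avoids vm_1's node, so check the resulting placement.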

```shell
IMAGE_ID=$(openstack image show cirros --format json | jq -r .id)
AFFINITY_ID=$(openstack server group show odd_affinity --format json | jq -r .id)
ANTI_AFFINITY_ID=$(openstack server group show odd_anti_affinity --format json | jq -r .id)

openstack server create --nic net-id=n1,v4-fixed-ip=172.16.100.10 --flavor m1.nano --image $IMAGE_ID --hint group=$AFFINITY_ID vm_1
openstack server create --nic net-id=n1,v4-fixed-ip=172.16.100.20 --flavor m1.nano --image $IMAGE_ID --hint group=$ANTI_AFFINITY_ID vm_2
openstack server create --nic net-id=n1,v4-fixed-ip=172.16.100.30 --flavor m1.nano --image $IMAGE_ID --hint group=$AFFINITY_ID vm_3
```

```
openstack server list --long -c Name -c Status -c Host -c "Power State"
+------+--------+-------------+------------+
| Name | Status | Power State | Host       |
+------+--------+-------------+------------+
| vm_3 | ACTIVE | Running     | compute-01 |
| vm_2 | ACTIVE | Running     | compute-02 |
| vm_1 | ACTIVE | Running     | compute-01 |
+------+--------+-------------+------------+
```
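vm_2 is the only member of its anti-affinity group, so a listing like the one above is what actually confirms the intended layout. The check can be scripted instead of eyeballed. This minimal sketch assumes the input is `NAME HOST` pairs, one VM per line with no spaces in names, such as `openstack server list --long -f value -c Name -c Host` should produce. `check_placement` is a hypothetical helper and not part of the OpenStack CLI.

```shell
# check_placement: hypothetical helper. Reads "NAME HOST" pairs on stdin and
# succeeds only if vm_1 and vm_3 share a host and vm_2 is on a different one.
check_placement() {
    awk '
        { host[$1] = $2 }
        END {
            if (host["vm_1"] == "" || host["vm_2"] == "" || host["vm_3"] == "") exit 2  # a VM is missing
            if (host["vm_1"] != host["vm_3"]) exit 1   # affinity not honored
            if (host["vm_2"] == host["vm_1"]) exit 1   # vm_2 did not land elsewhere
        }'
}

# Usage:
#   openstack server list --long -f value -c Name -c Host | check_placement && echo "layout OK"
```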

```
# Network
openstack network list --long -c ID -c "Network Type" | abbrev
+--------+--------------+
| ID     | Network Type |
+--------+--------------+
| 1b0105 | geneve       |
+--------+--------------+

# Port
openstack port list --long -c ID -c "Fixed IP Addresses" -c "Device Owner" | abbrev
+--------+------------------------------------------------+---------------------+
| ID     | Fixed IP Addresses                             | Device Owner        |
+--------+------------------------------------------------+---------------------+
| 98b046 | ip_address='172.16.100.10', subnet_id='ec52a9' | compute:nova        |
| 3c7051 | ip_address='172.16.100.20', subnet_id='ec52a9' | compute:nova        |
| 1cb606 | ip_address='172.16.100.30', subnet_id='ec52a9' | compute:nova        |
| 3ac0da | ip_address='172.16.100.2', subnet_id='ec52a9'  | network:distributed |
+--------+------------------------------------------------+---------------------+
```

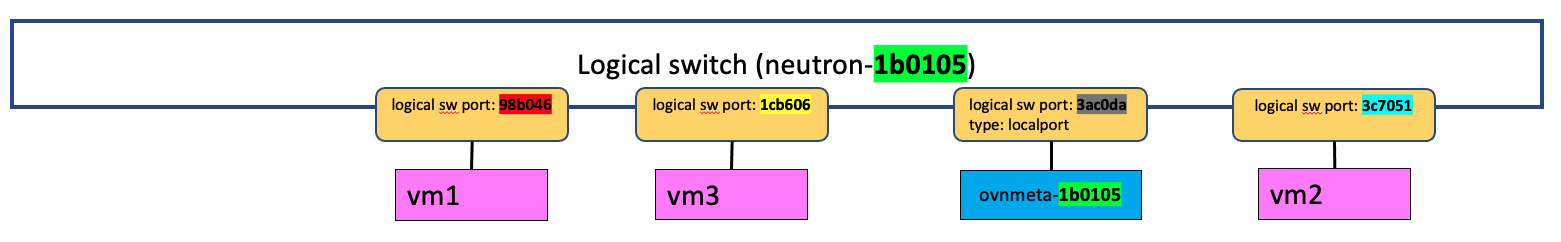

Now check the Northbound DB. The logical switch neutron-1b0105 has four ports: 1cb606, 3c7051, 98b046 and 3ac0da. Of these, 3ac0da has type localport.

```
ovn-nbctl show | abbrev
switch 365ee5 (neutron-1b0105) (aka n1)
    port 3ac0da
        type: localport
        addresses: ["fa:16:3e:af:47:b8 172.16.100.2"]
    port 1cb606
        addresses: ["fa:16:3e:e9:8c:34 172.16.100.30"]
    port 3c7051
        addresses: ["fa:16:3e:62:26:ff 172.16.100.20"]
    port 98b046
        addresses: ["fa:16:3e:29:f3:07 172.16.100.10"]
```

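The logical switch information in the Northbound DB maps one-to-one onto the OpenStack network information:

- Network 1b0105 corresponds to the OVN logical switch neutron-1b0105.
- Port 3ac0da, whose device owner is network:distributed, corresponds to the localport 3ac0da.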
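A small filter can pick out the localport without reading the whole `ovn-nbctl show` dump. This is a sketch. `list_localports` is a hypothetical helper, and it assumes each `type:` line follows its `port` line, as in the output above. The commented `ovn-nbctl find` query uses the generic database commands of `ovn-nbctl` and should return the same ports under their full names.

```shell
# list_localports: hypothetical helper. Reads `ovn-nbctl show` output on
# stdin and prints every logical switch port whose type is localport.
list_localports() {
    awk '
        $1 == "port" { port = $2 }                         # remember the current port
        $1 == "type:" && $2 == "localport" { print port }  # report it if it is a localport
    '
}

# Usage:
#   ovn-nbctl show | list_localports
# Roughly equivalent query against the Northbound DB:
#   ovn-nbctl --bare --columns=name find Logical_Switch_Port type=localport
```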

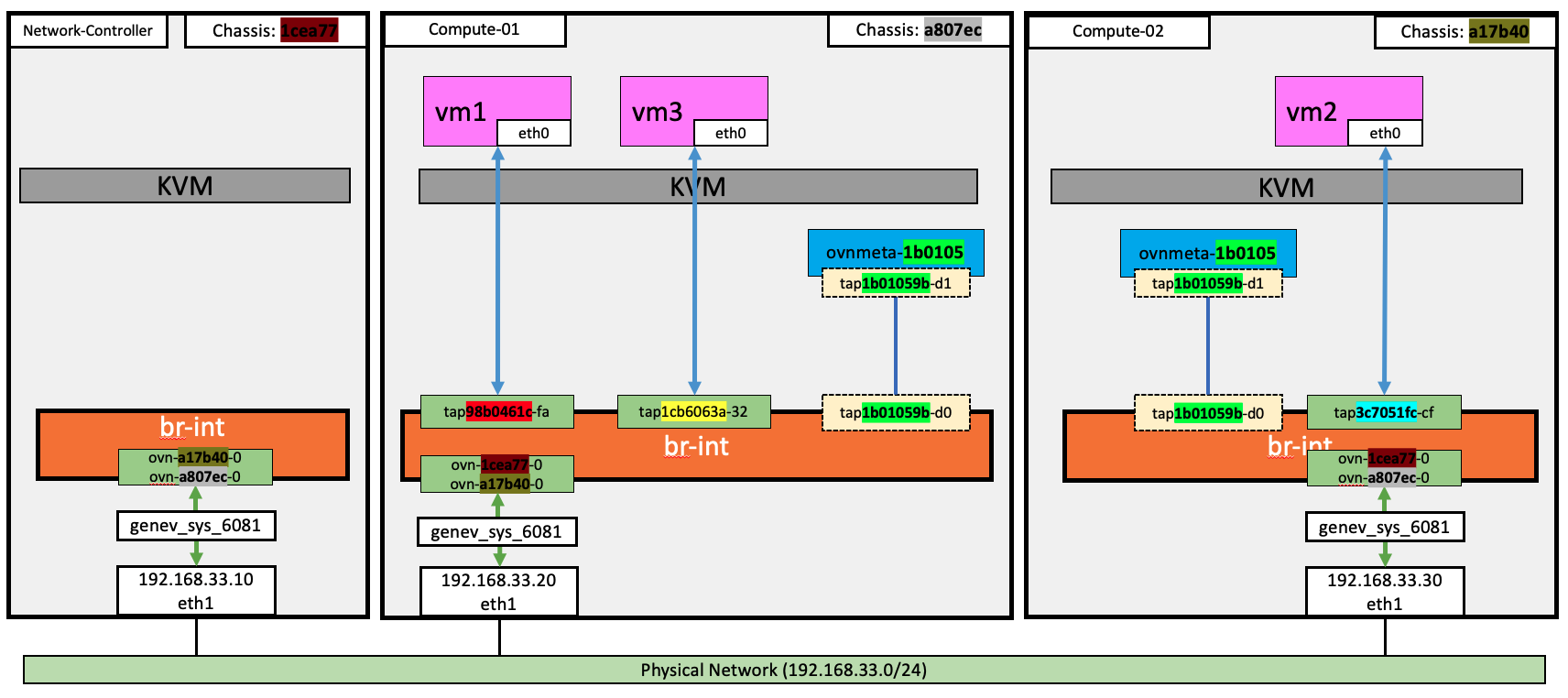

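The other three ports, whose device owner is compute:nova, correspond to the three VMs' logical ports. The figure above shows the bridges on the compute nodes and the network-controller node after the three instances were created.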

Today's discussion does not involve eth0 or eth2, so these two NICs are omitted from the figure.

Now check the Southbound DB:

- a807ec, a17b40 and 1cea77 are the three OVN nodes (chassis).
- Port 3c7051 is bound to chassis a17b40, i.e. 192.168.33.30. Likewise, ports 1cb606 and 98b046 are bound to chassis a807ec.
- 3ac0da is a localport, which we covered in Day-08: the localport that can only talk locally. Because this port exists on every chassis, it is not shown as bound to any particular chassis.

```
ovn-sbctl show | abbrev
Chassis "a17b40"
    hostname: compute-02
    Encap geneve
        ip: "192.168.33.30"
        options: {csum="true"}
    Port_Binding "3c7051"
Chassis "1cea77"
    hostname: network-controller
    Encap geneve
        ip: "192.168.33.10"
        options: {csum="true"}
Chassis "a807ec"
    hostname: compute-01
    Encap geneve
        ip: "192.168.33.20"
        options: {csum="true"}
    Port_Binding "1cb606"
    Port_Binding "98b046"
```
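The port-to-chassis bindings can also be flattened into one line per port. That format is easier to compare with the server list. This is a sketch only. `bindings_by_host` is a hypothetical awk filter, and it assumes the `Chassis` / `hostname:` / `ip:` / `Port_Binding` layout shown above.

```shell
# bindings_by_host: hypothetical helper. Reads `ovn-sbctl show` output on
# stdin and prints "PORT HOSTNAME ENCAP_IP" for every Port_Binding.
bindings_by_host() {
    awk '
        $1 == "Chassis"      { host = ""; ip = "" }                  # new chassis section
        $1 == "hostname:"    { host = $2; gsub(/"/, "", host) }
        $1 == "ip:"          { ip = $2; gsub(/"/, "", ip) }          # Encap IP of this chassis
        $1 == "Port_Binding" { port = $2; gsub(/"/, "", port); print port, host, ip }
    '
}

# Usage:
#   ovn-sbctl show | bindings_by_host
```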

On each node, check which ports br-int has.

network-controller (chassis 1cea77, 192.168.33.10):
- ovn-a17b40-0: tunnel to chassis a17b40
- ovn-a807ec-0: tunnel to chassis a807ec

compute-01 (chassis a807ec, 192.168.33.20):
- ovn-a17b40-0: tunnel to chassis a17b40
- ovn-1cea77-0: tunnel to chassis 1cea77
- tap1b01059b-d0: talks to the ovnmeta-1b0105 namespace. Its logical port is a localport, so this port exists on every compute node.
- tap98b0461c-fa: connects to VM1
- tap1cb60631-32: connects to VM3

compute-02 (chassis a17b40, 192.168.33.30):
- ovn-1cea77-0: tunnel to chassis 1cea77
- ovn-a807ec-0: tunnel to chassis a807ec
- tap1b01059b-d0: talks to the ovnmeta-1b0105 namespace. Its logical port is a localport, so this port exists on every compute node.
- tap3c7051fc-cf: connects to VM2

```
# On 192.168.33.30, list the ports on br-int
ovs-vsctl show
...
    Bridge br-int
        fail_mode: secure
        datapath_type: system
        Port ovn-a807ec-0
            Interface ovn-eeaddf-0
                type: geneve
                options: {csum="true", key=flow, remote_ip="192.168.33.20"}
        Port br-int
            Interface br-int
                type: internal
        Port tap1b01059b-d0
            Interface tap1b01059b-d0
        Port tap3c7051fc-cf
            Interface tap3c7051fc-cf
        Port ovn-1cea77-0
            Interface ovn-5a1b60-0
                type: geneve
                options: {csum="true", key=flow, remote_ip="192.168.33.10"}
```
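Each `ovn-<chassis>-0` port is a Geneve tunnel. Its `remote_ip` is the other chassis' Encap IP from the Southbound DB. As a sketch, the hypothetical `geneve_tunnels` filter below extracts those pairs from `ovs-vsctl show` output. The commented `ovs-vsctl find` query should list the same information directly from the database.

```shell
# geneve_tunnels: hypothetical helper. Reads `ovs-vsctl show` output on stdin
# and prints "PORT REMOTE_IP" for every port whose interface type is geneve.
geneve_tunnels() {
    awk '
        $1 == "Port"  { port = $2; gsub(/"/, "", port); is_geneve = 0 }
        $1 == "type:" { is_geneve = ($2 == "geneve") }
        /remote_ip=/ && is_geneve {
            match($0, /remote_ip="[^"]*"/)
            # skip the 11 characters of remote_ip=" and drop the closing quote
            print port, substr($0, RSTART + 11, RLENGTH - 12)
        }
    '
}

# Usage:
#   ovs-vsctl show | geneve_tunnels
# Direct database query:
#   ovs-vsctl --columns=name,options find Interface type=geneve
```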

On each compute node, check how the OVS ports map to logical ports.

compute-01 (192.168.33.20):
- tap98b0461c-fa -> logical port 98b046
- tap1cb60631-32 -> logical port 1cb606
- tap1b01059b-d0 -> logical port 3ac0da

compute-02 (192.168.33.30):
- tap3c7051fc-cf -> logical port 3c7051
- tap1b01059b-d0 -> logical port 3ac0da

3ac0da is a localport, so it appears on both compute nodes.

```
# On 192.168.33.30, check tap1b01059b-d0 and tap3c7051fc-cf
$ ovs-vsctl --columns external_ids find Interface name=tap3c7051fc-cf | abbrev
external_ids : {attached-mac="fa:16:3e:62:26:ff", iface-id="3c7051", iface-status=active, ovn-installed="true", ovn-installed-ts="1668560324916", vm-uuid="f79924"}

$ ovs-vsctl --columns external_ids find Interface name=tap1b01059b-d0 | abbrev
external_ids : {iface-id="3ac0da"}
```
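The `iface-id` key in `external_ids` is what ties an OVS interface to its OVN logical port. The sketch below walks every interface on br-int. `iface_id_of` is a hypothetical helper for the `--columns external_ids` output shown above. The commented loop uses `ovs-vsctl list-ifaces` and `ovs-vsctl get ... external_ids:iface-id`. Tunnel interfaces have no `iface-id`, so the loop discards the error from `get`.

```shell
# iface_id_of: hypothetical helper. Reads lines such as
#   external_ids : {attached-mac="...", iface-id="3c7051", ...}
# on stdin and prints the iface-id value (the OVN logical port name).
iface_id_of() {
    sed -n 's/.*iface-id="\{0,1\}\([^",}]*\).*/\1/p'
}

# Usage on a node (not run here):
#   for i in $(ovs-vsctl list-ifaces br-int); do
#       id=$(ovs-vsctl get Interface "$i" external_ids:iface-id 2>/dev/null)
#       printf '%s -> %s\n' "$i" "${id:-<none>}"
#   done
```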

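On the source host, genev_sys_6081 shows packets before encapsulation. On the destination host, it shows packets after decapsulation. In other words, genev_sys_6081 shows the original packet contents.

```
$ tcpdump -i genev_sys_6081 -vvnn icmp
```

On the interface that carries the tunnel, UDP port 6081 shows packets after encapsulation on the source side and before decapsulation on the destination side. In other words, udp/6081 shows the encapsulated packets.

```
$ tcpdump -vvneei eth1 'udp port 6081'
```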

When VM1 (172.16.100.10) pings VM2 (172.16.100.20), we capture packets at four points on 192.168.33.20: ovn-a17b40-0, tap98b0461c-fa, genev_sys_6081 and eth1. The packets are Geneve-encapsulated only on eth1. The other three points show the plain ICMP packets.

```
$ ovs-tcpdump -i ovn-a17b40-0 icmp
14:02:09.710212 IP 172.16.100.10 > 172.16.100.20: ICMP echo request, id 5400, seq 1, length 64
14:02:09.711118 IP 172.16.100.20 > 172.16.100.10: ICMP echo reply, id 5400, seq 1, length 64

$ ovs-tcpdump -i tap98b0461c-fa icmp
14:02:09.710064 IP 172.16.100.10 > 172.16.100.20: ICMP echo request, id 5400, seq 1, length 64
14:02:09.711183 IP 172.16.100.20 > 172.16.100.10: ICMP echo reply, id 5400, seq 1, length 64

$ tcpdump -i genev_sys_6081 icmp
14:02:41.470903 IP 172.16.100.10 > 172.16.100.20: ICMP echo request, id 38721, seq 1, length 64
14:02:41.471831 IP 172.16.100.20 > 172.16.100.10: ICMP echo reply, id 38721, seq 1, length 64

$ tcpdump -vvneei eth1 'udp port 6081'
14:03:01.838958 e6:cf:6b:dd:5b:b4 > da:ac:a9:da:bc:69, ethertype IPv4 (0x0800), length 156: (tos 0x0, ttl 64, id 9325, offset 0, flags [DF], proto UDP (17), length 142)
    192.168.33.20.ffserver > 192.168.33.30.geneve: [bad udp cksum 0x82d1 -> 0xa3dd!] Geneve, Flags [C], vni 0x1, proto TEB (0x6558), options [class Open Virtual Networking (OVN) (0x102) type 0x80(C) len 8 data 00020003]
    fa:16:3e:6d:5b:0c > fa:16:3e:02:f4:d9, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 12208, offset 0, flags [DF], proto ICMP (1), length 84)
    172.16.100.10 > 172.16.100.20: ICMP echo request, id 56428, seq 1, length 64
14:03:01.840014 da:ac:a9:da:bc:69 > e6:cf:6b:dd:5b:b4, ethertype IPv4 (0x0800), length 156: (tos 0x0, ttl 64, id 52114, offset 0, flags [DF], proto UDP (17), length 142)
    192.168.33.30.22950 > 192.168.33.20.geneve: [bad udp cksum 0x82d1 -> 0x5928!] Geneve, Flags [C], vni 0x1, proto TEB (0x6558), options [class Open Virtual Networking (OVN) (0x102) type 0x80(C) len 8 data 00030002]
    fa:16:3e:02:f4:d9 > fa:16:3e:6d:5b:0c, ethertype IPv4 (0x0800), length 98: (tos 0x0, ttl 64, id 57200, offset 0, flags [none], proto ICMP (1), length 84)
    172.16.100.20 > 172.16.100.10: ICMP echo reply, id 56428, seq 1, length 64
```
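The encapsulated capture can be checked by hand. According to ovn-architecture(7), OVN puts the logical datapath's tunnel key in the 24-bit VNI, which may explain why the capture shows vni 0x1 rather than the segment ID 123. OVN carries the logical ingress port (15 bits) and logical egress port (16 bits) in a Geneve option of class 0x0102, type 0x80. The sketch below decodes that option data and rebuilds the outer lengths from the inner frame. `decode_ovn_opt` is a hypothetical helper. The header sizes assume an outer IPv4 header without options and exactly one 8-byte Geneve option, as in this capture.

```shell
# decode_ovn_opt: hypothetical helper. Splits the 32-bit OVN option data
# (hex, as printed by tcpdump) into 1 reserved bit, a 15-bit logical
# ingress port key and a 16-bit logical egress port key.
decode_ovn_opt() {
    v=$(( 0x$1 ))
    printf 'ingress=%d egress=%d\n' $(( (v >> 16) & 0x7fff )) $(( v & 0xffff ))
}

decode_ovn_opt 00020003   # echo request: ingress=2 egress=3
decode_ovn_opt 00030002   # echo reply:   ingress=3 egress=2

# Rebuild the outer lengths from the inner Ethernet frame (98 bytes in the capture).
INNER_FRAME=98
OUTER_IP_LEN=$(( 20 + 8 + 8 + 8 + INNER_FRAME ))  # IPv4 + UDP + Geneve base header + option + inner frame
OUTER_FRAME_LEN=$(( 14 + OUTER_IP_LEN ))          # + outer Ethernet header
echo "outer IP length $OUTER_IP_LEN, outer frame length $OUTER_FRAME_LEN"   # 142, 156
```

The ingress and egress keys swap between the request and the reply, which is consistent with the same two logical ports exchanging a ping. The computed 142 and 156 match the `length 142` and `length 156` fields in the capture.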

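While VM1 pings VM3, capture at the same four points on 192.168.33.20: ovn-a17b40-0, tap98b0461c-fa, genev_sys_6081 and eth1. Packets appear only on tap98b0461c-fa, and the other three points capture nothing. The reason is simple. VM1 and VM3 are on the same node, so br-int forwards every packet directly from tap98b0461c-fa to tap1cb60631-32 without using a tunnel.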

```
$ ovs-tcpdump -i tap98b0461c-fa icmp
14:02:09.710064 IP 172.16.100.10 > 172.16.100.20: ICMP echo request, id 5400, seq 1, length 64
14:02:09.711183 IP 172.16.100.20 > 172.16.100.10: ICMP echo reply, id 5400, seq 1, length 64
```

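Today we extended what we learned about OVN on single-node OpenStack to a multi-node setup. Did you notice that with OVN as the networking solution, the underlying principles stay exactly the same? Doesn't it feel much more intuitive now?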