Loki Stack can collect logs in two ways.

Besides having Promtail scrape log files, an application can also push its logs directly to the Loki backend.

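Promtail scraping log files: Promtail can collect system logs and any other log files you point it at. After collecting the logs, Promtail processes them (for example, by attaching labels) and forwards them to the Loki backend. A Loki Stack deployed on Kubernetes uses Promtail in this way to collect logs from pods and other resources.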

Application pushing logs to the Loki backend: a service can send its own logs to Loki directly. For a web application built with Spring Boot, the community-developed library Loki4j can be used to send logs to the Loki backend.

This article covers the second approach: having the application push its logs to the Loki backend.

Note: Loki4j is a community-developed library built on Logback, so using it differs little from using Logback itself.
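To make "pushing logs to Loki" more concrete, here is a minimal sketch of the JSON body that Loki's HTTP push endpoint (`/loki/api/v1/push`) accepts: a list of streams, each with a label set and `[timestamp in nanoseconds, log line]` pairs. Loki4j handles batching, encoding, and sending for you, so this is only an illustration of the payload shape. The class and method names (`LokiPushPayloadSketch`, `buildPayload`) are hypothetical and not part of Loki4j.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

class LokiPushPayloadSketch {

    // Escape the characters most likely to appear in a label or log line.
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                case '\r': sb.append("\\r");  break;
                case '\t': sb.append("\\t");  break;
                default:   sb.append(c);
            }
        }
        return sb.toString();
    }

    // Build a body with one stream (one label set) carrying one log line.
    static String buildPayload(Map<String, String> labels, long timestampNanos, String line) {
        StringJoiner stream = new StringJoiner(",", "{", "}");
        labels.forEach((k, v) ->
                stream.add("\"" + jsonEscape(k) + "\":\"" + jsonEscape(v) + "\""));
        return "{\"streams\":[{\"stream\":" + stream
                + ",\"values\":[[\"" + timestampNanos + "\",\"" + jsonEscape(line) + "\"]]}]}";
    }

    public static void main(String[] args) {
        Map<String, String> labels = new LinkedHashMap<>();
        labels.put("job", "loki4jtest");
        labels.put("level", "INFO");
        // Loki expects the timestamp as a string of Unix epoch nanoseconds.
        long nowNanos = System.currentTimeMillis() * 1_000_000L;
        System.out.println(buildPayload(labels, nowNanos, "demo1 success"));
    }
}
```

The labels in the `stream` object are what you later filter on in Loki, which is why the appender configuration in this article spends most of its effort on defining them.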

Add the Loki4j dependency to pom.xml

<!-- https://mvnrepository.com/artifact/com.github.loki4j/loki-logback-appender -->
<dependency>
    <groupId>com.github.loki4j</groupId>
    <artifactId>loki-logback-appender</artifactId>
    <version>1.4.2</version>
</dependency>

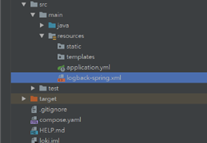

Create logback-spring.xml under src/main/resources

logback-spring.xml

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <springProperty name="name" source="spring.application.name" />

    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>
                %d{HH:mm:ss.SSS} %-5level %logger{36} %X{X-Request-ID} - %msg%n
            </pattern>
        </encoder>
    </appender>

    <appender name="LOKI" class="com.github.loki4j.logback.Loki4jAppender">
        <!-- Whether to enable Loki4j metrics (reported through Micrometer) -->
        <metricsEnabled>true</metricsEnabled>
        <!-- URL of the Loki push endpoint -->
        <http>
            <url>http://${Loki IP}:${Loki port}/loki/api/v1/push</url>
        </http>
        <format>
            <!-- Loki searches logs by label; labels defined here are attached to every log this service sends -->
            <label>
                <pattern>
                    job=loki4jtest
                    app=my-app
                    <!-- // Host handling the API call -->
                    host=${HOSTNAME}
                    <!-- // Log level, such as INFO or ERROR -->
                    level=%level
                    <!-- // Custom label: methoddd is the label name, dockercompose is its value -->
                    methoddd=dockercompose
                </pattern>
                <!-- // Regular expression that separates label pairs: newlines, plus any // comment lines -->
                <pairSeparator>regex:(\n|//[^\n]+)+</pairSeparator>
                <readMarkers>true</readMarkers>
            </label>
            <message>
                <pattern>
                    {
                    "level":"%level",
                    "class":"%logger{36}",
                    "thread":"%thread",
                    "message": "%message",
                    "requestId": "%X{X-Request-ID}"
                    }
                </pattern>
            </message>
        </format>
    </appender>

    <root level="INFO">
        <appender-ref ref="CONSOLE" />
        <appender-ref ref="LOKI" />
    </root>
</configuration>
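The `pairSeparator` regex is easier to understand when you see what it does to the label pattern. The sketch below splits a pattern into `key=value` pairs with the same regex, treating newlines and `//` comment lines as separators. It only illustrates the idea and is not Loki4j's actual parsing code; `LabelPatternSketch` and `parseLabels` are hypothetical names, and the host value `web-1` is made up.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class LabelPatternSketch {

    // Split the pattern on the separator regex, then split each pair on the first '='.
    static Map<String, String> parseLabels(String pattern, String pairSeparatorRegex) {
        Map<String, String> labels = new LinkedHashMap<>();
        for (String pair : pattern.split(pairSeparatorRegex)) {
            String trimmed = pair.trim();
            if (trimmed.isEmpty()) {
                continue; // indentation or blank space left between separators
            }
            int eq = trimmed.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Not a key=value pair: " + trimmed);
            }
            labels.put(trimmed.substring(0, eq).trim(), trimmed.substring(eq + 1).trim());
        }
        return labels;
    }

    public static void main(String[] args) {
        String pattern = String.join("\n",
                "job=loki4jtest",
                "app=my-app",
                "// host comment, skipped by the separator regex",
                "host=web-1",
                "methoddd=dockercompose");
        // Same regex as in <pairSeparator>, without the "regex:" prefix.
        System.out.println(parseLabels(pattern, "(\\n|//[^\\n]+)+"));
    }
}
```

Because a whole `// ...` line matches the separator, comments can sit between label pairs without becoming labels themselves.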

Below is a test controller I wrote:

package com.example.demo.controller;

import com.github.loki4j.slf4j.marker.LabelMarker;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.slf4j.MDC;
import org.springframework.web.bind.annotation.*;

import java.util.Map;

@RestController
@RequestMapping("/cloud/")
public class logproducer {

    // Create a Logger first; logs written through it are forwarded to Loki by the LOKI appender
    private final Logger LOG = LoggerFactory.getLogger(logproducer.class);

    // To attach extra labels to an individual log entry, use a LabelMarker.
    // Map.of rejects null values, so both MDC keys must be set when the supplier runs.
    // This marker is not used by the endpoints below.
    private final LabelMarker labelMarker = LabelMarker.of(() -> Map.of("audit", "true",
            "X-Request-ID", MDC.get("X-Request-ID"),
            "X-Correlation-ID", MDC.get("X-Correlation-ID")));

    @ResponseBody
    @GetMapping(value = "/demo1", produces = "application/json")
    public String cloudDemo1() {
        // Emit an INFO log with the text "demo1 success"; it carries the job, app, host, level, and methoddd labels
        LOG.info("demo1 success");
        return "Hello Cloud Demo !!!!!!!!";
    }

    int yy = 0;

    @ResponseBody
    @GetMapping(value = "/demo2", produces = "application/json")
    public String cloudDemo2(@RequestParam(name = "name", defaultValue = "test user") String name) {
        yy++;
        // Emit an INFO log reporting how many times this API has been called;
        // it carries the job, app, host, level, and methoddd labels
        LOG.info("demo2 success: this API has been called {} times", yy);
        // Create a LabelMarker that adds the label "callNumTest", whose value is yy converted to a string
        LabelMarker marker = LabelMarker.of("callNumTest", () -> String.valueOf(yy));
        // Emit an ERROR log with the text "Call Number successfully updated"; besides job, app, host,
        // level, and methoddd, it also carries the callNumTest label supplied by the LabelMarker
        LOG.error(marker, "Call Number successfully updated");
        return "Hello Cloud Demo " + " " + name + " !!!!!!!" + " this API has been called " + yy + " times";
    }

    @ResponseBody
    @GetMapping(value = "/demo3", produces = "application/json")
    public String cloudDemo3() {
        yy = 0;
        // Emit an INFO log saying the call counter was reset; it carries the job, app, host, level, and methoddd labels
        LOG.info("demo3 success: call counter has been reset to zero");
        // Emit an ERROR log with sample text (a Chinese pun); it carries the job, app, host, level, and methoddd labels
        LOG.error("一人做事一人當,小叮做事小叮噹");
        return "Call counter has been reset to zero!!!!!!!";
    }
}
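One thing to keep in mind about the test controller: Spring controllers are singletons by default, so the `yy` field is shared by every request thread, and `yy++` on a plain `int` is not atomic. That is fine for a demo, but if you want exact counts under concurrent calls, an `AtomicInteger` is one option. The sketch below uses only the JDK; `CallCounterSketch` is a hypothetical class, not part of the original controller.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class CallCounterSketch {

    private final AtomicInteger calls = new AtomicInteger();

    // Atomically increment the counter and return the new value (replaces yy++).
    int recordCall() {
        return calls.incrementAndGet();
    }

    // Reset the counter (replaces yy = 0).
    void reset() {
        calls.set(0);
    }

    int current() {
        return calls.get();
    }

    public static void main(String[] args) throws InterruptedException {
        CallCounterSketch counter = new CallCounterSketch();
        ExecutorService pool = Executors.newFixedThreadPool(8);
        // Simulate many concurrent requests hitting the same endpoint.
        for (int i = 0; i < 1000; i++) {
            pool.execute(counter::recordCall);
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        System.out.println("calls = " + counter.current());
    }
}
```

In the controller, the value returned by `recordCall()` could then be used for both the log message and the `callNumTest` label, so that the two always agree.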

Add the metrics dependencies to pom.xml

<!-- Produces the metrics -->
<dependency>
    <groupId>io.micrometer</groupId>
    <artifactId>micrometer-core</artifactId>
    <version>1.9.15</version>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
    <version>2.7.9</version>
</dependency>
<!-- Exports the metrics in Prometheus format -->
<dependency>
    <groupId>io.micrometer</groupId>
    <artifactId>micrometer-registry-prometheus</artifactId>
    <version>1.9.8</version>
</dependency>

Edit application.yaml

application.yaml

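server:
  # Port the service listens on
  port: 8088
management:
  endpoints:
    web:
      exposure:
        # Expose all Actuator endpoints
        include: '*'
      # Actuator is served at ip:port/actuator by default
      # Change the base path so the endpoints move from ip:port/actuator to ip:port/monitor
      base-path: /monitor
  server:
    # Serve the management endpoints on port 7000, so they are reachable at ip:7000/monitor
    port: 7000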

Then enable metrics in logback-spring.xml

logback-spring.xml

<appender name="LOKI" class="com.github.loki4j.logback.Loki4jAppender">
    ...
    <metricsEnabled>true</metricsEnabled>
</appender>

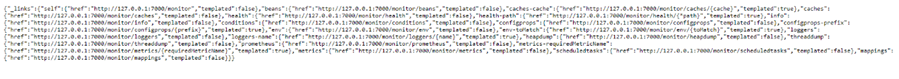

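Open http://${ip}:7000/monitor to browse the exposed Actuator endpoints; the Prometheus-format metrics are served at http://${ip}:7000/monitor/prometheus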

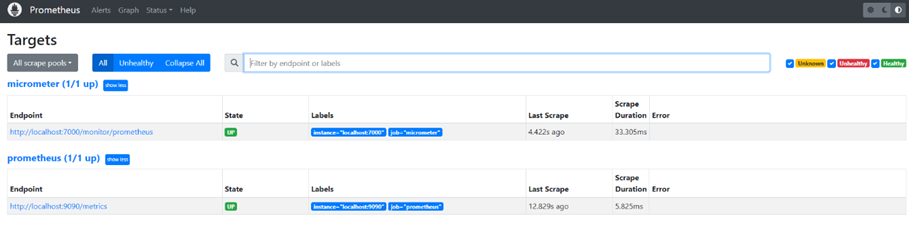
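The `/monitor/prometheus` endpoint returns plain text in the Prometheus exposition format: comment lines starting with `#`, followed by one `name{labels} value` line per sample. As a rough sketch of how to read it, the code below parses such text into a map. The sample text is invented for illustration and is not real output from this application. `MetricsTextSketch` is a hypothetical name, and the parser assumes lines carry no trailing timestamp and no special values such as `+Inf`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class MetricsTextSketch {

    // Map each "name{labels}" series to its numeric value, skipping comments and blank lines.
    static Map<String, Double> parseSamples(String body) {
        Map<String, Double> samples = new LinkedHashMap<>();
        for (String line : body.split("\n")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue; // HELP/TYPE comments or blank lines
            }
            // The value is the token after the last space; label values may contain spaces.
            int lastSpace = trimmed.lastIndexOf(' ');
            if (lastSpace < 0) {
                continue; // not a sample line
            }
            String series = trimmed.substring(0, lastSpace);
            double value = Double.parseDouble(trimmed.substring(lastSpace + 1));
            samples.put(series, value);
        }
        return samples;
    }

    public static void main(String[] args) {
        // Illustrative sample only, not captured from a running service.
        String body = String.join("\n",
                "# HELP jvm_threads_live_threads The current number of live threads",
                "# TYPE jvm_threads_live_threads gauge",
                "jvm_threads_live_threads 23.0",
                "jvm_memory_used_bytes{area=\"heap\",id=\"G1 Eden Space\",} 1.048576E7");
        parseSamples(body).forEach((series, value) -> System.out.println(series + " -> " + value));
    }
}
```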

Add a Prometheus scrape job by editing prometheus.yml

prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  # Newly added micrometer job
  - job_name: 'micrometer'
    metrics_path: /monitor/prometheus
    static_configs:
      - targets: ["localhost:7000"]

  - job_name: "prometheus"
    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.
    static_configs:
      - targets: ["localhost:9090"]
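To check a scrape job by hand, it helps to know which URL Prometheus will actually request. It combines the scheme (default `http`), the target, and `metrics_path` (default `/metrics`). The sketch below only illustrates that composition rule; `ScrapeUrlSketch` and `scrapeUrl` are hypothetical names, not part of any Prometheus client library.

```java
class ScrapeUrlSketch {

    // Combine scheme, target, and metrics path, applying the defaults noted in prometheus.yml.
    static String scrapeUrl(String scheme, String target, String metricsPath) {
        String effectiveScheme = (scheme == null || scheme.isEmpty()) ? "http" : scheme;
        String effectivePath = (metricsPath == null || metricsPath.isEmpty()) ? "/metrics" : metricsPath;
        return effectiveScheme + "://" + target + effectivePath;
    }

    public static void main(String[] args) {
        // The micrometer job overrides metrics_path; the prometheus job relies on the defaults.
        System.out.println(scrapeUrl(null, "localhost:7000", "/monitor/prometheus"));
        System.out.println(scrapeUrl(null, "localhost:9090", null));
    }
}
```

If the micrometer job shows as down in Prometheus, opening the first URL from the machine running Prometheus is a quick way to tell a wrong path or port apart from a network problem.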

Open http://${ip}:9090/ and check the Prometheus page to confirm that the micrometer job was registered

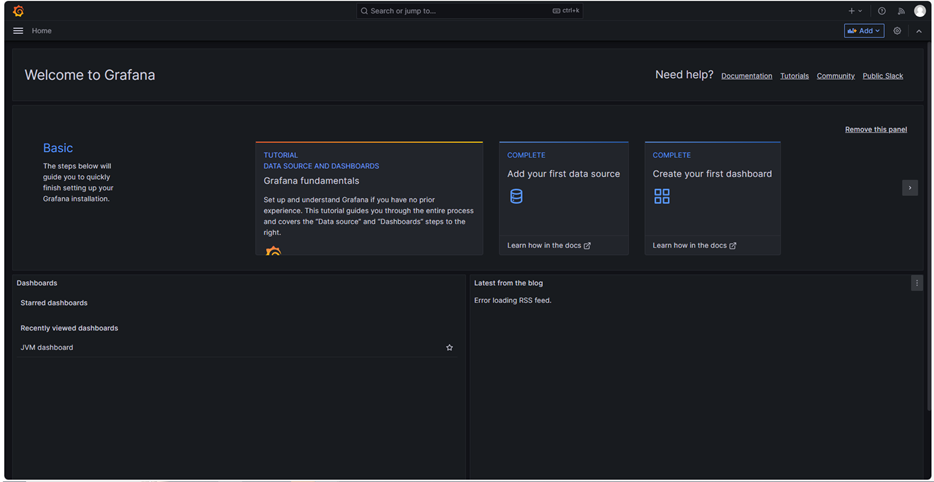

Open http://${target IP}:${Grafana port}/login to reach the login screen

The default credentials are username admin and password admin

On the home screen, click "Add your first data source"

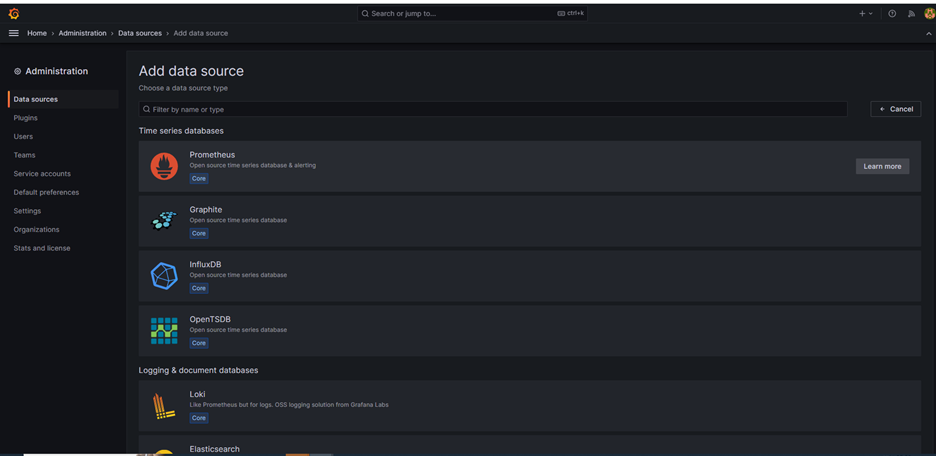

Select Prometheus

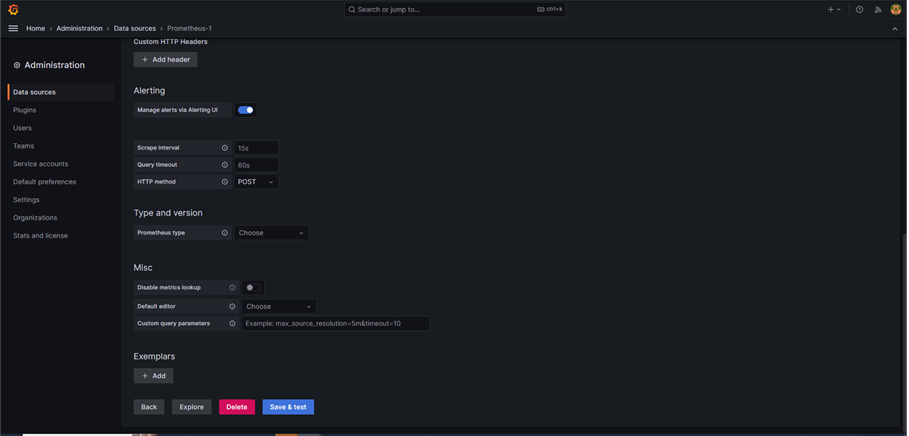

In the URL field, enter http://${target IP}:${Prometheus port}/

Click "Save & test"; a success message means the data source was added

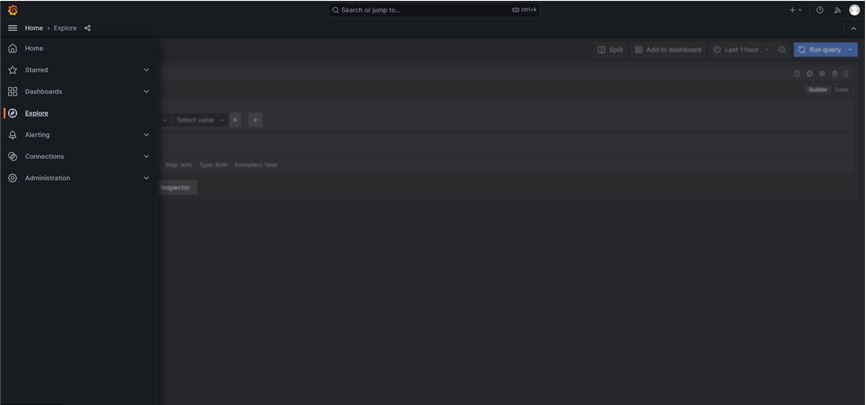

In "Explore" you can run PromQL queries against the metrics
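The same PromQL expressions can also be sent to Prometheus directly through its HTTP API (`/api/v1/query`), which is handy for checking a query outside Grafana. The sketch below only builds the request URL. The expression `jvm_memory_used_bytes{job="micrometer"}` assumes the scrape job name from prometheus.yml and a JVM metric that Micrometer typically exports, and `PromQueryUrlSketch` is a hypothetical helper name.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class PromQueryUrlSketch {

    // Build the URL for an instant query; the PromQL expression must be URL-encoded.
    static String instantQueryUrl(String prometheusBaseUrl, String promQl) {
        String base = prometheusBaseUrl.endsWith("/")
                ? prometheusBaseUrl.substring(0, prometheusBaseUrl.length() - 1)
                : prometheusBaseUrl;
        return base + "/api/v1/query?query=" + URLEncoder.encode(promQl, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String promQl = "jvm_memory_used_bytes{job=\"micrometer\"}";
        System.out.println(instantQueryUrl("http://localhost:9090/", promQl));
    }
}
```

The printed URL can be opened in a browser or fetched with any HTTP client; Prometheus replies with JSON containing the matching series.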

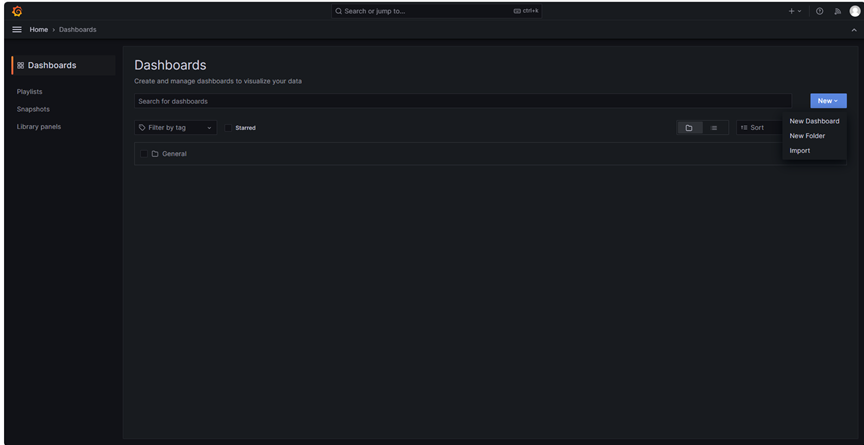

Select Dashboards, then choose New and Import

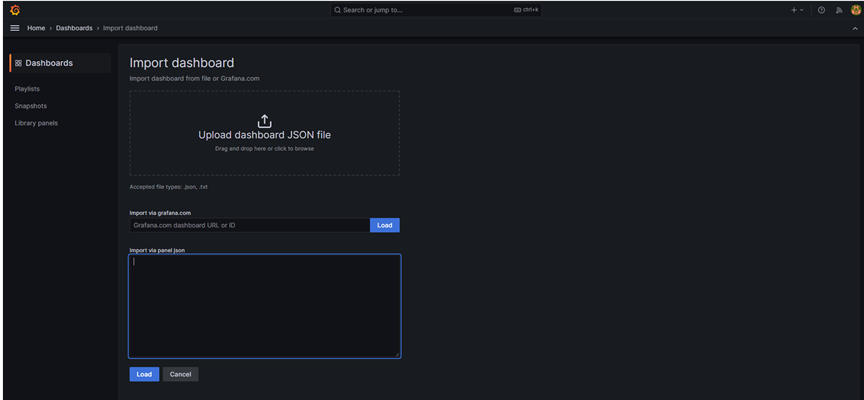

Paste the dashboard JSON or upload a .json file

The "JVM (Micrometer)" dashboard is recommended ( https://grafana.com/grafana/dashboards/4701-jvm-micrometer/ )