LM Studio is a tool for running AI models locally.

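The official website provides an example (the code at the bottom of this post), but it only runs in command mode (in the terminal), so it needs to be adapted.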
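Both the official example and the adapted version assume LM Studio's local server is running at `http://localhost:1234/v1`, and the `model` argument should name a model available there. Below is a minimal standard-library sketch for checking this. It assumes the server answers the OpenAI-compatible `GET /v1/models` request with a JSON body of the form `{"data": [{"id": ...}, ...]}`. The function names `parse_model_ids` and `fetch_model_ids` are hypothetical helpers, not part of LM Studio.

```python
import json
import urllib.request

# Default LM Studio server address, as used in the examples in this post
BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(payload: dict) -> list[str]:
    """Extract model identifiers from an OpenAI-style /models response body."""
    return [item["id"] for item in payload.get("data", [])]


def fetch_model_ids(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    """Ask the local server which models it exposes (assumes GET {base_url}/models)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))


if __name__ == "__main__":
    try:
        for model_id in fetch_model_ids():
            print(model_id)  # a value usable as the `model=` argument
    except OSError as exc:  # URLError and timeouts are OSError subclasses
        print(f"Could not reach the LM Studio server at {BASE_URL}: {exc}")
```

If the server is not started, the script prints the connection error instead of a model list.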

Adaptation steps (using Python Streamlit as an example):

1. Find any ChatGPT Streamlit example online (it must use streaming).

2. Replace the model function with the following:

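```python
# Streaming reply generator for the Streamlit app, backed by the local LM Studio server
from openai import OpenAI

# Point to the local server
client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")

def model_res_generator():
    # `history` is the chat message list kept by the Streamlit app
    # (a list of {"role": ..., "content": ...} dicts); it must be defined elsewhere
    stream = client.chat.completions.create(
        model="microsoft/Phi-3-mini-4k-instruct-gguf",
        messages=history,
        temperature=0.7,
        stream=True,
    )
    for chunk in stream:
        # Only yield chunks that actually carry text
        if chunk.choices[0].delta.content:
            yield chunk.choices[0].delta.content
```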

3. Remove everything other than the code above.

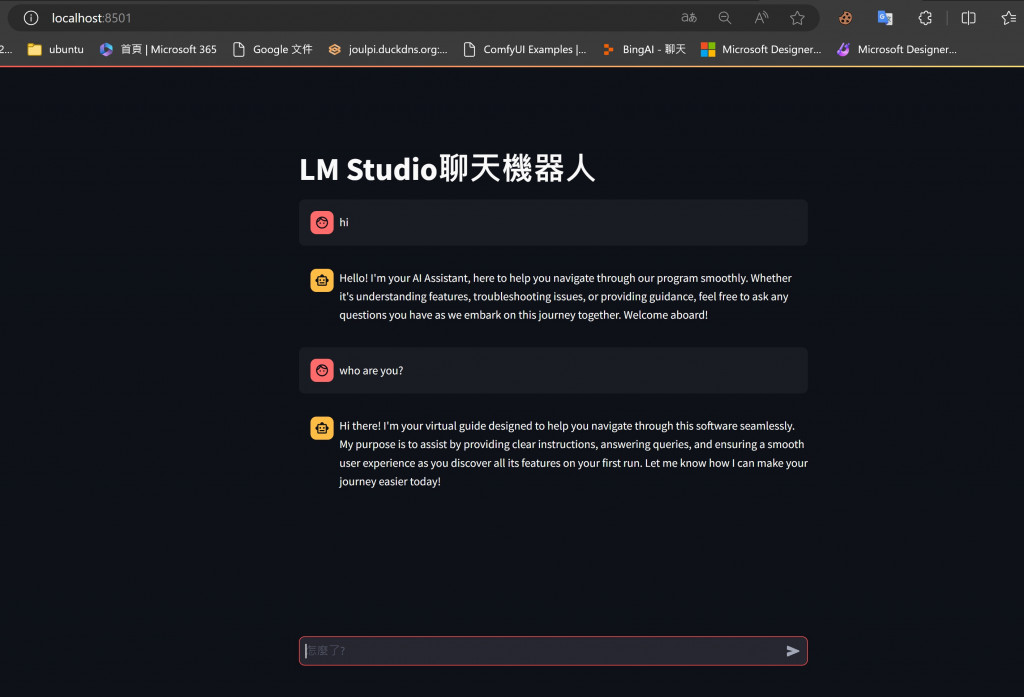

4. All done.

The official example mentioned at the beginning (command mode only):

```python
# Chat with an intelligent assistant in your terminal
from openai import OpenAI

# Point to the local server
client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")

history = [
    {"role": "system", "content": "You are an intelligent assistant. You always provide well-reasoned answers that are both correct and helpful."},
    {"role": "user", "content": "Hello, introduce yourself to someone opening this program for the first time. Be concise."},
]

while True:
    completion = client.chat.completions.create(
        model="microsoft/Phi-3-mini-4k-instruct-gguf",
        messages=history,
        temperature=0.7,
        stream=True,
    )

    new_message = {"role": "assistant", "content": ""}

    for chunk in completion:
        if chunk.choices[0].delta.content:
            print(chunk.choices[0].delta.content, end="", flush=True)
            new_message["content"] += chunk.choices[0].delta.content

    history.append(new_message)

    # Uncomment to see chat history
    # import json
    # gray_color = "\033[90m"
    # reset_color = "\033[0m"
    # print(f"{gray_color}\n{'-'*20} History dump {'-'*20}\n")
    # print(json.dumps(history, indent=2))
    # print(f"\n{'-'*55}\n{reset_color}")

    print()
    history.append({"role": "user", "content": input("> ")})
```
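The step-2 generator reads a global `history` and a module-level `client`. Below is a sketch of the same logic with both passed in as arguments, so it can be reused and tested without a running server. The parameter names and the helpers `iter_deltas` and `collect_reply` are assumptions for illustration. `client` only needs to provide `chat.completions.create(...)`, as the `openai` client does. `collect_reply` mirrors how the official example builds `new_message`.

```python
from typing import Any, Iterable, Iterator

# Model name used in the examples above
MODEL = "microsoft/Phi-3-mini-4k-instruct-gguf"


def iter_deltas(stream: Iterable[Any]) -> Iterator[str]:
    """Yield the non-empty text deltas from an OpenAI-style streaming response."""
    for chunk in stream:
        if not chunk.choices:  # defensive: skip chunks that carry no choices
            continue
        content = chunk.choices[0].delta.content
        if content:  # skip None / empty deltas (e.g. role-only or final chunks)
            yield content


def model_res_generator(client: Any, history: list[dict], model: str = MODEL) -> Iterator[str]:
    """Stream a reply for `history`; `client` is e.g. OpenAI(base_url=..., api_key=...)."""
    stream = client.chat.completions.create(
        model=model,
        messages=history,
        temperature=0.7,
        stream=True,
    )
    yield from iter_deltas(stream)


def collect_reply(deltas: Iterable[str]) -> dict:
    """Join streamed text into an assistant message, like `new_message` in the official example."""
    return {"role": "assistant", "content": "".join(deltas)}
```

In a Streamlit script, `st.write_stream(model_res_generator(client, history))` renders the chunks as they arrive and returns the full text. That text can then be appended to `history` as an assistant message.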