ConfigMap - Stop Hard-Coding Your Application Configuration ⚙️

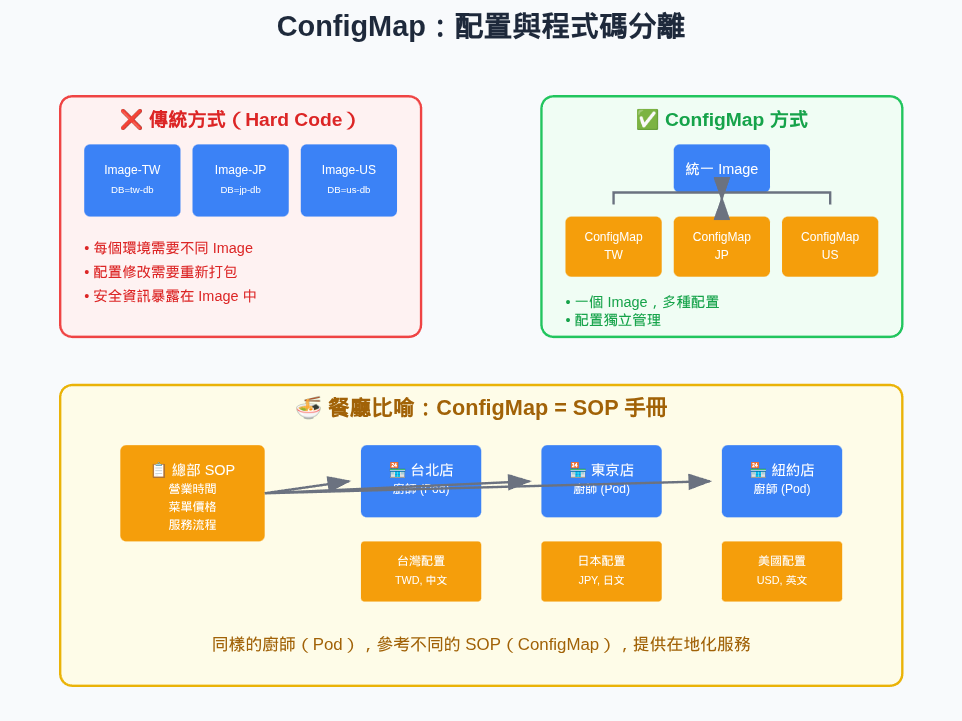

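Remember the previous chapter, where we learned resource management so that our Pods no longer go hungry? Our Pods now run reliably, but have you ever run into this: every time you change an application setting (a database connection, an API endpoint, a feature flag), you have to rebuild the Docker image?

Imagine you own a restaurant chain. Every branch has its own menu prices and opening hours. If every adjustment meant reprinting all the SOP handbooks, wouldn't that be a pain? ConfigMap solves exactly this problem of separating configuration from code!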

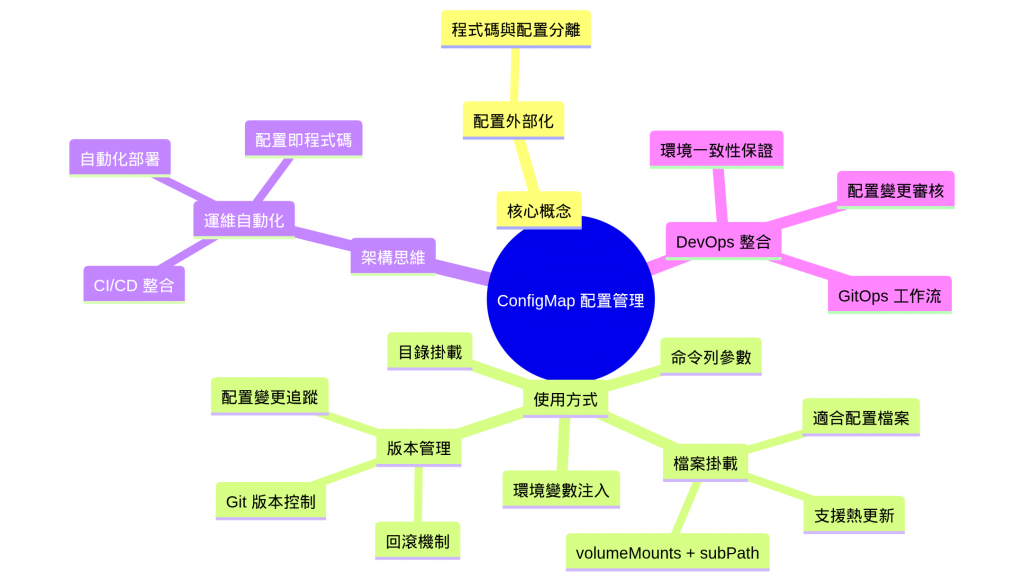

✅ Understand why configuration should be externalized: learn to decouple configuration from code

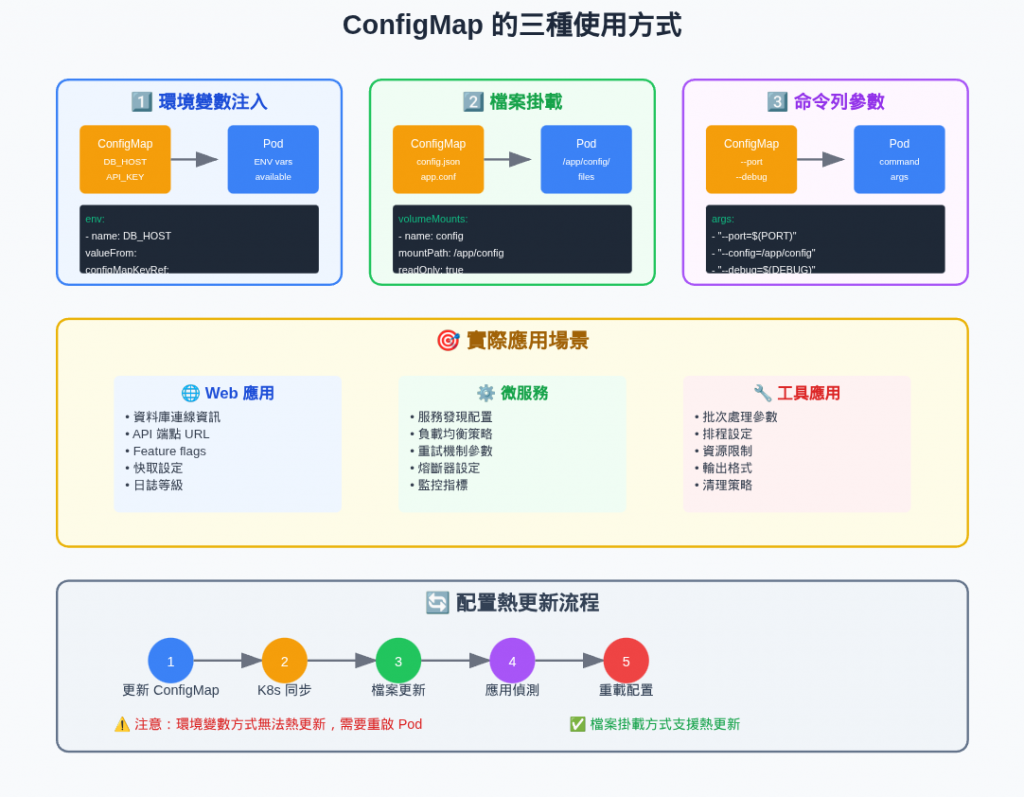

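✅ Master the different ways to use a ConfigMap: environment variables, file mounts, command-line arguments

✅ Implement configuration hot reloading: update configuration without restarting containers

✅ Develop a configuration-management mindset: move from hard-coded values to a configuration-driven architecture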

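The disaster scene without configuration management

Imagine you are the IT lead at a multinational company, and every country's system needs its own configuration: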

# Disaster scenario: hard-coded configuration 😱
FROM node:16
COPY . /app
WORKDIR /app

# TW build
ENV DB_HOST=tw-db.company.com
ENV CURRENCY=TWD
ENV LANGUAGE=zh-TW

# JP build
# Deploying to Japan means rebuilding the image... 🔴
# ENV DB_HOST=jp-db.company.com
# ENV CURRENCY=JPY
# ENV LANGUAGE=ja-JP

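What problems does this cause?

🔴 One application, many images: every environment needs its own image to maintain

🔴 Deployment complexity: the same image cannot be deployed to different environments

🔴 Security risk: sensitive data (such as API keys) is baked into the image

🔴 Maintenance nightmare: changing a single setting means rerunning the whole CI/CD pipeline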

# docker-compose.yml
version: '3'
services:
  web:
    image: my-app:latest
    environment:
      - DB_HOST=${DB_HOST}
      - CURRENCY=${CURRENCY}
    volumes:
      - ./config.json:/app/config.json

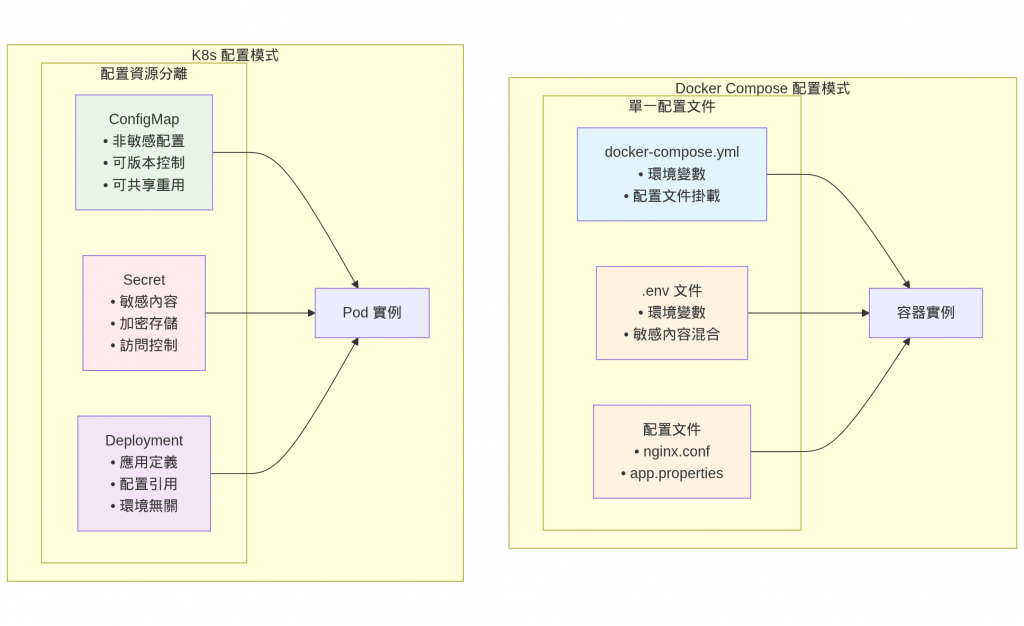

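Passing environment variables and mounting files with Docker Compose is already much better than hard-coding, but it still has limits in a K8s cluster:

🟡 Hard-to-manage files: configuration files end up scattered across nodes

🟡 Version control problems: configuration change history is hard to track

🟡 Hard to share: multiple Pods cannot easily share one copy of the configuration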

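ConfigMap is like a restaurant's standard operating procedure handbook

Imagine you run a restaurant chain:

# Restaurant SOP handbook = ConfigMap
business_hours: "09:00-22:00"
chefs_recommendation: "beef noodles"
price_list:
  beef_noodles: 280
  pork_rib_noodles: 260
  wonton_noodles: 240

Every chef (Pod) can consult the same handbook (ConfigMap), while each branch (environment) may carry its own edition.

So a ConfigMap is essentially a key-value object in K8s, designed to hold non-sensitive configuration data.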
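To make the "same image, different handbook per branch" idea concrete, here is a minimal sketch in POSIX shell. The `render_configmap` helper is hypothetical (it is not part of kubectl); it only prints a ConfigMap manifest from `KEY=VALUE` pairs, reusing the TW and JP values from the Dockerfile above. It assumes values contain no double quotes.

```shell
#!/bin/sh
# render_configmap NAME KEY=VALUE... : print a ConfigMap manifest to stdout.
# Hypothetical helper for illustration only. Values are emitted as quoted
# strings because ConfigMap data values must be strings.
render_configmap() {
    name=$1
    shift
    printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n' "$name"
    for pair in "$@"; do
        key=${pair%%=*}     # text before the first "="
        value=${pair#*=}    # text after the first "="
        printf '  %s: "%s"\n' "$key" "$value"
    done
}

# Same image, one ConfigMap per region
render_configmap app-config-tw DB_HOST=tw-db.company.com CURRENCY=TWD LANGUAGE=zh-TW
echo '---'
render_configmap app-config-jp DB_HOST=jp-db.company.com CURRENCY=JPY LANGUAGE=ja-JP
```

The output could be piped into `kubectl apply -f -`. In day-to-day work, `kubectl create configmap NAME --from-literal=KEY=VALUE --dry-run=client -o yaml` produces an equivalent manifest without a custom helper.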

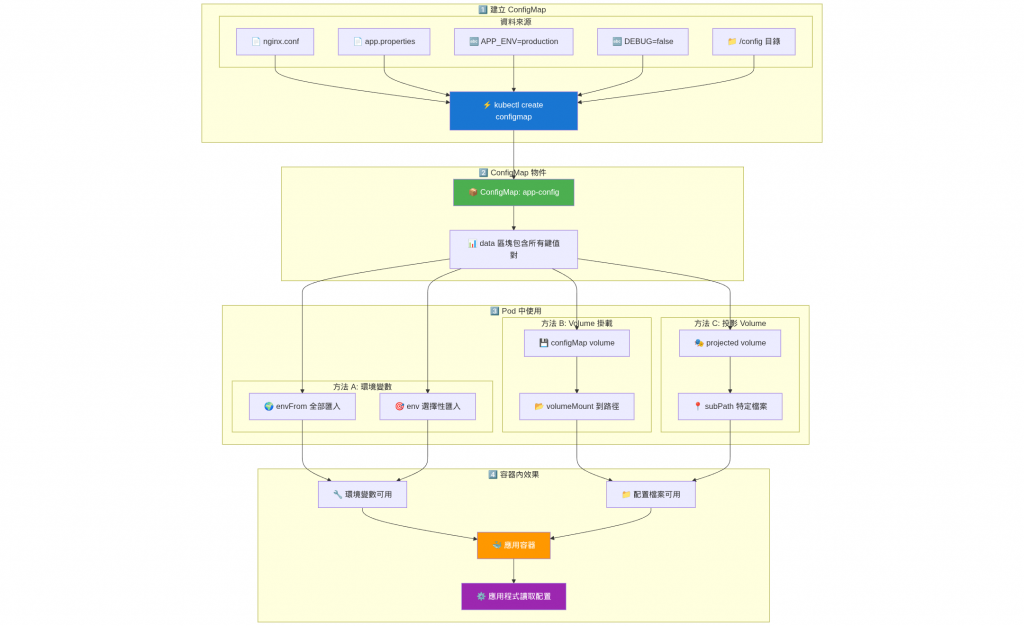

Environment variables are the most common approach, like handing every employee a work badge

# app-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
  namespace: default
data:
  # Simple key-value settings
  DATABASE_HOST: "mysql.default.svc.cluster.local"
  DATABASE_PORT: "3306"
  APP_ENV: "production"
  DEBUG_MODE: "false"
  MAX_CONNECTIONS: "100"
  CACHE_TTL: "3600"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
      - name: app
        image: nginx:1.21
        # Option 1: import every key of the ConfigMap as environment variables
        envFrom:
        - configMapRef:
            name: app-config
        # Option 2: reference selected keys only
        env:
        - name: DB_HOST
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: DATABASE_HOST
        - name: DB_PORT
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: DATABASE_PORT

# Create the ConfigMap
> kubectl apply -f app-config.yaml

# Inspect the ConfigMap contents
> kubectl get configmap app-config -o yaml

# Check the Pod's environment variables
> kubectl exec -it deployment/web-app -- env | grep -E "(DATABASE|APP_ENV)"
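On the application side, it helps to fail fast when an expected variable is missing and to fall back to defaults for optional ones. The following is a minimal sketch of a container entrypoint fragment, not something the manifests above require; `require_env` is a hypothetical helper name, and the variable names are the ones defined in app-config.

```shell
#!/bin/sh
# require_env VAR...: succeed only if every named variable is set and non-empty.
require_env() {
    missing=0
    for var in "$@"; do
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "missing required setting: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Optional settings fall back to a default when the ConfigMap omits them
: "${CACHE_TTL:=3600}"
: "${MAX_CONNECTIONS:=100}"

if require_env DATABASE_HOST DATABASE_PORT; then
    echo "connecting to $DATABASE_HOST:$DATABASE_PORT (cache ttl ${CACHE_TTL}s)"
else
    echo "refusing to start with incomplete configuration" >&2
fi
```

Because environment variables are fixed when the container starts, a check like this runs once at startup; picking up a changed ConfigMap value still requires restarting the Pod.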

File mounts suit complex configuration files, like giving an employee a complete operations manual

# nginx-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  # Complete nginx configuration file
  nginx.conf: |
    events {
      worker_connections 1024;
    }
    http {
      upstream backend {
        server app1.default.svc.cluster.local:8080;
        server app2.default.svc.cluster.local:8080;
      }
      server {
        listen 80;
        location / {
          proxy_pass http://backend;
          proxy_set_header Host $host;
          proxy_set_header X-Real-IP $remote_addr;
        }
        location /health {
          return 200 "OK";
        }
      }
    }
  # Additional configuration file
  mime.types: |
    types {
      text/html html htm shtml;
      text/css css;
      application/javascript js;
      application/json json;
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-proxy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-proxy
  template:
    metadata:
      labels:
        app: nginx-proxy
    spec:
      containers:
      - name: nginx
        image: nginx:1.21
        ports:
        - containerPort: 80
        volumeMounts:
        # Mount the ConfigMap as files
        - name: nginx-config-volume
          mountPath: /etc/nginx/nginx.conf
          subPath: nginx.conf # mount one specific file (subPath mounts do not receive live ConfigMap updates)
        - name: nginx-config-volume
          mountPath: /etc/nginx/mime.types
          subPath: mime.types
      volumes:
      - name: nginx-config-volume
        configMap:
          name: nginx-config

# Deploy nginx
kubectl apply -f nginx-config.yaml

# Inspect the mounted configuration file
kubectl exec -it deployment/nginx-proxy -- cat /etc/nginx/nginx.conf

# Verify that the nginx configuration is valid
kubectl exec -it deployment/nginx-proxy -- nginx -t

Mounting the whole ConfigMap as a directory suits multi-file configuration

# multi-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-configs
data:
  database.yml: |
    production:
      host: mysql.default.svc.cluster.local
      port: 3306
      database: myapp_production
      pool: 20
  redis.yml: |
    production:
      host: redis.default.svc.cluster.local
      port: 6379
      db: 0
  application.properties: |
    server.port=8080
    logging.level.root=INFO
    spring.profiles.active=production
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: spring-app
  template:
    metadata:
      labels:
        app: spring-app
    spec:
      containers:
      - name: app
        image: openjdk:11-jre-slim
        command: ["sleep", "3600"] # for testing only
        volumeMounts:
        # Mount the whole ConfigMap as a directory (one file per key)
        - name: config-volume
          mountPath: /app/config
      volumes:
      - name: config-volume
        configMap:
          name: app-configs

# Deploy the application
kubectl apply -f multi-config.yaml

# Inspect the mounted directory structure
kubectl exec -it deployment/spring-app -- ls -la /app/config

# Inspect an individual configuration file
kubectl exec -it deployment/spring-app -- cat /app/config/database.yml

Passing ConfigMap values as container startup arguments

# cli-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cli-config
data:
  log-level: "info"
  port: "8080"
  workers: "4"
  timeout: "30s"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cli-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cli-app
  template:
    metadata:
      labels:
        app: cli-app
    spec:
      containers:
      - name: app
        image: nginx:1.21
        command: ["nginx"]
        args:
        - "-g"
        # $(LOG_LEVEL) is expanded by Kubernetes from the env var defined below
        - "daemon off; error_log /dev/stderr $(LOG_LEVEL);"
        - "-c"
        - "/etc/nginx/nginx.conf"
        env:
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: cli-config
              key: log-level
        # nginx has no port flag, so PORT is only exposed as an environment variable
        - name: PORT
          valueFrom:
            configMapKeyRef:
              name: cli-config
              key: port
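Kubernetes expands `$(VAR_NAME)` references inside `command` and `args` from the container's environment variables, which is how a ConfigMap value can reach a startup argument. When the application needs more than plain substitution, a small entrypoint wrapper is another option. The sketch below assumes a hypothetical application with `--port` and `--log-level` flags; neither the flags nor the `build_args` helper come from the manifests above.

```shell
#!/bin/sh
# build_args: turn environment variables into command-line flags, with
# defaults for anything the ConfigMap does not provide.
# The flag names belong to a hypothetical application.
build_args() {
    printf '%s %s' "--port=${PORT:-8080}" "--log-level=${LOG_LEVEL:-info}"
}

# In a real entrypoint the last line would exec the application with these flags
echo "would run: my-app $(build_args)"
```

The wrapper keeps defaults in one place, at the cost of an extra script in the image; for simple cases the `$(VAR_NAME)` expansion in the Pod spec is usually enough.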

Dynamic configuration updates are one of ConfigMap's most powerful features!

Hands-on: live configuration updates

# dynamic-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: dynamic-config
data:
  app.properties: |
    # Application settings
    feature.new_ui=false
    feature.payment_v2=false
    cache.ttl=3600
    rate.limit=1000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dynamic-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: dynamic-app
  template:
    metadata:
      labels:
        app: dynamic-app
    spec:
      containers:
      - name: app
        image: nginx:1.21
        volumeMounts:
        - name: config-volume
          mountPath: /app/config
      # Sidecar that watches for configuration changes
      - name: config-reloader
        image: jimmidyson/configmap-reload:v0.5.0
        args:
        - --volume-dir=/app/config
        # The app must expose a reload endpoint at this URL (stock nginx does not)
        - --webhook-url=http://localhost:8080/reload
        volumeMounts:
        - name: config-volume
          mountPath: /app/config
      volumes:
      - name: config-volume
        configMap:
          name: dynamic-config

Testing a dynamic update

# Deploy the application
kubectl apply -f dynamic-config.yaml

# Check the initial configuration
kubectl exec -it deployment/dynamic-app -c app -- cat /app/config/app.properties

# Update the ConfigMap
kubectl patch configmap dynamic-config --patch='
data:
  app.properties: |
    # Application settings - updated
    feature.new_ui=true
    feature.payment_v2=true
    cache.ttl=1800
    rate.limit=2000
'

# Wait for the change to propagate, then check again (usually takes up to about a minute)
sleep 60
kubectl exec -it deployment/dynamic-app -c app -- cat /app/config/app.properties

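Important reminders ⚠️

Limitations of configuration updates:

- Environment variables: the Pod must be restarted for changes to take effect

- File mounts: files update automatically (except subPath mounts, which never receive updates), but the application must support hot reloading

- Update delay: changes usually take up to about a minute to reach the Pod (kubelet sync period plus cache propagation delay)

- Application support: the application has to actively re-read the configuration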
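The last point is the one most often missed: the kubelet refreshes the mounted file, but nothing tells the application. A minimal polling sketch is shown below. It compares content checksums rather than watching the file itself, since ConfigMap volumes are refreshed by swapping a symlink. The helper names and the 10-second interval are assumptions for illustration.

```shell
#!/bin/sh
# config_fingerprint FILE: print a checksum and byte count of the file's content.
config_fingerprint() {
    cksum < "$1"
}

# reload_if_changed FILE LAST_FINGERPRINT: print the current fingerprint and
# return 0 when it differs from LAST_FINGERPRINT, 1 when nothing changed.
reload_if_changed() {
    current=$(config_fingerprint "$1")
    echo "$current"
    [ "$current" != "$2" ]
}

# Example loop (commented out so the sketch terminates when run as-is):
# last=$(config_fingerprint /app/config/app.properties)
# while sleep 10; do
#     if new=$(reload_if_changed /app/config/app.properties "$last"); then
#         echo "config changed, reloading"   # e.g. signal the app here
#     fi
#     last=$new
# done
```

A sidecar such as configmap-reload does the same job with file-system notifications; polling is simply the smallest version that can be written and tested without extra tooling.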

# Good naming and labeling practices
apiVersion: v1
kind: ConfigMap
metadata:
  name: myapp-config-v1.2.0 # include the version number
  namespace: production
  labels:
    app: myapp
    component: backend
    version: v1.2.0
    environment: production
  annotations:
    description: "MyApp backend configuration for production"
    last-updated: "2024-01-15T10:30:00Z"
    updated-by: "devops-team"
data:
  # Configuration content...
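A version number in the name has to be bumped by hand. A common alternative is to derive the suffix from the content itself, so that any change yields a new ConfigMap name and therefore a new rollout once the Deployment references it (Kustomize's configMapGenerator works on the same principle). The sketch below is a simplified illustration: `configmap_name` is a hypothetical helper, and `cksum` is used only because it is available everywhere, not because it is a strong hash.

```shell
#!/bin/sh
# configmap_name BASE FILE: print BASE plus a suffix derived from the file's
# content (checksum and byte count), e.g. myapp-config-<crc>-<size>.
configmap_name() {
    suffix=$(cksum < "$2" | tr ' ' '-')
    printf '%s-%s\n' "$1" "$suffix"
}

# Example usage (commented out because it needs a cluster):
# name=$(configmap_name myapp-config app.properties)
# kubectl create configmap "$name" --from-file=app.properties -n production
```

The trade-off is that old ConfigMaps pile up and need periodic cleanup, while rollbacks become trivial because the previous configuration still exists under its old name.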

# Base configuration - shared by all environments
apiVersion: v1
kind: ConfigMap
metadata:
  name: myapp-base-config
data:
  app.name: "MyApplication"
  app.version: "1.2.0"
  logging.format: "json"
---
# Environment-specific configuration - production
apiVersion: v1
kind: ConfigMap
metadata:
  name: myapp-prod-config
data:
  database.host: "prod-mysql.company.com"
  cache.size: "1000"
  debug.enabled: "false"
---
# Feature flags - can be updated independently
apiVersion: v1
kind: ConfigMap
metadata:
  name: myapp-features
data:
  feature.new_ui: "true"
  feature.payment_v2: "false"

Handling sensitive data

# ❌ Wrong: storing sensitive data in a ConfigMap
apiVersion: v1
kind: ConfigMap
metadata:
  name: bad-config
data:
  database.password: "super-secret-password" # This is wrong!
  api.key: "sk-1234567890abcdef" # This is wrong too!
---
# ✅ Right: use a Secret for sensitive data
apiVersion: v1
kind: Secret
metadata:
  name: app-secrets
type: Opaque
data:
  database.password: c3VwZXItc2VjcmV0LXBhc3N3b3Jk # base64-encoded
  api.key: c2stMTIzNDU2Nzg5MGFiY2RlZg==
---
# The ConfigMap holds only non-sensitive configuration
apiVersion: v1
kind: ConfigMap
metadata:
  name: good-config
data:
  database.host: "mysql.default.svc.cluster.local"
  database.port: "3306"
  database.name: "myapp"
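The values under a Secret's `data` field are base64-encoded, which is an encoding rather than encryption: anyone who can read the Secret can decode it. The short sketch below reproduces the two encoded values from the manifest above; the helper names are made up for illustration, and `base64 -d` assumes a GNU or BusyBox style `base64`.

```shell
#!/bin/sh
# encode_secret VALUE: base64-encode a value for the data field of a Secret.
# printf is used instead of echo so no trailing newline gets encoded.
encode_secret() {
    printf '%s' "$1" | base64
}

# decode_secret VALUE: reverse the encoding, showing that it offers no secrecy.
decode_secret() {
    printf '%s' "$1" | base64 -d
}

encode_secret 'super-secret-password'   # prints c3VwZXItc2VjcmV0LXBhc3N3b3Jk
decode_secret 'c2stMTIzNDU2Nzg5MGFiY2RlZg=='   # prints sk-1234567890abcdef
echo
```

Using a Secret still matters, because access to Secrets can be restricted separately from ConfigMaps, but the protection comes from access control rather than from the encoding.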

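# ConfigMap limits
Size limit: 1 MiB (1,048,576 bytes)
Number of keys: no hard limit, but keeping it under about 100 is recommended
Key names: may contain only alphanumeric characters, "-", "_" and "." (the ConfigMap's own name must be a valid DNS subdomain name)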
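These limits are easy to check before a deployment rather than discovering them from an API error. The sketch below is a simplified pre-flight check: the function names are hypothetical, the key check covers only the allowed character set, and the size check sums file sizes, which slightly underestimates the real object size because metadata also counts toward the limit.

```shell
#!/bin/sh
MAX_CONFIGMAP_BYTES=1048576   # 1 MiB

# is_valid_key KEY: keys may contain only letters, digits, "-", "_" and ".".
is_valid_key() {
    case $1 in
        ''|*[!A-Za-z0-9._-]*) return 1 ;;
        *) return 0 ;;
    esac
}

# fits_in_configmap FILE...: succeed if the files together stay under 1 MiB.
fits_in_configmap() {
    total=0
    for file in "$@"; do
        size=$(wc -c < "$file")
        total=$((total + size))
    done
    [ "$total" -lt "$MAX_CONFIGMAP_BYTES" ]
}

# Example usage (file names are placeholders):
# is_valid_key "database.yml" || echo "invalid key name"
# fits_in_configmap database.yml redis.yml || echo "too large, split the ConfigMap"
```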

# If the configuration is too large, consider splitting it
apiVersion: v1
kind: ConfigMap
metadata:
  name: large-config-part1
data:
  config.part1: |
    # First part of the configuration...
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: large-config-part2
data:
  config.part2: |
    # Second part of the configuration...

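# Delay before a ConfigMap update reaches a Pod (volume mounts only)
Typical delay: up to about a minute
Worst case: kubelet sync period (60 seconds by default) + cache propagation delay

# If the change must take effect immediately, restart the Pods
kubectl rollout restart deployment/myapp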
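Because the delay varies, a fixed `sleep 60` in a script is either too long or occasionally too short. A small polling helper is one alternative. The sketch below is generic shell, not a kubectl feature; `wait_until` is a made-up name, and the timeout and interval in the usage example are arbitrary.

```shell
#!/bin/sh
# wait_until TIMEOUT INTERVAL COMMAND...: rerun COMMAND every INTERVAL seconds
# until it succeeds; give up and return 1 once TIMEOUT seconds have elapsed.
wait_until() {
    timeout=$1
    interval=$2
    shift 2
    elapsed=0
    until "$@"; do
        if [ "$elapsed" -ge "$timeout" ]; then
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    return 0
}

# Example usage (commented out because it needs a cluster):
# wait_until 120 5 kubectl exec deployment/dynamic-app -c app -- \
#     grep -q 'feature.new_ui=true' /app/config/app.properties
```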

# ConfigMaps are a poor fit for binary files
# If you really need one, store it base64-encoded, and mind the size limit
apiVersion: v1
kind: ConfigMap
metadata:
  name: binary-config
binaryData:
  # Use binaryData instead of data
  logo.png: iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAYAAAAfFcSJAAAADUlEQVR42mP8/5+hHgAHggJ/PchI7wAAAABJRU5ErkJggg==

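Suppose you are a DevOps engineer at an e-commerce company and need to deploy the Grafana monitoring system to several environments (dev/staging/prod).

Each environment needs its own settings.

Let's solve this elegantly with ConfigMap!

Using a dashboard Text Panel as the example, we will mount ConfigMaps into the Grafana Pod and then update the panel's text content.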

.
├── grafana-deployment-only.yaml
├── grafana.ini
├── kind-cluster-config.yaml
├── manage-grafana.sh
├── provisioning
│   └── dashboards
│       ├── dashboard-providers.yaml
│       └── welcome-dashboard.json
└── update-dashboard.sh

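The files in the provisioning folder are Grafana provisioning files. Grafana can configure itself automatically from provisioning files, which fits a GitOps workflow. If you want to learn more, see chapter 9 of my book, OpenTelemetry 入門指南:建立全面可觀測性架構.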

**Step 1: Create a K8s cluster with Kind**

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30300
    hostPort: 30300
    protocol: TCP
  - containerPort: 30301
    hostPort: 30301
    protocol: TCP
  - containerPort: 30302
    hostPort: 30302
    protocol: TCP
  kubeadmConfigPatches:
  - |
    kind: InitConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "ingress-ready=true"

# Remove the previous cluster
kind delete cluster --name multi-node-cluster

# Create the K8s cluster
kind create cluster --name multi-node-cluster --config kind-cluster-config.yaml

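**Step 2: Create the Deployment and ConfigMaps (grafana-deployment-only.yaml)**

This file contains three Kubernetes resources: a Namespace, a Deployment, and a Service. The ConfigMaps it mounts (grafana.ini and the dashboard provisioning files) are created separately from files.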

# grafana-deployment-only.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitoring
  labels:
    app: grafana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      securityContext:
        fsGroup: 472
        runAsUser: 472
        runAsNonRoot: true
      containers:
      - name: grafana
        image: grafana/grafana:12.1.0
        ports:
        - containerPort: 3000
          name: http-grafana
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /robots.txt
            port: 3000
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 30
          successThreshold: 1
          timeoutSeconds: 2
        livenessProbe:
          failureThreshold: 3
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          tcpSocket:
            port: 3000
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 250m
            memory: 512Mi
          limits:
            cpu: 500m
            memory: 1Gi
        volumeMounts:
        - name: grafana-config
          mountPath: /etc/grafana/grafana.ini
          subPath: grafana.ini
        - name: grafana-provisioning-dashboards
          mountPath: /etc/grafana/provisioning/dashboards/dashboards.yaml
          subPath: dashboard-providers.yaml
        - name: grafana-dashboards
          mountPath: /var/lib/grafana/dashboards
        - name: grafana-storage
          mountPath: /var/lib/grafana
      volumes:
      - name: grafana-config
        configMap:
          name: grafana-config
      - name: grafana-provisioning-dashboards
        configMap:
          name: grafana-provisioning-dashboards
      - name: grafana-dashboards
        configMap:
          name: grafana-dashboards
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
  labels:
    app: grafana
spec:
  type: NodePort
  ports:
  - port: 3000
    protocol: TCP
    targetPort: 3000
    nodePort: 30300
  selector:
    app: grafana

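# Option 1: run kubectl yourself (the ConfigMaps must already exist)
kubectl apply -f grafana-deployment-only.yaml
kubectl wait --for=condition=available --timeout=300s deployment/grafana -n monitoring

# Option 2: run manage-grafana.sh
> ./manage-grafana.sh deploy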

[INFO] 🚀 Deploying Grafana...
[INFO] 📄 Creating ConfigMaps from standalone files...
namespace/monitoring created
[INFO] Creating grafana-config ConfigMap...
configmap/grafana-config created
[INFO] Creating grafana-provisioning-dashboards ConfigMap...
configmap/grafana-provisioning-dashboards created
[INFO] Creating grafana-dashboards ConfigMap...
configmap/grafana-dashboards created
[SUCCESS] ✅ All ConfigMaps created
namespace/monitoring configured
deployment.apps/grafana created
service/grafana created
[INFO] ⏳ Waiting for Grafana to start...
deployment.apps/grafana condition met
[SUCCESS] ✅ Grafana deployment complete!
🌐 Access URL: http://172.18.0.3:30300
👤 Username: admin
🔑 Password: admin123

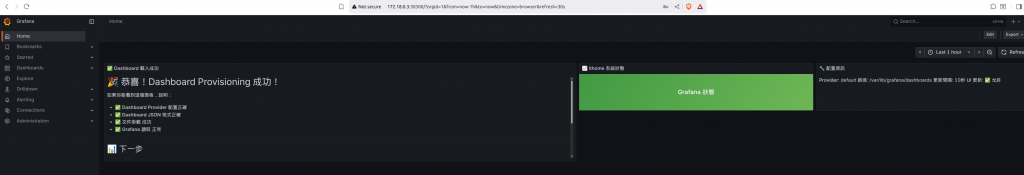

Open http://172.18.0.3:30300 and you will see the following screen:

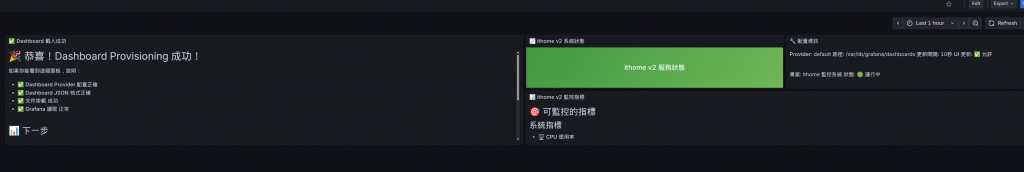

Next, edit welcome-dashboard.json and run:

# Option 1: update the ConfigMap yourself
kubectl create configmap grafana-dashboards \
    --from-file=provisioning/dashboards/welcome-dashboard.json \
    -n monitoring \
    --dry-run=client -o yaml | kubectl apply -f -

# Option 2: use manage-grafana.sh update-dashboard
./manage-grafana.sh update-dashboard provisioning/dashboards/welcome-dashboard.json

Wait about a minute, then press F5 to refresh the browser, and you will see the updated dashboard.

manage-grafana.sh

#!/bin/bash
# manage-grafana.sh

# Color definitions
RED='\033[0;31m'
GREEN='\033[0;32m'
YELLOW='\033[1;33m'
BLUE='\033[0;34m'
NC='\033[0m'

log_info() { echo -e "${BLUE}[INFO]${NC} $1"; }
log_success() { echo -e "${GREEN}[SUCCESS]${NC} $1"; }
log_warning() { echo -e "${YELLOW}[WARNING]${NC} $1"; }
log_error() { echo -e "${RED}[ERROR]${NC} $1"; }

# Check that the required files exist
check_files() {
    local missing_files=()

    if [[ ! -f "grafana.ini" ]]; then
        missing_files+=("grafana.ini")
    fi

    if [[ ! -f "provisioning/dashboards/dashboard-providers.yaml" ]]; then
        missing_files+=("provisioning/dashboards/dashboard-providers.yaml")
    fi

    if [[ ${#missing_files[@]} -gt 0 ]]; then
        log_error "Missing required files:"
        for file in "${missing_files[@]}"; do
            echo " - $file"
        done
        return 1
    fi

    return 0
}

# Create the directory structure
setup_directories() {
    log_info "📁 Creating directory structure..."
    mkdir -p provisioning/dashboards
    log_success "✅ Directory structure created"
}

# Create ConfigMaps from files
create_configmaps() {
    log_info "📄 Creating ConfigMaps from standalone files..."

    # Check the files
    if ! check_files; then
        return 1
    fi

    # Create the namespace
    kubectl create namespace monitoring --dry-run=client -o yaml | kubectl apply -f -

    # Create the grafana.ini ConfigMap
    log_info "Creating grafana-config ConfigMap..."
    kubectl create configmap grafana-config \
        --from-file=grafana.ini \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    # Create the provisioning ConfigMap
    log_info "Creating grafana-provisioning-dashboards ConfigMap..."
    kubectl create configmap grafana-provisioning-dashboards \
        --from-file=dashboard-providers.yaml=provisioning/dashboards/dashboard-providers.yaml \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    # Create the dashboards ConfigMap
    log_info "Creating grafana-dashboards ConfigMap..."

    # Include only .json files (this excludes dashboard-providers.yaml).
    # All files go into ONE kubectl call; running kubectl once per file would
    # emit several competing manifests for the same ConfigMap.
    local from_file_args=()
    local f
    while IFS= read -r -d '' f; do
        from_file_args+=("--from-file=$f")
    done < <(find provisioning/dashboards -name "*.json" -print0 2>/dev/null)

    # With no dashboard files this creates an empty ConfigMap
    kubectl create configmap grafana-dashboards \
        "${from_file_args[@]}" \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    log_success "✅ All ConfigMaps created"
}

# Deploy Grafana
deploy_grafana() {
    log_info "🚀 Deploying Grafana..."

    # Create the ConfigMaps first
    create_configmaps || return 1

    # Deploy Grafana
    kubectl apply -f grafana-deployment-only.yaml

    # Wait for the deployment to become available
    log_info "⏳ Waiting for Grafana to start..."
    kubectl wait --for=condition=available --timeout=300s deployment/grafana -n monitoring

    # Print access information
    NODE_IP=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[0].address}')
    log_success "✅ Grafana deployment complete!"
    echo "🌐 Access URL: http://$NODE_IP:30300"
    echo "👤 Username: admin"
    echo "🔑 Password: admin123"
}

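# Update grafana.ini (requires a restart)
update_grafana_ini() {
    log_info "🔧 Updating grafana.ini configuration..."

    if [[ ! -f "grafana.ini" ]]; then
        log_error "grafana.ini does not exist"
        return 1
    fi

    # Update the ConfigMap
    kubectl create configmap grafana-config \
        --from-file=grafana.ini \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    # Restart the Deployment
    log_warning "⚠️ grafana.ini changes require a Pod restart..."
    kubectl rollout restart deployment/grafana -n monitoring

    # Wait for the restart to finish
    kubectl rollout status deployment/grafana -n monitoring

    log_success "✅ Grafana configuration updated and restarted"
}

# Update the provisioning configuration
update_provisioning() {
    log_info "⚙️ Updating provisioning configuration..."

    if [[ ! -f "provisioning/dashboards/dashboard-providers.yaml" ]]; then
        log_error "dashboard-providers.yaml does not exist"
        return 1
    fi

    # Update the ConfigMap
    kubectl create configmap grafana-provisioning-dashboards \
        --from-file=dashboard-providers.yaml=provisioning/dashboards/dashboard-providers.yaml \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    # dashboard-providers.yaml is mounted via subPath, which does not receive
    # live ConfigMap updates, so restart the Deployment to pick up the change
    log_warning "⚠️ Provisioning changes require a Pod restart..."
    kubectl rollout restart deployment/grafana -n monitoring
    kubectl rollout status deployment/grafana -n monitoring

    log_success "✅ Provisioning configuration updated and Grafana restarted"
}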

# Update a dashboard
update_dashboard() {
    local dashboard_file=$1

    if [[ -z "$dashboard_file" ]]; then
        log_error "Please specify the dashboard file path"
        echo "Usage: $0 update-dashboard <dashboard-file.json>"
        return 1
    fi

    if [[ ! -f "$dashboard_file" ]]; then
        log_error "Dashboard file does not exist: $dashboard_file"
        return 1
    fi

    log_info "📊 Updating dashboard: $dashboard_file"

    # Update the ConfigMap.
    # Note: the ConfigMap is rebuilt from this one file, so dashboards that
    # were applied earlier under other keys are dropped; use
    # update-all-dashboards when several dashboards must be kept.
    kubectl create configmap grafana-dashboards \
        --from-file="$dashboard_file" \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    log_success "✅ Dashboard updated; Grafana sees the change once the ConfigMap volume syncs (about a minute)"
}

# Update all dashboards in one go
update_all_dashboards() {
    log_info "📊 Updating all dashboard files..."

    if [[ ! -d "provisioning/dashboards" ]]; then
        log_error "provisioning/dashboards directory does not exist"
        return 1
    fi

    # Find all .json files (null-delimited so paths with spaces are safe)
    local json_files=()
    local from_file_args=()
    local f
    while IFS= read -r -d '' f; do
        json_files+=("$f")
        from_file_args+=("--from-file=$f")
    done < <(find provisioning/dashboards -name "*.json" -print0)

    if [[ ${#json_files[@]} -eq 0 ]]; then
        log_warning "No .json dashboard files found"
        return 0
    fi

    # Update the ConfigMap with the .json files only
    kubectl create configmap grafana-dashboards \
        "${from_file_args[@]}" \
        -n monitoring \
        --dry-run=client -o yaml | kubectl apply -f -

    log_success "✅ All dashboards updated (${#json_files[@]} files)"
    for file in "${json_files[@]}"; do
        echo " - $(basename "$file")"
    done
}

# Show status
show_status() {
    log_info "📋 Grafana status"

    echo "=== Namespace ==="
    kubectl get namespace monitoring 2>/dev/null || echo "Namespace 'monitoring' does not exist"

    echo -e "\n=== ConfigMaps ==="
    kubectl get configmap -n monitoring 2>/dev/null || echo "No ConfigMaps"

    echo -e "\n=== Deployments ==="
    kubectl get deployment -n monitoring 2>/dev/null || echo "No Deployments"

    echo -e "\n=== Pods ==="
    kubectl get pods -n monitoring 2>/dev/null || echo "No Pods"

    echo -e "\n=== Services ==="
    kubectl get svc -n monitoring 2>/dev/null || echo "No Services"

    # Access information
    if kubectl get svc grafana -n monitoring &>/dev/null; then
        NODE_IP=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[0].address}' 2>/dev/null)
        echo -e "\n=== Access information ==="
        echo "🌐 URL: http://$NODE_IP:30300"
        echo "👤 Username: admin"
        echo "🔑 Password: admin123"
    fi
}

# Show the contents of a ConfigMap
show_configmap() {
    local configmap_name=$1

    if [[ -z "$configmap_name" ]]; then
        echo "Available ConfigMaps:"
        kubectl get configmap -n monitoring --no-headers | awk '{print " - " $1}'
        return 0
    fi

    log_info "📄 Showing ConfigMap: $configmap_name"
    kubectl get configmap "$configmap_name" -n monitoring -o yaml
}

# Clean up resources
cleanup() {
    log_warning "🗑️ Cleaning up Grafana resources..."

    read -p "Are you sure you want to delete all Grafana resources? (y/N): " -n 1 -r
    echo

    if [[ $REPLY =~ ^[Yy]$ ]]; then
        kubectl delete namespace monitoring
        log_success "✅ Cleanup complete"
    else
        log_info "Cleanup cancelled"
    fi
}

# Main function
main() {
    case $1 in
        "setup")
            setup_directories
            ;;
        "deploy")
            deploy_grafana
            ;;
        "create-configmaps")
            create_configmaps
            ;;
        "update-ini")
            update_grafana_ini
            ;;
        "update-provisioning")
            update_provisioning
            ;;
        "update-dashboard")
            update_dashboard "$2"
            ;;
        "update-all-dashboards")
            update_all_dashboards
            ;;
        "status")
            show_status
            ;;
        "show-configmap")
            show_configmap "$2"
            ;;
        "cleanup")
            cleanup
            ;;
        *)
            echo "Grafana file management script"
            echo ""
            echo "Usage: $0 <command> [options]"
            echo ""
            echo "Commands:"
            echo " setup - Create the directory structure"
            echo " deploy - Deploy Grafana end to end"
            echo " create-configmaps - Create ConfigMaps from files"
            echo " update-ini - Update grafana.ini (requires a restart)"
            echo " update-provisioning - Update the provisioning configuration"
            echo " update-dashboard <file> - Update the given dashboard"
            echo " update-all-dashboards - Update all dashboard files"
            echo " status - Show deployment status"
            echo " show-configmap [name] - Show ConfigMap contents"
            echo " cleanup - Remove all resources"
            echo ""
            echo "File layout:"
            echo " grafana.ini"
            echo " provisioning/dashboards/dashboard-providers.yaml"
            echo " provisioning/dashboards/*.json"
            echo " grafana-deployment-only.yaml"
            exit 1
            ;;
    esac
}

main "$@"

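Advantages of creating ConfigMaps from files:

✅ File separation - JSON and YAML are managed separately

✅ Version control - changes can be tracked with Git

✅ Easy editing - use a dedicated editor

✅ Reuse - the same dashboard can serve multiple environments

✅ Team collaboration - several people can edit different files at the same time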
