2020.06.24:修改錯誤的Schwefel

本篇主要是紀錄Particle swarm optimization (PSO). A tutorial的閱讀心得。

最近腦子動到想用PSO來訓練神經網路,所以蒐集了一些相關資料,預計將分享5篇文章

第一篇是敲門磚,主要是描述基本算法框架,並且進一步的提供超參數的設定指南

第二篇是我碩班用的演算法,我只能說這個真的很猛

第三篇的作者群和第二篇相似,趁這次機會強迫自己看一下

第四篇是很早期的PSO+NN,雖然內容簡單但勝在可讀性絕佳

第五篇我認為是近代PSO+NN的聖經,但我今天測試的結果是不太好,找機會在分享

完全沒碰過的請直接看PSO 粒子群演算法

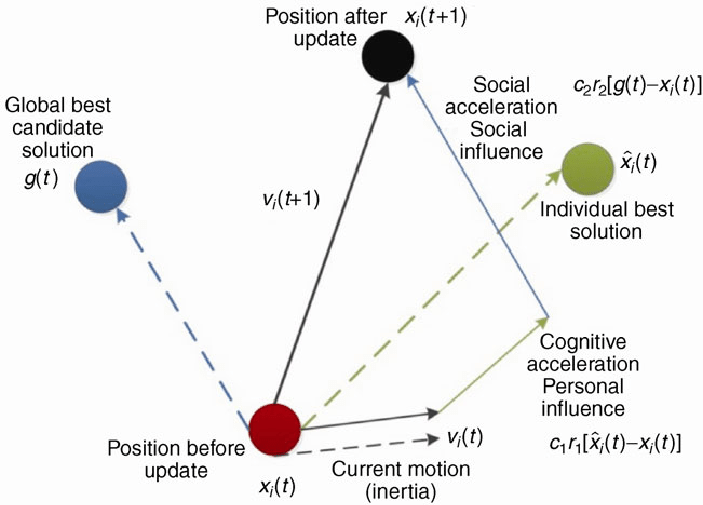

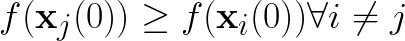

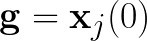

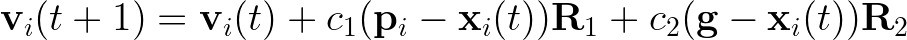

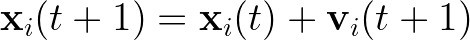

簡單來說,一群個被稱為粒子的潛在解在多維度解空間中找尋最佳解位置,粒子每次移動都會參考自身過往曾找到的的最佳解位置、所有例子的過往最佳解位置,然後再決定移動方向還有距離。

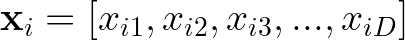

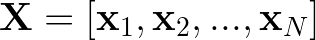

假設解空間總共有D個維度,則粒子i的結構應設計為

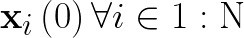

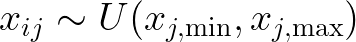

因為這個演算法明子叫作粒子'群',所以我們會將N個粒子灑在解空間中,所以

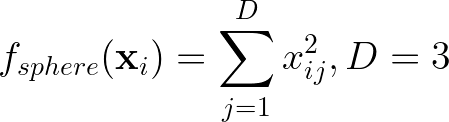

編程上,我習慣用row代表粒子,而column代表維度,用Sphere Function作為範例:

這邊我直接上結果,若想進一步了解請看原文

從前文2.2.2(b)可知,x(t+1)=x(t)+v(t+1),若v(t+1)過大會導致:

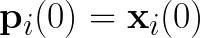

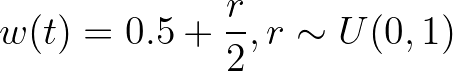

U是指uniform distribution(均勻分布)

為防止速度爆炸(velocity explosion)因此初始速度V(0)建議極小值或者直接設0

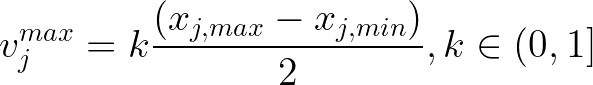

k是超參數

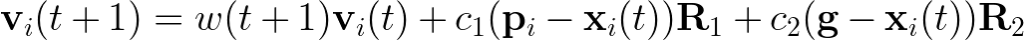

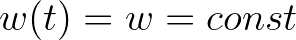

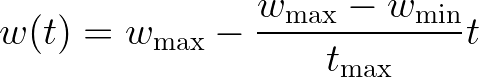

這個就是目前PSO相關文獻最常看到的速率更新公式,透過w來縮小v(t),以防止速度爆炸

原文建議50個粒子,因為當粒子數大於50後對於求解幫助明顯下降

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(42)

class PSO():

def __init__(self, fit_func, num_dim, num_particle=20, max_iter=500,

x_max=1, x_min=-1, w_max=0.9, w_min=0.4, c1=2.0, c2=2.0, k=0.2):

self.fit_func = fit_func

self.num_dim = num_dim

self.num_particle = num_particle

self.max_iter = max_iter

self.x_max = x_max

self.x_min = x_min

self.w = w_max

self.w_max = w_max

self.w_min = w_min

self.c1 = c1

self.c2 = c2

self.k = k

self._iter = 1

self.gBest_curve = np.zeros(self.max_iter)

self.X = np.random.uniform(low=self.x_min, high=self.x_max,

size=[self.num_particle, self.num_dim])

self.V = np.zeros(shape=[self.num_particle, self.num_dim])

self.v_max = self.k*(self.x_max-self.x_min)

self.pBest_X = self.X.copy()

self.pBest_score = self.fit_func(self.X)

self.gBest_X = self.pBest_X[self.pBest_score.argmin()]

self.gBest_score = self.pBest_score.min()

self.gBest_curve[0] = self.gBest_score.copy()

def opt(self):

while(self._iter<self.max_iter):

R1 = np.random.uniform(size=(self.num_particle, self.num_dim))

R2 = np.random.uniform(size=(self.num_particle, self.num_dim))

w = self.w_max - self._iter*(self.w_max-self.w_min)/self.max_iter

for i in range(self.num_particle):

self.V[i, :] = w * self.V[i, :] \

+ self.c1 * (self.pBest_X[i, :] - self.X[i, :]) * R1[i, :] \

+ self.c2 * (self.gBest_X - self.X[i, :]) * R2[i, :]

self.V[i, self.v_max < self.V[i, :]] = self.v_max[self.v_max < self.V[i, :]]

self.V[i, -self.v_max > self.V[i, :]] = -self.v_max[-self.v_max > self.V[i, :]]

self.X[i, :] = self.X[i, :] + self.V[i, :]

self.X[i, self.x_max < self.X[i, :]] = self.x_max[self.x_max < self.X[i, :]]

self.X[i, self.x_min > self.X[i, :]] = self.x_min[self.x_min > self.X[i, :]]

score = self.fit_func(self.X[i, :])

if score<self.pBest_score[i]:

self.pBest_score[i] = score.copy()

self.pBest_X[i, :] = self.X[i, :].copy()

if score<self.gBest_score:

self.gBest_score = score.copy()

self.gBest_X = self.X[i, :].copy()

self.gBest_curve[i] = self.gBest_score.copy()

self._iter = self._iter + 1

def plot_curve(self):

plt.figure()

plt.title('loss curve ['+str(round(self.gBest_curve[-1], 3))+']')

plt.plot(self.gBest_curve, label='loss')

plt.grid()

plt.legend()

plt.show()

測試代碼

from PSO import PSO

import numpy as np

import time

import pandas as pd

np.random.seed(42)

def Sphere(x):

if x.ndim==1:

x = x.reshape(1, -1)

return np.sum(x**2, axis=1)

def Schwefel_P222(x):

if x.ndim==1:

x = x.reshape(1, -1)

return np.sum(np.abs(x), axis=1) + np.prod(np.abs(x), axis=1)

def Quadric(x):

if x.ndim==1:

x = x.reshape(1, -1)

outer = 0

for i in range(x.shape[1]):

inner = np.sum(x[:, :i+1], axis=1)**2

outer = outer + inner

return outer

def Rosenbrock(x):

if x.ndim==1:

x = x.reshape(1, -1)

left = x[:, :-1].copy()

right = x[:, 1:].copy()

return np.sum(100*(right - left**2)**2 + (left-1)**2, axis=1)

def Step(x):

if x.ndim==1:

x = x.reshape(1, -1)

return np.sum(np.round((x+0.5), 0)**2, axis=1)

def Quadric_Noise(x):

if x.ndim==1:

x = x.reshape(1, -1)

matrix = np.arange(x.shape[1])+1

return np.sum((x**4)*matrix, axis=1)+np.random.rand(x.shape[0])

def Schwefel(x):

if x.ndim==1:

x = x.reshape(1, -1)

return -1*np.sum(x*np.sin(np.abs(x)**.5), axis=1)

def Rastrigin(x):

if x.ndim==1:

x = x.reshape(1, -1)

return np.sum(x**2 - 10*np.cos(2*np.pi*x) + 10, axis=1)

def Noncontinuous_Rastrigin(x):

if x.ndim==1:

x = x.reshape(1, -1)

outlier = np.abs(x)>=0.5

x[outlier] = np.round(2*x[outlier])/2

return np.sum(x**2 - 10*np.cos(2*np.pi*x) + 10, axis=1)

def Ackley(x):

if x.ndim==1:

x = x.reshape(1, -1)

left = 20*np.exp(-0.2*(np.sum(x**2, axis=1)/x.shape[1])**.5)

right = np.exp(np.sum(np.cos(2*np.pi*x), axis=1)/x.shape[1])

return -left - right + 20 + np.e

def Griewank(x):

if x.ndim==1:

x = x.reshape(1, -1)

left = np.sum(x**2, axis=1)/4000

right = np.prod( np.cos(x/((np.arange(x.shape[1])+1)**.5)), axis=1)

return left - right + 1

d = 30

g = 500

p = 20

times = 1

table = np.zeros((2, 11))

for i in range(times):

x_max = 100*np.ones(d)

x_min = -100*np.ones(d)

optimizer = PSO(fit_func=Sphere, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 0] += optimizer.gBest_score

table[1, 0] += end - start

x_max = 10*np.ones(d)

x_min = -10*np.ones(d)

optimizer = PSO(fit_func=Schwefel_P222, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 1] += optimizer.gBest_score

table[1, 1] += end - start

x_max = 100*np.ones(d)

x_min = -100*np.ones(d)

optimizer = PSO(fit_func=Quadric, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 2] += optimizer.gBest_score

table[1, 2] += end - start

x_max = 10*np.ones(d)

x_min = -10*np.ones(d)

optimizer = PSO(fit_func=Rosenbrock, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 3] += optimizer.gBest_score

table[1, 3] += end - start

x_max = 100*np.ones(d)

x_min = -100*np.ones(d)

optimizer = PSO(fit_func=Step, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 4] += optimizer.gBest_score

table[1, 4] += end - start

x_max = 1.28*np.ones(d)

x_min = -1.28*np.ones(d)

optimizer = PSO(fit_func=Quadric_Noise, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 5] += optimizer.gBest_score

table[1, 5] += end - start

x_max = 500*np.ones(d)

x_min = -500*np.ones(d)

optimizer = PSO(fit_func=Schwefel, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 6] += optimizer.gBest_score

table[1, 6] += end - start

x_max = 5.12*np.ones(d)

x_min = -5.12*np.ones(d)

optimizer = PSO(fit_func=Rastrigin, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 7] += optimizer.gBest_score

table[1, 7] += end - start

x_max = 5.12*np.ones(d)

x_min = -5.12*np.ones(d)

optimizer = PSO(fit_func=Noncontinuous_Rastrigin, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 8] += optimizer.gBest_score

table[1, 8] += end - start

x_max = 32*np.ones(d)

x_min = -32*np.ones(d)

optimizer = PSO(fit_func=Ackley, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 9] += optimizer.gBest_score

table[1, 9] += end - start

x_max = 600*np.ones(d)

x_min = -600*np.ones(d)

optimizer = PSO(fit_func=Griewank, num_dim=d, num_particle=p, max_iter=g, x_max=x_max, x_min=x_min)

start = time.time()

optimizer.opt()

end = time.time()

table[0, 10] += optimizer.gBest_score

table[1, 10] += end - start

table = table / times

table = pd.DataFrame(table)

table.columns=['Sphere', 'Schwefel_P222', 'Quadric', 'Rosenbrock', 'Step', 'Quadric_Noise', 'Schwefel',

'Rastrigin', 'Noncontinuous_Rastrigin', 'Ackley', 'Griewank']

table.index = ['mean score', 'mean time']

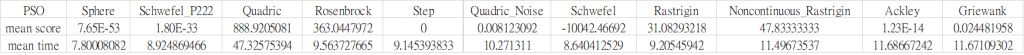

同APSO那篇作者的測試條件,設D=30;P=20;迭代10,000次;個別運作30次,PSO結果如下