今天我們要使用之前所學到的技巧做一個總結,主要有下面這幾樣。

from tensorflow.keras.datasets import mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.reshape(*x_train.shape, 1)

x_train = x_train / 255

x_test = x_test.reshape(*x_test.shape, 1)

x_test = x_test / 255

from tensorflow.keras.utils import to_categorical

y_train = to_categorical(y_train, 10)

y_test = to_categorical(y_test, 10)

from tensorflow.keras.models import Sequential

model = Sequential()

from tensorflow.keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPool2D

model.add(

Conv2D(

filters = 64,

input_shape = (28, 28, 1),

kernel_size = (3, 3),

strides = (1, 1),

activation = 'relu'

)

)

model.add(

MaxPool2D(

pool_size = (2, 2)

)

)

model.add(

Conv2D(

filters = 64,

kernel_size = (3, 3),

strides = (1, 1),

activation = 'relu'

)

)

model.add(

MaxPool2D(

pool_size = (2, 2)

)

)

model.add(

Flatten()

)

model.add(

Dropout(

rate = 0.2

)

)

model.add(

Dense(

units = 10,

activation = 'softmax'

)

)

model.compile(

optimizer = 'adam',

loss = 'categorical_crossentropy',

metrics = ['accuracy']

)

model.summary()

from tensorflow.keras.callbacks import ModelCheckpoint, CSVLogger, TerminateOnNaN, EarlyStopping

mcp = ModelCheckpoint(filepath='mnist-{epoch:02d}.h5', monitor='val_loss', verbose=0, save_best_only=True, save_weights_only=False, mode='auto', save_freq='epoch')

log = CSVLogger(filename='mnist.csv', separator=',', append=False)

ton = TerminateOnNaN()

esl = EarlyStopping(monitor='val_loss', patience=5, mode='auto', restore_best_weights=True)

esa = EarlyStopping(monitor='val_accuracy', patience=5, mode='auto', restore_best_weights=True)

from tensorflow.keras.preprocessing.image import ImageDataGenerator

datagen = ImageDataGenerator(

width_shift_range = 0.1,

height_shift_range = 0.1,

shear_range = 0.1,

rotation_range = 20,

fill_mode = 'constant',

cval = 0

)

from sklearn.model_selection import train_test_split

import time

x_train, x_valid, y_train, y_valid = train_test_split(x_train, y_train, test_size=0.1, random_state=int(time.time()))

batch_size = 32

hist = model.fit(

x = datagen.flow(x_train, y_train, batch_size=batch_size),

steps_per_epoch = x_train.shape[0] // batch_size,

epochs = 30,

validation_data = (x_valid, y_valid),

callbacks = [mcp, log, ton, esl, esa],

verbose = 2

)

score = model.evaluate(x_test, y_test, verbose=0)

print('Test loss:', score[0])

print('Test accuracy:', score[1])

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 26, 26, 64) 640

_________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 13, 13, 64) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 11, 11, 64) 36928

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 5, 5, 64) 0

_________________________________________________________________

flatten (Flatten) (None, 1600) 0

_________________________________________________________________

dropout (Dropout) (None, 1600) 0

_________________________________________________________________

dense (Dense) (None, 10) 16010

=================================================================

Total params: 53,578

Trainable params: 53,578

Non-trainable params: 0

_________________________________________________________________

Test loss: 0.023822504748083884

Test accuracy: 0.9931

由於val_loss沒有長進,訓練在第18個epoch被停下,並退回到第13個epoch時的權重。

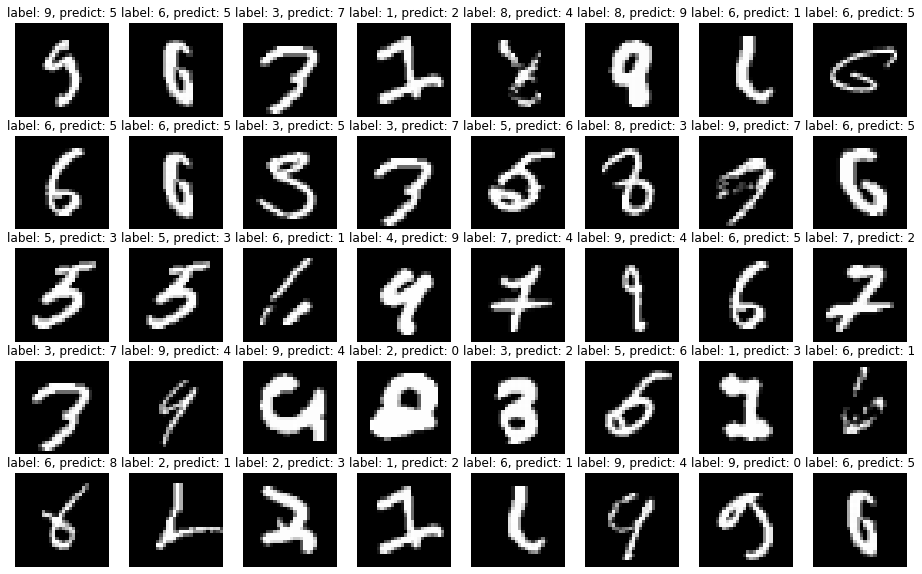

可以看到模型在測試集共10000張圖片有著99.31%的正確率的表現,正確率比第12天又前進了0.8~1%,僅有69張辨識錯誤的圖片,錯誤率0.69%,比起一開始沒有使用其他策略,單純的MLP模型大約2.6%的錯誤率有十足的長進,我們抽個幾張辨識錯誤的圖出來看看。

真的很難辨識的圖片也增加了很多,看的出來模型已經盡可能辨識出所有能辨識的圖片了,剩下的大多都是這樣的圖,由於模型在這個資料集已經得到了一個非常好的結果,已經沒有理由繼續使用這個資料集,明天我們會換一個資料集,然後再帶大家看看幾個小技巧,那我們明天見囉!