How to initialize the weights.

In deep networks with many convolutional layers and different paths through the network, a good initialization of the weights is extremely important.

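In other words, the initial weights matter a great deal: a poor initialization can leave training stuck in a poor local optimum or keep it from converging at all.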

Otherwise, parts of the network might give excessive activations, while other parts never contribute.

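With a poor initialization, some neurons (a filter is also a neuron in the broad sense) never activate, while others produce excessively large activations.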
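To make this concrete, here is a minimal sketch that pushes random inputs through a stack of linear + ReLU layers and tracks how large the activations are. It uses fully connected layers instead of convolutions to keep the code short, and the function name, layer width, depth, and the two "bad" standard deviations (0.01 and 0.2) are illustrative assumptions, not values from the paper. The third scale, sqrt(2/N), is the one the paper recommends later in this section.

```python
import numpy as np

def activation_rms_per_layer(weight_std, width=576, depth=10, batch=256, seed=0):
    """Push random inputs through `depth` linear + ReLU layers and
    return the root-mean-square activation after each layer."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(batch, width))           # unit-variance inputs
    rms = []
    for _ in range(depth):
        w = rng.normal(scale=weight_std, size=(width, width))
        x = np.maximum(x @ w, 0.0)                # linear layer followed by ReLU
        rms.append(float(np.sqrt(np.mean(x ** 2))))
    return rms

width = 576  # hypothetical fan-in, chosen to match the 3x3 x 64-channel example
scales = [
    ("too small", 0.01),
    ("too large", 0.2),
    ("sqrt(2/N)", np.sqrt(2.0 / width)),
]
for name, std in scales:
    rms = activation_rms_per_layer(std, width=width)
    print(f"{name:>10}: layer 1 RMS = {rms[0]:.3g}, layer 10 RMS = {rms[-1]:.3g}")
```

With the small scale the activations shrink toward zero layer after layer, with the large scale they blow up, and with sqrt(2/N) they stay at roughly the same magnitude through all ten layers.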

Ideally the initial weights should be adapted such that each feature map in the network has approximately unit variance.

Ideally, the weights are chosen so that every feature map has approximately unit variance.

For a network with our architecture (alternating convolution and ReLU layers) this can be achieved by drawing the initial weights from a Gaussian distribution with a standard deviation of $\sqrt{2/N}$, where N denotes the number of incoming nodes of one neuron [5]. E.g. for a 3x3 convolution and 64 feature channels in the previous layer N = 9 · 64 = 576.
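As a minimal sketch of the rule quoted above, the following NumPy code computes the standard deviation for the 3x3, 64-channel example and draws a weight tensor from the corresponding zero-mean Gaussian. The function names, the choice of 64 output channels, and the `(out_channels, in_channels, k, k)` weight layout are assumptions made for illustration; the paper does not prescribe an implementation.

```python
import numpy as np

def he_std(kernel_size: int, in_channels: int) -> float:
    """Standard deviation sqrt(2/N), with N = kernel_size^2 * in_channels."""
    fan_in = kernel_size * kernel_size * in_channels   # N: incoming nodes per neuron
    return float(np.sqrt(2.0 / fan_in))

def he_normal_conv_weights(out_channels, in_channels, kernel_size, rng):
    """Draw conv weights from a zero-mean Gaussian with std sqrt(2/N)."""
    std = he_std(kernel_size, in_channels)
    shape = (out_channels, in_channels, kernel_size, kernel_size)  # assumed layout
    return rng.normal(loc=0.0, scale=std, size=shape)

rng = np.random.default_rng(0)
weights = he_normal_conv_weights(out_channels=64, in_channels=64, kernel_size=3, rng=rng)

print(he_std(3, 64))    # sqrt(2/576), about 0.0589
print(weights.std())    # empirical std of the sampled weights, close to the value above
```

For N = 576 the standard deviation comes out to about 0.059, so the individual weights are small but not vanishingly so.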

The passage cites a paper that explains how to initialize the weights, so let's take a look at it.

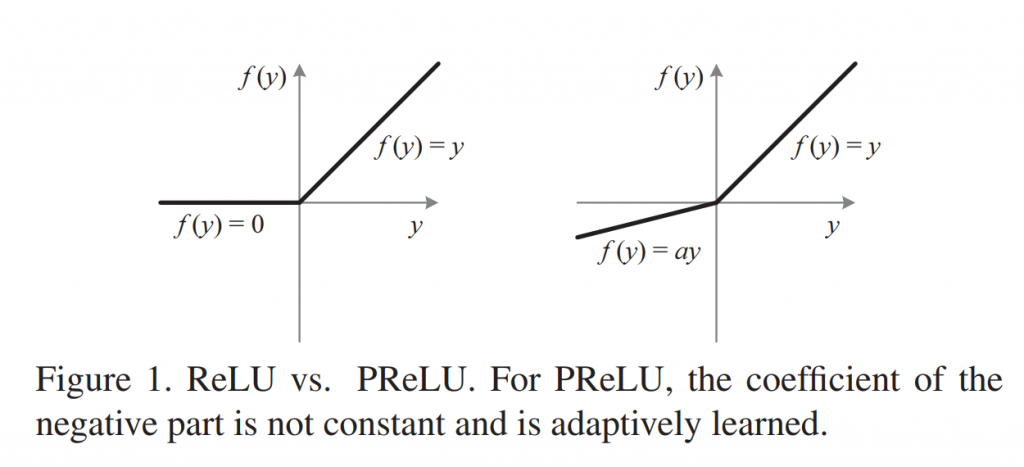

That paper, Delving deep into rectifiers: Surpassing human-level performance on imagenet classification, makes two main contributions. The first is a new rectified unit, the Parametric Rectified Linear Unit (PReLU).
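For reference, PReLU is defined as f(y) = max(0, y) + a · min(0, y), where the slope a for negative inputs is learned during training. The sketch below only evaluates the forward pass for a fixed a. The function name and the sample inputs are illustrative, and a = 0.25 is used because that is the initial value reported in the PReLU paper.

```python
import numpy as np

def prelu(y, a):
    """PReLU forward pass: identity for y > 0, slope `a` for y < 0."""
    return np.maximum(y, 0.0) + a * np.minimum(y, 0.0)

y = np.array([-2.0, -0.5, 0.0, 1.5])
print(prelu(y, a=0.0))    # a = 0 reduces PReLU to the ordinary ReLU
print(prelu(y, a=0.25))   # negative inputs are scaled by 0.25 instead of zeroed
```

In a real network a is a trainable parameter updated by backpropagation, which this sketch leaves out.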

The second is a weight initialization method. U-Net's initialization scheme is presumably taken from this paper.

That concludes the explanation of weight initialization.

[0] U-Net: Convolutional Networks for Biomedical Image Segmentation

[1] Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification