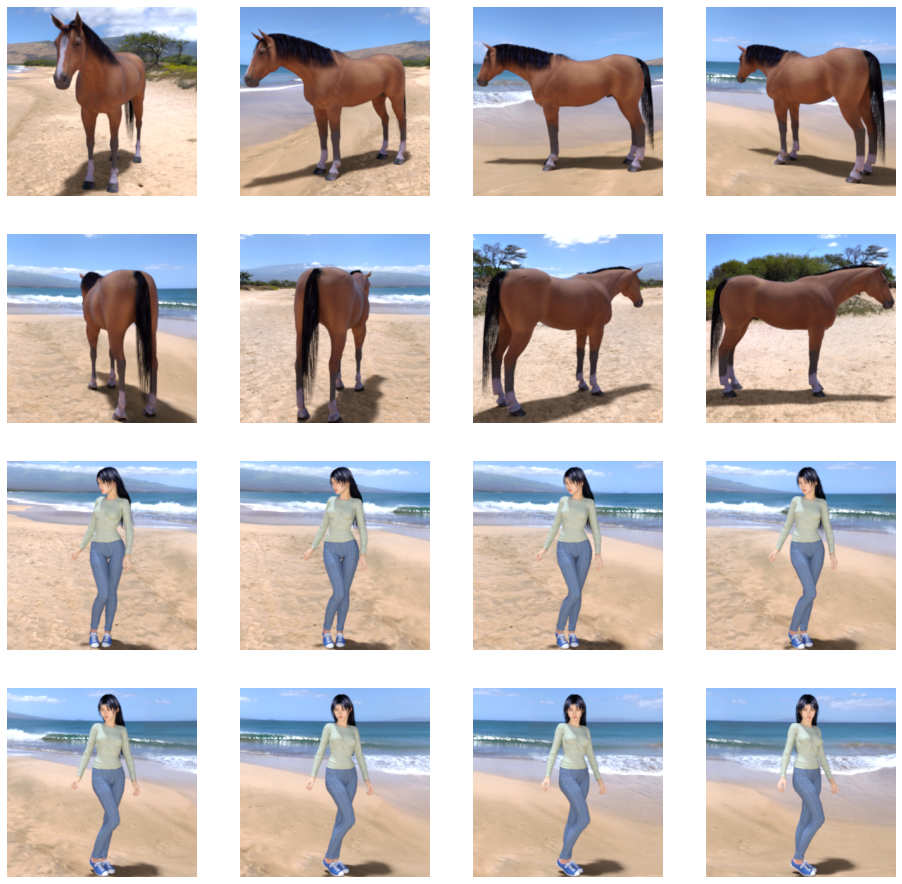

今天要舉一個有趣的範例,以電腦生成的圖像來做訓練,然後用現實的圖像來做辨識,如下圖為電腦生成的圖庫中馬和人的圖各4張,我們來練習辨識是人還是馬:

程式如下,由於是做二分法,所以在最後一層的activation改用'sigmoid',此外在compile的loss設定為'binary_crossentropy',而optimizer則是建議用RMSprop(learning_rate=0.001):

import tensorflow as tf

model = tf.keras.models.Sequential([

# Note the input shape is the desired size of the image 300x300 with 3 bytes color

# This is the first convolution

tf.keras.layers.Conv2D(16, (3,3), activation='relu', input_shape=(300, 300, 3)),

tf.keras.layers.MaxPooling2D(2, 2),

# The second convolution

tf.keras.layers.Conv2D(32, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

# The third convolution

tf.keras.layers.Conv2D(64, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

# The fourth convolution

tf.keras.layers.Conv2D(64, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

# The fifth convolution

tf.keras.layers.Conv2D(64, (3,3), activation='relu'),

tf.keras.layers.MaxPooling2D(2,2),

# Flatten the results to feed into a DNN

tf.keras.layers.Flatten(),

# 512 neuron hidden layer

tf.keras.layers.Dense(512, activation='relu'),

# Only 1 output neuron. It will contain a value from 0-1 where 0 for 1 class ('horses') and 1 for the other ('humans')

tf.keras.layers.Dense(1, activation='sigmoid')

])

#sigmoid makes the output value a single scalar between 0 and 1, encoding the probability that the current image is class 1 (as opposed to class 0).

from tensorflow.keras.optimizers import RMSprop

model.compile(loss='binary_crossentropy',

optimizer=RMSprop(learning_rate=0.001),

metrics=['accuracy'])

#Train the model with the binary_crossentropy loss because it's a binary classification problem, and the final activation is a sigmoid

#In this case, using the RMSprop optimization algorithm is preferable to stochastic gradient descent (SGD), because RMSprop automates learning-rate tuning for us.

#(Other optimizers, such as Adam and Adagrad, also automatically adapt the learning rate during training, and would work equally well here.)

我們利用tensorflow.keras.preprocessing.image中的ImageDataGenerator將圖庫資料夾中的圖匯入,並調整統一size和除以255做normalize,然後標上分類,轉成訓練資料的格式:

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1/255)

# Flow training images in batches of 128 using train_datagen generator

train_generator = train_datagen.flow_from_directory(

'./horse-or-human/', # This is the source directory for training images

target_size=(300, 300), # All images will be resized to 300x300

batch_size=128,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

#A DirectoryIterator yielding tuples of (x, y) where x is a numpy array containing a batch of images with shape (batch_size, *target_size, channels) and y is a numpy array of corresponding labels.

接下來就是training了,training完後我們可以去抓幾張圖現實的圖來試試,一樣要調整size和做normalize:

history = model.fit(

train_generator,

steps_per_epoch=8,

epochs=15,

verbose=1)

import numpy as np

from tensorflow.keras.preprocessing import image

# predicting images

test_image_file = "horse-gac58efbe9_640.jpg"

path = os.path.join('./test_image/', test_image_file)

img = image.load_img(path, target_size=(300, 300))

x = image.img_to_array(img)

x /= 255

x = np.expand_dims(x, axis=0)

images = np.vstack([x])

classes = model.predict(images, batch_size=10)

print(classes[0])

if classes[0]>0.5:

print("This is a human")

else:

print("This is a horse")

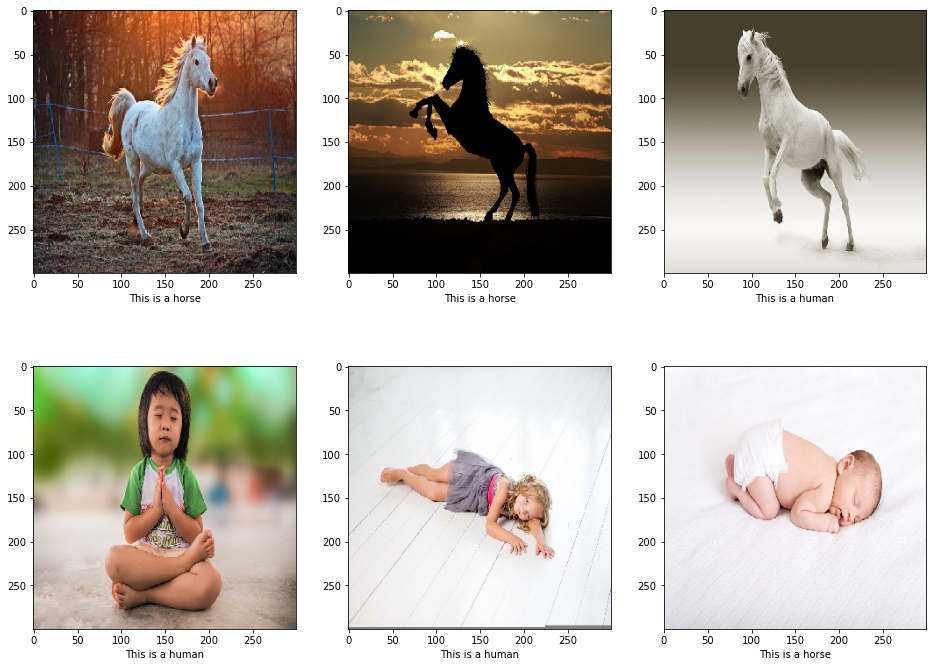

雖然training後的loss已經小於0.1,但我們來看以下幾個例子,這些圖是最後要餵進去已經調整過size和做normalize後的樣子,左邊兩欄是判斷正確的結果,最右邊一欄是判斷錯誤的結果:

但必須說我是有意圖的去挑選圖片,就是找那種我覺得幼稚園小班的時候我自己也認不出來的,雖然我找的都已經是單純乾淨的圖,就是只有單個完整的人或馬,沒有複數,沒有其他配件,更沒有同時有人有馬的。所以還欠缺一些成熟大人應有的判斷的要素。