These notes cover how to use the p5.sound.js library to read audio data from a music file and from the microphone at the same time.

The complete code is listed below.

HTML

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<!-- keep the line below for OpenProcessing compatibility -->

<script src="https://openprocessing.org/openprocessing_sketch.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/1.5.0/p5.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/1.5.0/addons/p5.sound.js"></script>

<script src="mySketch.js"></script>

<link rel="stylesheet" type="text/css" href="style.css">

</head>

<body></body>

</html>

CSS

html,

body {

margin: 0;

padding: 0;

}

JS

let can;

let sound;

let mic;

let amplitude;

let fft;

let fft_mic;

let spectrum;

let spectrum_mic;

function preload(){

sound = loadSound('assets/canon.mp3');

}

function setup() {

can = createCanvas(400, 400);

can.id("can1");

can.class("c1");

can.position(10, 10);

background(0);

fft = new p5.FFT();

fft_mic = new p5.FFT();

amplitude = new p5.Amplitude();

amplitude.setInput(sound);

fft.setInput(sound);

mic = new p5.AudioIn();

mic.getSources(gotSources);

fft_mic.setInput(mic);

}

function gotSources(devices) {

let device_name = "外接麥克風"; // part of the target device label ("external microphone"); change it to match your own device

devices.forEach( (dev, index) => {

console.log(dev.label);

if(dev.label.indexOf(device_name) != -1 && dev.kind == 'audioinput'){

if(mic_st==0){

mic.setSource(index);

fft_mic.setInput(mic);

console.log("mic.currentSource: "+mic.currentSource+", index:"+index);

let currentSource = devices[mic.currentSource];

console.log(currentSource.deviceId+","+currentSource.label);

mic.start();

mic_st = 1;

}

}

});

}

let size1 = 0;

let size1_mic = 0;

function draw() {

background(0, 20);

drawAmplitude();

drawFFT();

}

function drawAmplitude(){

let level = amplitude.getLevel();

let level_mic = mic.getLevel();

//---- sound amplitude---

let size = map(level, 0, 1, 0, 2000);

size1 = size1 + (size-size1)*0.5;

noFill();

stroke(255);

strokeWeight(3);

ellipse(width/2-100, height/2, size1, size1);

//---- mic amplitude-----

let size_mic = map(level_mic, 0, 1, 0, 800);

size1_mic = size1_mic + (size_mic-size1_mic)*0.5;

noFill();

stroke(255, 255, 0);

strokeWeight(3);

ellipse(width/2+100, height/2, size1_mic, size1_mic);

}

function drawFFT(){

spectrum = fft.analyze();

spectrum_mic = fft_mic.analyze();

//---- sound fft---

let sp_len = spectrum.length;

for(let i=0; i<sp_len; i++) {

let x = map(i, 0, sp_len, 0, 400);

stroke(255, 130);

line(x, 380, x, 380 - map(spectrum[i], 0, 255, 0, 300));

}

//---- mic fft---

let sp_len_mic = spectrum_mic.length;

for(let i=0; i<sp_len_mic; i++) {

let x = map(i, 0, sp_len_mic, 0, 400);

stroke(255, 255, 0, 50);

line(x, 380, x, 380 - map(spectrum_mic[i], 0, 255, 0, 300));

}

}

let mic_st = 0;

function keyPressed(e) {

console.log(e);

if(e.key == 'a'){

if(mic_st==0){

mic.start();

mic_st = 1;

} else {

mic.stop();

mic_st = 0;

}

} else

if(e.key == 'b'){

if(sound.isPlaying()){

sound.pause();

} else {

sound.play() ;

}

}

}

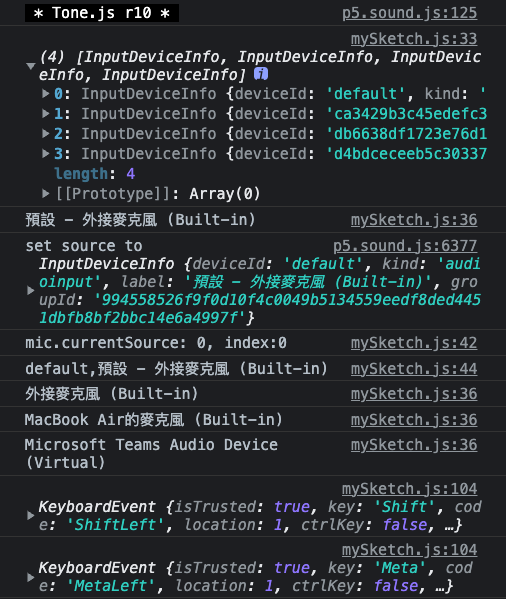

Output of console.log

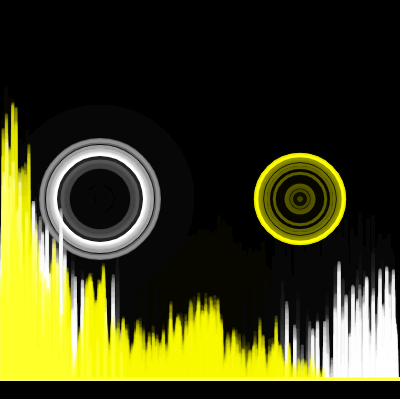

Result of running the sketch

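For the music file, the sketch uses:

sound = loadSound('assets/canon.mp3'); //-- load the audio file

amplitude = new p5.Amplitude(); //-- create the amplitude analysis object

amplitude.setInput(sound); //-- set the amplitude input source (sound)

fft.setInput(sound); //-- set the frequency analysis input source (sound)

level = amplitude.getLevel(); //--- read the amplitude value

spectrum = fft.analyze(); //--- read the frequency spectrum values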
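
The smoothing step in drawAmplitude() (`size1 = size1 + (size-size1)*0.5`) can be looked at on its own. The snippet below is only an illustrative sketch and not part of the original code: `mapRange` and `easeToward` are hypothetical names, and `mapRange` stands in for p5's `map()` so that the snippet runs outside a sketch. The level of 0.25 is a made-up value for what `amplitude.getLevel()` might return.

```javascript
// Hypothetical stand-in for p5's map(): linearly rescale `value`
// from the range [inMin, inMax] to the range [outMin, outMax].
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Move `current` a fraction (`factor`) of the way toward `target`.
// With factor 0.5, the gap to the target halves every frame.
function easeToward(current, target, factor) {
  return current + (target - current) * factor;
}

// Simulate three frames with a constant, made-up level of 0.25.
let diameter = 0;
for (let frame = 0; frame < 3; frame++) {
  const target = mapRange(0.25, 0, 1, 0, 2000); // 500
  diameter = easeToward(diameter, target, 0.5); // 250, 375, 437.5
}
console.log(diameter); // 437.5
```

A smaller factor gives a slower, smoother circle; a factor of 1 would follow the raw level with no smoothing.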

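For the microphone, the sketch uses:

mic = new p5.AudioIn(); //-- create the audio input object

mic.getSources(gotSources); //-- fetch the list of available input sources

mic.setSource(0); //-- select the input source by index (0 is the first device in the list)

fft_mic.setInput(mic); //-- set the frequency analysis input source (mic)

level_mic = mic.getLevel(); //--- read the microphone amplitude value

spectrum_mic = fft_mic.analyze(); //--- read the microphone frequency spectrum values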
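
In the full listing, gotSources() picks the device whose label contains a given string instead of hard-coding index 0. That lookup can be written as a small pure function. This is a minimal sketch under assumptions: `findInputIndex` is a hypothetical helper, and `sampleDevices` is made-up data that only mimics the `kind` / `label` / `deviceId` fields of the entries passed to the callback.

```javascript
// Hypothetical helper: return the index of the first audio input whose
// label contains `labelPart`, or -1 when no device matches.
function findInputIndex(devices, labelPart) {
  return devices.findIndex(
    (dev) => dev.kind === 'audioinput' && dev.label.includes(labelPart)
  );
}

// Made-up device list for illustration only.
const sampleDevices = [
  { kind: 'audioinput', label: 'Built-in Microphone', deviceId: 'a1' },
  { kind: 'audioinput', label: 'USB External Microphone', deviceId: 'b2' },
];

console.log(findInputIndex(sampleDevices, 'External')); // 1
console.log(findInputIndex(sampleDevices, 'Bluetooth')); // -1
```

Inside a sketch, one option would be to fall back to the first device when nothing matches, for example `mic.setSource(index >= 0 ? index : 0)`. Browsers may report empty labels until microphone permission has been granted, in which case the lookup returns -1.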

Press the a key to start/stop the microphone.

Press the b key to play/pause the music file.
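
Because keyPressed() compares `e.key` with lowercase letters, pressing the keys with Caps Lock or Shift active would not match. One possible way to make the mapping case-insensitive is sketched below; `keyToAction` and the action names are hypothetical and do not appear in the original code.

```javascript
// Hypothetical helper: translate a pressed key into an action name,
// ignoring letter case. Returns null for keys the sketch does not use.
function keyToAction(key) {
  switch (key.toLowerCase()) {
    case 'a':
      return 'toggleMic';   // start/stop the microphone
    case 'b':
      return 'toggleSound'; // play/pause the music file
    default:
      return null;
  }
}

console.log(keyToAction('A')); // toggleMic
console.log(keyToAction('b')); // toggleSound
console.log(keyToAction('x')); // null
```

In keyPressed(e), the result of `keyToAction(e.key)` could then decide which of the two existing branches runs.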

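The most commonly used objects are:

p5.SoundFile: loads and plays sound files

p5.Amplitude: reads the amplitude of an audio signal

p5.AudioIn: reads the microphone input source

p5.FFT: reads the frequency spectrum of an audio signal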
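
The array returned by `fft.analyze()` holds one value from 0 to 255 per frequency bin, and drawFFT() simply spreads those bins across the canvas width. To relate a bin to an approximate frequency, a sketch like the following may help. It is an illustration only: `binToFrequency` and `loudestBin` are hypothetical helpers, and the values 1024 bins and 44100 Hz are assumptions (the actual sample rate depends on the browser's audio context).

```javascript
// Hypothetical helper: approximate frequency in Hz at the start of a bin.
// The bins evenly divide the range from 0 Hz up to half the sample rate.
function binToFrequency(binIndex, binCount, sampleRate) {
  const nyquist = sampleRate / 2;
  return (binIndex * nyquist) / binCount;
}

// Hypothetical helper: index of the bin with the largest value
// (the first one wins when several bins are equally loud).
function loudestBin(spectrum) {
  let best = 0;
  for (let i = 1; i < spectrum.length; i++) {
    if (spectrum[i] > spectrum[best]) best = i;
  }
  return best;
}

// Made-up spectrum with a single peak at bin 512.
const fakeSpectrum = new Array(1024).fill(0);
fakeSpectrum[512] = 200;

const peak = loudestBin(fakeSpectrum);
console.log(peak);                              // 512
console.log(binToFrequency(peak, 1024, 44100)); // 11025
```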

Other features of p5.sound.js will be covered in later posts.

References

p5.sound library

https://p5js.org/reference/#/libraries/p5.sound