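Yesterday we finished deploying to every server and collecting data from them.

Today, let's install Promtail in the K8s environment too, so that both stdout and stderr are collected into Loki.

Container logs are not stored in Journald. They are kept as files on the node, like this.

PS: what you see here is soft-linked from /var/log/pods, so the directory we will use later is /var/log/pods.

Let's write the Promtail YAML first. At the end we will paste this config into a ConfigMap.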

server:
  http_listen_port: 9080
positions:
  filename: /tmp/positions.yaml
clients:
  - url: http://loki.ironman.test:3100/loki/api/v1/push
scrape_configs:
  - job_name: kubernetes-pods
    kubernetes_sd_configs:
      - role: pod
    relabel_configs:
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
          - __meta_kubernetes_pod_uid
          - __meta_kubernetes_pod_container_name
        target_label: __path__
      - source_labels: [__meta_kubernetes_pod_node_name]
        target_label: node_name
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod_name
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
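To make the first relabel rule easier to follow, here is a minimal shell sketch of the same steps: the source label values are joined with the separator, matched against the default regex `(.*)`, and the captured text replaces `$1` in the replacement string. The function name `promtail_path` and the sample UID are made up for illustration. Promtail does all of this internally.

```shell
# Hypothetical helper that mimics the first relabel rule.
# Promtail joins the source label values with the separator ("/"),
# matches the result against the default regex (.*), and substitutes
# the captured text for $1 in the replacement string.
promtail_path() {
  pod_uid="$1"
  container_name="$2"
  joined="${pod_uid}/${container_name}"         # separator: /
  printf '/var/log/pods/*%s/*.log\n' "$joined"  # replacement: /var/log/pods/*$1/*.log
}

# Sample values (made up for illustration)
promtail_path "0a1b2c3d" "nginx"
# prints: /var/log/pods/*0a1b2c3d/nginx/*.log
```

The leading `*` is there because directories under /var/log/pods are named `<namespace>_<pod name>_<pod uid>`, so the glob has to skip over the namespace and pod name before it reaches the UID.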

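Unlike the earlier kube-state-metrics deployment, here we use a DaemonSet so that one Pod runs on every Node.

Promtail can only read logs on its own machine and ship them to Loki, so every Node needs its own instance.

kube-state-metrics gets its information by calling the Kubernetes API and turns it into metrics for Prometheus, so a single instance is enough.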

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: promtail-daemonset
spec:
  selector:
    matchLabels:
      name: promtail
  template:
    metadata:
      labels:
        name: promtail
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      serviceAccount: promtail-serviceaccount
      containers:
      - name: promtail-container
        image: grafana/promtail:main
        args:
        - -config.file=/etc/promtail/promtail.yaml
        env:
        - name: 'HOSTNAME' # needed when using kubernetes_sd_configs
          valueFrom:
            fieldRef:
              fieldPath: 'spec.nodeName'
        volumeMounts:
        - name: logs
          mountPath: /var/log
        - name: promtail-config
          mountPath: /etc/promtail
        - name: varlogpods
          mountPath: /var/log/pods
          readOnly: true
      volumes:
      - name: logs
        hostPath:
          path: /var/log
      - name: varlogpods
        hostPath:
          path: /var/log/pods
      - name: promtail-config
        configMap:
          name: promtail-config

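My settings here are for the Containerd CRI, so they differ a little from the defaults.

Refer to the official documentation to write a config that fits your own environment.

Paste the YAML we just wrote into the ConfigMap below.

That way we can edit it directly with kubectl edit later.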

apiVersion: v1
kind: ConfigMap
metadata:
  name: promtail-config
data:
  promtail.yaml: |
    server:
      http_listen_port: 9080
    positions:
      filename: /tmp/positions.yaml
    clients:
      - url: http://loki.ironman.test:3100/loki/api/v1/push
    scrape_configs:
      - job_name: kubernetes-pods
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
          - source_labels: [__meta_kubernetes_pod_node_name]
            target_label: node_name
          - source_labels: [__meta_kubernetes_pod_name]
            target_label: pod_name
          - source_labels: [__meta_kubernetes_namespace]
            target_label: namespace

Next, set up a ClusterRole that grants Promtail the permissions it needs.

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: promtail-clusterrole
rules:
  - apiGroups: [""]
    resources:
    - nodes
    - services
    - pods
    verbs:
    - get
    - watch
    - list

Create a ServiceAccount dedicated to Promtail.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: promtail-serviceaccount

Use a ClusterRoleBinding to tie the ClusterRole to the ServiceAccount.

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: promtail-clusterrolebinding
subjects:
  - kind: ServiceAccount
    name: promtail-serviceaccount
    namespace: default
roleRef:
  kind: ClusterRole
  name: promtail-clusterrole
  apiGroup: rbac.authorization.k8s.io

Finally, deploy everything into the cluster.

kubectl apply -f ./

root@lke-main:~/promtail-k8s# kubectl apply -f ./
clusterrole.rbac.authorization.k8s.io/promtail-clusterrole created
configmap/promtail-config created
daemonset.apps/promtail-daemonset created
serviceaccount/promtail-serviceaccount created
clusterrolebinding.rbac.authorization.k8s.io/promtail-clusterrolebinding created
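If you want to confirm that the ClusterRoleBinding took effect, `kubectl auth can-i` can impersonate the ServiceAccount and ask the API server about each permission. The sketch below wraps that in a hypothetical helper, `check_promtail_rbac`, and assumes the ServiceAccount was created in the `default` namespace, which is what the ClusterRoleBinding expects.

```shell
# Sketch: ask the API server whether the ServiceAccount holds each permission
# granted by promtail-clusterrole. Assumes the ServiceAccount lives in the
# "default" namespace, matching the ClusterRoleBinding.
check_promtail_rbac() {
  sa="system:serviceaccount:default:promtail-serviceaccount"
  for resource in nodes services pods; do
    for verb in get watch list; do
      # kubectl prints "yes" or "no"; it exits non-zero on "no",
      # so "|| true" keeps the loop going either way.
      answer="$(kubectl auth can-i "$verb" "$resource" --as="$sa")" || true
      printf '%s %s: %s\n' "$verb" "$resource" "$answer"
    done
  done
}

# Usage, once the manifests are applied:
#   check_promtail_rbac
```

Every line should end in `yes`. A `no` usually means the namespace in the ClusterRoleBinding does not match the one the ServiceAccount was created in.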

Let's check on the Pods.

root@lke-main:~/promtail-k8s# kubectl get pod -A | grep "promtail"
default   promtail-daemonset-5qhqp   1/1   Running   0   57s
default   promtail-daemonset-bm4ms   1/1   Running   0   66s
default   promtail-daemonset-vjz25   1/1   Running   0   58s

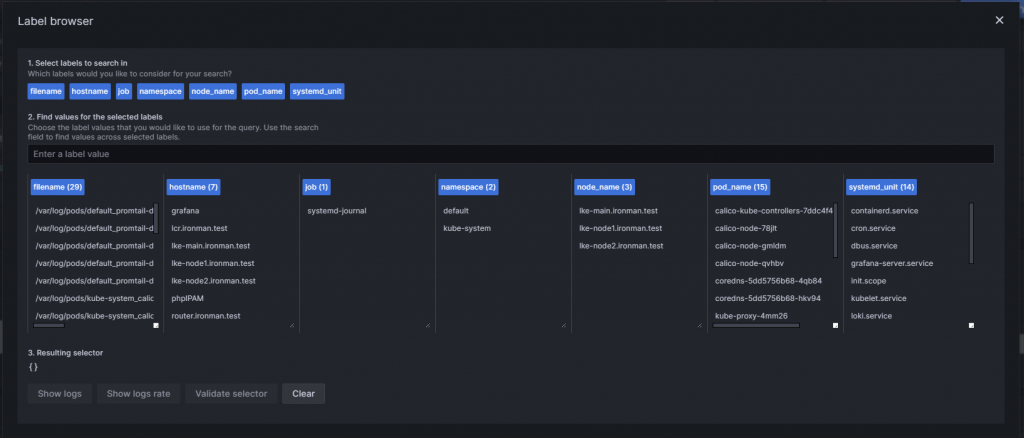

They all look healthy. Next, let's see whether Loki has received any logs.
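One way to check from the command line is Loki's HTTP API. This is a rough sketch: it reuses the Loki address from the Promtail config and filters on the `namespace` label set in relabel_configs. The helper names `logql_selector` and `loki_recent_logs` are made up for illustration.

```shell
# Build a LogQL stream selector from the namespace label
# that relabel_configs attaches to every log stream.
logql_selector() {
  printf '{namespace="%s"}\n' "$1"
}

# Ask Loki for a few recent log lines from one namespace.
# query_range defaults to the last hour when start/end are omitted.
loki_recent_logs() {
  curl -G -s "http://loki.ironman.test:3100/loki/api/v1/query_range" \
    --data-urlencode "query=$(logql_selector "$1")" \
    --data-urlencode "limit=5"
}

# Usage:
#   loki_recent_logs default
```

A JSON response with a non-empty `result` array means Promtail is shipping logs for that namespace. The same selector can be pasted into Grafana's Explore view.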

Great, time to call it a day :D