This article uses kind to walk you through Layered cgroups:

1. Create a kind cluster
2. Enter the worker node
3. Observe the worker node's root cgroup, systemreserved, and kubereserved
4. Observe the empty Layered cgroup
5. Apply BurstableQoS
6. Apply GuaranteedPod
7. Apply BesteffortQoS

The layers we will look at, and where each one lives on the worker node:

- root cgroup → `/sys/fs/cgroup/`
- systemreserved → `/sys/fs/cgroup/system.slice`
  - containerd → `/sys/fs/cgroup/system.slice/containerd.service`
- kubereserved → `/sys/fs/cgroup/kubelet.slice/kubelet.service`
- kubepods → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice`
  - GuaranteedPod → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/{pod_id}`
    - container → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/{pod_id}/{container_id}`
  - BurstableQoS → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-burstable.slice`
    - BurstablePod → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-burstable.slice/{pod_id}`
      - container → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-burstable.slice/{pod_id}/{container_id}`
  - BesteffortQoS → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-besteffort.slice`
    - BesteffortPod → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-besteffort.slice/{pod_id}`
      - container → `/sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-besteffort.slice/{pod_id}/{container_id}`
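To look at the layers above side by side, here is a minimal bash sketch meant to be run inside `cgroup-test-worker`, assuming the node uses cgroup v2 (the `cpu.weight` and `cpu.max` files used in this article are cgroup v2 files). It prints `cpu.weight`, `cpu.max`, and `memory.max` for each layer. `show_layer`, `LAYERS`, and the `CGROUP_ROOT` override are hypothetical names introduced here, not part of kind or kubelet.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a few cgroup v2 files for each layer in the mapping.
# CGROUP_ROOT defaults to /sys/fs/cgroup; it can be pointed at a copy of the tree.
CGROUP_ROOT="${CGROUP_ROOT:-/sys/fs/cgroup}"

# Layer paths relative to the root cgroup, taken from the mapping.
LAYERS=(
  "system.slice"
  "kubelet.slice/kubelet.service"
  "kubelet.slice/kubelet-kubepods.slice"
  "kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-burstable.slice"
  "kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-besteffort.slice"
)

# show_layer REL_PATH: one line with cpu.weight, cpu.max and memory.max (if present).
show_layer() {
  local dir="$CGROUP_ROOT/$1" out="$1:" f
  if [ ! -d "$dir" ]; then
    echo "$1: (missing)"
    return
  fi
  for f in cpu.weight cpu.max memory.max; do
    # Not every cgroup exposes every file; it depends on the enabled controllers.
    if [ -r "$dir/$f" ]; then
      out+=" $f=$(cat "$dir/$f")"
    fi
  done
  echo "$out"
}

# Walk the real tree only when executed directly, not when sourced.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  for layer in "${LAYERS[@]}"; do
    show_layer "$layer"
  done
fi
```

Running it before and after each `kubectl apply` in this article is one way to spot which layer changed.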

To keep control plane components from interfering with our tests, we create a dedicated worker node and deploy all the Pods onto it.

Create a config file, `cluster.yaml`:

```yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
# One control plane node and one "worker".
#
# While these will not add more real compute capacity and
# have limited isolation, this can be useful for testing
# rolling updates etc.
#
# The API-server and other control plane components will be
# on the control-plane node.
#
# You probably don't need this unless you are testing Kubernetes itself.
nodes:
- role: control-plane
- role: worker
```

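Because the Pod manifests below pin the node by hostname (`kubernetes.io/hostname: cgroup-test-worker`), the cluster must be named `cgroup-test` so that kind names the worker node `cgroup-test-worker`.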

```bash
kind create cluster --config ./cluster.yaml --name cgroup-test
docker ps | grep cgroup-test-worker
```

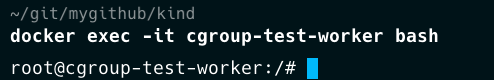

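Although the previous step looks like it is hunting for an ID, here we use the container name instead of the container ID, mainly so you get familiar with how kind is laid out: each node is a Docker container named after the cluster.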

```bash
docker exec -it cgroup-test-worker bash
```

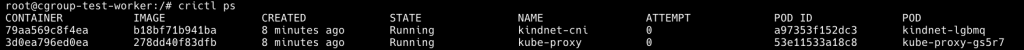

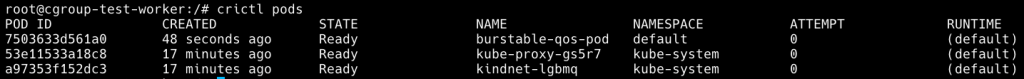

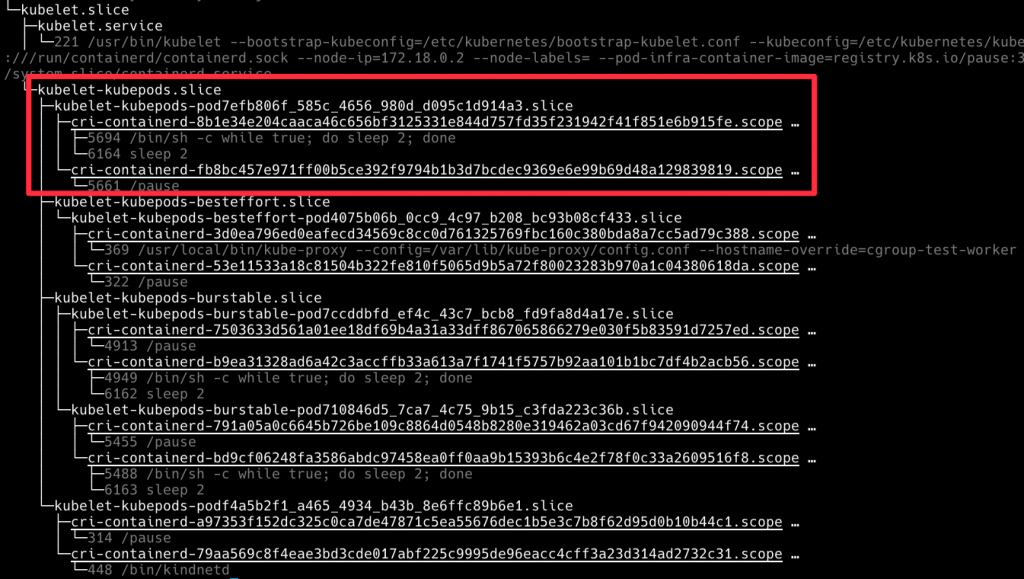

First, use `crictl ps` to see which containers are currently running on this machine:

```bash
crictl ps
```

This worker node runs only the essential containers.

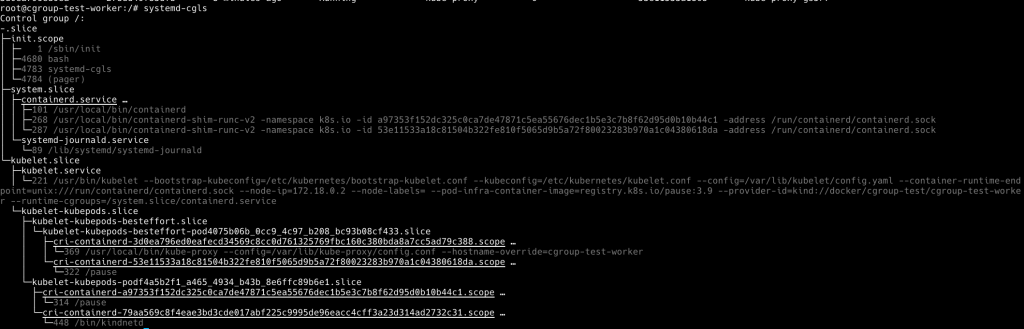

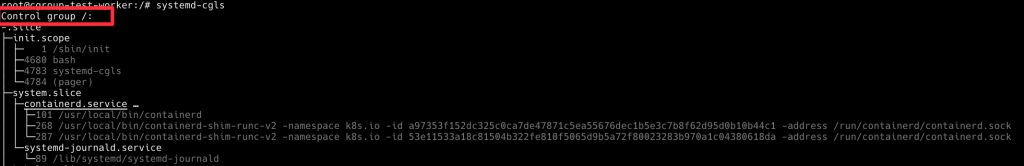

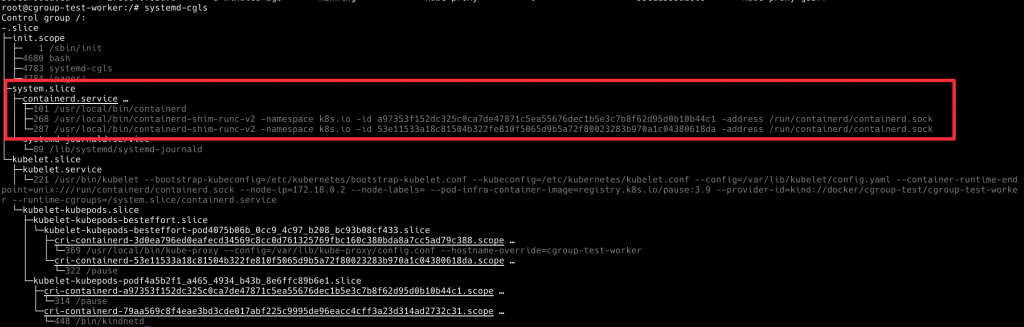

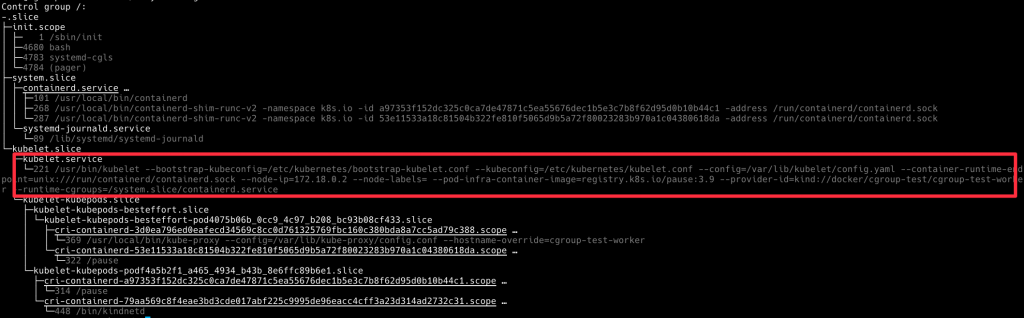

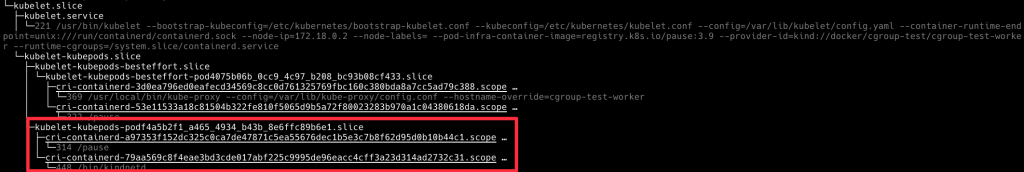

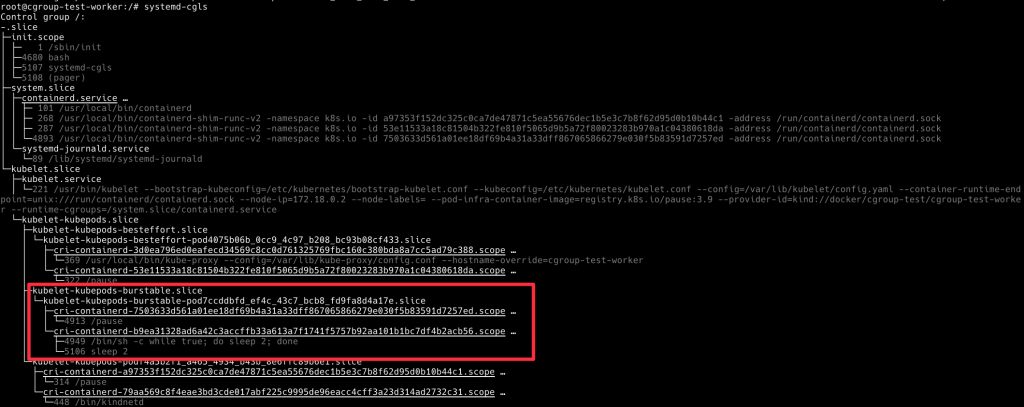

Next, use `systemd-cgls` to inspect the cgroup tree:

```bash
systemd-cgls
```

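containerd is placed under `system.slice` (`system.slice/containerd.service`), and the kubelet's own cgroup is placed under `kubelet.slice` (`kubelet.slice/kubelet.service`).

So far we have seen five layers of cgroups. Next, let's deploy some Pods and see how these layers change.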
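The Pods deployed next differ only in their `resources` section, and that section alone decides each Pod's QoS class and therefore which layer its cgroup lands in. Below is a simplified sketch for a single-container Pod. The hypothetical `qos_class` helper compares quantities as literal strings, so `1` and `1000m` would not count as equal, and a real multi-container Pod needs every container to qualify before it is Guaranteed.

```bash
#!/usr/bin/env bash
# qos_class CPU_REQ CPU_LIM MEM_REQ MEM_LIM   (empty string = not set)
# Simplified single-container version of the Kubernetes QoS rules.
qos_class() {
  local cpu_req="$1" cpu_lim="$2" mem_req="$3" mem_lim="$4"
  # BestEffort: no requests and no limits at all.
  if [ -z "$cpu_req$cpu_lim$mem_req$mem_lim" ]; then
    echo BestEffort
    return
  fi
  # An unset request defaults to the limit.
  [ -z "$cpu_req" ] && cpu_req="$cpu_lim"
  [ -z "$mem_req" ] && mem_req="$mem_lim"
  # Guaranteed: CPU and memory limits set, and requests equal to limits.
  if [ -n "$cpu_lim" ] && [ -n "$mem_lim" ] \
     && [ "$cpu_req" = "$cpu_lim" ] && [ "$mem_req" = "$mem_lim" ]; then
    echo Guaranteed
  else
    echo Burstable
  fi
}
```

For the manifests in this article, `qos_class 0.1 "" 100Mi ""` gives Burstable, `qos_class 1 1 512Mi 512Mi` gives Guaranteed, and `qos_class "" "" "" ""` gives BestEffort.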

```bash
kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: burstable-qos-pod
spec:
  nodeSelector:
    kubernetes.io/hostname: cgroup-test-worker
  containers:
  - name: burstable-qos-container
    image: busybox
    command: ["/bin/sh", "-c", "while true; do sleep 2; done"]
    resources:
      requests:
        cpu: 0.1
        memory: "100Mi"
EOF
```

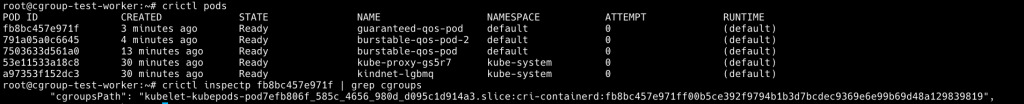

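Use `crictl pods` to look up the pod ID, then `crictl inspectp` to find its cgroup path:

```bash
crictl pods
# 7503633d561a0 is the pod ID from the author's run; use your own from `crictl pods`
crictl inspectp 7503633d561a0
```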

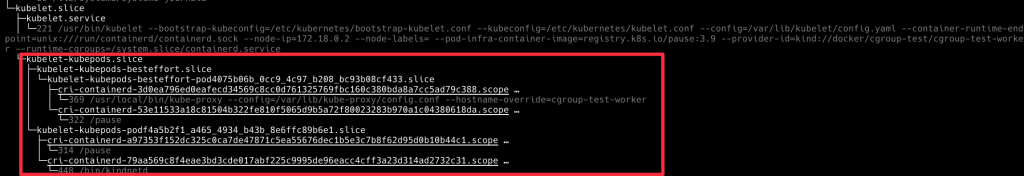

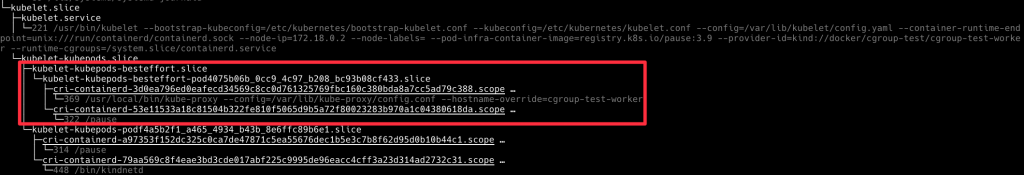

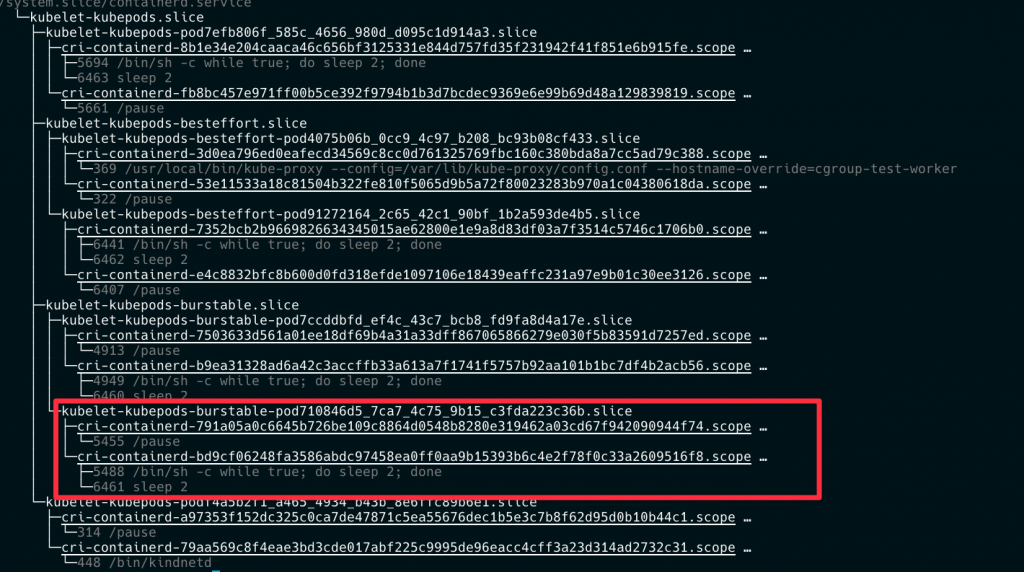
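The cgroup path reported by `crictl inspectp` is a systemd slice name rather than a directory. Assuming it is in the systemd driver's `slice:prefix:name` form (something like `kubelet-kubepods-burstable-pod<uid>.slice:cri-containerd:<id>`, with the dashes in the pod UID replaced by underscores; check your own output), this hypothetical `cgroups_path_to_dir` helper expands it into a directory under `/sys/fs/cgroup`, following systemd's rule that each dash in a slice name adds one level of nesting.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a systemd-style cgroupsPath ("SLICE:PREFIX:NAME")
# into the directory under the cgroup v2 mount.
cgroups_path_to_dir() {
  local root="${CGROUP_ROOT:-/sys/fs/cgroup}"
  local slice prefix name acc="" path="" part
  local -a parts
  IFS=: read -r slice prefix name <<< "$1"

  # systemd nests slices by their dash-separated name:
  # a-b-c.slice lives at a.slice/a-b.slice/a-b-c.slice
  IFS=- read -ra parts <<< "${slice%.slice}"
  for part in "${parts[@]}"; do
    acc="${acc:+$acc-}$part"
    path+="/$acc.slice"
  done

  # A sandbox or container runs in a scope unit named PREFIX-NAME.scope.
  if [ -n "$name" ]; then
    path+="/$prefix-$name.scope"
  fi
  echo "$root$path"
}
```

This nesting rule is why `kubelet-kubepods-burstable.slice` shows up under `kubelet.slice/kubelet-kubepods.slice` in the layer mapping at the top of the article.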

Run `systemd-cgls` again: the cgroup of the newly deployed burstable-qos-pod sits under `kubelet-kubepods-burstable.slice`.

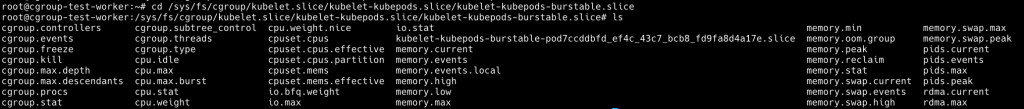

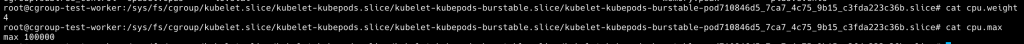

Now `cd` into this path to look at the cgroup parameters:

```bash
cd /sys/fs/cgroup/kubelet.slice/kubelet-kubepods.slice/kubelet-kubepods-burstable.slice
cat cpu.weight cpu.max
```

Here `cpu.weight` and `cpu.max` both have values.
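What value should `cpu.weight` on the burstable slice have? Kubelet sets the burstable QoS slice's CPU shares from the sum of the CPU requests of all burstable Pods and, on cgroup v2, maps shares onto `cpu.weight` with the linear formula `1 + ((shares - 2) * 9999) / 262142`. A minimal sketch of that arithmetic, assuming that linear mapping; some newer container runtimes use a different shares-to-weight conversion, so compare against `cat cpu.weight` on your own node. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch of the CPU request -> cgroup v2 cpu.weight arithmetic.

# millicpu_to_shares MILLICPU: cgroup v1-style shares (1 CPU = 1024), floor of 2.
millicpu_to_shares() {
  local shares=$(( $1 * 1024 / 1000 ))
  if (( shares < 2 )); then
    shares=2
  fi
  echo "$shares"
}

# shares_to_weight SHARES: linear map from [2, 262144] shares onto [1, 10000].
shares_to_weight() {
  echo $(( 1 + (($1 - 2) * 9999) / 262142 ))
}

# burstable_qos_weight MILLICPU...: sum the burstable Pods' CPU requests,
# then convert the total as above.
burstable_qos_weight() {
  local total=0 m
  for m in "$@"; do
    total=$(( total + m ))
  done
  shares_to_weight "$(millicpu_to_shares "$total")"
}

burstable_qos_weight 100       # one 100m burstable Pod: prints 4
burstable_qos_weight 100 100   # two of them:            prints 8
```

With only burstable-qos-pod (`cpu: 0.1`, i.e. 100m) this predicts 4; once burstable-qos-pod-2 is added the total is 200m and the prediction becomes 8. `shares_to_weight 2` gives 1, the weight expected on the BestEffort slice, which kubelet keeps at the 2-share minimum.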

Next, deploy a second burstable Pod:

```bash
kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: burstable-qos-pod-2
spec:
  nodeSelector:
    kubernetes.io/hostname: cgroup-test-worker
  containers:
  - name: burstable-qos-container
    image: busybox
    command: ["/bin/sh", "-c", "while true; do sleep 2; done"]
    resources:
      requests:
        cpu: 0.1
        memory: "100Mi"
EOF
```

Checking the same way, `cpu.weight` and `cpu.max` both have values again.

Next, deploy a Guaranteed Pod, whose requests equal its limits:

```bash
kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: guaranteed-qos-pod
spec:
  nodeSelector:
    kubernetes.io/hostname: cgroup-test-worker
  containers:
  - name: guaranteed-qos-pod
    image: busybox
    command: ["/bin/sh", "-c", "while true; do sleep 2; done"]
    resources:
      limits:
        memory: "512Mi"
        cpu: "1"
      requests:
        memory: "512Mi"
        cpu: "1"
EOF
```

As before, find the pod ID with `crictl pods`, then get its cgroup path with `crictl inspectp`.

The Guaranteed Pod's cgroup sits directly under kubepods (`kubelet-kubepods.slice`), with no QoS-level cgroup in between.
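Unlike the burstable Pods, which only set requests, the Guaranteed Pod sets limits (`cpu: "1"`, `memory: "512Mi"`), so the values expected in its pod cgroup's `cpu.max` and `memory.max` follow directly from the manifest. A minimal sketch, assuming the default 100000 µs CFS period and kubelet's usual quota rule (quota = millicores × period / 1000, with a 1000 µs floor); `cpu_max_for_limit` and `mib_to_bytes` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# cpu_max_for_limit MILLICPU [PERIOD_US]: expected cpu.max line for a CPU limit.
cpu_max_for_limit() {
  local milli="$1" period="${2:-100000}" quota
  if [ -z "$milli" ]; then
    echo "max $period"   # no CPU limit: quota stays "max"
    return
  fi
  quota=$(( milli * period / 1000 ))
  if (( quota < 1000 )); then
    quota=1000           # minimum quota of 1000 µs
  fi
  echo "$quota $period"
}

# mib_to_bytes N: memory.max value (bytes) for a limit of N Mi.
mib_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

cpu_max_for_limit 1000   # cpu: "1"        -> 100000 100000
mib_to_bytes 512         # memory: "512Mi" -> 536870912
```

On the node, the matching check is `cat cpu.max memory.max` inside the Guaranteed Pod's directory located via `crictl inspectp`.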

Finally, deploy a BestEffort Pod that sets no requests or limits:

```bash
kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: besteffort-qos-pod
spec:
  nodeSelector:
    kubernetes.io/hostname: cgroup-test-worker
  containers:
  - name: besteffort-qos-container
    image: busybox
    command: ["/bin/sh", "-c", "while true; do sleep 2; done"]
EOF
```

The BestEffort Pod we deployed is placed under the BestEffort QoS cgroup (`kubelet-kubepods-besteffort.slice`).
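Putting the three experiments together, a pod cgroup's QoS class can be read off the layer it sits in. A small sketch, assuming kind's `kubelet.slice/kubelet-kubepods.slice` naming; `qos_from_cgroup_path` is a hypothetical helper that only inspects names, so pass it a pod-level path (the kubepods slice itself would also match the Guaranteed branch).

```bash
#!/usr/bin/env bash
# qos_from_cgroup_path PATH: QoS class implied by where a pod cgroup sits.
qos_from_cgroup_path() {
  case "$1" in
    *kubepods-besteffort*) echo BestEffort ;;
    *kubepods-burstable*)  echo Burstable ;;
    *kubepods*)            echo Guaranteed ;;  # directly under kubepods
    *)                     echo unknown ;;     # not under kubepods at all
  esac
}
```

Feeding it the directories found with `crictl inspectp` for the four Pods should reproduce the placement seen in `systemd-cgls`.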

That's it for Layered cgroups. Starting tomorrow, we'll discuss the CPU Manager.