minikube start

terraform {

required_providers {

helm = {

source = "hashicorp/helm"

version = "2.12.1"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "2.29.0"

}

}

}

provider "kubernetes" {

config_path = "~/.kube/config"

config_context = "minikube"

}

provider "helm" {

kubernetes {

config_path = "~/.kube/config"

config_context = "minikube"

}

}

resource "helm_release" "nginx_demo" {

name = "nginx-demo"

namespace = "default"

# Recommended: start with a local chart to work around the provider's chart-resolution bug

chart = "./charts/nginx"

wait = false

atomic = false

set {

name = "service.type"

value = "ClusterIP"

}

set {

name = "resources.requests.cpu"

value = "50m"

}

set {

name = "resources.requests.memory"

value = "64Mi"

}

# Enable the metrics exporter

set {

name = "metrics.enabled"

value = "true"

}

# Let the Prometheus Operator (kube-prometheus-stack) scrape it automatically

set {

name = "metrics.serviceMonitor.enabled"

value = "true"

}

set {

name = "metrics.serviceMonitor.namespace"

value = "monitoring"

}

# This label must match the release name of your kube-prometheus-stack ("kps" here)

set {

name = "metrics.serviceMonitor.labels.release"

value = "kps"

}

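# The ServiceMonitor targets the monitoring namespace, which the kps release creates

depends_on = [helm_release.kps]

}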

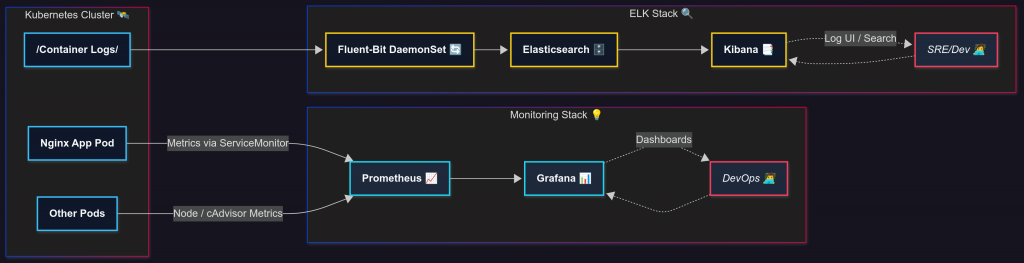

# === Monitoring: kube-prometheus-stack (includes Grafana) ===

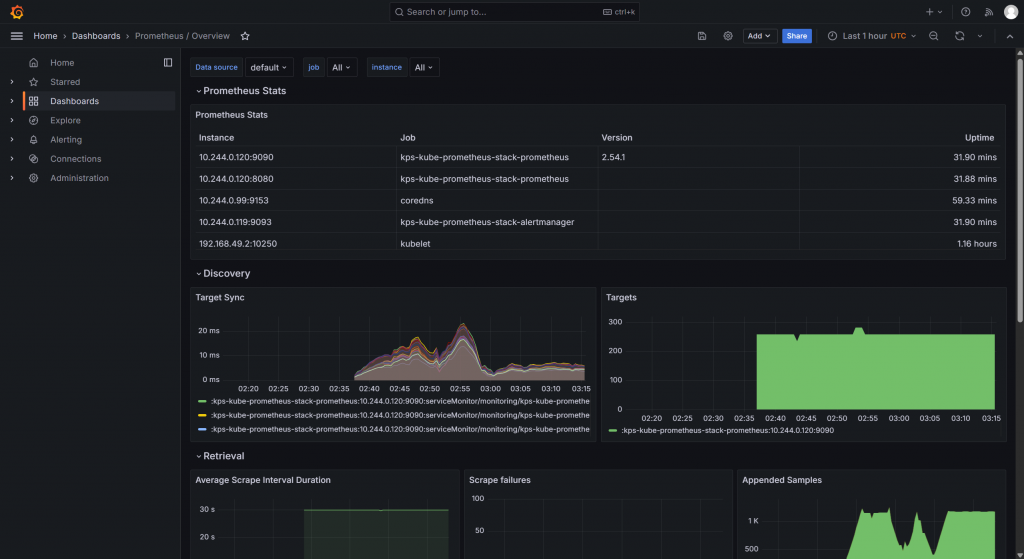

resource "helm_release" "kps" {

name = "kps"

repository = "https://prometheus-community.github.io/helm-charts"

chart = "kube-prometheus-stack"

version = "62.7.0"

namespace = "monitoring"

create_namespace = true

timeout = 600

# Default admin credentials

set {
  name  = "grafana.adminUser"
  value = "admin"
}

set {
  name  = "grafana.adminPassword"
  value = "your-strong-password"
}

set {
  name  = "grafana.service.type"
  value = "ClusterIP"
}

set {
  name  = "prometheus.service.type"
  value = "ClusterIP"
}

}
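Hard-coding the Grafana admin password puts it in version control and in plan output. One option, sketched below, is to pass it through a sensitive variable and the Helm provider's `set_sensitive` block. The variable name `grafana_admin_password` is hypothetical, and this trimmed variant is meant to be used in place of the `kps` resource above, not alongside it.

```hcl
# Hypothetical variable: supply it via TF_VAR_grafana_admin_password
# or a *.tfvars file that is kept out of version control.
variable "grafana_admin_password" {
  type      = string
  sensitive = true
}

# Trimmed variant of helm_release.kps (same chart, version, and namespace).
resource "helm_release" "kps" {
  name             = "kps"
  repository       = "https://prometheus-community.github.io/helm-charts"
  chart            = "kube-prometheus-stack"
  version          = "62.7.0"
  namespace        = "monitoring"
  create_namespace = true
  timeout          = 600

  set {
    name  = "grafana.adminUser"
    value = "admin"
  }

  # set_sensitive hides the value in the plan diff.
  # The value is still written to the Terraform state.
  set_sensitive {
    name  = "grafana.adminPassword"
    value = var.grafana_admin_password
  }
}
```

With this variant, `export TF_VAR_grafana_admin_password=...` before `terraform apply` would provide the value without it appearing in the `.tf` files.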

# === ELK: Elasticsearch (single node, demo scale) ===

resource "helm_release" "elasticsearch" {

name = "elasticsearch"

repository = "https://helm.elastic.co"

chart = "elasticsearch"

version = "8.5.1"

namespace = "elk"

create_namespace = true

timeout = 600

# Minimal resource allocation

set {

name = "replicas"

value = "1"

}

set {

name = "resources.requests.cpu"

value = "200m"

}

set {

name = "resources.requests.memory"

value = "1Gi"

}

set {

name = "resources.limits.cpu"

value = "1"

}

set {

name = "resources.limits.memory"

value = "2Gi"

}

set {

name = "esConfig.elasticsearch\\.yml"

value = "xpack.security.enabled: false"

}

# 10Gi PVC

set {

name = "volumeClaimTemplate.resources.requests.storage"

value = "10Gi"

}

}

# === ELK: Kibana ===

resource "helm_release" "kibana" {

name = "kibana"

repository = "https://helm.elastic.co"

chart = "kibana"

version = "8.5.1"

namespace = "elk"

# Point Kibana at the Elasticsearch service defined above

set {

name = "elasticsearchHosts"

value = "http://elasticsearch-master.elk.svc:9200"

}

set {

name = "service.type"

value = "ClusterIP"

}

depends_on = [helm_release.elasticsearch]

}

# === Log collector: Fluent Bit (DaemonSet; ships Kubernetes container logs to Elasticsearch) ===

resource "helm_release" "fluent_bit" {

name = "fluent-bit"

repository = "https://fluent.github.io/helm-charts"

chart = "fluent-bit"

version = "0.46.7"

namespace = "elk"

# Set the backend to Elasticsearch

set {

name = "backend.type"

value = "es"

}

set {

name = "backend.es.host"

value = "elasticsearch-master.elk.svc"

}

set {

name = "backend.es.port"

value = "9200"

}

set {

name = "backend.es.index"

value = "kubernetes_logs"

}

set {

name = "backend.es.logstash_prefix"

value = "k8s"

}

set {

name = "backend.es.replace_dots"

value = "true"

}

# Read /var/log/containers/*.log

set {

name = "inputs.tail.enabled"

value = "true"

}

set {

name = "inputs.tail.path"

value = "/var/log/containers/*.log"

}

set {

name = "inputs.tail.parser"

value = "docker"

}

set {

name = "inputs.tail.tag"

value = "kube.*"

}

depends_on = [helm_release.elasticsearch]

}
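As far as I can tell, the `backend.*` and `inputs.tail.*` keys come from the older `stable/fluent-bit` chart. The chart at `https://fluent.github.io/helm-charts` appears to take its pipeline from `config.inputs` and `config.outputs` as raw Fluent Bit config text, so the `set` blocks above may be silently ignored. The sketch below expresses the same intent through `values`. It assumes those `config.*` keys exist in chart 0.46.7, and it is meant as a replacement for the resource above, not an addition. `Suppress_Type_Name On` is added because Elasticsearch 8 rejects mapping types.

```hcl
# Candidate replacement for helm_release.fluent_bit (assumes the chart
# reads config.inputs / config.outputs; verify against its values.yaml).
resource "helm_release" "fluent_bit" {
  name       = "fluent-bit"
  repository = "https://fluent.github.io/helm-charts"
  chart      = "fluent-bit"
  version    = "0.46.7"
  namespace  = "elk"

  values = [yamlencode({
    config = {
      # Tail the container logs on each node.
      inputs = <<-EOT
        [INPUT]
            Name   tail
            Path   /var/log/containers/*.log
            Parser docker
            Tag    kube.*
      EOT

      # Ship everything tagged kube.* to the Elasticsearch service.
      outputs = <<-EOT
        [OUTPUT]
            Name               es
            Match              kube.*
            Host               elasticsearch-master.elk.svc
            Port               9200
            Logstash_Format    On
            Logstash_Prefix    k8s
            Replace_Dots       On
            Suppress_Type_Name On
      EOT
    }
  })]

  depends_on = [helm_release.elasticsearch]
}
```

With `Logstash_Format On`, Fluent Bit writes to daily indices named after `Logstash_Prefix`, so the fixed `kubernetes_logs` index name from the original settings is left out here.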

terraform init

terraform apply -auto-approve

kubectl get pods -A

Fluent Bit tails /var/log/containers/*.log and ships the logs to Elasticsearch.
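Grafana and Kibana are both exposed as `ClusterIP`, so they are only reachable from inside the cluster. A minimal sketch for getting at them from the host is to have Terraform print ready-made `kubectl port-forward` commands. The service names (`<release>-grafana`, `<release>-kibana`) and ports (80, 5601) are assumptions based on the charts' usual defaults. Check them with `kubectl get svc -A` before relying on them.

```hcl
# Assumed service name: "<release>-grafana", listening on port 80.
output "grafana_port_forward" {
  description = "Command to reach Grafana at http://localhost:3000"
  value       = "kubectl -n ${helm_release.kps.namespace} port-forward svc/${helm_release.kps.name}-grafana 3000:80"
}

# Assumed service name: "<release>-kibana", listening on port 5601.
output "kibana_port_forward" {
  description = "Command to reach Kibana at http://localhost:5601"
  value       = "kubectl -n ${helm_release.kibana.namespace} port-forward svc/${helm_release.kibana.name}-kibana 5601:5601"
}
```

After `terraform apply`, `terraform output -raw grafana_port_forward` prints the command to copy into a shell.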