到目前為止,我們已經建立了兩種 baseline 模型:

這些 baseline 雖然表現不佳,但能幫助我們建立一個參考基準。

👉 今天,我們要做的就是:

放在 notebooks/day8_compare_models.ipynb。

import os

import pandas as pd

import numpy as np

import mlflow

from sklearn.neighbors import NearestNeighbors

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.metrics.pairwise import linear_kernel

DATA_DIR = "/usr/mlflow/data"

anime = pd.read_csv(os.path.join(DATA_DIR, "anime_clean.csv"))

ratings_train = pd.read_csv(os.path.join(DATA_DIR, "ratings_train.csv"))

ratings_test = pd.read_csv(os.path.join(DATA_DIR, "ratings_test.csv"))

print("Anime:", anime.shape)

print("Train:", ratings_train.shape)

print("Test:", ratings_test.shape)

# 建立 user-item 矩陣

user_item_matrix = ratings_train.pivot_table(

index="user_id", columns="anime_id", values="rating"

).fillna(0)

print("User-Item shape:", user_item_matrix.shape)

# 建立 KNN 模型

knn = NearestNeighbors(metric="cosine", algorithm="brute", n_neighbors=6, n_jobs=-1)

knn.fit(user_item_matrix)

def recommend_user_based(user_id, top_n=10):

if user_id not in user_item_matrix.index:

return pd.DataFrame(columns=["anime_id", "name", "genre"])

# 找鄰居

user_vector = user_item_matrix.loc[[user_id]]

distances, indices = knn.kneighbors(user_vector, n_neighbors=6)

neighbor_ids = user_item_matrix.index[indices.flatten()[1:]] # 排除自己

neighbor_ratings = user_item_matrix.loc[neighbor_ids]

mean_scores = neighbor_ratings.mean().sort_values(ascending=False)

# 排除看過的動畫

seen = user_item_matrix.loc[user_id]

seen = seen[seen > 0].index

recommendations = mean_scores.drop(seen).head(top_n)

return anime[anime["anime_id"].isin(recommendations.index)][["anime_id","name","genre"]]

# 建立文字描述欄位

anime["text"] = anime["genre"].fillna("") + " " + anime["type"].fillna("")

tfidf = TfidfVectorizer(stop_words="english")

tfidf_matrix = tfidf.fit_transform(anime["text"])

cosine_sim = linear_kernel(tfidf_matrix, tfidf_matrix)

indices = pd.Series(anime.index, index=anime["anime_id"]).drop_duplicates()

def recommend_item_based(user_id, top_n=10):

user_ratings = ratings_train[ratings_train["user_id"] == user_id]

liked = user_ratings[user_ratings["rating"] > 7]["anime_id"].tolist()

if len(liked) == 0:

return pd.DataFrame(columns=["anime_id","name"])

sim_scores = np.zeros(cosine_sim.shape[0])

for anime_id in liked:

if anime_id in indices:

idx = indices[anime_id]

sim_scores += cosine_sim[idx]

sim_scores = sim_scores / len(liked)

sim_indices = sim_scores.argsort()[::-1]

seen = set(user_ratings["anime_id"])

rec_ids = [anime.loc[i, "anime_id"] for i in sim_indices if anime.loc[i, "anime_id"] not in seen][:top_n]

return anime[anime["anime_id"].isin(rec_ids)][["anime_id","name","genre"]]

def precision_recall_at_k(user_id, model_func, k=10):

recs = model_func(user_id, top_n=k)

if recs.empty:

return np.nan, np.nan

user_test = ratings_test[ratings_test["user_id"] == user_id]

liked = set(user_test[user_test["rating"] > 7]["anime_id"])

if len(liked) == 0:

return np.nan, np.nan

hit = len(set(recs["anime_id"]) & liked)

precision = hit / k

recall = hit / len(liked)

return precision, recall

sample_users = np.random.choice(ratings_train["user_id"].unique(), 100, replace=False)

def evaluate_model(model_func, name):

precisions, recalls = [], []

for u in sample_users:

p, r = precision_recall_at_k(u, model_func, 10)

if not np.isnan(p): precisions.append(p)

if not np.isnan(r): recalls.append(r)

return {

"model": name,

"precision@10": np.mean(precisions),

"recall@10": np.mean(recalls)

}

results = []

results.append(evaluate_model(recommend_user_based, "user_based_cf"))

results.append(evaluate_model(recommend_item_based, "item_based_tfidf"))

df_compare = pd.DataFrame(results)

print(df_compare)

report_users = ratings_test["user_id"].drop_duplicates().sample(3, random_state=42)

records = []

for u in report_users:

liked = ratings_test[(ratings_test["user_id"] == u) & (ratings_test["rating"] > 7)]

liked_names = anime[anime["anime_id"].isin(liked["anime_id"])]["name"].tolist()

recs_u = recommend_user_based(u, top_n=10)["name"].tolist()

recs_i = recommend_item_based(u, top_n=10)["name"].tolist()

records.append({

"user_id": u,

"liked_in_test": liked_names,

"user_based_recommend": recs_u,

"item_based_recommend": recs_i

})

df_examples = pd.DataFrame(records)

print(df_examples)

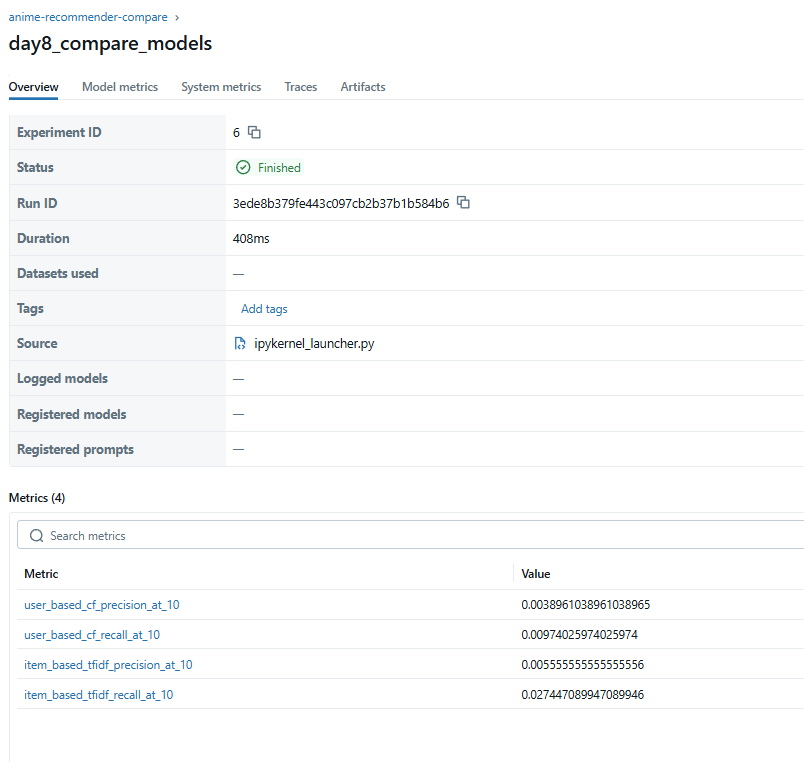

mlflow.set_tracking_uri(os.getenv("MLFLOW_TRACKING_URI", "http://mlflow:5000"))

mlflow.set_experiment("anime-recommender-compare")

# 存比較表 & 範例推薦清單

df_compare.to_csv("day8_compare_metrics.csv", index=False)

df_examples.to_csv("day8_recommend_examples.csv", index=False)

with mlflow.start_run(run_name="day8_compare_models"):

# log metrics

for row in results:

mlflow.log_metric(f"{row['model']}_precision@10", row["precision@10"])

mlflow.log_metric(f"{row['model']}_recall@10", row["recall@10"])

ratings_train.csv + ratings_test.csv

│

▼

User-based CF (KNN) → Precision/Recall

Item-based TF-IDF → Precision/Recall

│

▼

生成比較表 + 推薦範例

│

▼

MLflow log metrics + log_artifact

在 Day 9,我們會把這些比較再進一步強化,讓 MLflow 中的 artifacts 更好用,並開始規劃如何把最佳模型封裝。