Florence-2 在 Hugging Face 上有 “microsoft/Florence-2-base”,“microsoft/Florence-2-large” 預訓練模型,在Colab T4均可運行。

但目前在載入模型,會遇到錯誤 AttributeError: 'Florence2ForConditionalGeneration' object has no attribute '_supports_sdpa' , 是由於 Hugging Face Transformers 庫的版本不相容引起的。Florence-2 模型的注意力機制(attention implementation)在較新版本的 Transformers(例如 4.52.1 以上,尤其是 4.54.0+)中引入了 SDPA(Scaled Dot-Product Attention)的支援檢查,但模型尚未實現 _supports_sdpa 屬性。這導致在模型初始化時,Transformers 嘗試檢查該屬性但失敗。

這個問題在 Google Colab(T4 GPU)環境中存在,因為 Colab 的預設 Transformers 版本可能已更新到不兼容的版本。

測試結果,降級 Transformers 庫到兼容版本 4.51.3,它已確認與 Florence-2 穩定工作。

!pip show transformers

!pip uninstall -y transformers

!pip install transformers==4.51.3

import torch

from transformers import AutoProcessor, AutoModelForCausalLM

device = "cuda:0" if torch.cuda.is_available() else "cpu"

torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32

model = AutoModelForCausalLM.from_pretrained("microsoft/Florence-2-large", torch_dtype=torch_dtype, trust_remote_code=True).to(device)

processor = AutoProcessor.from_pretrained("microsoft/Florence-2-large", trust_remote_code=True)

# 定義推理函數

def run_example(task_prompt, text_input=None):

if text_input:

prompt = task_prompt + text_input

else:

prompt = task_prompt

inputs = processor(text=prompt, images=image, return_tensors="pt").to(device, torch_dtype)

generated_ids = model.generate(

input_ids=inputs["input_ids"],

pixel_values=inputs["pixel_values"],

max_new_tokens=4096,

num_beams=3,

do_sample=False

)

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

parsed_answer = processor.post_process_generation(generated_text, task=task_prompt, image_size=(image.width, image.height))

return parsed_answer

from PIL import Image

import requests

import matplotlib.pyplot as plt

from matplotlib.patches import Rectangle

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg"

image = Image.open(requests.get(url, stream=True).raw)

# 顯示圖像

plt.imshow(image)

plt.axis('off')

plt.show()

任務指示使用統一的提示詞格示

caption = run_example("<CAPTION>")

print("簡潔描述:", caption["<CAPTION>"])

detailed_caption = run_example("<DETAILED_CAPTION>")

print("詳細描述:", detailed_caption["<DETAILED_CAPTION>"])

more_detailed = run_example("<MORE_DETAILED_CAPTION>")

print("更詳細描述:", more_detailed["<MORE_DETAILED_CAPTION>"])

(輸出結果)

簡潔描述: a green volkswagen beetle parked in front of a yellow building

詳細描述: The image shows a green Volkswagen Beetle parked in front of a yellow building with two brown doors, surrounded by trees and a clear blue sky.

更詳細描述: The image shows a vintage Volkswagen Beetle car parked on a cobblestone street in front of a yellow building with two wooden doors. The car is painted in a bright turquoise color and has a white stripe running along the side. The doors are made of wood and have a rustic, weathered look. The building behind the car has a small window and a door handle. The sky is blue and there are trees in the background. The overall atmosphere of the image is peaceful and serene.

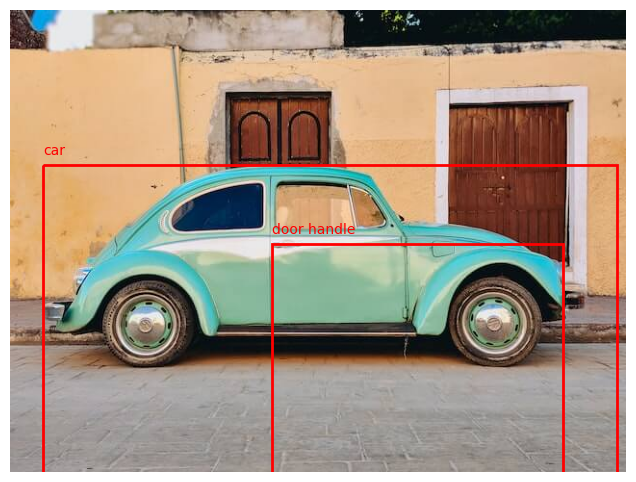

od_result = run_example("<OD>")

print("偵測結果:", od_result["<OD>"])

(輸出結果)

偵測結果: {'bboxes': [[33.599998474121094, 160.55999755859375, 596.7999877929688, 371.7599792480469], [271.67999267578125, 242.1599884033203, 302.3999938964844, 246.95999145507812]], 'labels': ['car', 'door handle']}

視覺化邊界框

bboxes = od_result["<OD>"]["bboxes"]

labels = od_result["<OD>"]["labels"]

fig, ax = plt.subplots(1, 1, figsize=(8, 6))

ax.imshow(image)

for i, bbox in enumerate(bboxes):

rect = Rectangle((bbox[0], bbox[1]), bbox[2], bbox[3], linewidth=2, edgecolor='r', facecolor='none')

ax.add_patch(rect)

ax.text(bbox[0], bbox[1]-10, labels[i], color='r', fontsize=10)

ax.axis('off')

plt.show()

物件辨識結果,邊界框的準確度目前似乎進步空間還很大。