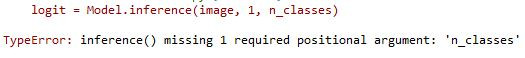

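I've recently been learning about two-stream convolutional neural networks. When I use the trained model to test an image's predicted class and accuracy, I run into an error in the code. Any help would be appreciated.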

```python
import os

import numpy as np
from PIL import Image
import tensorflow as tf
import matplotlib.pyplot as plt

# from Model import inference
import Model

n_classes = 2
img_dir = './Input_data/test/'
log_dir = './Input_data/model/'  # ./Input_data/model/
lists = ['Face', 'NotFace', 'face1', 'face2', 'notface1', 'notface2']


def get_one_image(img_dir):
    # Pick a random file from img_dir, show it, and return it as a 128x128 array.
    imgs = os.listdir(img_dir)
    img_num = len(imgs)
    # print(imgs, img_num)
    idn = np.random.randint(0, img_num)
    image = imgs[idn]
    image_dir = img_dir + image
    print(image_dir)
    image = Image.open(image_dir)
    plt.imshow(image)
    plt.show()
    image = image.resize([128, 128])
    image_arr = np.array(image)
    return image_arr


def test(image_arr):
    with tf.Graph().as_default():
        image = tf.cast(image_arr, tf.float32)
        # print('1', np.array(image).shape)
        image = tf.image.per_image_standardization(image)
        # print('2', np.array(image).shape)
        image = tf.reshape(image, [1, 128, 128, 3])
        # print(image.shape)
        logit = Model.inference(image, 1, n_classes)  # <-- the line that raises the error
        logits = tf.nn.softmax(logit)
        x = tf.placeholder(tf.float32, shape=[128, 128, 3])
        saver = tf.train.Saver()
        sess = tf.Session()
        sess.run(tf.global_variables_initializer())  # added
        ckpt = tf.train.get_checkpoint_state(log_dir)
        if ckpt and ckpt.model_checkpoint_path:
            # print(ckpt.model_checkpoint_path)
            # call saver.restore() to load the trained network model
            saver.restore(sess, ckpt.model_checkpoint_path)
            print('Loading success')
        # prediction = sess.run(logits, feed_dict={x: image_arr})
        prediction = sess.run(logits, feed_dict={x: image_arr})
        max_index = np.argmax(prediction)
        print('Predicted label:', max_index, lists[max_index])
        print('Prediction:', prediction)


if __name__ == '__main__':
    img = get_one_image(img_dir)
    test(img)
```
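For reference, the numeric steps around the model call in `test()` can be reproduced in NumPy, which makes it easier to check shapes and outputs without a session. This is a minimal sketch: `per_image_standardization` here follows the formula documented for `tf.image.per_image_standardization`, and the image and logits are hypothetical stand-ins for `get_one_image()` and the network output.

```python
import numpy as np


def per_image_standardization(img):
    """NumPy version of the documented formula: (x - mean) / adjusted_stddev,
    where adjusted_stddev = max(stddev, 1 / sqrt(number_of_elements))."""
    x = img.astype(np.float32)
    adjusted_std = max(x.std(), 1.0 / np.sqrt(x.size))
    return (x - x.mean()) / adjusted_std


def softmax(logits):
    # Subtract the row max for numerical stability before exponentiating.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


lists = ['Face', 'NotFace', 'face1', 'face2', 'notface1', 'notface2']

# Hypothetical 128x128 RGB image standing in for get_one_image()'s output.
rng = np.random.default_rng(0)
image_arr = rng.integers(0, 256, size=(128, 128, 3)).astype(np.uint8)
batch = per_image_standardization(image_arr).reshape(1, 128, 128, 3)

# Hypothetical logits standing in for the network output, shape [1, n_classes] with n_classes = 2.
logit = np.array([[2.0, 0.5]])
prediction = softmax(logit)
max_index = int(np.argmax(prediction))
print('Predicted label:', max_index, lists[max_index])
```

Because `n_classes = 2`, `np.argmax` can only return 0 or 1, so only `'Face'` and `'NotFace'` from `lists` can ever be printed; the other four entries are never used by this model.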

The Model code is as follows:

```python
import tensorflow as tf


def weight_variable(shape, n):
    initial = tf.truncated_normal(shape, stddev=n, dtype=tf.float32)
    return initial


def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape, dtype=tf.float32)
    return initial


def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')


def max_pool_2x2(x, name):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)  # 1331 ks st 1221


def inference(S_images, T_images, batch_size, n_classes):
    # ----- S stream -----
    with tf.variable_scope('s_conv1') as scope:
        w_conv1 = tf.Variable(weight_variable([4, 4, 3, 32], 1.0), name='weights', dtype=tf.float32)
        b_conv1 = tf.Variable(bias_variable([32]), name='biases', dtype=tf.float32)
        s_conv1 = tf.nn.relu(conv2d(S_images, w_conv1) + b_conv1, name=scope.name)

    with tf.variable_scope('s_pooling1_lrn') as scope:
        pool1 = max_pool_2x2(s_conv1, 's_pooling1')
        norm1 = tf.nn.lrn(pool1, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name='s_norm1')

    with tf.variable_scope('s_conv2') as scope:
        w_conv2 = tf.Variable(weight_variable([4, 4, 32, 64], 0.1), name='weights', dtype=tf.float32)  # 33 64 32
        b_conv2 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)
        s_conv2 = tf.nn.relu(conv2d(norm1, w_conv2) + b_conv2, name='s_conv2')  # yields 64*64*64 for a 128*128 input

    with tf.variable_scope('s_pooling2_lrn') as scope:
        pool2 = max_pool_2x2(s_conv2, 's_pooling2')
        norm2 = tf.nn.lrn(pool2, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name='s_norm2')

    with tf.variable_scope('s_conv3') as scope:
        w_conv3 = tf.Variable(weight_variable([4, 4, 64, 64], 0.1), name='weights', dtype=tf.float32)  # 3 3 32 16
        b_conv3 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)
        s_conv3 = tf.nn.relu(conv2d(norm2, w_conv3) + b_conv3, name='s_conv3')  # yields 32*32*64 for a 128*128 input

    with tf.variable_scope('s_pooling3_lrn') as scope:
        pool3 = max_pool_2x2(s_conv3, 's_pooling3')
        norm3 = tf.nn.lrn(pool3, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name='s_norm3')

    with tf.variable_scope('s_local4') as scope:  # 3
        reshape = tf.reshape(norm3, shape=[batch_size, -1])  # -1
        dim = reshape.get_shape()[1].value
        w_fc1 = tf.Variable(weight_variable([dim, 64], 0.005), name='weights', dtype=tf.float32)
        b_fc1 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)
        s_local4 = tf.nn.relu(tf.matmul(reshape, w_fc1) + b_fc1, name=scope.name)

    # ----- T stream -----
    with tf.variable_scope('T_conv1') as scope:
        w_conv1 = tf.Variable(weight_variable([4, 4, 3, 32], 1.0), name='weights', dtype=tf.float32)  # 333 64
        b_conv1 = tf.Variable(bias_variable([32]), name='biases', dtype=tf.float32)
        T_conv1 = tf.nn.relu(conv2d(T_images, w_conv1) + b_conv1, name=scope.name)

    with tf.variable_scope('T_pooling1_lrn') as scope:
        pool1 = max_pool_2x2(T_conv1, 'T_pooling1')
        norm1 = tf.nn.lrn(pool1, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name='T_norm1')

    with tf.variable_scope('T_conv2') as scope:
        w_conv2 = tf.Variable(weight_variable([4, 4, 32, 64], 0.1), name='weights', dtype=tf.float32)  # 33 64 32
        b_conv2 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)
        T_conv2 = tf.nn.relu(conv2d(norm1, w_conv2) + b_conv2, name='T_conv2')

    with tf.variable_scope('T_pooling2_lrn') as scope:
        pool2 = max_pool_2x2(T_conv2, 'T_pooling2')
        norm2 = tf.nn.lrn(pool2, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name='T_norm2')

    with tf.variable_scope('T_conv3') as scope:
        w_conv3 = tf.Variable(weight_variable([4, 4, 64, 64], 0.1), name='weights', dtype=tf.float32)  # 3 3 32 16
        b_conv3 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)
        T_conv3 = tf.nn.relu(conv2d(norm2, w_conv3) + b_conv3, name='T_conv3')

    with tf.variable_scope('T_pooling3_lrn') as scope:
        pool3 = max_pool_2x2(T_conv3, 'T_pooling3')
        norm3 = tf.nn.lrn(pool3, depth_radius=4, bias=1.0, alpha=0.001 / 9.0, beta=0.75, name='T_norm3')

    with tf.variable_scope('T_local4') as scope:
        reshape = tf.reshape(norm3, shape=[batch_size, -1])  # -1
        dim = reshape.get_shape()[1].value
        w_fc1 = tf.Variable(weight_variable([dim, 64], 0.005), name='weights', dtype=tf.float32)
        b_fc1 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)
        T_local4 = tf.nn.relu(tf.matmul(reshape, w_fc1) + b_fc1, name=scope.name)

    # ----- fusion: element-wise sum of the two streams -----
    local4 = s_local4 + T_local4

    with tf.variable_scope('local5') as scope:
        w_fc2 = tf.Variable(weight_variable([64, 64], 0.005), name='weights', dtype=tf.float32)  # 128
        b_fc2 = tf.Variable(bias_variable([64]), name='biases', dtype=tf.float32)  # 128
        h_fc2 = tf.nn.relu(tf.matmul(local4, w_fc2) + b_fc2, name=scope.name)
        h_fc2_dropout = tf.nn.dropout(h_fc2, 0.5)

    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.Variable(weight_variable([64, n_classes], 0.005), name='softmax_linear', dtype=tf.float32)  # 128
        biases = tf.Variable(bias_variable([n_classes]), name='biases', dtype=tf.float32)
        softmax_linear = tf.add(tf.matmul(h_fc2_dropout, weights), biases, name='softmax_linear')

    return softmax_linear


def losses(logits, labels):
    with tf.variable_scope('loss') as scope:
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits, labels=labels, name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
        tf.summary.scalar(scope.name + '/loss', loss)
    return loss


def trainning(loss, learning_rate):
    with tf.name_scope('optimizer'):
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op


def evaluation(logits, labels):
    with tf.variable_scope('accuracy') as scope:
        correct = tf.nn.in_top_k(logits, labels, 1)
        accuracy = tf.reduce_mean(tf.cast(correct, tf.float16))
        tf.summary.scalar(scope.name + '/accuracy', accuracy)
    return accuracy
```
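Separately from the argument error, note that `inference()` applies `tf.nn.dropout(h_fc2, 0.5)` unconditionally, so half of the `local5` units are also dropped while testing and the prediction for the same image can vary between runs. A minimal NumPy sketch of inverted dropout (the scheme `tf.nn.dropout` uses: keep each unit with probability `keep_prob` and scale survivors by `1/keep_prob`), with a hypothetical `training` flag that disables it at test time:

```python
import numpy as np


def dropout(x, keep_prob, training, rng):
    """Inverted dropout. During training, each unit is kept with probability
    keep_prob and scaled by 1/keep_prob; at test time x passes through unchanged."""
    if not training:
        return x
    mask = rng.random(x.shape) < keep_prob
    return np.where(mask, x / keep_prob, 0.0)


rng = np.random.default_rng(42)
h_fc2 = np.ones((1, 64))  # hypothetical activations standing in for h_fc2

train_out = dropout(h_fc2, 0.5, training=True, rng=rng)   # entries are 0.0 or 2.0
test_out = dropout(h_fc2, 0.5, training=False, rng=rng)   # identical to h_fc2
```

In the TF1 code, one common way to get the same effect is to pass `keep_prob` into `inference()` as a `tf.placeholder` and feed 0.5 during training and 1.0 when testing.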

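You're missing one image argument. The call passes three arguments, but `inference` takes four:

```python
logit = Model.inference(image, 1, n_classes)

def inference(S_images, T_images, batch_size, n_classes):
```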
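The argument binding explains the error: with three positional arguments, Python assigns `image` to `S_images`, `1` to `T_images` and `n_classes` to `batch_size`, leaving `n_classes` unfilled. A minimal reproduction with a hypothetical stub that only copies the signature of `Model.inference` (a string stands in for the image tensor):

```python
def inference(S_images, T_images, batch_size, n_classes):
    """Hypothetical stub: same signature as Model.inference, body omitted."""
    return None


image, n_classes = 'image', 2  # stand-ins for the real tensor and class count

message = None
try:
    inference(image, 1, n_classes)  # the call used in test()
except TypeError as err:
    message = str(err)
    print(message)  # inference() missing 1 required positional argument: 'n_classes'
```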

I added it, but it still doesn't work. I probably need to modify more of the code so that `S_images` and `T_images` can actually be used.
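In `Model.inference` the two streams are independent until `local4 = s_local4 + T_local4`, so each stream needs its own input tensor with the same `batch_size`, and both end in a `[batch_size, 64]` vector that is summed element-wise. The NumPy sketch below mimics only that fusion step, using random stand-ins for each stream's `norm3` output (16x16x64 for a 128x128 input) and for the `local4` weights. What `S_images` and `T_images` should actually contain depends on how the model was trained, which the post does not say.

```python
import numpy as np

rng = np.random.default_rng(0)
batch_size, dim, hidden = 1, 16 * 16 * 64, 64


def stream_fc(features, w, b):
    # Flatten to [batch_size, -1] and apply a ReLU dense layer, like s_local4 / T_local4.
    flat = features.reshape(batch_size, -1)
    return np.maximum(flat @ w + b, 0.0)


# Hypothetical pooled feature maps (norm3) from the S and T streams.
s_norm3 = rng.standard_normal((batch_size, 16, 16, 64))
t_norm3 = rng.standard_normal((batch_size, 16, 16, 64))

# Hypothetical weights with the same stddev/bias values as the Model code.
w_s = rng.standard_normal((dim, hidden)) * 0.005
w_t = rng.standard_normal((dim, hidden)) * 0.005
b = np.full(hidden, 0.1)

s_local4 = stream_fc(s_norm3, w_s, b)
t_local4 = stream_fc(t_norm3, w_t, b)
local4 = s_local4 + t_local4  # element-wise fusion of two [batch_size, 64] vectors
```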

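There are a few problems in the `test` function:

- The placeholder's shape, `[128, 128, 3]`, differs from the image's shape, `[1, 128, 128, 3]`.
- When building the model, the input should be the placeholder, which is what gets connected to the weights and the rest of the graph, not `image` directly. So inside `logit = Model.inference(...)`, change `image` to `x`.
- Following from that, remember to move the placeholder declaration above that line.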
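The first point is about rank: `get_one_image()` returns an array of shape `(128, 128, 3)`, the network works on a batch of shape `[1, 128, 128, 3]`, and `feed_dict` rejects arrays whose shape does not match the placeholder's. A minimal sketch of that check with a hypothetical `shape_compatible` helper (it mirrors the rule that ranks must match and a `None` dimension accepts any size), plus `np.expand_dims` to add the batch axis:

```python
import numpy as np


def shape_compatible(placeholder_shape, array_shape):
    """Hypothetical helper: ranks must match, and every fixed placeholder
    dimension must equal the array's (None matches any size)."""
    if len(placeholder_shape) != len(array_shape):
        return False
    return all(p is None or p == a for p, a in zip(placeholder_shape, array_shape))


image_arr = np.zeros((128, 128, 3), dtype=np.float32)  # what get_one_image() returns
model_input = [1, 128, 128, 3]                          # shape used by tf.reshape in test()

print(shape_compatible(model_input, image_arr.shape))        # False: rank 3 vs rank 4
batched = np.expand_dims(image_arr, axis=0)                   # -> (1, 128, 128, 3)
print(shape_compatible(model_input, batched.shape))          # True
print(shape_compatible([None, 128, 128, 3], batched.shape))  # True: None accepts any batch size
```

Declaring the placeholder as `[1, 128, 128, 3]`, or `[None, 128, 128, 3]` to allow any batch size, and feeding `np.expand_dims(image_arr, 0)` keeps both sides consistent.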

I didn't look at the model part closely. If problems remain, maybe you could draw a diagram explaining your CNN architecture.
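As a starting point for such a diagram, the tensor shapes can be traced from the Model code. A plain-Python sketch for one stream with a 128x128x3 input, assuming the kernel counts in the posted code ('SAME' convolutions with stride 1 keep height and width; each 2x2, stride-2, 'SAME' pool halves them, rounding up):

```python
def same_conv(h, w, c_out):
    # 'SAME' padding with stride 1 keeps height and width; channels become c_out.
    return h, w, c_out


def pool_2x2(h, w, c):
    # 2x2 max-pool, stride 2, 'SAME' padding: output size is ceil(size / 2).
    return -(-h // 2), -(-w // 2), c


def trace_stream(h=128, w=128, c=3):
    shapes = []
    for c_out in (32, 64, 64):  # output channels of conv1, conv2, conv3
        h, w, c = same_conv(h, w, c_out)
        shapes.append(('conv', (h, w, c)))
        h, w, c = pool_2x2(h, w, c)
        shapes.append(('pool+lrn', (h, w, c)))
    return shapes, h * w * c  # flattened size fed into local4 (dim)


shapes, dim = trace_stream()
for layer, shape in shapes:
    print(layer, shape)
print('local4 input dim:', dim)  # local4 input dim: 16384
```

Each stream therefore flattens 16x16x64 = 16384 values into `dim` and maps them to 64 units in `s_local4` / `T_local4`; the two 64-unit vectors are summed into `local4`, then `local5` (64 units) and `softmax_linear` produce `n_classes` logits.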

That's all for now. If you get an error message, post it here~