Hello everyone! Today we'll learn how to use a convolutional neural network (CNN) in Keras to recognize handwritten digits.

Mysterious voice: Weren't we promised ASP.NET C#?

Author: I have a wedding banquet to attend today, so I'm turning some old notes into this Ironman contest post (*covers face*)

Main article starts here

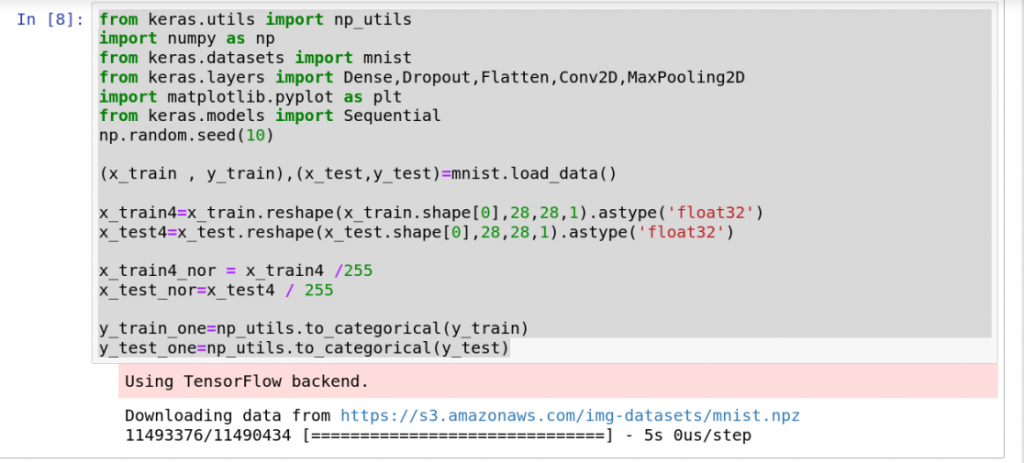

(1) Prepare the data

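# Import the libraries we need
# (to_categorical: older Keras also exposes it as keras.utils.np_utils.to_categorical)
from keras.utils import to_categorical
import numpy as np
from keras.datasets import mnist
from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D
import matplotlib.pyplot as plt
from keras.models import Sequential

# Set NumPy's random seed
np.random.seed(10)

# Load the MNIST data (downloaded automatically the first time)
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Reshape each 28x28 image into a 28x28x1 tensor (one grayscale channel), which is the
# input format Conv2D expects, and convert the pixels to float32
x_train4 = x_train.reshape(x_train.shape[0], 28, 28, 1).astype('float32')
x_test4 = x_test.reshape(x_test.shape[0], 28, 28, 1).astype('float32')

# Normalize the pixel values from 0-255 to 0-1
x_train4_nor = x_train4 / 255
x_test_nor = x_test4 / 255

# One-hot encode the labels (e.g. 3 -> [0,0,0,1,0,0,0,0,0,0])
y_train_one = to_categorical(y_train)
y_test_one = to_categorical(y_test)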
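To make the preprocessing steps more concrete, here is a small NumPy-only sketch of what they do: add a channel axis, scale the pixels to [0, 1], and one-hot encode the labels. The helper names `preprocess_images` and `one_hot` are hypothetical; in the article itself the last step is done by Keras's `to_categorical`.

```python
import numpy as np

def preprocess_images(images):
    """Reshape (N, 28, 28) uint8 images into (N, 28, 28, 1) float32 values in [0, 1]."""
    x = images.reshape(images.shape[0], 28, 28, 1).astype("float32")
    return x / 255.0

def one_hot(labels, num_classes=10):
    """Turn integer labels such as 3 into rows like [0, 0, 0, 1, 0, ...]."""
    labels = np.asarray(labels, dtype=int)
    out = np.zeros((labels.shape[0], num_classes), dtype="float32")
    out[np.arange(labels.shape[0]), labels] = 1.0  # put a 1 in each row's label column
    return out

# Two all-white fake 28x28 images standing in for MNIST
fake = np.full((2, 28, 28), 255, dtype=np.uint8)
x = preprocess_images(fake)
print(x.shape, x.dtype, x.max())
print(one_hot([5, 0]))
```

Every row produced by `one_hot` contains exactly one 1, which is the format the 10-unit softmax output layer is trained against.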

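If the MNIST data cannot be downloaded automatically, Keras prints a "Downloading data from" message; open that link to download the file manually: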

https://s3.amazonaws.com/img-datasets/mnist.npz

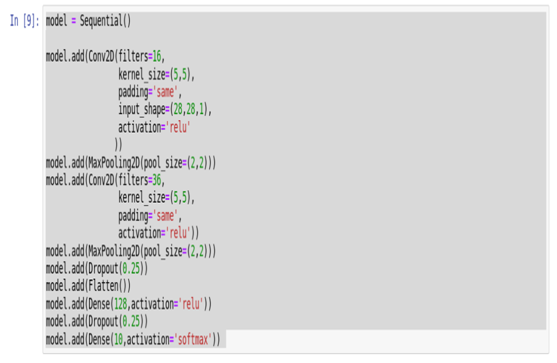
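If the automatic download keeps failing, one possible workaround (an assumption, not part of the original notes) is to save `mnist.npz` yourself and read it with NumPy. The file is a NumPy archive holding the arrays `x_train`, `y_train`, `x_test` and `y_test`; the helper `load_local_mnist` below is hypothetical.

```python
import numpy as np

def load_local_mnist(path="mnist.npz"):
    """Load a manually downloaded mnist.npz and return the same tuples as mnist.load_data()."""
    # np.load returns an NpzFile; reading each key inside the with-block copies it into memory
    with np.load(path) as data:
        return (data["x_train"], data["y_train"]), (data["x_test"], data["y_test"])
```

Usage: `(x_train, y_train), (x_test, y_test) = load_local_mnist("mnist.npz")`, after which the rest of step (1) stays the same.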

(2) Build the model

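# Create a Sequential model (a linear stack of layers)
model = Sequential()

# Convolutional layer 1
model.add(Conv2D(filters=16,
                 kernel_size=(5, 5),
                 padding='same',
                 input_shape=(28, 28, 1),
                 activation='relu'))

# Pooling layer 1
model.add(MaxPooling2D(pool_size=(2, 2)))

# Convolutional layer 2
model.add(Conv2D(filters=36,
                 kernel_size=(5, 5),
                 padding='same',
                 activation='relu'))

# filters: number of filters, i.e. the number of output feature maps
# kernel_size=(a, b): each filter is a x b
# padding='same': zero-pad the input so the output keeps the same height and width (with the default stride of 1)
# input_shape=(a, b, c): a x b is the input image size; c is the number of channels (1 for grayscale, 3 for color)
# activation: the activation function

# Pooling layer 2
model.add(MaxPooling2D(pool_size=(2, 2)))

# Dropout: randomly drop 25% of the units during training to reduce overfitting
model.add(Dropout(0.25))

# Flatten layer: turn the 7x7x36 feature maps into a 1-D vector
model.add(Flatten())

# Hidden (fully connected) layer, followed by Dropout to reduce overfitting
model.add(Dense(128, activation='relu'))
model.add(Dropout(0.25))

# Output layer: 10 units (digits 0-9) producing softmax probabilities
model.add(Dense(10, activation='softmax'))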
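To see how the image shrinks through the network and where the weights are, here is a plain-Python sketch that walks through the layers above and counts parameters by hand. It assumes stride 1 for the `'same'` convolutions and the default stride of 2 for the 2x2 max pooling; the helper names are hypothetical, and the total should match what `model.summary()` reports for this model.

```python
def conv_params(kernel_h, kernel_w, in_channels, filters):
    # each filter has kernel_h * kernel_w * in_channels weights plus one bias
    return kernel_h * kernel_w * in_channels * filters + filters

def dense_params(n_in, n_out):
    # full weight matrix plus one bias per output unit
    return n_in * n_out + n_out

def cnn_summary():
    """Return (layer, output_shape, params) rows for the model built above."""
    rows = []
    h, w, c = 28, 28, 1
    rows.append(("Conv2D", (h, w, 16), conv_params(5, 5, c, 16)))   # 'same' keeps 28x28
    c = 16
    h, w = h // 2, w // 2                                            # 2x2 pooling -> 14x14
    rows.append(("MaxPooling2D", (h, w, c), 0))
    rows.append(("Conv2D", (h, w, 36), conv_params(5, 5, c, 36)))   # 'same' keeps 14x14
    c = 36
    h, w = h // 2, w // 2                                            # 2x2 pooling -> 7x7
    rows.append(("MaxPooling2D", (h, w, c), 0))
    rows.append(("Dropout", (h, w, c), 0))
    flat = h * w * c                                                 # 7 * 7 * 36 = 1764
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense", (128,), dense_params(flat, 128)))
    rows.append(("Dropout", (128,), 0))
    rows.append(("Dense", (10,), dense_params(128, 10)))
    return rows

rows = cnn_summary()
for name, shape, params in rows:
    print(f"{name:<14}{str(shape):<16}{params}")
print("Total params:", sum(p for _, _, p in rows))  # 242062
```

Most of the parameters sit in the first `Dense(128)` layer (1764 x 128 weights), which is one reason Dropout is placed around it.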

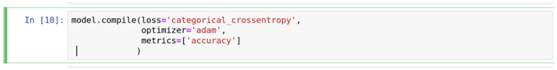

(3) Define how to train

# Set the loss function, the optimizer, and the evaluation metric
model.compile(loss='categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])
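The loss chosen above, categorical cross-entropy, compares the one-hot label with the softmax output: for one image it is minus the log of the probability the model gave to the correct digit. A NumPy sketch of the formula follows; the small `eps` clip only avoids `log(0)`, and the function name is hypothetical rather than Keras's own implementation.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_pred)), with y_true one-hot."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

y_true = np.eye(10)[[3]]                    # one image whose label is 3
uniform = np.full((1, 10), 0.1)             # a completely unsure softmax output
confident = np.eye(10)[[3]] * 0.9 + 0.01    # 0.91 on digit 3, 0.01 on each other digit

print(categorical_crossentropy(y_true, uniform))    # ln(10), about 2.3026
print(categorical_crossentropy(y_true, confident))  # -ln(0.91), about 0.0943
```

This is why the training log starts with a loss well above 0 and drives it toward 0 as the model grows more confident on the right digits.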

(4) Start training

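train_history = model.fit(x=x_train4_nor, y=y_train_one,
                          validation_split=0.1, epochs=15,
                          batch_size=300, verbose=2)

# Notes:
# x => image features (the normalized images)
# y => true labels (one-hot encoded)
# validation_split => fraction of the training data held out for validation (0.1 -> 6,000 of the 60,000 images)
# epochs => number of training epochs
# batch_size => number of samples per batch
# verbose => how training progress is shown (2 = one line per epoch)
# In the log, acc is the training accuracy, val_acc is the accuracy on the validation set,
# loss is the training loss and val_loss is the validation loss.
# (Newer Keras versions print accuracy and val_accuracy instead of acc and val_acc.)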
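The "Train on 54000 samples, validate on 6000 samples" line in the log below follows from these settings. As a rough sketch (exact rounding may differ between Keras versions), Keras holds out the last 10% of the arrays, before shuffling, as the validation set, and each epoch runs over the rest in batches of 300. The helper name `split_counts` is hypothetical.

```python
import math

def split_counts(n_samples, validation_split, batch_size):
    """Approximate sizes used by model.fit(validation_split=..., batch_size=...)."""
    n_val = int(n_samples * validation_split)       # taken from the END of the data
    n_train = n_samples - n_val
    steps_per_epoch = math.ceil(n_train / batch_size)  # the last batch may be smaller
    return n_train, n_val, steps_per_epoch

print(split_counts(60000, 0.1, 300))  # (54000, 6000, 180)
```

So each of the 15 epochs performs 180 weight updates.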

(5) Run 1: 15 training epochs

Train on 54000 samples, validate on 6000 samples

Epoch 1/15

- 59s - loss: 0.3893 - acc: 0.8806 - val_loss: 0.0755 - val_acc: 0.9783

Epoch 2/15

- 57s - loss: 0.1047 - acc: 0.9680 - val_loss: 0.0527 - val_acc: 0.9850

Epoch 3/15

- 66s - loss: 0.0723 - acc: 0.9778 - val_loss: 0.0424 - val_acc: 0.9888

Epoch 4/15

- 75s - loss: 0.0590 - acc: 0.9818 - val_loss: 0.0372 - val_acc: 0.9890

Epoch 5/15

- 63s - loss: 0.0489 - acc: 0.9851 - val_loss: 0.0335 - val_acc: 0.9908

Epoch 6/15

- 66s - loss: 0.0433 - acc: 0.9866 - val_loss: 0.0321 - val_acc: 0.9897

Epoch 7/15

- 66s - loss: 0.0398 - acc: 0.9875 - val_loss: 0.0345 - val_acc: 0.9902

Epoch 8/15

- 60s - loss: 0.0334 - acc: 0.9891 - val_loss: 0.0278 - val_acc: 0.9925

Epoch 9/15

- 65s - loss: 0.0320 - acc: 0.9896 - val_loss: 0.0310 - val_acc: 0.9913

Epoch 10/15

- 57s - loss: 0.0275 - acc: 0.9913 - val_loss: 0.0266 - val_acc: 0.9928

Epoch 11/15

- 57s - loss: 0.0243 - acc: 0.9921 - val_loss: 0.0245 - val_acc: 0.9928

Epoch 12/15

- 56s - loss: 0.0221 - acc: 0.9930 - val_loss: 0.0285 - val_acc: 0.9918

Epoch 13/15

- 44s - loss: 0.0220 - acc: 0.9929 - val_loss: 0.0256 - val_acc: 0.9912

Epoch 14/15

- 63s - loss: 0.0185 - acc: 0.9938 - val_loss: 0.0274 - val_acc: 0.9923

Epoch 15/15

- 54s - loss: 0.0186 - acc: 0.9933 - val_loss: 0.0248 - val_acc: 0.9927

Run 2: another 15 epochs (epochs 16 to 30 overall; calling model.fit again continues from the current weights)

Train on 54000 samples, validate on 6000 samples

Epoch 1/15

- 59s - loss: 0.0169 - acc: 0.9942 - val_loss: 0.0276 - val_acc: 0.9923

Epoch 2/15

- 53s - loss: 0.0151 - acc: 0.9948 - val_loss: 0.0261 - val_acc: 0.9922

Epoch 3/15

- 50s - loss: 0.0161 - acc: 0.9946 - val_loss: 0.0262 - val_acc: 0.9927

Epoch 4/15

- 56s - loss: 0.0141 - acc: 0.9954 - val_loss: 0.0251 - val_acc: 0.9930

Epoch 5/15

- 58s - loss: 0.0140 - acc: 0.9953 - val_loss: 0.0227 - val_acc: 0.9940

Epoch 6/15

- 56s - loss: 0.0133 - acc: 0.9958 - val_loss: 0.0224 - val_acc: 0.9935

Epoch 7/15

- 59s - loss: 0.0119 - acc: 0.9959 - val_loss: 0.0278 - val_acc: 0.9938

Epoch 8/15

- 63s - loss: 0.0117 - acc: 0.9961 - val_loss: 0.0254 - val_acc: 0.9932

Epoch 9/15

- 64s - loss: 0.0104 - acc: 0.9966 - val_loss: 0.0278 - val_acc: 0.9920

Epoch 10/15

- 52s - loss: 0.0105 - acc: 0.9962 - val_loss: 0.0252 - val_acc: 0.9940

Epoch 11/15

- 66s - loss: 0.0112 - acc: 0.9960 - val_loss: 0.0243 - val_acc: 0.9932

Epoch 12/15

- 64s - loss: 0.0098 - acc: 0.9967 - val_loss: 0.0231 - val_acc: 0.9937

Epoch 13/15

- 49s - loss: 0.0103 - acc: 0.9964 - val_loss: 0.0238 - val_acc: 0.9932

Epoch 14/15

- 59s - loss: 0.0085 - acc: 0.9972 - val_loss: 0.0263 - val_acc: 0.9943

Epoch 15/15

- 66s - loss: 0.0088 - acc: 0.9969 - val_loss: 0.0236 - val_acc: 0.9937
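Reading the log line by line gets tedious. `model.fit` returns a History object whose `history` attribute is a dict of per-epoch lists (keys `acc`/`val_acc` in older Keras as in the log above, `accuracy`/`val_accuracy` in newer versions). A minimal sketch for finding the best validation epoch follows; `best_epoch` is a hypothetical helper, and the numbers are the `val_acc` values copied from run 1.

```python
def best_epoch(history, key="val_acc"):
    """Return (1-based epoch, value) of the highest entry in history[key]."""
    values = history[key]
    # max() returns the first maximum, so ties go to the earliest epoch
    best_index = max(range(len(values)), key=lambda i: values[i])
    return best_index + 1, values[best_index]

# val_acc from run 1 above; in real code pass train_history.history instead
run1 = {"val_acc": [0.9783, 0.9850, 0.9888, 0.9890, 0.9908, 0.9897, 0.9902, 0.9925,
                    0.9913, 0.9928, 0.9928, 0.9918, 0.9912, 0.9923, 0.9927]}
print(best_epoch(run1))  # (10, 0.9928)
```

In run 2, training accuracy keeps creeping up (0.9942 to 0.9969) while validation accuracy stays between 0.9920 and 0.9943, so the extra 15 epochs bring only a small gain on the validation set.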

(6) Plot the images

def plot_images_labels(images, labels, prediction, idx, num=10):
    # Show `num` images starting at index idx, titled with the label (and prediction, if given)
    fig = plt.gcf()
    fig.set_size_inches(12, 14)
    if num > 25:          # the 5x5 grid holds at most 25 images
        num = 25
    for i in range(0, num):
        ax = plt.subplot(5, 5, 1 + i)
        ax.imshow(np.reshape(images[idx], (28, 28)), cmap='binary')
        title = "label=" + str(labels[idx])
        if len(prediction) > 0:
            title += ",predict=" + str(prediction[idx])
        ax.set_title(title, fontsize=10)
        ax.set_xticks([])
        ax.set_yticks([])
        idx = idx + 1
    plt.show()

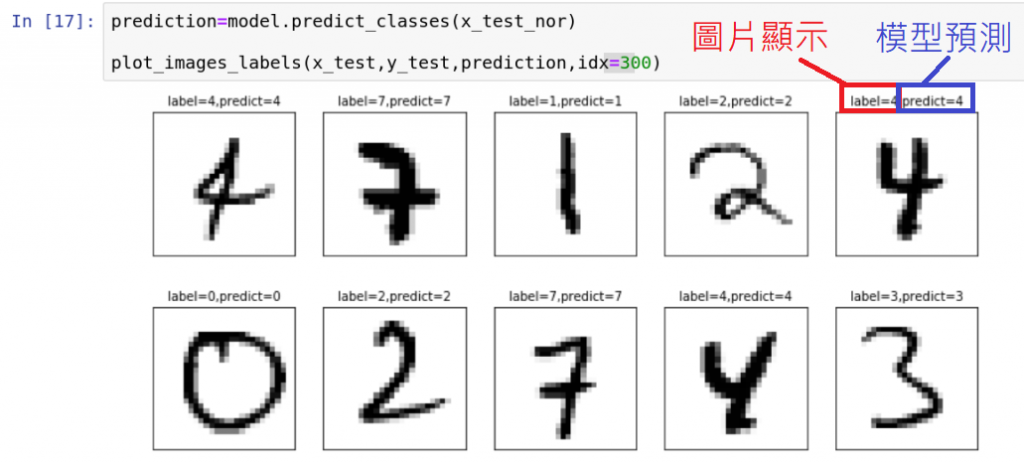

(7) Make predictions

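# Feed in the test images and store the predicted digits.
# predict_classes() was removed in newer TensorFlow/Keras versions; taking the argmax
# of the softmax output gives the same class indices.
prediction = np.argmax(model.predict(x_test_nor), axis=-1)

# Plot the predictions starting at test image 300: 10 images, i.e. 300-309
plot_images_labels(x_test, y_test, prediction, idx=300)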
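Rather than guessing a starting index like 300, it can be handy to find the images the model got wrong. The sketch below shows the argmax step on a made-up 3-class softmax output, standing in for `model.predict(x_test_nor)`, and collects the indices where the prediction differs from the label; both helper names are hypothetical.

```python
import numpy as np

def classes_from_probs(probs):
    """Index of the largest softmax output in each row (what predict_classes used to return)."""
    return np.argmax(probs, axis=-1)

def misclassified_indices(labels, predictions):
    """Indices where the predicted digit differs from the true label."""
    return np.nonzero(np.asarray(labels) != np.asarray(predictions))[0]

# Made-up softmax output for 3 images over 3 classes (each row sums to 1)
probs = np.array([[0.05, 0.90, 0.05],
                  [0.70, 0.20, 0.10],
                  [0.10, 0.30, 0.60]])
labels = np.array([1, 2, 2])
pred = classes_from_probs(probs)
print(pred)                                  # [1 0 2]
print(misclassified_indices(labels, pred))   # [1]
```

With the real data, `misclassified_indices(y_test, prediction)` lists the test indices worth inspecting, and any of them can be passed as `idx` to `plot_images_labels`.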

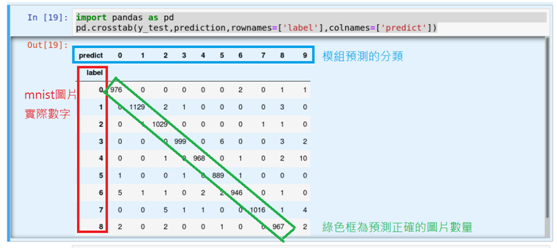

(8) Show the confusion matrix

import pandas as pd

# Rows are the true labels, columns the predicted labels; off-diagonal cells are errors
pd.crosstab(y_test, prediction, rownames=['label'], colnames=['predict'])
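For reference, the same table can be built without pandas. In this NumPy sketch (a hypothetical helper, not from the original notes), `cm[i, j]` counts images whose true label is `i` and whose prediction is `j`, which is the layout of the crosstab above; dividing the diagonal by each row's total gives the per-digit accuracy. One difference: the NumPy version always has `num_classes` rows and columns, while `crosstab` only shows values that actually occur.

```python
import numpy as np

def confusion_matrix(labels, predictions, num_classes=10):
    """cm[i, j] = number of images with true label i that were predicted as j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # add 1 at every (label, prediction) pair; np.add.at handles repeated pairs correctly
    np.add.at(cm, (np.asarray(labels), np.asarray(predictions)), 1)
    return cm

labels = np.array([0, 1, 1, 2, 2, 2])
preds = np.array([0, 1, 2, 2, 2, 0])
cm = confusion_matrix(labels, preds, num_classes=3)
print(cm)
print(cm.diagonal() / cm.sum(axis=1))  # per-class accuracy (recall)
```

With the real data this would be `confusion_matrix(y_test, prediction)`.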

References: https://ithelp.ithome.com.tw/articles/10197257

https://morvanzhou.github.io/tutorials/machine-learning/keras/2-2-classifier/