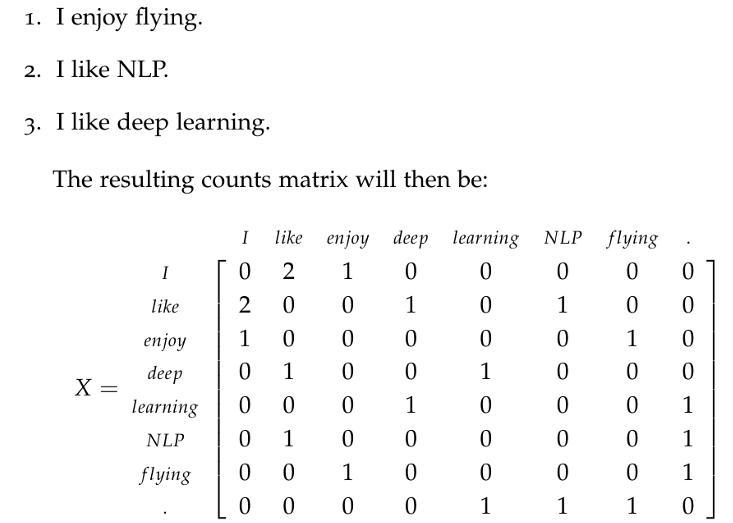

如果類別型特徵的目標值與類別筆數呈相關,可將筆數本身當作特徵,例如:自然語言處理中,字詞的計數編碼稱為詞頻,是自然語言處理中很重要的特徵。

If the target value of the categorical data and the counting are correlated, we can then use the counting as a feature. For example, in Natural Language Processing, word counts itself is a very important and useful feature.

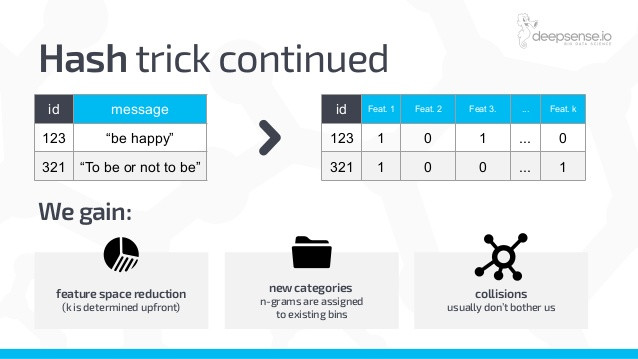

特徵雜湊將類別型特透過徵雜湊函數對應到一組數字,調整雜湊函數控制對應值的數量,在計算成本與鑑別度間取折衷,提高訊息密度並減少無用的標籤。當相異類別數量相當大時可考慮使用雜湊編碼以節省時間。

Feature hashing is projecting categorical features onto numbers using hash functions. It is a method compromised computational costs and discrimination. Feature hashing could reduce useless labels and increase the density of information of the data. We could consider using it when there are a lot of categorical features to save time.

本篇程式碼請參考Github。The code is available on Github.

文中若有錯誤還望不吝指正,感激不盡。

Please let me know if there’s any mistake in this article. Thanks for reading.

Reference 參考資料:

[1] 第二屆機器學習百日馬拉松內容

[2] Word to Vectors

[3] 数据特征处理之特征哈希