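Yesterday we covered the core concepts of GANs, and today we'll implement one in Python! Specifically, we'll build a CycleGAN, a GAN variant that learns to translate images between two domains (here, horses and zebras) without needing paired examples. The code is adapted from https://keras.io/examples/generative/cyclegan/. The full program is long, so I'll only discuss the most important parts. For the complete code, see that page.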

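The first step is to download the training data. We use the horse2zebra dataset from TensorFlow Datasets (TFDS). I ran into problems when downloading it directly through code. If you hit the same issue, you can download the dataset manually from https://people.eecs.berkeley.edu/%7Etaesung_park/CycleGAN/datasets/ and place it in `C:\Users\<your username>\tensorflow_datasets\cycle_gan\horse2zebra`.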

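```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
import tensorflow_addons as tfa
import tensorflow_datasets as tfds

# Load horse2zebra; as_supervised=True yields (image, label) pairs
dataset, _ = tfds.load("cycle_gan/horse2zebra", with_info=True, as_supervised=True)

# trainA/testA are horses (domain X), trainB/testB are zebras (domain Y)
train_horses, train_zebras = dataset["trainA"], dataset["trainB"]
test_horses, test_zebras = dataset["testA"], dataset["testB"]
```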
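These splits are not ready for training yet. With `as_supervised=True`, each element is an `(image, label)` pair of raw `uint8` pixels. The full Keras example maps a preprocessing function over each split (random left-right flip, resize to 286×286, random crop to 256×256, scale to [-1, 1]) and then batches it. The NumPy sketch below reproduces only the per-image arithmetic so it is easy to inspect. `normalize_img` mirrors the helper name used in the Keras example. `random_flip_and_crop` is a hypothetical helper for illustration, and the real pipeline uses `tf.image` ops instead.

```python
import numpy as np

def normalize_img(img):
    """Map uint8 pixels in [0, 255] to float32 in [-1, 1], the output range of tanh."""
    return img.astype(np.float32) / 127.5 - 1.0

def random_flip_and_crop(img, crop_size=256, rng=None):
    """Hypothetical helper: randomly mirror left-right, then cut a random square patch."""
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() < 0.5:
        img = img[:, ::-1, :]  # flip along the width axis
    h, w, _ = img.shape
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    return img[top:top + crop_size, left:left + crop_size, :]

# A random 286x286 RGB array standing in for one resized training image
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(286, 286, 3), dtype=np.uint8)
x = normalize_img(random_flip_and_crop(img, rng=rng))
print(x.shape, x.min() >= -1.0, x.max() <= 1.0)
```

Scaling to [-1, 1] matches the generator's final `tanh` activation, so real and generated images live in the same value range.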

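Next we build the model, starting with the generator, the part that produces (translates) images. It is a ResNet-style generator: a few downsampling convolutions, a stack of residual blocks, and then upsampling back to the input size.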

```python
# ReflectionPadding2D, downsample, residual_block, upsample, kernel_init,
# gamma_init and input_img_size are defined in the full Keras example.


def get_resnet_generator(
    filters=64,
    num_downsampling_blocks=2,
    num_residual_blocks=9,
    num_upsample_blocks=2,
    gamma_initializer=gamma_init,
    name=None,
):
    img_input = layers.Input(shape=input_img_size, name=name + "_img_input")
    x = ReflectionPadding2D(padding=(3, 3))(img_input)
    x = layers.Conv2D(filters, (7, 7), kernel_initializer=kernel_init, use_bias=False)(x)
    x = tfa.layers.InstanceNormalization(gamma_initializer=gamma_initializer)(x)
    x = layers.Activation("relu")(x)

    # Downsampling
    for _ in range(num_downsampling_blocks):
        filters *= 2
        x = downsample(x, filters=filters, activation=layers.Activation("relu"))

    # Residual blocks
    for _ in range(num_residual_blocks):
        x = residual_block(x, activation=layers.Activation("relu"))

    # Upsampling
    for _ in range(num_upsample_blocks):
        filters //= 2
        x = upsample(x, filters, activation=layers.Activation("relu"))

    # Final block: map back to 3 RGB channels in [-1, 1]
    x = ReflectionPadding2D(padding=(3, 3))(x)
    x = layers.Conv2D(3, (7, 7), padding="valid")(x)
    x = layers.Activation("tanh")(x)

    model = keras.models.Model(img_input, x, name=name)
    return model
```
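To see why the generator's output has the same size as its input, we can trace the feature-map shape stage by stage. This pure-Python sketch relies on assumptions drawn from the full Keras example rather than the snippet above: a 256×256×3 input, `downsample()` as a stride-2 `"same"` convolution, `upsample()` as a stride-2 `"same"` transposed convolution, and residual blocks that preserve shape. `trace_generator_shapes` is a hypothetical helper.

```python
def trace_generator_shapes(size=256, filters=64, num_down=2, num_res=9, num_up=2):
    """Return (stage, height, width, channels) after each stage of the generator."""
    shapes = []
    # ReflectionPadding2D(3) followed by a 7x7 "valid" conv: size + 2*3 - 7 + 1 == size
    size = size + 2 * 3 - 7 + 1
    shapes.append(("stem", size, size, filters))
    for _ in range(num_down):
        filters *= 2
        size = (size + 1) // 2  # stride 2 with "same" padding -> ceil(size / 2)
        shapes.append(("down", size, size, filters))
    # Residual blocks keep both the spatial size and the channel count
    shapes.append((f"residual x{num_res}", size, size, filters))
    for _ in range(num_up):
        filters //= 2
        size *= 2  # stride-2 transposed conv with "same" padding doubles the size
        shapes.append(("up", size, size, filters))
    # Final reflection pad + 7x7 "valid" conv keeps the size and outputs 3 channels
    size = size + 2 * 3 - 7 + 1
    shapes.append(("output", size, size, 3))
    return shapes

for stage in trace_generator_shapes():
    print(stage)
```

The residual blocks therefore operate on a 64×64×256 bottleneck, and the two upsampling steps restore the original 256×256 resolution.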

With the generator done, we build the discriminator, the part that judges whether an image is real or generated.

```python
def get_discriminator(
    filters=64, kernel_initializer=kernel_init, num_downsampling=3, name=None
):
    img_input = layers.Input(shape=input_img_size, name=name + "_img_input")
    x = layers.Conv2D(
        filters,
        (4, 4),
        strides=(2, 2),
        padding="same",
        kernel_initializer=kernel_initializer,
    )(img_input)
    x = layers.LeakyReLU(0.2)(x)

    num_filters = filters
    # num_downsampling blocks: all but the last halve the size, the last keeps it
    for num_downsample_block in range(num_downsampling):
        num_filters *= 2
        if num_downsample_block < num_downsampling - 1:
            x = downsample(
                x,
                filters=num_filters,
                activation=layers.LeakyReLU(0.2),
                kernel_size=(4, 4),
                strides=(2, 2),
            )
        else:
            x = downsample(
                x,
                filters=num_filters,
                activation=layers.LeakyReLU(0.2),
                kernel_size=(4, 4),
                strides=(1, 1),
            )

    # One real/fake score per image patch instead of one score per image
    x = layers.Conv2D(
        1, (4, 4), strides=(1, 1), padding="same", kernel_initializer=kernel_initializer
    )(x)

    model = keras.models.Model(inputs=img_input, outputs=x, name=name)
    return model
```
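The discriminator does not output a single number. It outputs a grid of scores, each judging one patch of the input. The sketch below lists the (kernel, stride) of every convolution in `get_discriminator` with its defaults (first conv, three `downsample()` calls, final conv). It then computes the output grid size for a 256×256 input, assuming `"same"` padding throughout as in the code above, and the receptive field of one score. `DISC_LAYERS`, `output_size` and `receptive_field` are hypothetical names for this illustration.

```python
import math

# (kernel, stride) for each conv in get_discriminator with default arguments
DISC_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

def output_size(size, layers):
    """Spatial size after "same"-padded convs: ceil(size / stride) at each layer."""
    for _, stride in layers:
        size = math.ceil(size / stride)
    return size

def receptive_field(layers):
    """Width (in input pixels) of the region that one output score depends on."""
    rf = 1
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf

print(output_size(256, DISC_LAYERS))  # 32
print(receptive_field(DISC_LAYERS))   # 70
```

Each of the 32×32 scores therefore judges a 70×70 pixel patch. This is the "PatchGAN" design used in CycleGAN, which focuses the discriminator on local texture such as zebra stripes.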

```python
# Get the generators: G maps horse -> zebra, F maps zebra -> horse
gen_G = get_resnet_generator(name="generator_G")
gen_F = get_resnet_generator(name="generator_F")

# Get the discriminators: X judges horses, Y judges zebras
disc_X = get_discriminator(name="discriminator_X")
disc_Y = get_discriminator(name="discriminator_Y")
```

Once the generators and discriminators are ready, we can combine them and build the full CycleGAN model!
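For reference, the `train_step` in the class below updates generator G (horse → zebra) with the objective written here. It uses the defaults $\lambda_{\text{cycle}} = 10$ and $\lambda_{\text{identity}} = 0.5$. F's objective is the mirror image with $x$ and $y$ swapped. $\mathcal{L}_{\text{gen}}$ and $\mathcal{L}_{\text{disc}}$ stand for whatever `gen_loss_fn` and `disc_loss_fn` are passed to `compile`. $\lVert a - b \rVert_1$ denotes the mean absolute error computed by `MeanAbsoluteError`.

$$
\mathcal{L}_{G} = \mathcal{L}_{\text{gen}}\big(D_Y(G(x))\big) + \lambda_{\text{cycle}}\,\big\lVert y - G(F(y)) \big\rVert_1 + \lambda_{\text{cycle}}\,\lambda_{\text{identity}}\,\big\lVert y - G(y) \big\rVert_1
$$

$$
\mathcal{L}_{D_Y} = \mathcal{L}_{\text{disc}}\big(D_Y(y),\, D_Y(G(x))\big)
$$

With the defaults, the cycle-consistency term is weighted by 10 and the identity term by $10 \times 0.5 = 5$.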

```python
class CycleGan(keras.Model):
    def __init__(
        self,
        generator_G,
        generator_F,
        discriminator_X,
        discriminator_Y,
        lambda_cycle=10.0,
        lambda_identity=0.5,
    ):
        super().__init__()
        self.gen_G = generator_G
        self.gen_F = generator_F
        self.disc_X = discriminator_X
        self.disc_Y = discriminator_Y
        self.lambda_cycle = lambda_cycle
        self.lambda_identity = lambda_identity

    def compile(
        self,
        gen_G_optimizer,
        gen_F_optimizer,
        disc_X_optimizer,
        disc_Y_optimizer,
        gen_loss_fn,
        disc_loss_fn,
    ):
        super().compile()
        self.gen_G_optimizer = gen_G_optimizer
        self.gen_F_optimizer = gen_F_optimizer
        self.disc_X_optimizer = disc_X_optimizer
        self.disc_Y_optimizer = disc_Y_optimizer
        self.generator_loss_fn = gen_loss_fn
        self.discriminator_loss_fn = disc_loss_fn
        self.cycle_loss_fn = keras.losses.MeanAbsoluteError()
        self.identity_loss_fn = keras.losses.MeanAbsoluteError()

    def train_step(self, batch_data):
        # x is horse and y is zebra
        real_x, real_y = batch_data

        # persistent=True allows calling tape.gradient() once for each of the four models
        with tf.GradientTape(persistent=True) as tape:
            # Horse to fake zebra
            fake_y = self.gen_G(real_x, training=True)
            # Zebra to fake horse -> y2x
            fake_x = self.gen_F(real_y, training=True)

            # Cycle (Horse to fake zebra to fake horse): x -> y -> x
            cycled_x = self.gen_F(fake_y, training=True)
            # Cycle (Zebra to fake horse to fake zebra) y -> x -> y
            cycled_y = self.gen_G(fake_x, training=True)

            # Identity mapping
            same_x = self.gen_F(real_x, training=True)
            same_y = self.gen_G(real_y, training=True)

            # Discriminator output
            disc_real_x = self.disc_X(real_x, training=True)
            disc_fake_x = self.disc_X(fake_x, training=True)
            disc_real_y = self.disc_Y(real_y, training=True)
            disc_fake_y = self.disc_Y(fake_y, training=True)

            # Generator adversarial loss
            gen_G_loss = self.generator_loss_fn(disc_fake_y)
            gen_F_loss = self.generator_loss_fn(disc_fake_x)

            # Generator cycle loss
            cycle_loss_G = self.cycle_loss_fn(real_y, cycled_y) * self.lambda_cycle
            cycle_loss_F = self.cycle_loss_fn(real_x, cycled_x) * self.lambda_cycle

            # Generator identity loss
            id_loss_G = (
                self.identity_loss_fn(real_y, same_y)
                * self.lambda_cycle
                * self.lambda_identity
            )
            id_loss_F = (
                self.identity_loss_fn(real_x, same_x)
                * self.lambda_cycle
                * self.lambda_identity
            )

            # Total generator loss
            total_loss_G = gen_G_loss + cycle_loss_G + id_loss_G
            total_loss_F = gen_F_loss + cycle_loss_F + id_loss_F

            # Discriminator loss
            disc_X_loss = self.discriminator_loss_fn(disc_real_x, disc_fake_x)
            disc_Y_loss = self.discriminator_loss_fn(disc_real_y, disc_fake_y)

        # Get the gradients for the generators
        grads_G = tape.gradient(total_loss_G, self.gen_G.trainable_variables)
        grads_F = tape.gradient(total_loss_F, self.gen_F.trainable_variables)

        # Get the gradients for the discriminators
        disc_X_grads = tape.gradient(disc_X_loss, self.disc_X.trainable_variables)
        disc_Y_grads = tape.gradient(disc_Y_loss, self.disc_Y.trainable_variables)

        # Update the weights of the generators
        self.gen_G_optimizer.apply_gradients(
            zip(grads_G, self.gen_G.trainable_variables)
        )
        self.gen_F_optimizer.apply_gradients(
            zip(grads_F, self.gen_F.trainable_variables)
        )

        # Update the weights of the discriminators
        self.disc_X_optimizer.apply_gradients(
            zip(disc_X_grads, self.disc_X.trainable_variables)
        )
        self.disc_Y_optimizer.apply_gradients(
            zip(disc_Y_grads, self.disc_Y.trainable_variables)
        )

        return {
            "G_loss": total_loss_G,
            "F_loss": total_loss_F,
            "D_X_loss": disc_X_loss,
            "D_Y_loss": disc_Y_loss,
        }
```
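`compile` expects a `gen_loss_fn` and a `disc_loss_fn`, which the snippet above does not show. The full Keras example uses a least-squares (mean squared error) adversarial loss. The NumPy sketch below re-creates that idea with stand-in functions of the same names, plus the weighted total that `train_step` computes for G. It uses small made-up arrays so the numbers can be checked by hand. This is an illustration only, not the TensorFlow code that trains the model.

```python
import numpy as np

def mse(target, pred):
    return float(np.mean((target - pred) ** 2))

def mae(a, b):
    return float(np.mean(np.abs(a - b)))

def generator_loss_fn(disc_fake):
    # The generator wants the discriminator to score its fakes as real (1)
    return mse(np.ones_like(disc_fake), disc_fake)

def discriminator_loss_fn(disc_real, disc_fake):
    # Real patches should score 1 and fake patches 0; the two terms are averaged
    real_loss = mse(np.ones_like(disc_real), disc_real)
    fake_loss = mse(np.zeros_like(disc_fake), disc_fake)
    return 0.5 * (real_loss + fake_loss)

def total_generator_loss(disc_fake_y, real_y, cycled_y, same_y,
                         lambda_cycle=10.0, lambda_identity=0.5):
    # Same weighting as train_step: adversarial + cycle * 10 + identity * 10 * 0.5
    adv = generator_loss_fn(disc_fake_y)
    cycle = mae(real_y, cycled_y) * lambda_cycle
    identity = mae(real_y, same_y) * lambda_cycle * lambda_identity
    return adv + cycle + identity

disc_fake_y = np.full((32, 32, 1), 0.5)  # discriminator is unsure about every patch
real_y = np.zeros((4, 4, 3))             # toy "real zebra" image
cycled_y = np.full((4, 4, 3), 0.125)     # reconstruction off by 0.125 everywhere
same_y = np.full((4, 4, 3), 0.25)        # identity mapping off by 0.25 everywhere
print(total_generator_loss(disc_fake_y, real_y, cycled_y, same_y))  # 2.75
```

With these toy values, the adversarial term is 0.25, the cycle term is 0.125 × 10 = 1.25, and the identity term is 0.25 × 10 × 0.5 = 1.25. The cycle term dominates, which is exactly what keeps the translated image tied to the original content.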

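Once the model is built, the long training process begins. I trained for roughly two hours. If you want to see results sooner, you can try raising the learning rate. The trade-off is that the results may be worse and GAN training can become unstable, so you'll need to judge that balance for yourself.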

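```python
# generator_loss_fn, discriminator_loss_fn, plotter, model_checkpoint_callback and the
# preprocessed, batched train_horses/train_zebras come from the full Keras example.
cycle_gan_model = CycleGan(
    generator_G=gen_G, generator_F=gen_F, discriminator_X=disc_X, discriminator_Y=disc_Y
)
cycle_gan_model.compile(
    gen_G_optimizer=keras.optimizers.Adam(learning_rate=2e-4, beta_1=0.5),
    gen_F_optimizer=keras.optimizers.Adam(learning_rate=2e-4, beta_1=0.5),
    disc_X_optimizer=keras.optimizers.Adam(learning_rate=2e-4, beta_1=0.5),
    disc_Y_optimizer=keras.optimizers.Adam(learning_rate=2e-4, beta_1=0.5),
    gen_loss_fn=generator_loss_fn,
    disc_loss_fn=discriminator_loss_fn,
)
cycle_gan_model.fit(
    tf.data.Dataset.zip((train_horses, train_zebras)),
    epochs=1,
    callbacks=[plotter, model_checkpoint_callback],
)
```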
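To estimate how long an epoch will take, note that `fit` iterates over `tf.data.Dataset.zip((train_horses, train_zebras))`. `Dataset.zip` stops as soon as the shorter dataset is exhausted, following the same rule as Python's built-in `zip`. One epoch therefore has as many steps as the smaller split has batches. The sketch below uses a hypothetical helper `steps_per_epoch` and made-up split sizes. With TensorFlow, you can query the real sizes with `train_horses.cardinality()`.

```python
def steps_per_epoch(num_horses, num_zebras, batch_size=1):
    """Hypothetical helper: number of training steps in one epoch over the zipped data."""
    paired = min(num_horses, num_zebras)  # images beyond the shorter split go unused this epoch
    return -(-paired // batch_size)       # ceiling division: a final partial batch still counts

# Python's zip truncates in the same way as Dataset.zip
pairs = list(zip(["h1", "h2", "h3"], ["z1", "z2"]))
print(pairs)                        # [('h1', 'z1'), ('h2', 'z2')]
print(steps_per_epoch(1000, 1300))  # 1000 (made-up split sizes)
```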

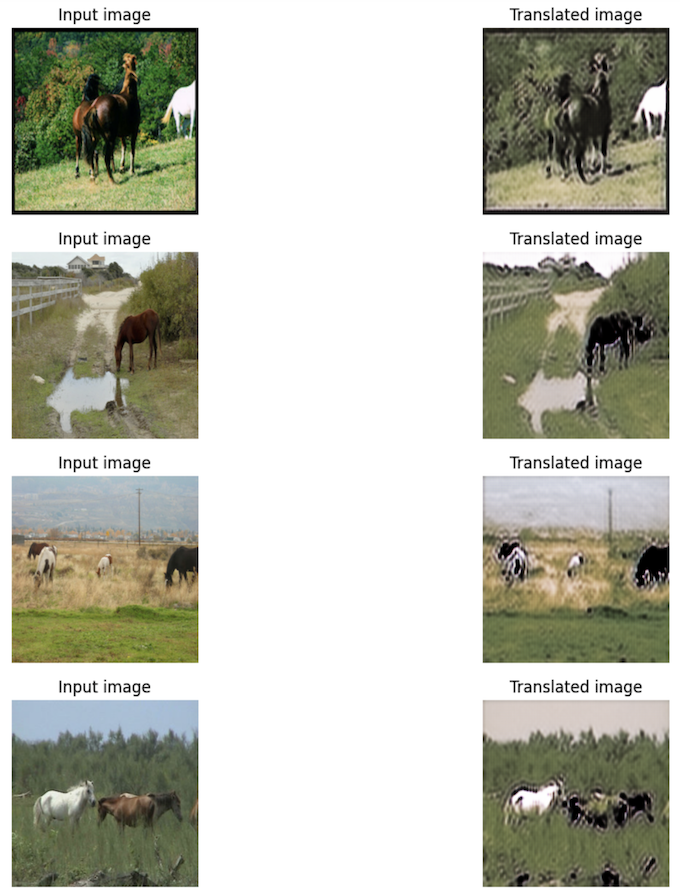

Once training finishes, you can see the "fakes" the model has created!
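To display a generated image yourself, remember that the generator ends in `tanh`, so its outputs lie in [-1, 1] rather than in pixel units. The Keras example's plotting callback performs a similar conversion before showing predictions. This minimal NumPy sketch maps the values back to `uint8` pixels. `denormalize` is a hypothetical helper name, and the clipping is a safety margin that `tanh` outputs should not actually need.

```python
import numpy as np

def denormalize(pred):
    """Map values in [-1, 1] back to uint8 pixels in [0, 255] for display."""
    return np.clip(pred * 127.5 + 127.5, 0, 255).astype(np.uint8)

fake_zebra = np.array([[-1.0, 0.0, 1.0]])  # toy generator outputs
print(denormalize(fake_zebra).tolist())    # [[0, 127, 255]]
```

The result can be passed directly to an image viewer such as matplotlib's `imshow`.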

Reference: https://keras.io/examples/generative/cyclegan/