First, note the following limitations:

Alternatively, take the following example:

```bash
databricks jobs create --json '{
  "name": "My hello notebook job",
  "tasks": [
    {
      "task_key": "my_hello_notebook_task",
      "notebook_task": {
        "notebook_path": "/Workspace/Users/someone@example.com/hello",
        "source": "WORKSPACE"
      },
      "libraries": [
        {
          "pypi": {
            "package": "wheel==0.41.2"
          }
        }
      ],
      "new_cluster": {
        "spark_version": "13.3.x-scala2.12",
        "node_type_id": "i3.xlarge",
        "num_workers": 1,
        "spark_env_vars": {
          "PYSPARK_PYTHON": "/databricks/python3/bin/python3"
        }
      }
    }
  ]
}'
```
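Hand-writing JSON inside a single-quoted shell string is fragile: a single quote inside any value ends the string early. Here is a minimal sketch, assuming Python 3 is available locally. It builds the same job spec as the `databricks jobs create` command above as a Python dict and lets `json.dumps` produce the request body. The script name `make_job_spec.py`, the output file `job.json`, and the helper `render_job_spec` are hypothetical. The file form also assumes the Databricks CLI accepts `--json @path` to read the request body from a file.

```python
import json

# Same job spec as the CLI example, expressed as a Python dict.
# Values are copied from that example; adjust them for your own workspace.
job_spec = {
    "name": "My hello notebook job",
    "tasks": [
        {
            "task_key": "my_hello_notebook_task",
            "notebook_task": {
                "notebook_path": "/Workspace/Users/someone@example.com/hello",
                "source": "WORKSPACE",
            },
            "libraries": [{"pypi": {"package": "wheel==0.41.2"}}],
            "new_cluster": {
                "spark_version": "13.3.x-scala2.12",
                "node_type_id": "i3.xlarge",
                "num_workers": 1,
                "spark_env_vars": {
                    "PYSPARK_PYTHON": "/databricks/python3/bin/python3"
                },
            },
        }
    ],
}


def render_job_spec(spec: dict) -> str:
    """Serialize a job spec to pretty-printed JSON (hypothetical helper)."""
    return json.dumps(spec, indent=2)


if __name__ == "__main__":
    # Print to stdout so the output can be redirected into a file.
    print(render_job_spec(job_spec))
```

Usage: run `python make_job_spec.py > job.json`, then `databricks jobs create --json @job.json`. Keeping the spec in code means Python enforces valid JSON before anything reaches the CLI.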

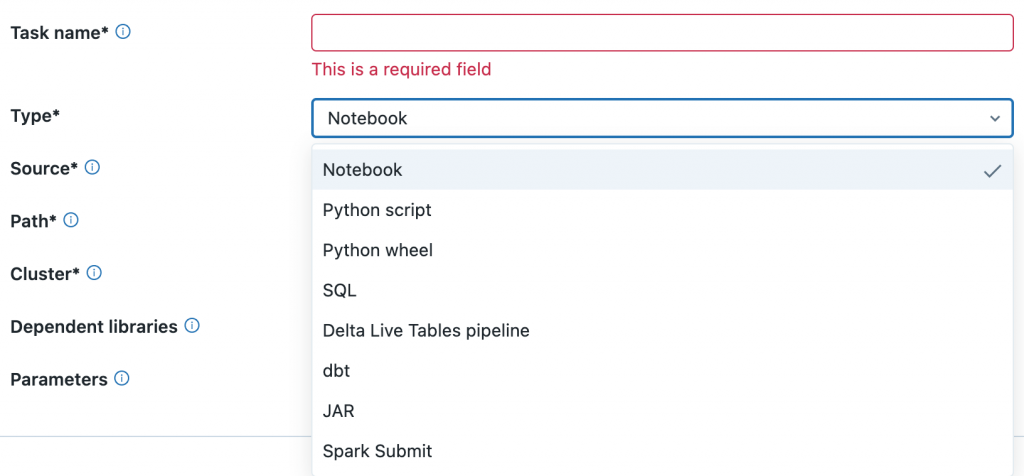

The available task types are Notebook, JAR, Spark Submit, Python script, Delta Live Tables Pipeline, Python Wheel, SQL, dbt, and Run Job.
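In the JSON job spec, each task type is selected by the name of the field that holds its settings. In the example above, `notebook_task` selects the Notebook type. The mapping below is a sketch based on the Jobs API 2.1 task fields. The helper `make_task`, the task key `run_etl`, and the script path are hypothetical. Compute settings such as `new_cluster` are left out and must still be supplied for most task types.

```python
# UI task type -> field name used in a Jobs API 2.1 task object.
TASK_TYPE_FIELDS = {
    "Notebook": "notebook_task",
    "JAR": "spark_jar_task",
    "Spark Submit": "spark_submit_task",
    "Python script": "spark_python_task",
    "Delta Live Tables Pipeline": "pipeline_task",
    "Python Wheel": "python_wheel_task",
    "SQL": "sql_task",
    "dbt": "dbt_task",
    "Run Job": "run_job_task",
}


def make_task(task_key: str, task_type: str, settings: dict) -> dict:
    """Build one entry for the "tasks" array of a job spec (hypothetical helper)."""
    if task_type not in TASK_TYPE_FIELDS:
        raise ValueError(f"unknown task type: {task_type!r}")
    # Exactly one type-specific field is set, keyed by the mapped name.
    return {"task_key": task_key, TASK_TYPE_FIELDS[task_type]: settings}


if __name__ == "__main__":
    # Hypothetical Python script task; "python_file" points at the script to run.
    print(make_task("run_etl", "Python script",
                    {"python_file": "/Workspace/Users/someone@example.com/etl.py"}))
```

Using a lookup table rather than free-form keys means a misspelled task type fails immediately. A typo in a JSON key would otherwise only surface when the API rejects the request.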

Every job type also supports passing parameters; see the explanation here.
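For a Notebook task, one way to pass parameters is the `notebook_params` field of the Jobs API 2.1 `run-now` request. It is a map of string keys to string values, and the notebook reads each one with `dbutils.widgets.get("<key>")`. The sketch below only builds that request body. The helper `build_run_now_payload`, the widget name `name`, and the job ID `123` are hypothetical placeholders. Passing the body with `databricks jobs run-now --json '...'` is an assumption about your CLI version, so check `databricks jobs run-now --help` first.

```python
import json


def build_run_now_payload(job_id: int, notebook_params: dict) -> str:
    """Return a JSON body for run-now that overrides notebook parameters."""
    # notebook_params must map string keys to string values.
    for key, value in notebook_params.items():
        if not isinstance(key, str) or not isinstance(value, str):
            raise TypeError("notebook_params keys and values must be strings")
    return json.dumps({"job_id": job_id, "notebook_params": notebook_params})


if __name__ == "__main__":
    # 123 is a placeholder; use the job_id returned by `databricks jobs create`.
    print(build_run_now_payload(123, {"name": "Databricks"}))
    # prints {"job_id": 123, "notebook_params": {"name": "Databricks"}}
```

Other task types use different fields in the same request, for example `python_params` (a list of strings) for Python script tasks. The string check exists because numeric values have to be converted explicitly before they are sent.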

See this document.

The prerequisite is that the workspace must have Unity Catalog enabled, and a Delta table must exist to serve as the target table.

Reference: