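Yesterday I gave a brief introduction to the math behind GANs. Today I'll walk through implementing a GAN that generates MNIST images.

Without further ado, let's get started!

First, import the required packages: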

from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import Input, Dense, Reshape, Flatten
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.optimizers import Adam
from matplotlib import pyplot as plt
import numpy as np

Start by building the generator:

class MnistGAN():
    def build_generator(self):
        # Map a latent noise vector to a 28 x 28 x 1 image
        model_input = Input(shape=(self.latent_dim,))
        x = Dense(64, activation='relu')(model_input)
        x = Dense(128, activation='relu')(x)
        # Sigmoid keeps every pixel value in [0, 1]
        x = Dense(784, activation='sigmoid')(x)
        generated_image = Reshape(self.shape)(x)
        return Model(inputs=model_input, outputs=generated_image)

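Here I build the model with the Keras functional API (Input and Model, rather than Sequential): an input layer followed by three fully connected layers. The first two use ReLU as the activation function and the last one uses sigmoid, and the 784 outputs are reshaped into a 28 x 28 x 1 image.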
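To get a feel for how small this generator is, the parameter count of each fully connected layer can be worked out by hand: a layer with n inputs and m units has n * m weights plus m biases. The sketch below is plain Python and does not build the Keras model; dense_params is a helper name I made up for illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of one fully connected layer."""
    return n_inputs * n_units + n_units


# Layer widths taken from build_generator: 100 -> 64 -> 128 -> 784
layer_sizes = [100, 64, 128, 784]
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # parameters of each Dense layer, in order
print(total)      # parameters of the whole generator
```

Almost all of the parameters sit in the last layer, because it has to produce one value for each of the 784 pixels.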

Next, build the discriminator:

    def build_discriminator(self):
        # Map a 28 x 28 x 1 image to a single real/fake score
        model_input = Input(shape=self.shape)
        x = Flatten()(model_input)
        x = Dense(128, activation='relu')(x)
        x = Dense(64, activation='relu')(x)
        validity = Dense(1, activation='sigmoid')(x)
        return Model(inputs=model_input, outputs=validity)

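Note: the activation function of the last layer is sigmoid, so the discriminator outputs a single value between 0 and 1 that can be read as the probability that the input image is real.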
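As a quick illustration of why sigmoid suits the last layer, here is a minimal sketch of the function itself in plain Python. It is not the Keras implementation, just the same formula, and the input values are arbitrary.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


# Arbitrary pre-activation values: strongly negative, neutral, strongly positive
for logit in (-4.0, 0.0, 4.0):
    print(logit, round(sigmoid(logit), 4))
```

A large negative input lands near 0 ("fake"), a large positive input lands near 1 ("real"), and 0 maps to exactly 0.5, meaning the discriminator cannot tell.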

Then come the combined model and the basic settings:

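    def __init__(self):
        self.rows = 28
        self.cols = 28
        self.channels = 1
        self.shape = (self.rows, self.cols, self.channels)
        self.latent_dim = 100

        # Every model uses the same Adam settings (learning rate 0.0002, beta_1 0.5).
        # Separate instances are created because recent Keras versions can raise
        # an error when one optimizer instance is shared across models.
        self.discriminator = self.build_discriminator()
        self.discriminator.compile(loss='binary_crossentropy',
                                   optimizer=Adam(0.0002, 0.5),
                                   metrics=['accuracy'])

        self.generator = self.build_generator()
        self.generator.compile(loss='binary_crossentropy',
                               optimizer=Adam(0.0002, 0.5))

        # Combined model: noise -> generator -> discriminator
        z = Input(shape=(self.latent_dim,))
        img = self.generator(z)

        # Freeze the discriminator so the combined model only trains the generator
        self.discriminator.trainable = False
        validity = self.discriminator(img)

        self.combined = Model(z, validity)
        self.combined.compile(loss='binary_crossentropy',
                              optimizer=Adam(0.0002, 0.5))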

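This part sets the image size (28 x 28 x 1), creates and compiles the generator and the discriminator, and feeds a noise input through the generator into the discriminator to form the combined model. The discriminator is frozen (trainable = False) inside the combined model, so training the combined model only updates the generator.

All three models use binary cross-entropy as the loss function and Adam as the optimizer.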
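To make the role of binary cross-entropy a bit more concrete, here is a minimal sketch in plain Python for a single prediction. The score 0.1 is a made-up number, and binary_crossentropy here is my own small helper that follows the usual formula, not the Keras function.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Binary cross-entropy for one label/prediction pair."""
    p = min(max(y_pred, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(y_true * math.log(p) + (1.0 - y_true) * math.log(1.0 - p))


# Suppose the discriminator scores one generated image at 0.1 ("probably fake").
d_score_on_fake = 0.1

# The discriminator is trained with target 0 for generated images ...
d_loss_fake = binary_crossentropy(0.0, d_score_on_fake)
# ... while the generator, via the combined model, is trained with target 1.
g_loss = binary_crossentropy(1.0, d_score_on_fake)

print(round(d_loss_fake, 4), round(g_loss, 4))
```

The same score gives the discriminator a small loss and the generator a large one. That opposition is what drives the two networks against each other.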

Finally, the training part:

    def train(self, epochs, batch_size, sample_interval):
        (X_train, _), (_, _) = mnist.load_data()

        # Rescale pixel values to [0, 1] to match the generator's sigmoid output
        X_train = X_train / 255
        X_train = np.expand_dims(X_train, axis=3)

        # Labels: 1 for real images, 0 for generated images
        valid = np.ones((batch_size, 1))
        fake = np.zeros((batch_size, 1))

        allDloss = []
        allGloss = []

        for epoch in range(epochs):
            # Train the discriminator on one real batch and one generated batch
            idx = np.random.randint(0, X_train.shape[0], batch_size)
            imgs = X_train[idx]
            noise = np.random.normal(0, 1, (batch_size, self.latent_dim))
            gen_imgs = self.generator.predict(noise)
            d_loss_real = self.discriminator.train_on_batch(imgs, valid)
            d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)
            d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

            # Train the generator three times through the combined model
            noise = np.random.normal(0, 1, (batch_size, self.latent_dim))
            g_loss1 = self.combined.train_on_batch(noise, valid)
            g_loss2 = self.combined.train_on_batch(noise, valid)
            g_loss3 = self.combined.train_on_batch(noise, valid)
            g_loss = (g_loss2 + g_loss1 + g_loss3) / 3

            print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100 * d_loss[1], g_loss))
            allDloss.append(d_loss[0])
            allGloss.append(g_loss)

            if epoch % sample_interval == 0:
                self.sample_images(epoch)
            if epoch == epochs - 1:
                self.sample_images(epoch)

        # Save each loss history under the matching file name
        np.save('d_loss.npy', np.array(allDloss))
        np.save('g_loss.npy', np.array(allGloss))

Here I keep two lists, allDloss and allGloss, to record the loss at every training step, which makes it easier to evaluate how the model is doing.
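GAN losses jump around a lot from step to step, so when looking at the recorded values it can help to smooth them first. Below is a minimal sketch of a moving average in plain Python. moving_average is a helper I introduce here, and the numbers are made up, not real training output.

```python
def moving_average(values, window):
    """Moving average over consecutive windows; returns len(values) - window + 1 points."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    out = [running / window]
    for i in range(window, len(values)):
        # Slide the window: add the new value, drop the oldest one
        running += values[i] - values[i - window]
        out.append(running / window)
    return out


losses = [4.0, 2.0, 3.0, 1.0, 2.0, 0.0]  # made-up values for illustration
smoothed = moving_average(losses, window=3)
print(smoothed)
```

In practice the input would be allDloss or allGloss (or the arrays saved with np.save, converted to lists), with a much larger window.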

Next is image generation:

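    def sample_images(self, epoch):
        # Draw a 5 x 5 grid of generated digits
        r, c = 5, 5
        noise = np.random.normal(0, 1, (r * c, self.latent_dim))
        gen_imgs = self.generator.predict(noise)
        # No rescaling needed: the sigmoid output is already in [0, 1]

        fig, axs = plt.subplots(r, c)
        cnt = 0
        for i in range(r):
            for j in range(c):
                axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')
                axs[i, j].axis('off')
                cnt += 1
        # Replace the placeholder with your own save location; the %d.png
        # file name is only an example and is filled in with the epoch number
        fig.savefig("<where you want to save>/%d.png" % epoch)
        plt.close()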

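savefig writes the generated images to the location you choose, so you can see how the generated images look at different points during training.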
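Since one image is saved per sampling step, it helps to put the iteration number into the file name. Here is a minimal sketch of one way to build such a path. The directory name gan_samples, the mnist_ prefix, and the sample_path helper are all my own assumptions for illustration, not part of the original code.

```python
import os


def sample_path(out_dir, epoch):
    """Build a file name such as <out_dir>/mnist_00010.png for a given iteration."""
    # Zero-padding keeps the files sorted in training order
    return os.path.join(out_dir, "mnist_%05d.png" % epoch)


out_dir = "gan_samples"  # hypothetical directory name
print(sample_path(out_dir, 10))
```

The result can be passed straight to fig.savefig. The directory has to exist first, for example by calling os.makedirs(out_dir, exist_ok=True) once before training.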

Finally, decide how many iterations to train for, and you're done:

if __name__ == '__main__':
    gan = MnistGAN()
    # 30000 iterations, matching the run whose results are shown in this post
    gan.train(epochs=30000, batch_size=128, sample_interval=10)

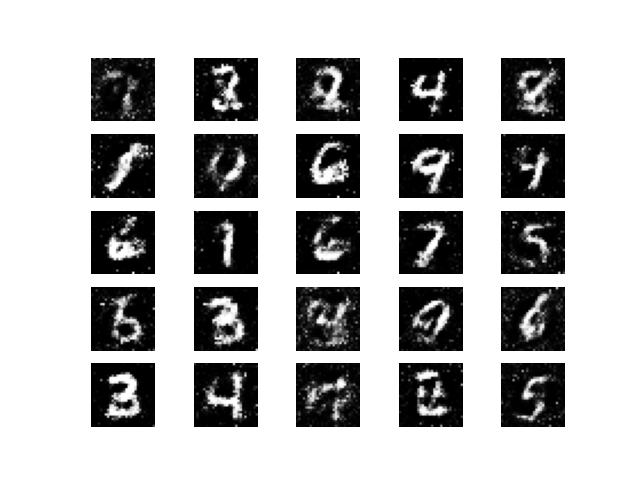

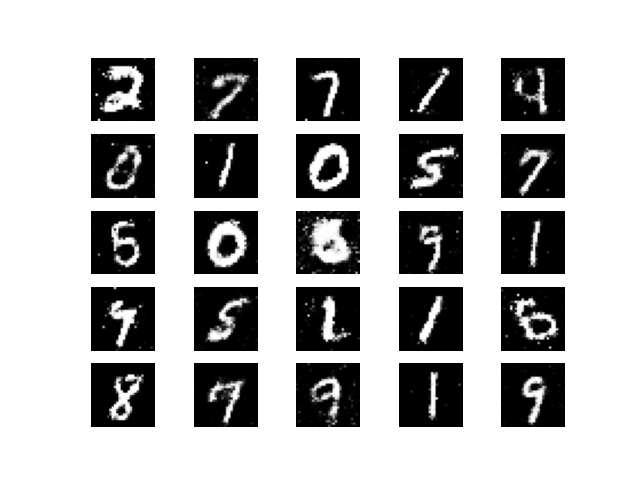

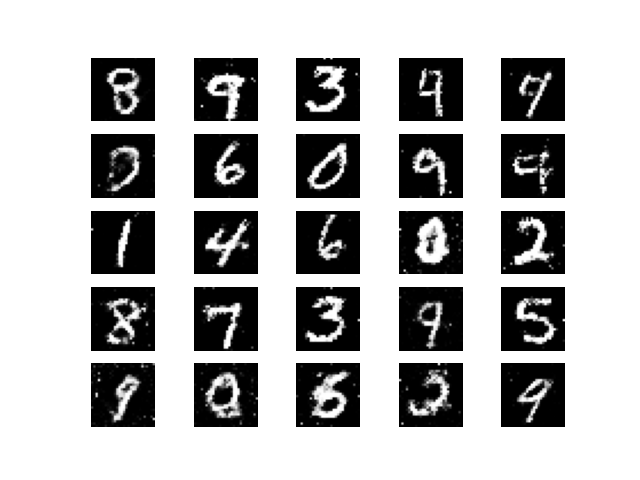

這裡我設定訓練30000次,以下是訓練10000次、20000次以及最後的結果

可以發現訓練越後面越能看出數字,圖片也比較清晰

That wraps up today's post on generating MNIST images with a GAN. Tomorrow I'll cover another type of GAN, DCGAN. See you tomorrow!

Code reference: GitHub