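In this installment, we will show how to dig out the Secrets and ConfigMaps in each namespace, identify which ones hold certificates, extract their contents, and find the expiration dates.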

oc get secret -n <namespace-name> -o <output-format>

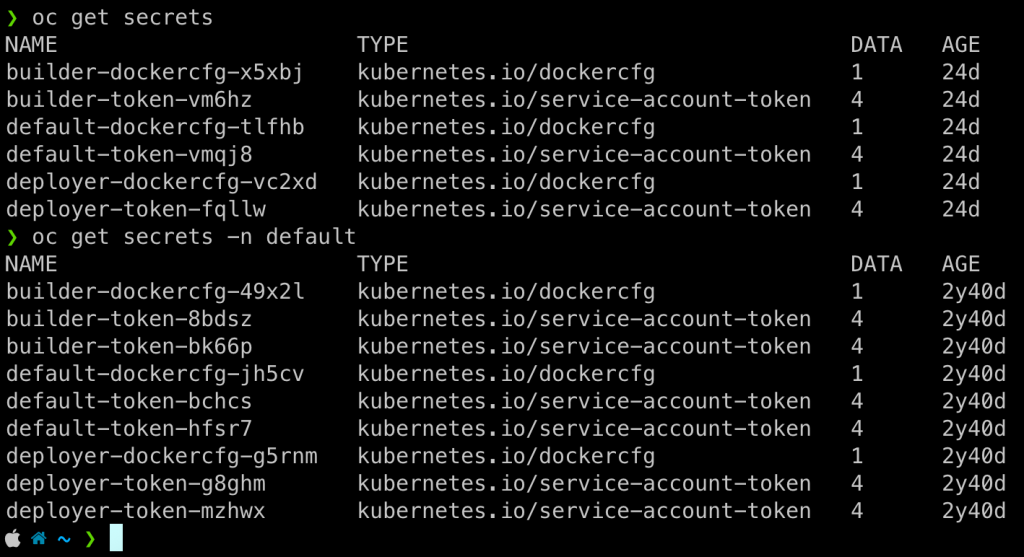

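# This command shows which secrets exist

# If no namespace is given, the default namespace comes from the current context in your `~/.kube/config`

oc get secrets

# Both of these commands dump the full details of the secrets in the default namespace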

oc get secret -n default -o yaml

oc get secret -n default -o json

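The commands above list the Secrets in the default namespace.

The difference between the two formats shows up once there is a lot of data:

YAML is relatively easy for people to read, because every line is aligned by indentation.

JSON is convenient for piping straight into other commands.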
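To make the point about JSON concrete, here is a minimal sketch that reduces the JSON listing to name/type pairs. The helper name `secret_types` is hypothetical, and it assumes `jq` is installed.

```bash
#!/usr/bin/env bash
# secret_types (hypothetical helper): read `oc get secret -o json` output on
# stdin and print one "name<TAB>type" line per Secret. Assumes jq is installed.
secret_types() {
  jq -r '.items[]? | [.metadata.name, (.type // "")] | @tsv'
}

# Usage (needs a logged-in oc session):
#   oc get secret -n default -o json | secret_types
```

Reading from stdin keeps the helper independent of the cluster, so it can be tried on a saved JSON file as well.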

apiVersion: v1
items:
- apiVersion: v1
  data:
    .dockercfg: e30=
  kind: Secret
  metadata:
    annotations:
      kubernetes.io/service-account.name: builder
      kubernetes.io/service-account.uid: xxxxxxxxxx
      openshift.io/token-secret.name: builder-token-xxxx
      openshift.io/token-secret.value: <a private key appears here>
    creationTimestamp: "2023-07-20T17:06:22Z"
    name: builder-dockercfg-49x2l
    namespace: default
    ownerReferences:
    - apiVersion: v1
      blockOwnerDeletion: false
      controller: true
      kind: Secret
      name: builder-token-bk66p
      uid: xxxxxxxx
    resourceVersion: "37994"
    uid: xxxxxxxx
  type: kubernetes.io/dockercfg
- apiVersion: v1
  data:
    ca.crt: <a CA_CRT string appears here>
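
The values under `data` are base64-encoded, which is why they look opaque in the listing. As a small sketch (the helper name `decode_secret_value` is hypothetical), the `.dockercfg` value shown above can be decoded like this:

```bash
#!/usr/bin/env bash
# decode_secret_value (hypothetical helper): Secret `data` values are
# base64-encoded, so decode the value passed as the first argument.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# The `.dockercfg` value from the listing:
decode_secret_value 'e30='   # prints {}
echo
```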

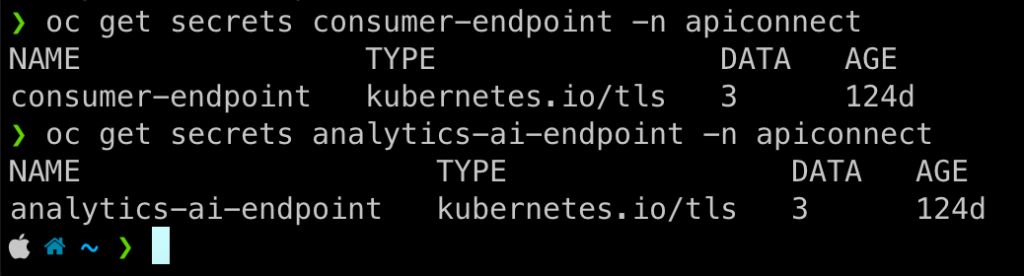

In the TYPE column, the secrets we are after are the ones of type kubernetes.io/tls.
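
Instead of scanning the TYPE column by eye, the listing can be narrowed on the server side. The sketch below assumes the cluster accepts the `type` field selector for Secrets; the helper name `list_tls_secrets` is hypothetical.

```bash
#!/usr/bin/env bash
# list_tls_secrets (hypothetical helper): list only Secrets of type
# kubernetes.io/tls, in one namespace ($1) or in all namespaces when $1 is empty.
# Assumption: the API server supports the `type` field selector for Secrets.
list_tls_secrets() {
  local ns="${1:-}"
  if [ -n "$ns" ]; then
    oc get secrets -n "$ns" --field-selector type=kubernetes.io/tls
  else
    oc get secrets --all-namespaces --field-selector type=kubernetes.io/tls
  fi
}

# Usage (needs a logged-in oc session):
#   list_tls_secrets apiconnect
#   list_tls_secrets
```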

oc get secrets analytics-ai-endpoint -n apiconnect


After decoding the value with base64 and parsing it with openssl, we can read back the expiration date.

oc get secrets analytics-ai-endpoint -n apiconnect -o jsonpath="{.data['tls\.crt']}" | base64 -d | openssl x509 -noout -subject -issuer -enddate

subject=CN=analytics.xxxxxxxxxx.tw

issuer=CN=ingress-ca

notAfter=Apr 27 07:46:50 2027 GMT
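
The `notAfter` value can be turned into a number of remaining days. This is only a sketch: the helper name `days_until` is hypothetical, and it assumes GNU `date` (the `-d` flag).

```bash
#!/usr/bin/env bash
# days_until (hypothetical helper): whole days from "now" until an openssl
# notAfter timestamp ($1). Assumes GNU date (the -d flag). The optional second
# argument overrides "now" as epoch seconds, which is handy for testing.
days_until() {
  local not_after="$1" now="${2:-$(date +%s)}" end
  end="$(date -u -d "$not_after" +%s)" || return 1
  echo $(( (end - now) / 86400 ))
}

# Usage with the notAfter value printed by openssl:
#   days_until "Apr 27 07:46:50 2027 GMT"
```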

#!/usr/bin/env bash
set -euo pipefail

# Inventory all namespaces for CA/cert expiration across Secrets & ConfigMaps
# Output: ca_expiry_inventory.tsv
# Requirements: oc, jq, openssl, awk, sed, base64, GNU date (for `date -d`)

OUT="ca_expiry_inventory.tsv"
TMPDIR="$(mktemp -d)"
trap 'rm -rf "$TMPDIR"' EXIT

echo -e "Namespace\tKind\tName\tKey\tCertIndex\tSubject\tIssuer\tNotAfter(UTC)\tDaysLeft\tSerial\tSHA256" > "$OUT"

now_epoch="$(date +%s)"

# Parse all PEM certs in a file; print one TSV line per cert
# args: ns kind name key file
parse_pem_file() {
  local ns="$1" kind="$2" name="$3" key="$4" file="$5"

  # Split the bundle into one temporary file per certificate (cert.1, cert.2, ...).
  # Each file is closed once its END line is seen, so large bundles do not
  # run into the open-file limit.
  awk '
    /-----BEGIN CERTIFICATE-----/ {inblk=1; fn=sprintf("%s.%d", fbase, ++idx)}
    inblk { print > fn }
    /-----END CERTIFICATE-----/ { if (inblk) close(fn); inblk=0 }
  ' fbase="$TMPDIR/cert" "$file"

  # If no certs were extracted, maybe the whole file is a single PEM
  if ! ls "$TMPDIR"/cert.* >/dev/null 2>&1; then
    cp "$file" "$TMPDIR/cert.1" 2>/dev/null || true
  fi

  local pem sub iss end ser fp end_epoch days
  for pem in "$TMPDIR"/cert.*; do
    [ -s "$pem" ] || continue

    # Pull fields via openssl. The "|| true" keeps set -e / pipefail from
    # aborting the whole scan when a block is not a parsable certificate.
    sub="$(openssl x509 -in "$pem" -noout -subject 2>/dev/null | sed 's/^subject= *//; s/\t/ /g' || true)"
    iss="$(openssl x509 -in "$pem" -noout -issuer 2>/dev/null | sed 's/^issuer= *//; s/\t/ /g' || true)"
    end="$(openssl x509 -in "$pem" -noout -enddate 2>/dev/null | sed 's/^notAfter=//' || true)"
    ser="$(openssl x509 -in "$pem" -noout -serial 2>/dev/null | sed 's/^serial=//' || true)"
    # Match the prefix loosely: its letter case differs between openssl versions
    fp="$(openssl x509 -in "$pem" -noout -fingerprint -sha256 2>/dev/null | sed 's/^.*Fingerprint=//' || true)"

    # Normalize enddate to epoch; try as-is first, then with an explicit "UTC"
    end_epoch=""
    if [ -n "$end" ]; then
      end_epoch="$(date -u -d "$end" +%s 2>/dev/null || date -u -d "$end UTC" +%s 2>/dev/null || echo "")"
    fi

    days=""
    if [ -n "$end_epoch" ]; then
      days=$(( (end_epoch - now_epoch) / 86400 ))
    fi

    # Print one TSV line; CertIndex is the numeric suffix of the temp file
    printf "%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\n" \
      "$ns" "$kind" "$name" "$key" "${pem##*.}" \
      "${sub:-<n/a>}" "${iss:-<n/a>}" "${end:-<n/a>}" "${days:-<n/a>}" "${ser:-<n/a>}" "${fp:-<n/a>}" \
      >> "$OUT"
  done

  # Clean up the per-call extracted files
  rm -f "$TMPDIR"/cert.* 2>/dev/null || true
}

# Handle Secrets: look for data keys that look like certs (base64 encoded)
scan_secrets_in_ns() {
  local ns="$1"
  local json
  json="$(oc get secret -n "$ns" -o json 2>/dev/null || echo '{"items":[]}')"

  echo "$json" \
    | jq -r '
        .items[]?
        | {name: .metadata.name, type: (.type // ""), data: (.data // {})}
        | select((.data | length) > 0)
        | @base64
      ' \
    | while read -r row; do
        obj="$(echo "$row" | base64 -d)"
        name="$(echo "$obj" | jq -r '.name')"
        type="$(echo "$obj" | jq -r '.type')"

        # iterate keys that are likely certs
        echo "$obj" \
          | jq -r '
              .data
              | to_entries[]
              | select(.key | test("(^ca\\.crt$)|(^tls\\.crt$)|(.+\\.crt$)|(.+bundle\\.crt$)"))
              | @base64
            ' \
          | while read -r kv; do
              ent="$(echo "$kv" | base64 -d)"
              key="$(echo "$ent" | jq -r '.key')"
              b64="$(echo "$ent" | jq -r '.value')"

              # decode to file
              f="$TMPDIR/${ns}__secret__${name}__${key}.pem"
              echo "$b64" | base64 -d > "$f" 2>/dev/null || true

              # quick heuristic: only proceed if file contains a PEM header
              if grep -q "BEGIN CERTIFICATE" "$f" 2>/dev/null; then
                parse_pem_file "$ns" "Secret" "$name" "$key" "$f"
              fi
            done
      done
}

# Handle ConfigMaps: values are plaintext; pick keys that contain PEM blocks
scan_configmaps_in_ns() {
  local ns="$1"
  local json
  json="$(oc get configmap -n "$ns" -o json 2>/dev/null || echo '{"items":[]}')"

  echo "$json" \
    | jq -r '
        .items[]?
        | {name: .metadata.name, data: (.data // {})}
        | select((.data | length) > 0)
        | @base64
      ' \
    | while read -r row; do
        obj="$(echo "$row" | base64 -d)"
        name="$(echo "$obj" | jq -r '.name')"

        echo "$obj" \
          | jq -r '
              .data
              | to_entries[]
              | select(.value | contains("BEGIN CERTIFICATE"))
              | @base64
            ' \
          | while read -r kv; do
              ent="$(echo "$kv" | base64 -d)"
              key="$(echo "$ent" | jq -r '.key')"
              val="$(echo "$ent" | jq -r '.value')"

              f="$TMPDIR/${ns}__configmap__${name}__${key}.pem"
              printf "%s" "$val" > "$f"
              parse_pem_file "$ns" "ConfigMap" "$name" "$key" "$f"
            done
      done
}

# Build namespace list
NAMESPACES="$(oc get ns -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}')"

echo "$NAMESPACES" | while read -r ns; do
  [ -z "$ns" ] && continue
  # Secrets
  scan_secrets_in_ns "$ns"
  # ConfigMaps
  scan_configmaps_in_ns "$ns"
done

echo "Done. Output -> $OUT"
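
The bundle-splitting step inside `parse_pem_file` is the least obvious part of the script, so here is a stand-alone sketch of the same idea that can be tried on any PEM bundle without touching the cluster. The helper name `split_pem_bundle` is hypothetical.

```bash
#!/usr/bin/env bash
# split_pem_bundle (hypothetical helper): write each certificate block found
# in bundle file $1 to <prefix $2>.1, <prefix>.2, ... and print how many
# blocks were found. Lines outside BEGIN/END markers are ignored.
split_pem_bundle() {
  local bundle="$1" prefix="$2"
  awk -v fbase="$prefix" '
    /-----BEGIN CERTIFICATE-----/ { inblk = 1; fn = sprintf("%s.%d", fbase, ++n) }
    inblk { print > fn }
    /-----END CERTIFICATE-----/ { if (inblk) close(fn); inblk = 0 }
    END { print n + 0 }
  ' "$bundle"
}

# Usage:
#   split_pem_bundle ca-bundle.crt /tmp/cert
#   openssl x509 -in /tmp/cert.1 -noout -enddate
```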

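At this point we have two TSV tables. The rest can be searched and filtered with something like Excel. Don't hand-roll a pile of code for it; that is a real waste of time.

I think the smarter approach is to load this table into a database, or put it on S3 and query it with Athena using SQL, which is faster. That way I only need to focus on the first table.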
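
Short of a database or Athena, the most common question (what expires soon?) can be answered with a short filter over the TSV. This is a sketch, assuming the column layout written by the script above, where DaysLeft is the 9th column; the helper name `expiring_within` is hypothetical.

```bash
#!/usr/bin/env bash
# expiring_within (hypothetical helper): print rows of the inventory TSV ($1)
# whose DaysLeft column (9th) is a number at or below the threshold ($2,
# default 90), soonest first. The header row and rows with <n/a> are skipped.
expiring_within() {
  local tsv="$1" max_days="${2:-90}"
  awk -F'\t' -v max="$max_days" \
    'NR > 1 && $9 ~ /^-?[0-9]+$/ && $9 + 0 <= max + 0' "$tsv" \
    | sort -t $'\t' -k9,9n
}

# Usage:
#   expiring_within ca_expiry_inventory.tsv 30
```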