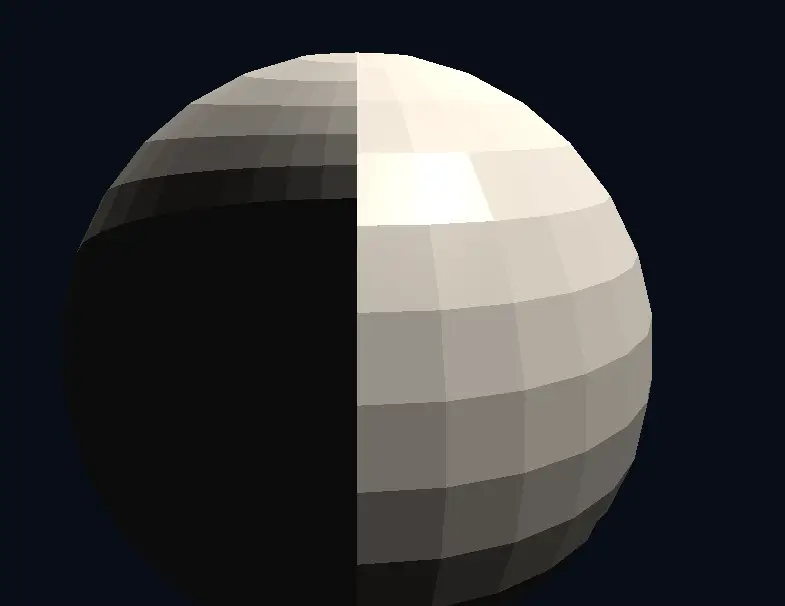

Today we upgrade yesterday's "triangle" to "load an .obj model + basic lighting (Blinn-Phong)".

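You will learn three things: (1) reading a model and putting it into a Vertex/Index Buffer, (2) using a UBO (Uniform Buffer) to send camera and light parameters to the shaders, and (3) binding the Pipeline/Descriptor inside a Render Pass and drawing.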

We keep using the traditional Render Pass + Framebuffer path (no dynamic rendering) and reuse what you built on Day 13: the swapchain, render pass, framebuffers, command buffers, and synchronization objects.

[Load .obj] → (vertices/normals/indices) → create Vertex/Index Buffer

│

[Create UBO + Descriptor] (camera, MVP, light)

│

[Create Graphics Pipeline] (with vertex input description)

│

Every frame:

Update UBO (camera/model rotation)

Record Cmd:

BeginRenderPass → bind Pipeline/Descriptor/Vertex/Index → vkCmdDrawIndexed → EndRenderPass

Submit → Present

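Prerequisites: GLM for math types such as mat4 and vec3, and tinyobjloader for reading .obj files. tinyobjloader is header-only, so dropping it in as external/tiny_obj_loader.h is enough. A note for beginners: a model with no materials and no UVs is fine, but today's lighting needs normals, so pick an .obj that already contains normals or one you can re-export with normals from a modeling tool.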

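We need "position + normal". To line up with Vulkan, define a Vertex struct on the C++ side and provide its description, which tells the pipeline the per-vertex stride and which layout(location) each field maps to.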

struct Vertex {

glm::vec3 pos; // position (location = 0)

glm::vec3 normal; // normal (location = 1)

};

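Intuition: tinyobj expands every face into its own index triples, so the same vertex can appear many times. We deduplicate on the unique (position index, normal index) pair and build vertices and indices from that.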

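#define TINYOBJLOADER_IMPLEMENTATION

#include "tiny_obj_loader.h"

#include <glm/glm.hpp>

#include <cstdint>

#include <stdexcept>

#include <string>

#include <unordered_map>

#include <vector>

// Read the .obj into deduplicated vertices / indices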

void loadModel(const std::string& path,

std::vector<Vertex>& outVertices,

std::vector<uint32_t>& outIndices)

{

tinyobj::ObjReader reader;

if(!reader.ParseFromFile(path)) throw std::runtime_error(reader.Error());

const auto& attrib = reader.GetAttrib();

const auto& shapes = reader.GetShapes();

struct Key { int vi, ni; }; // position index + normal index

struct KeyHash {

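// Pack both 32-bit indices into one 64-bit value first, so the shift is well-defined even where size_t is 32 bits

size_t operator()(Key const& k) const { return std::hash<uint64_t>{}(((uint64_t)(uint32_t)k.vi << 32) | (uint32_t)k.ni); }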

};

struct KeyEq {

bool operator()(Key const& a, Key const& b) const { return a.vi==b.vi && a.ni==b.ni; }

};

std::unordered_map<Key, uint32_t, KeyHash, KeyEq> cache;

for(const auto& s : shapes){

size_t index_offset = 0;

for(size_t f=0; f<s.mesh.num_face_vertices.size(); ++f){

int fv = s.mesh.num_face_vertices[f]; // 3 for ordinary triangles

for(int v=0; v<fv; ++v){

auto idx = s.mesh.indices[index_offset + v];

Key key{ idx.vertex_index, idx.normal_index };

auto it = cache.find(key);

if(it == cache.end()){

glm::vec3 p( attrib.vertices[3*idx.vertex_index+0],

attrib.vertices[3*idx.vertex_index+1],

attrib.vertices[3*idx.vertex_index+2] );

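if(idx.normal_index < 0) throw std::runtime_error("obj has no normals"); // this tutorial assumes normals are present

glm::vec3 n = glm::normalize( glm::vec3(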

attrib.normals[3*idx.normal_index+0],

attrib.normals[3*idx.normal_index+1],

attrib.normals[3*idx.normal_index+2]) );

uint32_t newIndex = (uint32_t)outVertices.size();

outVertices.push_back({p, n});

cache[key] = newIndex;

outIndices.push_back(newIndex);

}else{

outIndices.push_back(it->second);

}

}

index_offset += fv;

}

}

}

To keep things simple, we assume the .obj contains normals. If it does not, recompute and re-export them in a DCC tool (Blender, Maya).
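If re-exporting is not convenient, normals can also be generated in code. The following is a minimal sketch, not part of the original loader: it assumes the mesh is indexed by position only, and `Vec3` and `computeSmoothNormals` are hypothetical names standing in for glm::vec3 and your own helper. It accumulates unnormalized face normals per vertex (so larger triangles weigh more) and normalizes at the end.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };  // stand-in for glm::vec3

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Hypothetical helper: one smooth normal per position, area-weighted.
std::vector<Vec3> computeSmoothNormals(const std::vector<Vec3>& positions,
                                       const std::vector<uint32_t>& indices)
{
    assert(indices.size() % 3 == 0);  // triangle list expected
    std::vector<Vec3> normals(positions.size(), Vec3{0.0f, 0.0f, 0.0f});

    for (std::size_t i = 0; i < indices.size(); i += 3) {
        uint32_t a = indices[i], b = indices[i + 1], c = indices[i + 2];
        // The cross product's length is twice the triangle area,
        // so summing it unnormalized gives an area-weighted average.
        Vec3 fn = cross(sub(positions[b], positions[a]), sub(positions[c], positions[a]));
        for (uint32_t v : {a, b, c}) {
            normals[v].x += fn.x;
            normals[v].y += fn.y;
            normals[v].z += fn.z;
        }
    }

    for (Vec3& n : normals) {
        float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
        if (len > 0.0f) { n.x /= len; n.y /= len; n.z /= len; }  // leave degenerate ones at zero
    }
    return normals;
}
```

With normals produced this way there is one normal per position, so the deduplication key could shrink to the position index alone. Hard edges (a cube, for example) will look rounded, which is the usual trade-off of smooth normals.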

For the tutorial version we use host-visible memory (simple, but not optimal). Later you can switch to "Staging → Device Local".

uint32_t findMemoryType(uint32_t typeFilter, VkMemoryPropertyFlags props,

VkPhysicalDevice phys)

{

VkPhysicalDeviceMemoryProperties mp; vkGetPhysicalDeviceMemoryProperties(phys, &mp);

for(uint32_t i=0;i<mp.memoryTypeCount;i++){

if(typeFilter & (1u<<i)){

if((mp.memoryTypes[i].propertyFlags & props)==props) return i;

}

}

throw std::runtime_error("no memtype");

}

struct Buffer {

VkBuffer buf{};

VkDeviceMemory mem{};

size_t size{};

};

void createBuffer(VkDevice device, VkPhysicalDevice phys,

VkDeviceSize size, VkBufferUsageFlags usage,

VkMemoryPropertyFlags props, Buffer& out)

{

VkBufferCreateInfo bi{VK_STRUCTURE_TYPE_BUFFER_CREATE_INFO};

bi.size = size;

bi.usage = usage;

bi.sharingMode = VK_SHARING_MODE_EXCLUSIVE;

VK_CHECK(vkCreateBuffer(device, &bi, nullptr, &out.buf));

VkMemoryRequirements req; vkGetBufferMemoryRequirements(device, out.buf, &req);

VkMemoryAllocateInfo ai{VK_STRUCTURE_TYPE_MEMORY_ALLOCATE_INFO};

ai.allocationSize = req.size;

ai.memoryTypeIndex = findMemoryType(req.memoryTypeBits, props, phys);

VK_CHECK(vkAllocateMemory(device, &ai, nullptr, &out.mem));

VK_CHECK(vkBindBufferMemory(device, out.buf, out.mem, 0));

out.size = (size_t)size;

}
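The selection loop in findMemoryType is easy to try out without a GPU. The sketch below is an illustration under assumptions: `pickMemoryType` and the `k...` constants are hypothetical names, the constants mirror the numeric values of the corresponding VK_MEMORY_PROPERTY_* bits, and the memory types are a made-up table rather than anything queried from a device.

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Stand-ins mirroring VK_MEMORY_PROPERTY_DEVICE_LOCAL_BIT / HOST_VISIBLE_BIT / HOST_COHERENT_BIT.
constexpr uint32_t kDeviceLocal  = 0x1;
constexpr uint32_t kHostVisible  = 0x2;
constexpr uint32_t kHostCoherent = 0x4;

// typeFilter: bit i set means memory type i is allowed for this buffer
//             (this is what VkMemoryRequirements::memoryTypeBits encodes).
// required:   property flags that the chosen type must all have.
// typeFlags:  property flags of each memory type, indexed by type.
uint32_t pickMemoryType(uint32_t typeFilter, uint32_t required,
                        const std::vector<uint32_t>& typeFlags)
{
    assert(typeFlags.size() <= 32);
    for (uint32_t i = 0; i < typeFlags.size(); ++i) {
        bool allowed = (typeFilter & (1u << i)) != 0;
        bool hasAll  = (typeFlags[i] & required) == required;
        if (allowed && hasAll) return i;  // first match wins, as in findMemoryType
    }
    throw std::runtime_error("no suitable memory type");
}
```

Note that the comparison is `== required`, not `!= 0`: a type that is host-visible but not host-coherent must be rejected when both flags are requested.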

Create and fill:

std::vector<Vertex> vertices;

std::vector<uint32_t> indices;

Buffer vbo, ibo;

void createMeshBuffers(){

loadModel("assets/monkey.obj", vertices, indices);

VkDeviceSize vbSize = sizeof(Vertex) * vertices.size();

VkDeviceSize ibSize = sizeof(uint32_t) * indices.size();

createBuffer(device, phys, vbSize,

VK_BUFFER_USAGE_VERTEX_BUFFER_BIT,

VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT|VK_MEMORY_PROPERTY_HOST_COHERENT_BIT, vbo);

createBuffer(device, phys, ibSize,

VK_BUFFER_USAGE_INDEX_BUFFER_BIT,

VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT|VK_MEMORY_PROPERTY_HOST_COHERENT_BIT, ibo);

// Map and copy directly (tutorial version; for production, stage into device-local memory)

void* p; VK_CHECK(vkMapMemory(device, vbo.mem, 0, vbSize, 0, &p)); memcpy(p, vertices.data(), (size_t)vbSize); vkUnmapMemory(device, vbo.mem);

VK_CHECK(vkMapMemory(device, ibo.mem, 0, ibSize, 0, &p)); memcpy(p, indices.data(), (size_t)ibSize); vkUnmapMemory(device, ibo.mem);

}

To stay clear of std140 alignment pitfalls, every member is a vec4 or mat4:

struct UBO {

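glm::mat4 mvp; // projection * view * model

glm::mat4 model; // world transform (also the basis for the normal matrix)

glm::mat4 normalMat; // multiply with (normal, 0), then take xyz

glm::vec4 lightDir; // world-space direction (pointing at the object); w is ignored

glm::vec4 lightColor; // light color

glm::vec4 cameraPos; // world-space camera position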

};

Buffer ubo;

VkDescriptorSetLayout dsl;

VkDescriptorPool dsp;

VkDescriptorSet dset;

void createUBOandDescriptor(){

createBuffer(device, phys, sizeof(UBO),

VK_BUFFER_USAGE_UNIFORM_BUFFER_BIT,

VK_MEMORY_PROPERTY_HOST_VISIBLE_BIT|VK_MEMORY_PROPERTY_HOST_COHERENT_BIT, ubo);

// Layout: set=0, binding=0 → uniform buffer

VkDescriptorSetLayoutBinding b{};

b.binding = 0; b.descriptorType = VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER;

b.descriptorCount = 1; b.stageFlags = VK_SHADER_STAGE_VERTEX_BIT | VK_SHADER_STAGE_FRAGMENT_BIT;

VkDescriptorSetLayoutCreateInfo li{VK_STRUCTURE_TYPE_DESCRIPTOR_SET_LAYOUT_CREATE_INFO};

li.bindingCount = 1; li.pBindings = &b;

VK_CHECK(vkCreateDescriptorSetLayout(device, &li, nullptr, &dsl));

// Pool

VkDescriptorPoolSize ps{VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER, 1};

VkDescriptorPoolCreateInfo pi{VK_STRUCTURE_TYPE_DESCRIPTOR_POOL_CREATE_INFO};

pi.maxSets = 1; pi.poolSizeCount = 1; pi.pPoolSizes = &ps;

VK_CHECK(vkCreateDescriptorPool(device, &pi, nullptr, &dsp));

// Allocate + Write

VkDescriptorSetAllocateInfo ai{VK_STRUCTURE_TYPE_DESCRIPTOR_SET_ALLOCATE_INFO};

ai.descriptorPool = dsp; ai.descriptorSetCount=1; ai.pSetLayouts=&dsl;

VK_CHECK(vkAllocateDescriptorSets(device, &ai, &dset));

VkDescriptorBufferInfo dbi{ ubo.buf, 0, sizeof(UBO) };

VkWriteDescriptorSet w{VK_STRUCTURE_TYPE_WRITE_DESCRIPTOR_SET};

w.dstSet = dset; w.dstBinding=0; w.descriptorCount=1;

w.descriptorType = VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER;

w.pBufferInfo = &dbi;

vkUpdateDescriptorSets(device, 1, &w, 0, nullptr);

}
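Why stick to vec4/mat4 in the UBO struct? The sketch below illustrates the idea with plain float arrays standing in for the GLM types (all struct names here are hypothetical). It checks at compile time that a vec4/mat4-only struct has the offsets std140 expects, and shows how two consecutive vec3 members would disagree: C++ packs the second one at byte 12, while std140 aligns a vec3 to 16 bytes.

```cpp
#include <cassert>
#include <cstddef>

struct Vec4 { float v[4]; };   // 16 bytes, same as a std140 vec4
struct Mat4 { float m[16]; };  // 64 bytes, four vec4 columns

// Mirror of the tutorial's UBO: only 16-byte-multiple members, so no hidden padding.
struct UboMirror {
    Mat4 mvp;
    Mat4 model;
    Mat4 normalMat;
    Vec4 lightDir;
    Vec4 lightColor;
    Vec4 cameraPos;
};
static_assert(sizeof(UboMirror) == 240, "3 * 64 + 3 * 16 bytes");
static_assert(offsetof(UboMirror, lightDir) == 192, "starts right after the three matrices");
static_assert(offsetof(UboMirror, cameraPos) == 224, "last vec4");

// The pitfall: tightly packed vec3s on the C++ side.
struct Vec3 { float v[3]; };
struct BadLights { Vec3 lightDir; Vec3 lightColor; };  // lightColor lands at byte 12

// One possible fix: force 16-byte alignment so the C++ offsets match std140.
struct alignas(16) PaddedVec3 { float v[3]; };
struct FixedLights { PaddedVec3 lightDir; PaddedVec3 lightColor; };  // lightColor at byte 16

static_assert(offsetof(BadLights, lightColor) == 12, "C++ packing, not what std140 expects");
static_assert(offsetof(FixedLights, lightColor) == 16, "matches std140's vec3 alignment");
```

A mat3 has the same problem in a different shape: std140 stores it as three vec4-strided columns (48 bytes), while nine packed floats take 36 bytes. Using vec4/mat4 everywhere costs a few bytes and removes the whole class of bugs.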

shaders/model.vert

#version 450

layout(location=0) in vec3 inPos;

layout(location=1) in vec3 inNormal;

layout(location=0) out vec3 vNormalWS;

layout(location=1) out vec3 vPosWS;

layout(set=0, binding=0, std140) uniform UBO {

mat4 mvp;

mat4 model;

mat4 normalMat;

vec4 lightDir; // world dir (toward surface)

vec4 lightColor;

vec4 cameraPos;

} ubo;

void main(){

vec4 posWS = ubo.model * vec4(inPos,1.0);

vPosWS = posWS.xyz;

vNormalWS = normalize( (ubo.normalMat * vec4(inNormal,0.0)).xyz );

gl_Position = ubo.mvp * vec4(inPos,1.0);

}

shaders/model.frag

#version 450

layout(location=0) in vec3 vNormalWS;

layout(location=1) in vec3 vPosWS;

layout(location=0) out vec4 outColor;

layout(set=0, binding=0, std140) uniform UBO {

mat4 mvp;

mat4 model;

mat4 normalMat;

vec4 lightDir;

vec4 lightColor;

vec4 cameraPos;

} u;

void main(){

vec3 N = normalize(vNormalWS);

vec3 L = normalize(-u.lightDir.xyz); // lightDir points at the surface; L must point from the surface toward the light

vec3 V = normalize(u.cameraPos.xyz - vPosWS);

vec3 H = normalize(L + V);

float NdotL = max(dot(N, L), 0.0);

// Blinn-Phong: ambient + diffuse + specular

vec3 ambient = 0.05 * u.lightColor.rgb;

vec3 diffuse = u.lightColor.rgb * NdotL;

float specPow = 64.0;

float spec = pow(max(dot(N, H), 0.0), specPow);

vec3 specular = 0.3 * spec * u.lightColor.rgb;

vec3 color = ambient + diffuse + specular;

outColor = vec4(color, 1.0);

}

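All lighting is computed in world space: the VS outputs the world-space position and normal, and the FS combines them with the world-space light direction and camera position to evaluate Blinn-Phong.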
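A CPU-side reference of the same formula can help when debugging shader output. This is a sketch under assumptions: `Vec3` and `blinnPhong` are hypothetical names, the light is treated as white so the result is a single intensity, and the constants (0.05 ambient, 0.3 specular weight, exponent 64) are the ones from the fragment shader. L is taken as the direction from the surface toward the light, i.e. the negation of a light direction that points at the object.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };  // stand-in for glm::vec3

static Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 normalize(Vec3 a) {
    float len = std::sqrt(dot(a, a));
    return len > 0.0f ? Vec3{a.x / len, a.y / len, a.z / len} : a;  // guard against zero length
}

// lightDir: world-space direction the light travels in (pointing at the object).
// Returns ambient + diffuse + specular intensity for a white light.
float blinnPhong(Vec3 normal, Vec3 lightDir, Vec3 cameraPos, Vec3 posWS)
{
    assert(dot(normal, normal) > 0.0f);
    Vec3 N = normalize(normal);
    Vec3 L = normalize(Vec3{-lightDir.x, -lightDir.y, -lightDir.z});  // surface → light
    Vec3 V = normalize(sub(cameraPos, posWS));                        // surface → camera
    Vec3 H = normalize(add(L, V));                                    // half vector

    float NdotL = std::max(dot(N, L), 0.0f);
    float spec  = std::pow(std::max(dot(N, H), 0.0f), 64.0f);
    return 0.05f + NdotL + 0.3f * spec;
}
```

A floor facing up, lit from straight above and viewed from straight above, gets the maximum of all three terms; the same floor lit from below keeps only the ambient term.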

Note on normalMat: it is the inverse transpose of the upper-left 3×3 of model, passed as a mat4 to sidestep std140 alignment pitfalls.
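Why the inverse transpose rather than model itself? With a pure rotation the two coincide, but under non-uniform scale a normal transformed by model stops being perpendicular to the surface. The sketch below is a small stand-alone check with hypothetical `Mat3`/`Vec3` types (row-major here, unlike GLM); it uses the identity that the inverse transpose equals the cofactor matrix divided by the determinant.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Mat3 { float m[3][3]; };  // row-major: m[row][col]

static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

static Vec3 mul(const Mat3& a, Vec3 v) {
    return { a.m[0][0] * v.x + a.m[0][1] * v.y + a.m[0][2] * v.z,
             a.m[1][0] * v.x + a.m[1][1] * v.y + a.m[1][2] * v.z,
             a.m[2][0] * v.x + a.m[2][1] * v.y + a.m[2][2] * v.z };
}

// inverse(A)^T == cofactor(A) / det(A)
Mat3 inverseTranspose(const Mat3& a)
{
    const auto& m = a.m;
    Mat3 c;
    c.m[0][0] = m[1][1] * m[2][2] - m[1][2] * m[2][1];
    c.m[0][1] = m[1][2] * m[2][0] - m[1][0] * m[2][2];
    c.m[0][2] = m[1][0] * m[2][1] - m[1][1] * m[2][0];
    c.m[1][0] = m[0][2] * m[2][1] - m[0][1] * m[2][2];
    c.m[1][1] = m[0][0] * m[2][2] - m[0][2] * m[2][0];
    c.m[1][2] = m[0][1] * m[2][0] - m[0][0] * m[2][1];
    c.m[2][0] = m[0][1] * m[1][2] - m[0][2] * m[1][1];
    c.m[2][1] = m[0][2] * m[1][0] - m[0][0] * m[1][2];
    c.m[2][2] = m[0][0] * m[1][1] - m[0][1] * m[1][0];

    // Expand the determinant along the first row using the cofactors just computed.
    float det = m[0][0] * c.m[0][0] + m[0][1] * c.m[0][1] + m[0][2] * c.m[0][2];
    assert(det != 0.0f);  // a singular model matrix has no normal matrix
    for (int r = 0; r < 3; ++r)
        for (int k = 0; k < 3; ++k)
            c.m[r][k] /= det;
    return c;
}
```

For example, with a scale of (2, 1, 1), a surface whose tangent is (1, -1, 0) and normal is (1, 1, 0) ends up with tangent (2, -1, 0). Multiplying the normal by the model matrix gives (2, 1, 0), which is no longer perpendicular to the tangent; the inverse transpose gives (0.5, 1, 0), which is.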

Building on Day 13's createPipelineLayout() / createGraphicsPipeline(), add two things: include dsl in the pipeline layout, and describe the vertex input.

void createPipelineLayout(){

VkPipelineLayoutCreateInfo ci{VK_STRUCTURE_TYPE_PIPELINE_LAYOUT_CREATE_INFO};

ci.setLayoutCount = 1;

ci.pSetLayouts = &dsl; // ★ makes set=0 available

VK_CHECK(vkCreatePipelineLayout(device, &ci, nullptr, &pipelineLayout));

}

// binding: per-vertex stride

VkVertexInputBindingDescription bind{};

bind.binding = 0;

bind.stride = sizeof(Vertex);

bind.inputRate = VK_VERTEX_INPUT_RATE_VERTEX;

// attributes: locations map to pos/normal

std::array<VkVertexInputAttributeDescription, 2> attrs{};

attrs[0] = { 0, 0, VK_FORMAT_R32G32B32_SFLOAT, offsetof(Vertex, pos) };

attrs[1] = { 1, 0, VK_FORMAT_R32G32B32_SFLOAT, offsetof(Vertex, normal) };

VkPipelineVertexInputStateCreateInfo vi{VK_STRUCTURE_TYPE_PIPELINE_VERTEX_INPUT_STATE_CREATE_INFO};

vi.vertexBindingDescriptionCount = 1; vi.pVertexBindingDescriptions = &bind;

vi.vertexAttributeDescriptionCount= 2; vi.pVertexAttributeDescriptions = attrs.data();

Plug this vi back into Day 13's pipeline create info with gp.pVertexInputState = &vi;. Everything else (IA, Viewport, Raster, Blend, renderPass, subpass) stays as it was.
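Mismatched offsets or strides are a common reason for garbage geometry, and they can be caught at compile time. The following is a minimal sketch: `Vec3` stands in for glm::vec3, and `AttrDesc` and `attributesFit` are hypothetical helpers that only mirror the location/binding/offset idea of VkVertexInputAttributeDescription, not the real Vulkan struct.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

struct Vec3 { float x, y, z; };            // stand-in for glm::vec3: three packed floats
struct Vertex { Vec3 pos; Vec3 normal; };  // same layout as the tutorial's Vertex

// VK_FORMAT_R32G32B32_SFLOAT reads 12 bytes per attribute, so each field must be exactly that.
static_assert(sizeof(Vec3) == 12, "three 32-bit floats");
static_assert(offsetof(Vertex, pos) == 0, "location 0 starts the vertex");
static_assert(offsetof(Vertex, normal) == 12, "location 1 follows pos with no padding");
static_assert(sizeof(Vertex) == 24, "stride passed to the binding description");

// Hypothetical mirror of one attribute description plus the byte size its format reads.
struct AttrDesc { uint32_t location; uint32_t binding; uint32_t offset; uint32_t sizeBytes; };

constexpr AttrDesc kAttrs[2] = {
    {0, 0, static_cast<uint32_t>(offsetof(Vertex, pos)),    static_cast<uint32_t>(sizeof(Vec3))},
    {1, 0, static_cast<uint32_t>(offsetof(Vertex, normal)), static_cast<uint32_t>(sizeof(Vec3))},
};

// True when every attribute lies completely inside one vertex of the given stride.
bool attributesFit(const AttrDesc* attrs, std::size_t count, std::size_t stride)
{
    assert(attrs != nullptr);
    for (std::size_t i = 0; i < count; ++i) {
        if (static_cast<std::size_t>(attrs[i].offset) + attrs[i].sizeBytes > stride) return false;
    }
    return true;
}
```

If you later add a field such as a UV coordinate, the static_asserts fail loudly until the stride and the attribute list are updated together.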

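We use GLM for a simple camera and projection. Remember that the Vulkan projection needs its Y flipped (proj[1][1] *= -1.f). GLM also defaults to OpenGL's [-1, 1] clip-space depth; defining GLM_FORCE_DEPTH_ZERO_TO_ONE before including GLM switches it to Vulkan's [0, 1] range.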

void updateUBO(float timeSec)

{

glm::vec3 eye = { 0.0f, 0.8f, 2.2f };

glm::vec3 center= { 0.0f, 0.3f, 0.0f };

glm::vec3 up = { 0.0f, 1.0f, 0.0f };

glm::mat4 model = glm::rotate(glm::mat4(1.0f), timeSec*0.7f, glm::vec3(0,1,0));

glm::mat4 view = glm::lookAt(eye, center, up);

glm::mat4 proj = glm::perspective(glm::radians(60.0f),

swapExtent.width/(float)swapExtent.height, 0.1f, 100.0f);

proj[1][1] *= -1.0f; // flip Y for Vulkan NDC

glm::mat3 n3 = glm::transpose( glm::inverse( glm::mat3(model) ) );

glm::mat4 normalMat = glm::mat4( glm::vec4(n3[0],0), glm::vec4(n3[1],0),

glm::vec4(n3[2],0), glm::vec4(0,0,0,1) );

UBO data{};

data.mvp = proj * view * model;

data.model = model;

data.normalMat = normalMat;

data.lightDir = glm::vec4(glm::normalize(glm::vec3(-0.4f, -1.0f, -0.3f)), 0.0f);

data.lightColor= glm::vec4(1.0f, 0.96f, 0.9f, 1.0f);

data.cameraPos = glm::vec4(eye, 1.0f);

void* p=nullptr;

vkMapMemory(device, ubo.mem, 0, sizeof(UBO), 0, &p);

memcpy(p, &data, sizeof(UBO));

vkUnmapMemory(device, ubo.mem);

}

Call updateUBO(time) in the main loop right before drawFrame().
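The effect of the Y flip can be verified with a few lines of plain math. This sketch is an illustration, not the tutorial's code: `Mat4`, `Vec4`, `perspectiveRhZo`, and `ndc` are hypothetical names, and the matrix is a hand-written right-handed perspective with depth mapped to [0, 1], stored column-major like GLM.

```cpp
#include <cassert>
#include <cmath>

struct Vec4 { float x, y, z, w; };
struct Mat4 { float m[4][4]; };  // column-major like GLM: m[col][row]

// Right-handed perspective, depth mapped to [0, 1].
Mat4 perspectiveRhZo(float fovyRad, float aspect, float zNear, float zFar)
{
    assert(aspect > 0.0f && zNear > 0.0f && zFar > zNear);
    float t = std::tan(fovyRad * 0.5f);
    Mat4 p{};  // all zeros
    p.m[0][0] = 1.0f / (aspect * t);
    p.m[1][1] = 1.0f / t;
    p.m[2][2] = zFar / (zNear - zFar);
    p.m[2][3] = -1.0f;
    p.m[3][2] = -(zFar * zNear) / (zFar - zNear);
    return p;
}

static Vec4 mul(const Mat4& a, Vec4 v)
{
    float in[4]  = {v.x, v.y, v.z, v.w};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[r] += a.m[c][r] * in[c];
    return {out[0], out[1], out[2], out[3]};
}

// Project a view-space point and apply the perspective divide.
Vec4 ndc(const Mat4& proj, Vec4 viewPos)
{
    Vec4 c = mul(proj, viewPos);
    return {c.x / c.w, c.y / c.w, c.z / c.w, 1.0f};
}
```

A point above the camera axis, such as (0, 1, -2), comes out with a positive NDC y. In Vulkan positive y points down the screen, so without the flip the model renders upside down; after `m[1][1] *= -1` the same point gets a negative y and lands in the upper half.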

Change Day 13's recordCmd(imageIndex) to the following (key additions are marked with ★):

void recordCmd(uint32_t imageIndex) {

VkCommandBuffer cmd = cmdBufs[imageIndex];

VkCommandBufferBeginInfo bi{VK_STRUCTURE_TYPE_COMMAND_BUFFER_BEGIN_INFO};

VK_CHECK(vkBeginCommandBuffer(cmd, &bi));

VkClearValue clear; clear.color = {{0.03f, 0.05f, 0.09f, 1.0f}};

VkRenderPassBeginInfo rbi{VK_STRUCTURE_TYPE_RENDER_PASS_BEGIN_INFO};

rbi.renderPass = renderPass;

rbi.framebuffer= framebuffers[imageIndex];

rbi.renderArea = {{0,0}, swapExtent};

rbi.clearValueCount = 1; rbi.pClearValues = &clear;

vkCmdBeginRenderPass(cmd, &rbi, VK_SUBPASS_CONTENTS_INLINE);

vkCmdBindPipeline(cmd, VK_PIPELINE_BIND_POINT_GRAPHICS, pipeline);

// ★ bind the descriptor set (UBO)

vkCmdBindDescriptorSets(cmd, VK_PIPELINE_BIND_POINT_GRAPHICS,

pipelineLayout, 0, 1, &dset, 0, nullptr);

// ★ bind the vertex/index buffers

VkDeviceSize offs=0;

vkCmdBindVertexBuffers(cmd, 0, 1, &vbo.buf, &offs);

vkCmdBindIndexBuffer(cmd, ibo.buf, 0, VK_INDEX_TYPE_UINT32);

vkCmdDrawIndexed(cmd, (uint32_t)indices.size(), 1, 0, 0, 0);

vkCmdEndRenderPass(cmd);

VK_CHECK(vkEndCommandBuffer(cmd));

}

(There is no depth buffer yet, so triangles are drawn in submission order and can overlap incorrectly.)

Create (one-time):

createMeshBuffers()

createUBOandDescriptor()

createPipelineLayout() (needs dsl)

createGraphicsPipeline() (vertex input already configured)

Swapchain rebuild (window resize):

Cleanup: call vkDestroy* in the reverse order of creation (pipeline → layout → descriptor pool/layout → buffers…).
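One way to keep the reverse order from drifting as the project grows is a small deletion queue. This is a sketch of a common pattern, not something the original code uses; `DeletionQueue` is a hypothetical helper and not part of Vulkan. Each creation step registers its own cleanup, and flush() runs them last-in, first-out.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical helper: collects cleanup callbacks and runs them in reverse order.
class DeletionQueue {
public:
    // Register a cleanup step right after the matching create call succeeds.
    void push(std::function<void()> fn) {
        assert(fn != nullptr);
        deleters_.push_back(std::move(fn));
    }

    // Run every registered step, newest first, then forget them.
    void flush() {
        for (auto it = deleters_.rbegin(); it != deleters_.rend(); ++it) {
            (*it)();
        }
        deleters_.clear();
    }

private:
    std::vector<std::function<void()>> deleters_;
};
```

In this tutorial's code the registrations would sit next to the create calls, for example `queue.push([=]{ vkDestroyPipeline(device, pipeline, nullptr); });` right after the pipeline is created, and a single `queue.flush()` at shutdown (after vkDeviceWaitIdle) then tears everything down in the right order.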

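All black, or only the clear color shows:

- vkCmdBindDescriptorSets was not called, so the FS/VS cannot reach the UBO.

- Check that each Vertex attribute's location, format, and offset match.

Validation Layers complain about UBO alignment:

- Use mat4/vec4; do not put a mat3 directly into std140.

Model looks skewed, or the lighting angle looks wrong:

- normalMat must be the inverse transpose of model, and the VS should use (normalMat * vec4(n,0)).xyz.

- Check proj[1][1] *= -1.f.

Front faces are not visible:

- Try cullMode = NONE, or switch frontFace.

Window resized → the picture freezes:

- On VK_ERROR_OUT_OF_DATE_KHR or VK_SUBOPTIMAL_KHR, run the full swapchain rebuild flow.

Possible next steps:

- Add a vec4 baseColor to the UBO and multiply by it in the FS.

- Turn lightDir/lightColor into arrays (or an SSBO) and loop over them in the FS (watch the performance).

- Add a combined image sampler descriptor and sample with texture(...) in the FS (this needs a sampler, an image view, layout transitions, and so on; a later chapter covers it).

The recipe for getting a lit model on screen is always the same: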

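"Load model → Vertex/Index Buffer" + "UBO (camera/light) + Descriptor" + "Pipeline (with vertex input)" → bind everything inside the Render Pass and draw.

Today you went from a triangle to a real model with lighting. From here you can add textures, normal maps, and even PBR, and keep growing your small Vulkan engine step by step!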

Full code: [https://pastecode.io/s/zi2nfs0j]