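Convert the 10 prepared FAQ sentences into vectors

Use sentence-transformers (the SBERT family) to build vector representations of the sentences/passages.

Save the vectors as NumPy (`.npy`) files.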

```python
from sentence_transformers import SentenceTransformer
import numpy as np

# Pick a model (small, supports Chinese, fast): paraphrase-multilingual-MiniLM-L12-v2 (384-d)
MODEL_NAME = "paraphrase-multilingual-MiniLM-L12-v2"
embedder = SentenceTransformer(MODEL_NAME)

# Choose which field to embed (question / answer / question+answer).
# df is the FAQ DataFrame from the previous step (columns: id, question, answer).
texts = df["question"].astype(str).tolist()

# Generate the embeddings
embeddings = embedder.encode(
    texts,                       # list[str]: the sentences to convert into embeddings
    show_progress_bar=True,
    batch_size=32,
    convert_to_numpy=True,       # return a numpy array (easy to save)
    normalize_embeddings=False,  # if True, encode L2-normalizes the output directly
)

# Cast to float32, then check shape / dtype
embeddings = embeddings.astype("float32")
```

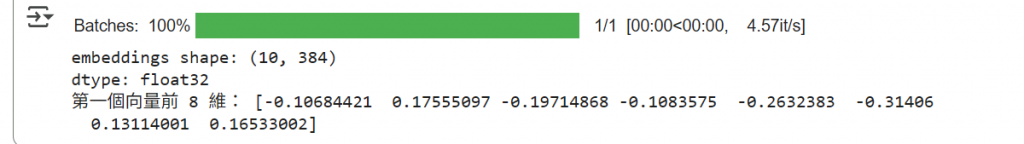

```python
print("embeddings shape:", embeddings.shape)
print("dtype:", embeddings.dtype)
print("First 8 dims of the first vector:", embeddings[0][:8])
```

Result:

```python
# Compare: question-only vs question+answer
import torch
from sentence_transformers.util import cos_sim

texts_q = df["question"].astype(str).tolist()
texts_qa = (df["question"].astype(str) + " " + df["answer"].astype(str)).tolist()

emb_q = embedder.encode(texts_q, convert_to_numpy=True).astype("float32")
emb_qa = embedder.encode(texts_qa, convert_to_numpy=True).astype("float32")

print("question emb shape:", emb_q.shape)
print("question+answer emb shape:", emb_qa.shape)

# Cosine similarity between each entry's question and its own question+answer text
sim = cos_sim(torch.from_numpy(emb_q), torch.from_numpy(emb_qa)).diag()  # the diagonal pairs each entry with itself
print("question vs question+answer (diagonal similarity), first 10:", sim[:10])
```

Result:

```
question emb shape: (10, 384)
question+answer emb shape: (10, 384)
question vs question+answer (diagonal similarity), first 10: tensor([0.5858, 0.7163, 0.8482, 0.8058, 0.8331, 0.8145, 0.5490, 0.5493, 0.8589, 0.6089])
```
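The `cos_sim(...).diag()` call builds the full N x N similarity matrix and then keeps only its diagonal. The minimal NumPy-only sketch below computes the same per-entry comparison directly. It also shows what L2 normalization (the `normalize_embeddings=True` option) amounts to: once every row has unit length, cosine similarity is just a dot product. The helper names `l2_normalize` and `rowwise_cosine` are hypothetical. Random vectors (`demo_q`, `demo_qa`) stand in for `emb_q` / `emb_qa` so the snippet runs on its own.

```python
import numpy as np

def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each row to unit L2 norm (eps guards against division by zero)."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)

def rowwise_cosine(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Cosine similarity of a[i] with b[i] for every i, without building an N x N matrix."""
    # After normalization, the row-wise dot product equals cosine similarity.
    return np.sum(l2_normalize(a) * l2_normalize(b), axis=1)

# Stand-in data (hypothetical): same shape as emb_q / emb_qa, i.e. (10, 384).
rng = np.random.default_rng(0)
demo_q = rng.standard_normal((10, 384)).astype("float32")
demo_qa = rng.standard_normal((10, 384)).astype("float32")

sim_np = rowwise_cosine(demo_q, demo_qa)

# Cross-check against the diagonal of the full cosine matrix.
full = l2_normalize(demo_q) @ l2_normalize(demo_qa).T
assert np.allclose(sim_np, np.diag(full), atol=1e-5)
print(sim_np.shape)  # (10,)
```

With the real embeddings, `rowwise_cosine(emb_q, emb_qa)` should agree with `sim` up to float32 rounding. For 10 entries the full matrix is cheap, but the row-wise form avoids computing N x N values when only matching pairs are needed.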

```python
import json
import numpy as np

# Save the numpy files
np.save("faq_question_embeddings.npy", embeddings)     # question-only embeddings
np.save("faq_question_answer_embeddings.npy", emb_qa)  # question+answer embeddings

# Save the mapping: row i of each .npy file belongs to ids[i]
ids = df["id"].astype(str).tolist()
with open("faq_ids.json", "w", encoding="utf-8") as f:
    json.dump(ids, f, ensure_ascii=False, indent=2)

print("Saved faq_question_embeddings.npy, faq_question_answer_embeddings.npy and faq_ids.json")
```

Result:
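Row *i* of each `.npy` file corresponds to `ids[i]` in `faq_ids.json`, so the saved files are enough to answer a query later. The sketch below assumes a brute-force cosine search over all rows, which is adequate for 10 FAQs. `load_faq_index` and `top_k` are hypothetical helpers. Random vectors with made-up ids, written to a temporary directory, stand in for the real files.

```python
import json
import os
import tempfile

import numpy as np

def load_faq_index(emb_path: str, ids_path: str):
    """Load the embedding matrix and the id list; row i belongs to ids[i]."""
    emb = np.load(emb_path).astype("float32")
    with open(ids_path, encoding="utf-8") as f:
        ids = json.load(f)
    if len(ids) != emb.shape[0]:
        raise ValueError(f"{len(ids)} ids but {emb.shape[0]} vectors")
    return emb, ids

def top_k(query_vec: np.ndarray, emb: np.ndarray, ids: list, k: int = 3):
    """Return the k (id, cosine similarity) pairs closest to query_vec, best first."""
    emb_n = emb / np.maximum(np.linalg.norm(emb, axis=1, keepdims=True), 1e-12)
    q_n = query_vec / max(np.linalg.norm(query_vec), 1e-12)
    scores = emb_n @ q_n
    order = np.argsort(-scores)[:k]
    return [(ids[i], float(scores[i])) for i in order]

# Stand-in files (hypothetical data), written to a temp dir so no real file is overwritten.
rng = np.random.default_rng(42)
demo_emb = rng.standard_normal((10, 384)).astype("float32")
demo_ids = [f"q{i}" for i in range(10)]
tmp = tempfile.mkdtemp()
np.save(os.path.join(tmp, "emb.npy"), demo_emb)
with open(os.path.join(tmp, "ids.json"), "w", encoding="utf-8") as f:
    json.dump(demo_ids, f)

emb, ids = load_faq_index(os.path.join(tmp, "emb.npy"), os.path.join(tmp, "ids.json"))
# Querying with a stored vector should rank that same entry first (similarity ~1.0).
print(top_k(emb[3], emb, ids, k=3))
```

With the real files, one would load `faq_question_embeddings.npy` and `faq_ids.json` instead. The user's query should be encoded with the same model, for example `embedder.encode("...", convert_to_numpy=True)`, which returns a single 1-D vector when given a single string.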