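Word2Vec is short for "Word to Vector": it converts each word into a vector so that the word's meaning is captured by that vector. In the early days of natural language processing, it was hard to make computers understand what lies behind a word. As a result, relationships between words were hard to uncover, such as synonyms, antonyms, and corresponding terms. That is why Word2Vec was so valuable when it appeared.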

When the algorithm first came out, its biggest selling point was:

As for how such a model is trained, the inner workings are too complex to cover here. If you are interested, you can read this post of mine.

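These models are usually trained on very large text corpora, so training one on your own computer could easily overwhelm it. Google therefore kindly released an already-trained model. Besides the model Google released in 2013, other pre-trained models are also available for you to download and play with.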

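Google's model is easy to use directly through the gensim package. For the product category classification below, however, I extracted only the words that are actually used into a smaller word2vec model, so that everyone can practice more quickly. The smaller model is easier to push to GitHub and also takes much less time to run.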
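The post does not show how the smaller model was extracted. Here is a minimal sketch of one way to do it. It assumes the full vectors are available as a plain Python dict mapping each word to a NumPy array. It writes the same "one word per line, followed by its space-separated vector" format that the loading code later in the post reads. `write_subset` is a hypothetical helper name, not part of gensim.

```python
import io
from typing import Iterable, Mapping, TextIO

import numpy as np


def write_subset(vectors: Mapping[str, np.ndarray], words: Iterable[str], out: TextIO) -> int:
    """Write the vectors of `words` found in `vectors` as 'word v1 v2 ...' lines.

    Words missing from `vectors` are skipped; returns the number of lines written.
    """
    written = 0
    for word in dict.fromkeys(words):  # de-duplicate while keeping the original order
        vec = vectors.get(word)
        if vec is None:  # word not in the big model: nothing to extract
            continue
        out.write(word + " " + " ".join(f"{x:.6f}" for x in vec) + "\n")
        written += 1
    return written


if __name__ == "__main__":
    demo = {"dog": np.array([0.1, 0.2]), "cat": np.array([0.3, 0.4])}
    buf = io.StringIO()
    print(write_subset(demo, ["dog", "fish", "dog"], buf))  # 1
    print(buf.getvalue(), end="")  # dog 0.100000 0.200000
```

With a gensim `KeyedVectors` object, you could build the input dict as `{w: model[w] for w in words if w in model}` and pass an open file in place of the `StringIO` buffer.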

```python
import gensim
import pandas as pd

# Load Google's pre-trained GoogleNews vectors (300 dimensions, binary format)
model = gensim.models.KeyedVectors.load_word2vec_format('D:\\word2vec\\GoogleNews-vectors-negative300.bin', binary=True)
```

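gensim implements word2vec's functionality very thoroughly. Besides looking up a word's vector and its most similar words, it can compute the similarity between vectors. It even implements WMDistance (Word Mover's Distance), a method derived from word2vec that measures how similar two sentences are. For lack of time, I only demonstrate here how to get word vectors and similar words.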
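Similarity between word vectors is usually measured with cosine similarity, and gensim's `similarity` and `most_similar` rank words that way. The brute-force sketch below makes that concrete over a plain dict of word vectors. The helper names are hypothetical. gensim's own implementation works on pre-normalized vectors in a single matrix product and is far faster, so treat this only as an illustration of the idea.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two vectors: dot product divided by the product of norms."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def most_similar(word, vectors, topn=10):
    """Rank every other word in `vectors` by cosine similarity to `word`, highest first."""
    target = vectors[word]  # raises KeyError if the word is unknown, like gensim does
    scores = [
        (other, cosine_similarity(target, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:topn]


# Example with toy 2-dimensional vectors:
# most_similar("king", {"king": np.array([1.0, 0.0]),
#                       "queen": np.array([0.9, 0.1]),
#                       "apple": np.array([0.0, 1.0])}, topn=2)
```

Cosine similarity ignores vector length and compares only direction. An all-zero vector has no direction, so this sketch would produce `nan` for it.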

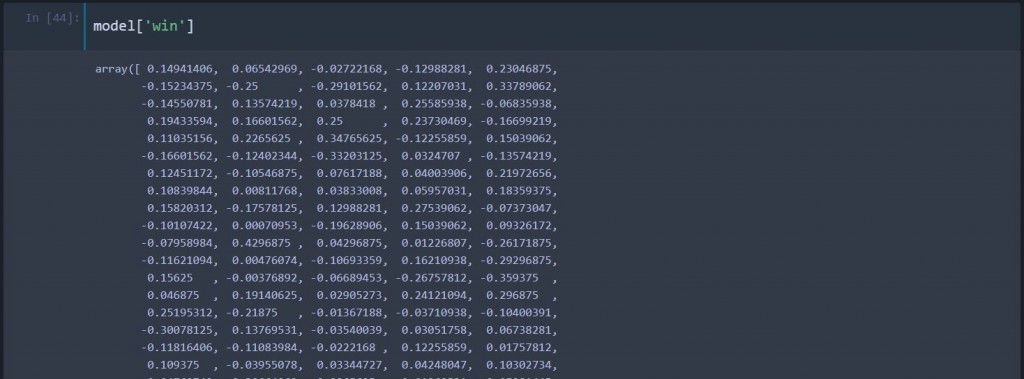

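```python
model['win']  # the 300-dimensional vector for "win"

terms = ['taipei', 'queen', 'teacher', 'taiwan', 'dumpling']  # the words we want to look up
df = {}
for t in terms:
    # Check that the word is in the training vocabulary; without this check most_similar raises a KeyError.
    # `t in model` works in both gensim 3.x and 4.x (model.vocab was removed in gensim 4.0).
    if t in model:
        # most_similar returns a list of (term, score) pairs (top 10 by default); keep only the terms
        df[t] = [term for term, score in model.most_similar(t)]
    else:
        print(t, ' not in vocab')

df = pd.DataFrame(df)
df
```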

|   | dumpling | queen | taiwan | teacher |
|---|---|---|---|---|
| 0 | dumplings | queens | asus | teachers |
| 1 | gyoza | princess | motorola | Teacher |
| 2 | beef_noodle_soup | king | hong_kong | guidance_counselor |
| 3 | steamed_bun | monarch | autodesk_autocad_architecture | elementary |
| 4 | steamed_buns | very_pampered_McElhatton | vpn | PE_teacher |
| 5 | noodles | Queen | beijing | schoolteacher |
| 6 | steamed_dumpling | NYC_anglophiles_aflutter | asia | school |
| 7 | noodle | Queen_Consort | india | pupil |
| 8 | baozi | princesses | singapore | student |
| 9 | manju | royal | ibm | paraprofessional |

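```python
import string
from collections import Counter

import nltk
import numpy as np
import pandas as pd
from nltk.corpus import stopwords
from sklearn.cluster import KMeans
from sklearn.cluster import AgglomerativeClustering
from sklearn.cluster import DBSCAN

stops = set(stopwords.words('english'))  # English stopwords (run nltk.download('stopwords') once first)
puns = string.punctuation  # ASCII punctuation characters

n_clusters = 30  # number of clusters for KMeans

# X is the matrix of category vectors. all_categories_vecs is only created by the
# doc2vec step further down, so run this line after that step.
X = all_categories_vecs
```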

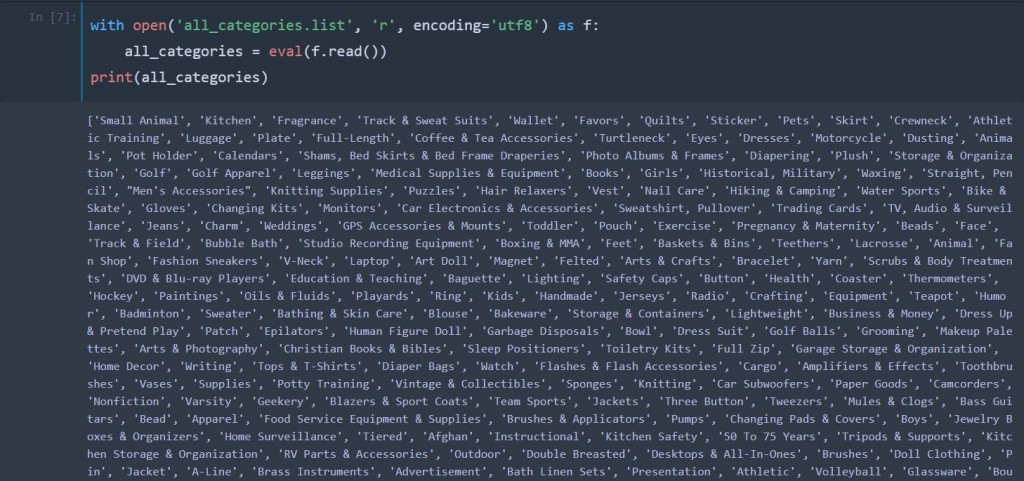

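```python
import ast

# all_categories.list stores the category names as a Python literal (a list of strings)
with open('all_categories.list', 'r', encoding='utf8') as f:
    all_categories = ast.literal_eval(f.read())  # safer than eval() for parsing a literal

print(all_categories)
```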

This smaller model is derived from the Twitter model among the other models mentioned above; I used its 50-dimensional vectors.

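```python
word_vec_mapping = {}
path = "word2vec.txt"

# File format: one word per line, followed by its vector, separated by spaces
with open(path, 'r', encoding='utf8') as f:
    for line in f:
        tokens = line.split()
        if not tokens:  # skip blank lines
            continue
        token = tokens[0]  # the first token is the word
        vec = tokens[1:]   # the remaining tokens are the vector components
        # Build a dict from word to vector so each word's vector can be looked up quickly
        word_vec_mapping[token] = np.array(vec, dtype=np.float32)

# Record the dimensionality of this model's vectors (all words share the same length)
vec_dimensions = len(next(iter(word_vec_mapping.values())))
```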

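Here I take a simple approach: add up the vectors of all the words in a category and average them, which gives the category's vector. This approach is also worth considering for documents when they are short, but once they get long the results become much worse.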
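The `doc2vec` function below calls a `tokenize` helper that this post never defines; it presumably lives in the project repository. Because `stops` and `puns` were set up earlier, it most likely strips stopwords and punctuation. The version below is a hypothetical stand-in built on that assumption. It also lowercases words, which assumes the `word2vec.txt` vocabulary is lowercase.

```python
import string
from typing import Iterable, List


def tokenize(text: str, stop_words: Iterable[str] = (), punctuation: str = string.punctuation) -> List[str]:
    """Hypothetical tokenizer: split a category name such as "Hiking & Camping" into words.

    Lowercases the text, turns every punctuation character into a space,
    splits on whitespace, and drops any word in `stop_words`.
    """
    stop = set(stop_words)
    table = str.maketrans({ch: " " for ch in punctuation})  # punctuation -> space
    words = text.lower().translate(table).split()
    return [w for w in words if w not in stop]
```

You may want to use the NLTK stopword set from earlier while keeping `doc2vec`'s one-argument call. To do that, give the parameter the default `stop_words=stops` in the notebook, or rebind the name with `tokenize = functools.partial(tokenize, stop_words=stops)`.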

```python
def doc2vec(doc, word2vec=word_vec_mapping):
    # Start from a zero vector; if none of the category's words are in the dictionary, it is returned as-is
    docvec = np.zeros(vec_dimensions)
    vec_count = 0
    if pd.notnull(doc):
        terms = tokenize(doc)  # split the category name into individual words
        for term in terms:
            termvec = word2vec.get(term)  # look up the word vector
            if termvec is not None:
                docvec += termvec  # add the word vector to the category vector
                vec_count += 1
    # Divide by the number of vectors that were added to get the average
    return docvec / vec_count if vec_count else docvec

# One row per category, shape (len(all_categories), vec_dimensions)
all_categories_vecs = np.vstack([doc2vec(c) for c in all_categories])
```

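I also implemented other methods in this project, but the post is already long, so I'm leaving them out. If you're interested, you can go to the project and take a look.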

```python
kmeans = KMeans(n_clusters=n_clusters, random_state=0)
all_categories_labels_kmeans = kmeans.fit_predict(X)

# One row per category, holding the cluster it was assigned to
df_cat = pd.DataFrame(all_categories_labels_kmeans, index=all_categories, columns=['label'])
for i in range(len(set(all_categories_labels_kmeans))):
    cats = list(df_cat[df_cat['label'] == i].index)
    print("cluster " + str(i) + ": ")
    print(cats)
    print("=============================================")
    print("=============================================")
```
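The loop above filters the DataFrame once per cluster. An alternative sketch builds every group in a single pass. `group_by_label` is a hypothetical helper, and it produces the same cluster memberships in the same order. In pandas, `df_cat.groupby('label').groups` gives a similar label-to-index mapping.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def group_by_label(names: Sequence[str], labels: Sequence[Hashable]) -> Dict[Hashable, List[str]]:
    """Collect names into lists keyed by cluster label, keeping the input order within each cluster."""
    if len(names) != len(labels):
        raise ValueError("names and labels must have the same length")
    groups: Dict[Hashable, List[str]] = defaultdict(list)
    for name, label in zip(names, labels):
        groups[label].append(name)
    return dict(sorted(groups.items()))  # clusters ordered by label


# Usage in the notebook (assumed variable names from the code above):
# for label, cats in group_by_label(all_categories, all_categories_labels_kmeans).items():
#     print("cluster " + str(label) + ": ")
#     print(cats)
```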

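Not every cluster is particularly accurate, and the full output is very long, so here are a few of the more accurate clusters for you to look at.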

```text
# cluster 13: Outdoor activities
# ['Motorcycle', 'Golf', 'Golf Apparel', 'Hiking & Camping', 'Bike & Skate', 'Coaster', 'Potty Training', 'Outdoor', 'Yoga & Pilates', 'Gear', 'Motorcycle & Powersports', 'Swim Trunks', 'Outdoors', 'Fishing', 'Skateboard', 'Indoor', 'Costume', 'Ballet', 'Snowboard', 'Board, Surf']
# =============================================
# =============================================
# cluster 14: Clothing and pants
# ['Skirt', 'Dresses', 'Leggings', 'Jeans', 'Fashion Sneakers', 'Dress Suit', 'Denim', 'Sandals', 'Jean Jacket', 'Capris, Cropped', 'Panties', 'Pants', 'Dress Shorts', 'Suits', 'Bras', 'Casual Shorts', 'Shirts & Tops', 'Dress Shirts', 'Tops', 'Swim Briefs', 'Pants, Tights, Leggings', 'Pant Suit', 'Skirts, Skorts & Dresses', 'Dress - Pleat', 'Dress', 'Dress Pants', 'Underwear', 'Skirt Suit', 'Tops & Blouses', 'Skirts', 'Casual Pants', 'Shoes', 'Bottoms', 'Shorts', 'Flats', 'Lingerie', 'Baggy, Loose']
# =============================================
# =============================================
# cluster 15: Jewelry and accessories
# ['Charm', 'Beads', 'Bracelet', 'Handmade', 'Blouse', 'Vintage & Collectibles', 'Bead', 'Double Breasted', 'Frame', 'Earrings', 'Scarf', 'Leather', 'Trim', 'Collar', 'Chain', 'Jewelry', 'Brooch', 'Antique', 'Necklace', 'Necklaces', 'Crochet', 'Cowl Neck', 'Fabric', 'Pendant', 'Vintage', 'Bracelets', 'Pattern', 'Fabric Postcard', 'Shawl']
# =============================================
# =============================================
# cluster 16: Sports and competitions
# ['Athletic Training', 'Track & Field', 'Boxing & MMA', 'Lacrosse', 'Hockey', 'Badminton', 'Bowl', 'Varsity', 'Team Sports', 'Athletic', 'Volleyball', 'MLB', 'NBA', 'Games', 'Game', 'Basket', 'Football', 'Bowling', 'Pitcher', 'Tennis & Racquets', 'Cup', 'Outdoor Games', 'Baseball & Softball', 'Baseball', 'Sports Bras', 'Sports & Outdoor Play', 'Sport', 'Athletic Apparel', 'Sports & Outdoors', 'Sports', "Women's Golf Clubs", 'Soccer', 'NHL', 'NFL', 'NCAA', 'Basketball', "Men's Golf Clubs", 'Polo, Rugby']
# =============================================
# =============================================
# cluster 18: Pets and toys
# ['Small Animal', 'Pets', 'Animals', 'Animal', 'Afghan', 'Cage', 'Pet Lover', 'Toys', 'Toy', 'Walkers', 'Children', 'Baby & Toddler Toys', 'Stuffed Animals & Plush', 'Serving', 'Teething Relief', 'Military', 'Feeding', 'Pet Supplies', 'Dogs']
# =============================================
# =============================================
# cluster 20: Household appliances
# ['Monitors', 'Thermometers', 'Amplifiers & Effects', 'Toothbrushes', 'Camcorders', 'Tweezers', 'Tripods & Supports', 'Tricycles, Scooters & Wagons', 'Dolls and Miniatures', 'Slipcovers', 'Backpacks & Carriers', 'Televisions', 'Prams', 'Cables & Adapters', 'Harnesses & Leashes', 'Fireplaces & Accessories', 'Batteries', 'Binoculars & Telescopes', 'Magnets', 'Humidifiers & Vaporizers', 'Microphones & Accessories', 'Microwaves', 'Lenses & Filters', 'Headsets', 'Strollers', 'Refrigerators', 'Laptops & Netbooks', 'Keyboards', 'Potties & Seats', 'Pacifiers & Accessories', 'Chargers & Cradles', 'Printers, Scanners & Supplies']
# =============================================
# =============================================
# cluster 24: Assorted entertainment
# ['Books', 'Puzzles', 'Christian Books & Bibles', 'Writing', 'Nonfiction', 'Instructional', 'Cookbook', 'Puzzle', 'Biographies & Memoirs', 'Religion', 'Biography', 'Scifi', 'Hobbies', 'Religious', 'Sci-Fi, Fantasy', 'Journal', 'Poetry', 'Religion & Spirituality', 'Illustrated', 'Woodworking', 'Educational', 'Literature & Fiction', 'Fantasy', 'Horror', 'VHS', 'Fiction', 'Reference', 'eBook Readers', 'Books and Zines', 'Comic', 'Comics']
# =============================================
# =============================================
# cluster 25: 3C (consumer electronics) products
# ['TV, Audio & Surveillance', 'Studio Recording Equipment', 'Fan Shop', 'DVD & Blu-ray Players', 'Radio', 'Car Video', 'Music', 'Video Game', 'Dance', 'Toy Remote Control & Play Vehicles', 'DVD', 'Video Gaming Merchandise', 'Live Sound & Stage', 'Video Games & Consoles', 'Cover-Ups', 'Media', 'Car Audio, Video & GPS', 'Lighting & Studio', 'Television', 'DJ, Electronic Music & Karaoke', 'Home Audio', 'CD']
# =============================================
# =============================================
# cluster 28: Holidays and festivals
# ['Calendars', 'Weddings', 'Totes & Shoppers', 'Thanksgiving', 'Halloween', 'Holidays', 'Decorating', 'Calendar', 'Christmas', 'Valentine', 'Invitations', 'Easter', 'Stationery & Party Supplies', 'Decorations', 'Flight', 'Party Supplies']
# =============================================
# =============================================
# cluster 29: Musical instruments
# ['Bass Guitars', 'Brass Instruments', 'Stringed Instruments', 'Guitars', 'Band & Orchestra', 'Instrument', 'Musical instruments', 'Wind & Woodwind Instruments', 'Drums & Percussion']
# =============================================
# =============================================
```
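The clusters above were picked out by eye. A rough aid for that judgement, which is not part of the original analysis, is to look at each cluster's spread and at the members closest to its centroid. KMeans exposes the centroids as `kmeans.cluster_centers_`. The sketch uses Euclidean distance, the metric KMeans is built on. `closest_to_centroid` is a hypothetical helper name.

```python
import numpy as np


def closest_to_centroid(X, labels, centers, names, k=3):
    """Summarise each cluster by its spread and the k members nearest its centroid.

    Returns {cluster_id: (mean Euclidean distance to centroid, [closest member names])}.
    A smaller mean distance suggests a tighter, more coherent cluster.
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    summary = {}
    for cluster_id, center in enumerate(np.asarray(centers, dtype=float)):
        idx = np.flatnonzero(labels == cluster_id)  # rows assigned to this cluster
        if idx.size == 0:
            summary[cluster_id] = (float("nan"), [])
            continue
        dists = np.linalg.norm(X[idx] - center, axis=1)
        nearest = idx[np.argsort(dists)[:k]]  # row indices sorted by distance
        summary[cluster_id] = (float(dists.mean()), [names[i] for i in nearest])
    return summary


# Usage in the notebook (assumed variable names from the code above):
# summary = closest_to_centroid(X, all_categories_labels_kmeans, kmeans.cluster_centers_, all_categories)
```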

```python
# DBSCAN with scikit-learn's defaults (eps=0.5, min_samples=5); noise points get the label -1
all_categories_labels_dbscan = DBSCAN().fit_predict(X)
Counter(all_categories_labels_dbscan)  # number of categories per label

df_cat = pd.DataFrame(all_categories_labels_dbscan, index=all_categories, columns=['label'])
print(list(df_cat[df_cat['label'] == 0].index))
# ['Teethers', 'Playards', 'Epilators', 'Sweatercoat', 'Rainwear', 'Needlecraft', 'Bedspreads & Coverlets', 'Dehumidifiers', 'Humidifiers', 'Paperweights', 'Papermaking', 'other']
```
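One possible explanation for DBSCAN's cluster 0 is offered here as an assumption; the post does not check it. `doc2vec` returns an all-zero vector for any category none of whose words are found in the model, for example because they are out of vocabulary or removed as stopwords. Identical zero vectors lie at distance 0 from each other, well within `eps`. With at least `min_samples` of them, they form a dense group of their own. The hypothetical helper below lists the categories whose vector is entirely zero, so the guess can be checked against the output above.

```python
import numpy as np


def zero_vector_rows(vecs, names):
    """Return the names whose vector is all zeros (no word of the name was found in the model)."""
    vecs = np.asarray(vecs)
    is_zero = ~vecs.any(axis=1)  # True where every component of the row is 0
    return [name for name, zero in zip(names, is_zero) if zero]


# Usage in the notebook (assumed variable names from the code above):
# zero_vector_rows(all_categories_vecs, all_categories)
```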