昨天跟各位簡單了解Variational Autoencoder(VAE),而今天將會分享如何用VAE實現MNIST數據集圖像生成,那我們廢話不多說,正文開始!

先載入所需的套件

import tensorflow as tf

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.datasets import mnist # Import MNIST dataset

先建立Encoder

class Encoder(tf.keras.layers.Layer):

def __init__(self, latent_dim):

super(Encoder, self).__init__()

self.latent_dim = latent_dim

self.encoder_layers = [

tf.keras.layers.InputLayer(input_shape=(28, 28, 1)), # Input shape for MNIST

tf.keras.layers.Conv2D(filters=32, kernel_size=3, strides=(2, 2), activation='relu'),

tf.keras.layers.Conv2D(filters=64, kernel_size=3, strides=(2, 2), activation='relu'),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(latent_dim + latent_dim)

]

def call(self, inputs):

x = inputs

for layer in self.encoder_layers:

x = layer(x)

mean, logvar = tf.split(x, num_or_size_splits=2, axis=-1)

return mean, logvar

在建立的Encoder中,先將輸入形狀為(28,28,1),然後建立了兩層卷積層再透過flatten將他扁平化,最後再使用一個全連階層並輸出latent_dim + latent_dim

而在call的方法中,我們將X分割成mean和logvar,以便計算VAE模型中的KL 散度損失

再來建立Decoder

class Decoder(tf.keras.layers.Layer):

def __init__(self, latent_dim):

super(Decoder, self).__init__()

self.latent_dim = latent_dim

self.decoder_layers = [

tf.keras.layers.Dense(units=7*7*64, activation='relu'),

tf.keras.layers.Reshape(target_shape=(7, 7, 64)),

tf.keras.layers.Conv2DTranspose(filters=64, kernel_size=3, strides=(2, 2), padding="SAME", activation='relu'),

tf.keras.layers.Conv2DTranspose(filters=32, kernel_size=3, strides=(2, 2), padding="SAME", activation='relu'),

tf.keras.layers.Conv2DTranspose(filters=1, kernel_size=3, strides=(1, 1), padding="SAME", activation='sigmoid')

]

def call(self, inputs):

x = inputs

for layer in self.decoder_layers:

x = layer(x)

return x

在Decoder中,先使用全連階層並具有7 * 7 * 64個單元,在使用Reshape將其改變成(7, 7, 64),最後使用反卷積將圖像放大成28 * 28 * 1的圖像

接下來我們要計算損失、計算梯度並更新模型權重。

def compute_loss(encoder, decoder, x, real_data):

mean, logvar = encoder(x)

eps = tf.random.normal(shape=mean.shape)

z = eps * tf.exp(logvar * 0.5) + mean

x_recon = decoder(z)

recon_loss = tf.reduce_sum(tf.square(real_data - x_recon), axis=[1, 2, 3])

kl_loss = -0.5 * tf.reduce_sum(1 + logvar - tf.square(mean) - tf.exp(logvar), axis=-1)

loss = tf.reduce_mean(recon_loss + kl_loss)

return loss

def train_step(encoder, decoder, x, real_data, optimizer):

with tf.GradientTape() as tape:

loss = compute_loss(encoder, decoder, x, real_data)

gradients = tape.gradient(loss, encoder.trainable_variables + decoder.trainable_variables)

optimizer.apply_gradients(zip(gradients, encoder.trainable_variables + decoder.trainable_variables))

return loss

最後是生成圖片數據的部分

def generate_data(decoder, encoder, n_samples, input_):

start = np.random.randint(0, input_.shape[0] - n_samples)

x = input_[start:start+n_samples]

mean, logvar = encoder(x)

eps = tf.random.normal(shape=mean.shape)

z = eps * tf.exp(logvar * 0.5) + mean

x_generated = decoder(z)

return x_generated

最後就是主程式的部分啦

if __name__ == '__main__':

num_epochs = 50

batch_size = 128

latent_dim = 16

(mnist_train, _), (mnist_test, _) = mnist.load_data()

mnist_train = mnist_train.astype(np.float32) / 255.0

mnist_test = mnist_test.astype(np.float32) / 255.0

mnist_train = np.expand_dims(mnist_train, axis=-1)

mnist_test = np.expand_dims(mnist_test, axis=-1)

num_batches = mnist_train.shape[0] // batch_size

encoder = Encoder(latent_dim)

decoder = Decoder(latent_dim)

optimizer = tf.keras.optimizers.Adam(learning_rate=1e-4)

loss_lst = np.zeros(shape=(num_batches))

for epoch in range(num_epochs):

for batch_idx in range(num_batches):

start_idx = batch_idx * batch_size

end_idx = (batch_idx + 1) * batch_size

x_batch = mnist_train[start_idx:end_idx]

loss = train_step(encoder, decoder, x_batch, x_batch, optimizer)

loss_lst[batch_idx] = loss

print("Epoch: {}, Loss: {}".format(epoch+1, loss))

x_generated = generate_data(decoder, encoder, 16, mnist_test)

fig, axs = plt.subplots(4, 4, figsize=(6, 6))

axs = axs.flatten()

for i in range(16):

axs[i].imshow(x_generated[i, :, :, 0], cmap='gray')

axs[i].axis("off")

plt.tight_layout()

plt.show()

在這裡先定義了潛在向量、批次以及訓練次數,然後就跟之前一樣載入MNIST數據集,最後就是訓練過程以及生成圖片了

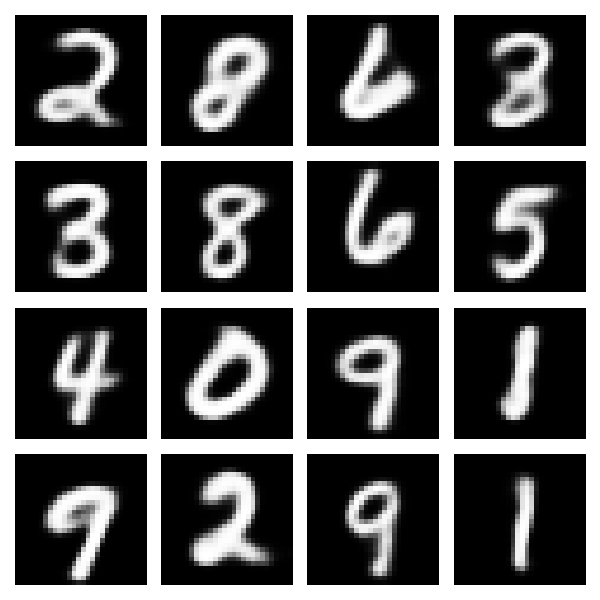

以下是生成的結果

以上就是VAE生成MNIST圖像的實作啦!我們的Autoencoder之旅也接近尾聲了,明天將會分享如何優化Autoencoder,那我們明天見!

參考網站:Tensorflow