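This section covers how to convert a single sequence into the format a model expects as input, and how to fix mismatches in dimensions or batch shape.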

import torch

from transformers import AutoTokenizer

checkpoint = "bert-base-cased"

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

sequence = "Using a Transformer network is simple"

tokens = tokenizer.tokenize(sequence)

ids = tokenizer.convert_tokens_to_ids(tokens)

# ------------------------------- Everything above is the Day 11 example

# Convert to a tensor

input_ids = torch.tensor(ids)

Here the generated ids are converted into a PyTorch tensor with torch.tensor. The resulting input_ids is shown below, and at this point everything looks fine:

tensor([ 7993, 170, 13809, 23763, 2443, 1110, 3014])

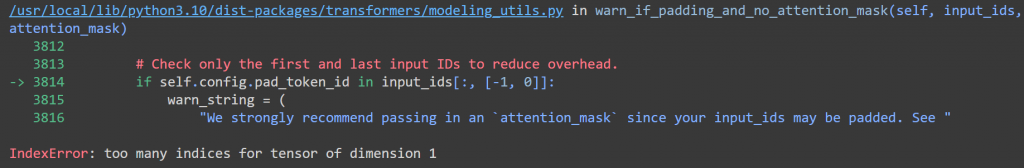
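Before passing the tensor to a model, it helps to check its shape. The snippet below is a minimal sketch that hard-codes the ids printed above (so no tokenizer download is needed) and shows that the tensor is one-dimensional, with no batch dimension.

```python
import torch

# Token ids copied from the output above; hard-coded here so the
# snippet runs without downloading the tokenizer.
ids = [7993, 170, 13809, 23763, 2443, 1110, 3014]

input_ids = torch.tensor(ids)

print(input_ids.shape)  # torch.Size([7])
print(input_ids.dim())  # 1 -> a single sequence, no batch dimension
```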

But let's see what happens when we pass it to the model to get logits:

from transformers import BertForSequenceClassification

model = BertForSequenceClassification.from_pretrained(checkpoint)

model(input_ids)

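This raises an error, because the model expects a batch of sequences (a 2-D tensor) while input_ids is a single 1-D sequence.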

For comparison, let's pass the sequence directly to the tokenizer:

tokenized_inputs = tokenizer(sequence, return_tensors="pt")

print(tokenized_inputs["input_ids"])

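You can see that the output has one extra dimension (the tokenizer also added the special tokens 101 and 102 at both ends):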

tensor([[ 101, 7993, 170, 13809, 23763, 2443, 1110, 3014, 102]])
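To make the difference concrete, here is a small sketch comparing the two tensors. Both are hard-coded from the outputs shown in this post, and the variable names manual_ids and tokenizer_ids are only illustrative. For BERT checkpoints, 101 and 102 are the ids of [CLS] and [SEP]; dropping them and the outer dimension recovers the manually built ids.

```python
import torch

# Ids built by hand with tokenize() + convert_tokens_to_ids() (1-D).
manual_ids = torch.tensor([7993, 170, 13809, 23763, 2443, 1110, 3014])

# Ids returned by calling the tokenizer directly (2-D, with special tokens).
tokenizer_ids = torch.tensor(
    [[101, 7993, 170, 13809, 23763, 2443, 1110, 3014, 102]]
)

print(manual_ids.shape)     # torch.Size([7])
print(tokenizer_ids.shape)  # torch.Size([1, 9])

# Take the first (only) sequence in the batch and strip [CLS] / [SEP].
inner = tokenizer_ids[0, 1:-1]
print(torch.equal(inner, manual_ids))  # True
```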

import torch

from transformers import AutoTokenizer, BertForSequenceClassification

checkpoint = "bert-base-cased"

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

model = BertForSequenceClassification.from_pretrained(checkpoint)

sequence = "Using a Transformer network is simple"

tokens = tokenizer.tokenize(sequence)

ids = tokenizer.convert_tokens_to_ids(tokens)

# --------------------------------------------

# Add a batch dimension

# Option 1

input_ids = torch.tensor(ids)

input_ids = input_ids.unsqueeze(0)

# Option 2 (equivalent to option 1; use either one)

input_ids = torch.tensor([ids])

# --------------------------------------------

output = model(input_ids)

print(output.logits)

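Now we finally get the logits (the classification head is randomly initialized for this checkpoint, so your values will differ):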

tensor([[-0.0946, 0.3242]], grad_fn=<AddmmBackward0>)
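The two ways of adding a batch dimension used above produce the same tensor. The sketch below checks that with hard-coded ids, and also includes a small helper, ensure_batched, which is a hypothetical name introduced here for illustration and is not part of PyTorch or transformers.

```python
import torch

ids = [7993, 170, 13809, 23763, 2443, 1110, 3014]

# Option 1: build a 1-D tensor, then insert a new dimension at position 0.
via_unsqueeze = torch.tensor(ids).unsqueeze(0)

# Option 2: wrap the list in another list before building the tensor.
via_nesting = torch.tensor([ids])

print(via_unsqueeze.shape)                       # torch.Size([1, 7])
print(torch.equal(via_unsqueeze, via_nesting))   # True


def ensure_batched(t: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: add a batch dimension only if t is 1-D."""
    return t.unsqueeze(0) if t.dim() == 1 else t


print(ensure_batched(torch.tensor(ids)).shape)   # torch.Size([1, 7])
print(ensure_batched(via_nesting).shape)         # torch.Size([1, 7])
```

A guard like this can be handy when the same code path sometimes receives a single sequence and sometimes an already batched tensor.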

Congratulations, today marks the halfway point of the thirty days ( ̄︶ ̄)↗