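Yesterday we finished checking for missing values and encoding the categorical features, turning the raw data into a numeric matrix that a machine learning model can take as input. Today we will build a simple baseline model. The goal is to confirm that the data processing is correct and to get a reference score that later model improvements can be compared against.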

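Yesterday we covered Target Encoding, Frequency Encoding, and One-Hot Encoding; today we'll see how each of the three affects model performance. I chose RandomForestClassifier as the baseline model because it trains quickly with default settings and, being tree-based, is insensitive to standardization and feature scaling, which makes it a good early sanity-check model for this competition.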
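To back up the claim about feature scaling, here is a minimal sketch on synthetic data. Everything in it (the `make_classification` data, the column scale factors, and names like `rf_raw` and `rf_scaled`) is an assumption for illustration and has nothing to do with the competition dataset. Tree splits depend only on the ordering of values within each feature, so standardizing the features should leave the validation accuracy essentially unchanged.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic data for illustration only (not the competition data)
X_demo, y_demo = make_classification(n_samples=500, n_features=8, random_state=0)

# Put the columns on very different scales on purpose
X_demo = X_demo * np.array([1, 10, 100, 1000, 1, 10, 100, 1000])

X_tr, X_va, y_tr, y_va = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=42
)

# Model 1: raw, unscaled features
rf_raw = RandomForestClassifier(n_estimators=50, random_state=42)
rf_raw.fit(X_tr, y_tr)
acc_raw = rf_raw.score(X_va, y_va)

# Model 2: standardized features (scaler fitted on the training split only)
scaler = StandardScaler().fit(X_tr)
rf_scaled = RandomForestClassifier(n_estimators=50, random_state=42)
rf_scaled.fit(scaler.transform(X_tr), y_tr)
acc_scaled = rf_scaled.score(scaler.transform(X_va), y_va)

print("Accuracy without scaling:", acc_raw)
print("Accuracy with scaling:   ", acc_scaled)
```

The two numbers should be the same or very close, which is why I skip the scaling step for this baseline.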

# === One-Hot Encoding + RandomForest Baseline ===

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# X, y, and cat_cols come from yesterday's preprocessing

train_oh = pd.get_dummies(X, columns=cat_cols)

X_train, X_val, y_train, y_val = train_test_split(train_oh, y, test_size=0.2, random_state=42)

rf_oh = RandomForestClassifier(random_state=42)

rf_oh.fit(X_train, y_train)

y_pred_oh = rf_oh.predict(X_val)

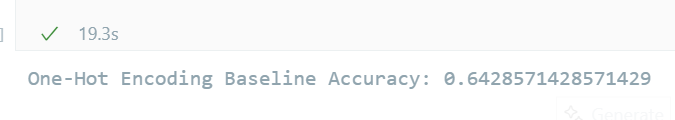

print("One-Hot Encoding Baseline Accuracy:", accuracy_score(y_val, y_pred_oh))

# === Frequency Encoding + RandomForest Baseline ===

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

train_fe = X.copy()

for col in cat_cols:
    # Replace each category with its relative frequency in the data
    freq_map = train_fe[col].value_counts(normalize=True)
    train_fe[col] = train_fe[col].map(freq_map)

X_train, X_val, y_train, y_val = train_test_split(train_fe, y, test_size=0.2, random_state=42)

rf_fe = RandomForestClassifier(random_state=42)

rf_fe.fit(X_train, y_train)

y_pred_fe = rf_fe.predict(X_val)

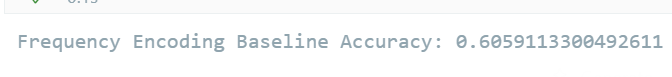

print("Frequency Encoding Baseline Accuracy:", accuracy_score(y_val, y_pred_fe))

# === Target Encoding + RandomForest Baseline ===

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# Build the baseline model

X_train, X_val, y_train, y_val = train_test_split(train_te, y, test_size=0.2, random_state=42)

rf_te = RandomForestClassifier(random_state=42)

rf_te.fit(X_train, y_train)

y_pred_te = rf_te.predict(X_val)

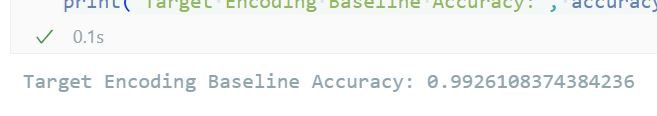

print("Target Encoding Baseline Accuracy:", accuracy_score(y_val, y_pred_te))

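Look closely at the Target Encoding run: paired with RandomForestClassifier as a first baseline model, it reached a validation accuracy of 0.9926! At first glance that looks incredibly strong, and it gave me quite a shock when it finished running. But a score this close to perfect makes me suspect that something went wrong somewhere.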
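One common reason for a too-good score with target encoding is target leakage: if the per-category means are computed on the full dataset before the split, every validation row's own label is baked into its feature. I have not confirmed that this is what happened in my notebook, so treat the following as a sketch of the general effect and not a diagnosis. It uses synthetic data where the label is pure noise, and all names (`demo`, `te_leaky`, `te_safe`, `val_accuracy`) are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n_rows = 2000

# Synthetic data: a high-cardinality category and a label that is pure noise,
# so an honest model should score close to 0.5
demo = pd.DataFrame({
    "cat": rng.integers(0, 2000, size=n_rows),
    "y": rng.integers(0, 2, size=n_rows),
})

# Leaky version: category means computed on ALL rows, before splitting
demo["te_leaky"] = demo.groupby("cat")["y"].transform("mean")

train_part, val_part = train_test_split(demo, test_size=0.2, random_state=42)
train_part = train_part.copy()
val_part = val_part.copy()

# Safer version: category means computed on the training split only;
# categories unseen in training fall back to the training global mean
global_mean = train_part["y"].mean()
cat_means = train_part.groupby("cat")["y"].mean()
train_part["te_safe"] = train_part["cat"].map(cat_means)
val_part["te_safe"] = val_part["cat"].map(cat_means).fillna(global_mean)


def val_accuracy(feature):
    """Fit a random forest on one encoded column and score it on validation."""
    model = RandomForestClassifier(n_estimators=50, random_state=42)
    model.fit(train_part[[feature]], train_part["y"])
    return model.score(val_part[[feature]], val_part["y"])


acc_leaky = val_accuracy("te_leaky")
acc_safe = val_accuracy("te_safe")

print("Leaky target encoding accuracy:     ", acc_leaky)
print("Train-only target encoding accuracy:", acc_safe)
```

Since the label here is random, any accuracy well above 0.5 in the leaky run can only come from the encoding peeking at validation labels. Fitting the encoding on the training split keeps the validation score honest; an out-of-fold scheme would additionally stop training rows from seeing their own labels.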

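So tomorrow's job is to fix this bug. Code only gets better through lots of experimenting and debugging! Once the bug is fixed, the score should come back down to a reasonable level.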