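Once you've built a fair number of models, the next thing to pay attention to is how well they perform at runtime. With images, which are what I work with most, a common question is whether computation should run in the cloud or on an edge device, and answering it requires benchmarking the model.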

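So how can I be reasonably confident that a trained model is a good fit for the target device? Once the model is built, running inference a few times already gives you a rough idea!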

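For example, suppose I'm deploying a face recognition model and want it to process 10 images per second (i.e., FPS = 10). Then each frame should take no more than 100 ms (1000 / 10). At this stage we only care about inference speed, not accuracy, so once the session has initialized the variables we can feed images straight into the network.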
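The latency budget is just 1000 ms divided by the target frame rate. As a small sketch (the helper names `frame_budget_ms` and `meets_target` are hypothetical, not from any library), checking a measured per-frame latency against that budget looks like this:

```python
def frame_budget_ms(target_fps):
    """Per-frame time budget in milliseconds for a target frame rate."""
    return 1000.0 / target_fps


def meets_target(latency_ms, target_fps):
    """True if a measured per-frame latency fits within the budget."""
    return latency_ms <= frame_budget_ms(target_fps)


print(frame_budget_ms(10))      # 100.0
print(meets_target(85.0, 10))   # True  (hypothetical measurement)
print(meets_target(120.0, 10))  # False (hypothetical measurement)
```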

Below is an AlexNet built in TensorFlow:

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.layers)

NUM_CLASSES = 1000  # assumption: number of output classes; set this for your own task

input_node = tf.placeholder(shape=[None, 224, 224, 3], dtype=tf.float32, name='input_node')

net = tf.layers.conv2d(input_node, 96, (11, 11),
                       strides=(4, 4),
                       activation=tf.nn.relu,
                       padding='same',
                       name='conv_1')
net = tf.nn.lrn(net, depth_radius=5,
                bias=1.0,
                alpha=0.0001 / 5.0,
                beta=0.75,
                name='norm_1')
net = tf.layers.max_pooling2d(net, pool_size=(3, 3),
                              strides=2,
                              name='max_pool_1')

net = tf.layers.conv2d(net, 256, (5, 5),
                       strides=(1, 1),
                       activation=tf.nn.relu,
                       padding='same',
                       name='conv_2')
net = tf.nn.lrn(net, depth_radius=5,
                bias=1.0,
                alpha=0.0001 / 5.0,
                beta=0.75,
                name='norm_2')
net = tf.layers.max_pooling2d(net, pool_size=(3, 3),
                              strides=2,
                              name='max_pool_2')

net = tf.layers.conv2d(net, 384, (3, 3),
                       strides=(1, 1),
                       padding='same',
                       activation=tf.nn.relu,
                       name='conv_3')
net = tf.layers.conv2d(net, 384, (3, 3),
                       strides=(1, 1),
                       padding='same',
                       activation=tf.nn.relu,
                       name='conv_4')
net = tf.layers.conv2d(net, 256, (3, 3),
                       strides=(1, 1),
                       padding='same',
                       activation=tf.nn.relu,
                       name='conv_5')
net = tf.layers.max_pooling2d(net, pool_size=(3, 3),
                              strides=2,
                              padding='valid',
                              name='max_pool_5')

# Spatial size goes 224 -> 56 -> 27 -> 13 -> 6, so the last feature map is 6x6x256
net = tf.reshape(net, [-1, 6 * 6 * 256], name='flat')
net = tf.layers.dense(net, 4096, activation=tf.nn.relu, name='dense_1')
net = tf.layers.dense(net, 4096, activation=tf.nn.relu, name='dense_2')
logits = tf.layers.dense(net, NUM_CLASSES, name='logits_layer')
```

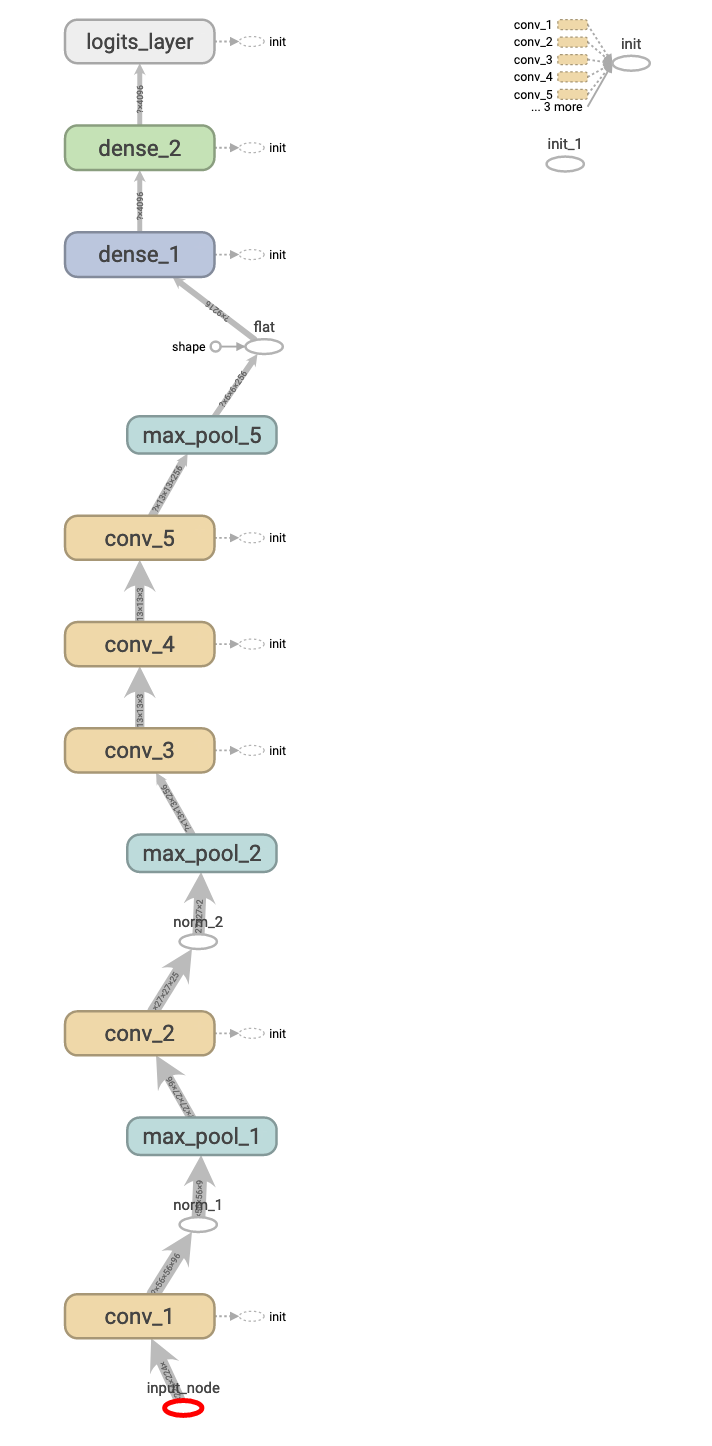

節點圖:

AlexNet is a fairly compact model, so for comparison I'll also build a larger one: VGG16.

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.layers)

# Build this in a separate run (or call tf.reset_default_graph() first):
# layer names such as 'dense_1' would clash with the AlexNet graph.
NUM_CLASSES = 1000  # assumption: number of output classes; set this for your own task

input_node = tf.placeholder(shape=[None, 224, 224, 3], dtype=tf.float32, name='input_node')

# Block 1: 224x224
net = tf.layers.conv2d(input_node, 64, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_1_1')
net = tf.layers.conv2d(net, 64, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_1_2')
net = tf.layers.max_pooling2d(net, pool_size=(2, 2), strides=2, name='max_pool_1')

# Block 2: 112x112
net = tf.layers.conv2d(net, 128, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_2_1')
net = tf.layers.conv2d(net, 128, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_2_2')
net = tf.layers.max_pooling2d(net, pool_size=(2, 2), strides=2, name='max_pool_2')

# Block 3: 56x56
net = tf.layers.conv2d(net, 256, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_3_1')
net = tf.layers.conv2d(net, 256, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_3_2')
net = tf.layers.conv2d(net, 256, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_3_3')
net = tf.layers.max_pooling2d(net, pool_size=(2, 2), strides=2, name='max_pool_3')

# Block 4: 28x28
net = tf.layers.conv2d(net, 512, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_4_1')
net = tf.layers.conv2d(net, 512, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_4_2')
net = tf.layers.conv2d(net, 512, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_4_3')
net = tf.layers.max_pooling2d(net, pool_size=(2, 2), strides=2, name='max_pool_4')

# Block 5: 14x14
net = tf.layers.conv2d(net, 512, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_5_1')
net = tf.layers.conv2d(net, 512, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_5_2')
net = tf.layers.conv2d(net, 512, (3, 3), strides=(1, 1),
                       activation=tf.nn.relu, padding='same', name='conv_5_3')
net = tf.layers.max_pooling2d(net, pool_size=(2, 2), strides=2, name='max_pool_5')

# Five 2x2 poolings: 224 / 2**5 = 7, so the last feature map is 7x7x512
net = tf.reshape(net, [-1, 7 * 7 * 512], name='flat')
net = tf.layers.dense(net, 4096, activation=tf.nn.relu, name='dense_1')
net = tf.layers.dense(net, 4096, activation=tf.nn.relu, name='dense_2')
# Note: standard VGG16 has only two 4096-unit FC layers before the classifier; dense_3 is an extra one
net = tf.layers.dense(net, 4096, activation=tf.nn.relu, name='dense_3')
logits = tf.layers.dense(net, NUM_CLASSES, name='logits_layer')
```
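The constants in the two `tf.reshape` calls (6 * 6 * 256 and 7 * 7 * 512) come from how each layer shrinks the 224×224 input. Here is a small pure-Python sketch that traces the spatial size. It assumes TensorFlow's usual output-size rules ('same': ceil(n / stride); 'valid': ceil((n - kernel + 1) / stride)) and that `tf.layers.max_pooling2d` defaults to padding='valid' where the code does not set it; `out_size` and `trace` are hypothetical helper names:

```python
import math


def out_size(size, kernel, stride, padding):
    """Output height/width of a conv or pooling layer under TensorFlow's padding rules."""
    if padding == 'same':
        return math.ceil(size / stride)
    # 'valid': only positions where the whole window fits inside the input
    return math.ceil((size - kernel + 1) / stride)


def trace(size, layers):
    """Apply out_size layer by layer; LRN and ReLU keep the size, so they are omitted."""
    for kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
    return size


# (kernel, stride, padding) in the same order as the AlexNet graph
ALEXNET = [(11, 4, 'same'), (3, 2, 'valid'),                # conv_1, max_pool_1
           (5, 1, 'same'), (3, 2, 'valid'),                 # conv_2, max_pool_2
           (3, 1, 'same'), (3, 1, 'same'), (3, 1, 'same'),  # conv_3 to conv_5
           (3, 2, 'valid')]                                 # max_pool_5

# VGG16: two blocks of 2 convs and three blocks of 3 convs, each followed by a 2x2 pool
conv, pool = (3, 1, 'same'), (2, 2, 'valid')
VGG16 = ([conv] * 2 + [pool]) * 2 + ([conv] * 3 + [pool]) * 3

print(trace(224, ALEXNET))  # 6
print(trace(224, VGG16))    # 7
```

If you change the input resolution, rerunning `trace` tells you what the `tf.reshape` constant has to become.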

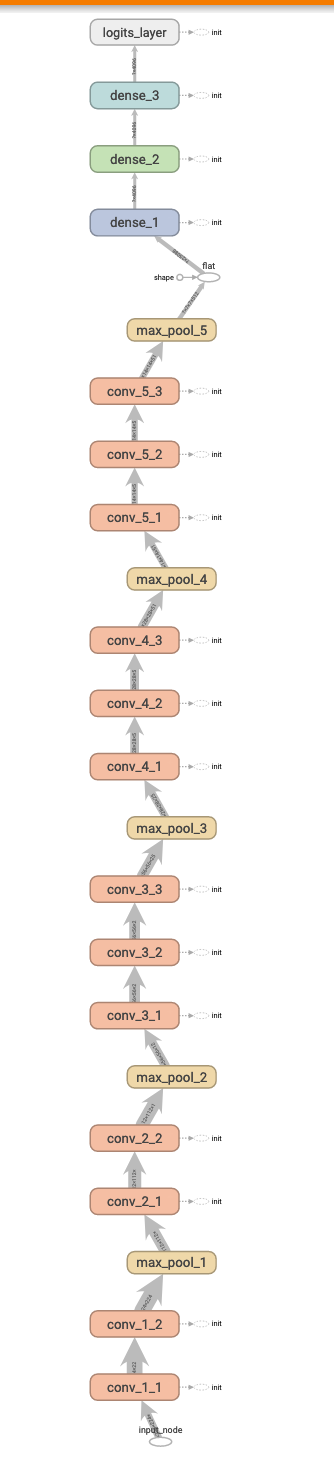

節點圖:

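With these two networks in hand, we can write a few benchmarking routines. The first one counts the model's parameters: `tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES)` returns all trainable variables (the weights that get updated during training). Each variable is a multi-dimensional tensor (a conv kernel is 4-D, a dense layer's kernel is 2-D, a bias is 1-D), so its parameter count is the product of its dimensions, and the model total is the sum over all variables.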

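```python
import tensorflow as tf  # TensorFlow 1.x API

# Count every trainable weight in the current default graph
total_parameters = 0
for variable in tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES):
    # e.g. conv kernel [kh, kw, c_in, c_out], dense kernel [n_in, n_out], bias [n]
    shape = variable.get_shape()
    variable_parameters = 1
    for dim in shape:
        variable_parameters *= dim.value  # TF 1.x: each dim is a Dimension object
    total_parameters += variable_parameters
print(f'trainable parameters count: {total_parameters}')
```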
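To double-check what that loop should print, the same rule (product of dimensions, summed) can be applied by hand. A conv kernel has kh × kw × c_in × c_out weights plus c_out biases, a dense layer has n_in × n_out weights plus n_out biases (tf.layers adds a bias by default), and LRN and pooling have no trainable variables. Below is a pure-Python sketch for the two graphs, assuming NUM_CLASSES = 1000; the helper names are hypothetical:

```python
def conv2d_params(kh, kw, c_in, c_out):
    """Kernel weights plus one bias per output channel."""
    return kh * kw * c_in * c_out + c_out


def dense_params(n_in, n_out):
    """Weight matrix plus one bias per output unit."""
    return n_in * n_out + n_out


def alexnet_params(num_classes):
    # Layer sizes copied from the AlexNet graph; LRN and pooling have no weights
    return (conv2d_params(11, 11, 3, 96) + conv2d_params(5, 5, 96, 256)
            + conv2d_params(3, 3, 256, 384) + conv2d_params(3, 3, 384, 384)
            + conv2d_params(3, 3, 384, 256)
            + dense_params(6 * 6 * 256, 4096) + dense_params(4096, 4096)
            + dense_params(4096, num_classes))


def vgg16_params(num_classes):
    # (c_in, c_out) of the thirteen 3x3 convs in the VGG16 graph
    convs = [(3, 64), (64, 64),
             (64, 128), (128, 128),
             (128, 256), (256, 256), (256, 256),
             (256, 512), (512, 512), (512, 512),
             (512, 512), (512, 512), (512, 512)]
    total = sum(conv2d_params(3, 3, c_in, c_out) for c_in, c_out in convs)
    total += dense_params(7 * 7 * 512, 4096)
    total += 2 * dense_params(4096, 4096)  # dense_2 and the extra dense_3
    total += dense_params(4096, num_classes)
    return total


NUM_CLASSES = 1000  # assumption: ImageNet-sized classifier
print(alexnet_params(NUM_CLASSES), vgg16_params(NUM_CLASSES))
```

Under that assumption, AlexNet comes to 62,378,344 parameters and VGG16 (including the extra dense_3) to 155,138,856, about 2.5 times as many. Most of each total sits in the first dense layer: 6 × 6 × 256 × 4096 and 7 × 7 × 512 × 4096 weights respectively.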

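Next, we need a way to measure inference speed. One thing to watch out for: the first `sess.run` in TensorFlow is noticeably slower than the ones after it. My guess is that some work only happens the first time a computation is actually needed (lazy loading), so we run the model once as a warm-up and leave that run out of the measurement. Below, the model runs inference 10 times and prints the total elapsed time.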

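```python
import timeit

import cv2
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

TIMES = 10

image = cv2.imread('../05/ithome.jpg')
assert image is not None, 'could not read the test image'
# cv2.resize takes (width, height); the placeholder is [batch, height, width, channels]
image = cv2.resize(image, (int(input_node.shape[2]), int(input_node.shape[1])))
image = np.expand_dims(image, 0).astype(np.float32)  # add batch dimension -> [1, 224, 224, 3]

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    sess.run(tf.local_variables_initializer())
    sess.run(logits, feed_dict={input_node: image})  # <-- first run is slow (warm-up), not timed

    start = timeit.default_timer()
    for _ in range(TIMES):
        sess.run(logits, feed_dict={input_node: image})
    elapsed = timeit.default_timer() - start
    print(f'cost time:{elapsed} sec')
    print(f'average per frame: {elapsed / TIMES * 1000:.1f} ms')
```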
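The same warm-up-then-time pattern can be pulled out into a small framework-agnostic helper. This is a sketch, not part of TensorFlow: `benchmark` is a hypothetical name, and it accepts any zero-argument callable, so the TensorFlow call is wrapped in a lambda:

```python
import time


def benchmark(run_once, times=10, warmup=1):
    """Call run_once `warmup` times untimed, then time `times` calls.

    Returns (mean latency in ms, frames per second).
    """
    for _ in range(warmup):
        run_once()  # untimed warm-up, like the first sess.run above
    start = time.perf_counter()
    for _ in range(times):
        run_once()
    elapsed = time.perf_counter() - start
    mean_ms = elapsed / times * 1000.0
    fps = times / elapsed if elapsed > 0 else float('inf')
    return mean_ms, fps
```

Inside the `with tf.Session() as sess:` block you would call it as `benchmark(lambda: sess.run(logits, feed_dict={input_node: image}))` and compare `mean_ms` against the 100 ms budget. Reporting the mean per-frame time instead of the total makes the result directly comparable to the FPS target. `time.perf_counter` is a high-resolution monotonic clock, and in Python 3 `timeit.default_timer` is the same function.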

Now we can run the tests.

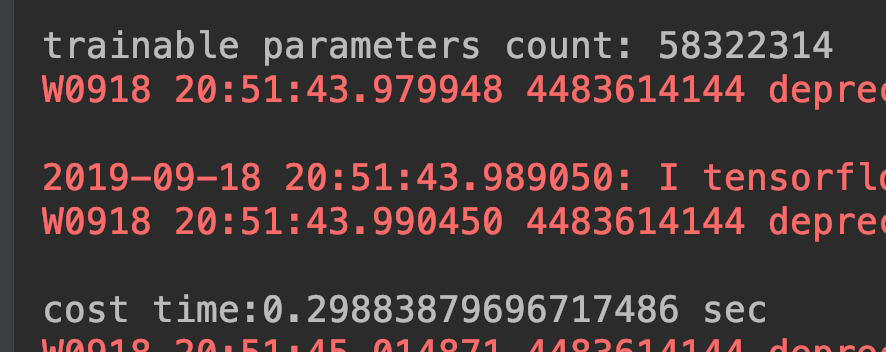

Alexnet:

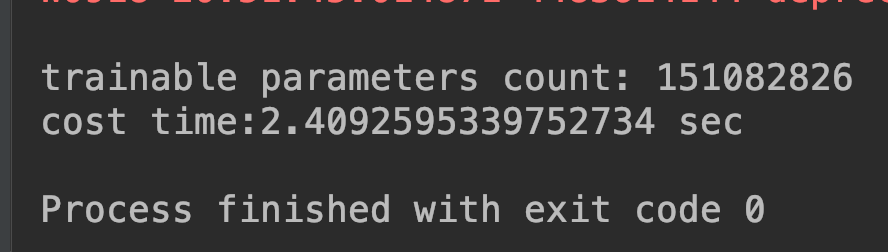

VGG16:

VGG16 has more than twice as many parameters as AlexNet, and AlexNet's inference is nearly 8 times faster than VGG16's!

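One caveat: a model with more parameters is not necessarily slower, because speed depends largely on how much computation the layers perform (for example, convolutions over large feature maps) rather than on how many weights they store, so treat these numbers as a rough reference. Also, the speeds measured here are unoptimized. TensorFlow, for instance, provides TensorFlow Lite (tflite), which is optimized for ARM CPUs.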
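To see why parameter count alone doesn't predict speed, here is a rough sketch that counts multiply-accumulate operations (MACs) per image for the two graphs. It assumes a 224×224 input, NUM_CLASSES = 1000, and ignores LRN, pooling, activations and memory traffic, so it is only a proxy for cost; the helper names are hypothetical:

```python
def conv_macs(out_hw, kernel, c_in, c_out):
    """MACs of a square conv layer: each output value is a kernel*kernel*c_in dot product."""
    return out_hw * out_hw * c_out * kernel * kernel * c_in


def dense_macs(n_in, n_out):
    """MACs of a fully connected layer: one multiply-accumulate per weight."""
    return n_in * n_out


NUM_CLASSES = 1000  # assumption: number of output classes

# AlexNet graph: (output size, kernel, c_in, c_out) per conv, then the dense layers
alexnet = (conv_macs(56, 11, 3, 96) + conv_macs(27, 5, 96, 256)
           + conv_macs(13, 3, 256, 384) + conv_macs(13, 3, 384, 384)
           + conv_macs(13, 3, 384, 256)
           + dense_macs(6 * 6 * 256, 4096) + dense_macs(4096, 4096)
           + dense_macs(4096, NUM_CLASSES))

# VGG16 graph: (output size, c_in, c_out) for the thirteen 3x3 convs
vgg_convs = [(224, 3, 64), (224, 64, 64),
             (112, 64, 128), (112, 128, 128),
             (56, 128, 256), (56, 256, 256), (56, 256, 256),
             (28, 256, 512), (28, 512, 512), (28, 512, 512),
             (14, 512, 512), (14, 512, 512), (14, 512, 512)]
vgg16 = sum(conv_macs(hw, 3, c_in, c_out) for hw, c_in, c_out in vgg_convs)
vgg16 += (dense_macs(7 * 7 * 512, 4096) + 2 * dense_macs(4096, 4096)  # dense_1 to dense_3
          + dense_macs(4096, NUM_CLASSES))

print(f'AlexNet: {alexnet / 1e9:.2f} G MACs')
print(f'VGG16:   {vgg16 / 1e9:.2f} G MACs')
print(f'ratio:   {vgg16 / alexnet:.1f}x')
```

This gives about 1.14 G MACs for AlexNet versus about 15.49 G for VGG16, roughly a 13.6x gap in computation compared with a roughly 2.5x gap in parameters. Neither number maps one-to-one onto the measured ~8x speed difference, but the convolutions over VGG16's large 224×224 and 112×112 feature maps dominate its cost even though they hold only a small share of its weights.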