照著前幾天的邏輯今天來用python執行xgboost,剛開始一樣先寫score function方便之後的比較:

#from sklearn.metrics import accuracy_score, confusion_matrix, precision_score, recall_score, f1_score

def score(m, x_train, y_train, x_test, y_test, train=True):

if train:

pred=m.predict(x_train)

print('Train Result:\n')

print(f"Accuracy Score: {accuracy_score(y_train, pred)*100:.2f}%")

print(f"Precision Score: {precision_score(y_train, pred)*100:.2f}%")

print(f"Recall Score: {recall_score(y_train, pred)*100:.2f}%")

print(f"F1 score: {f1_score(y_train, pred)*100:.2f}%")

print(f"Confusion Matrix:\n {confusion_matrix(y_train, pred)}")

elif train == False:

pred=m.predict(x_test)

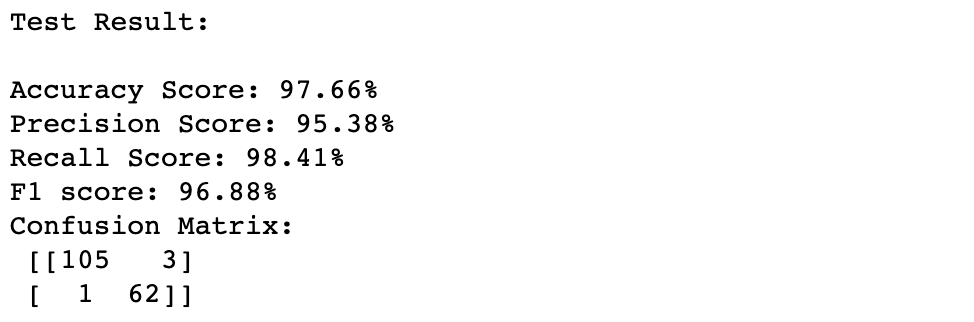

print('Test Result:\n')

print(f"Accuracy Score: {accuracy_score(y_test, pred)*100:.2f}%")

print(f"Precision Score: {precision_score(y_test, pred)*100:.2f}%")

print(f"Recall Score: {recall_score(y_test, pred)*100:.2f}%")

print(f"F1 score: {f1_score(y_test, pred)*100:.2f}%")

print(f"Confusion Matrix:\n {confusion_matrix(y_test, pred)}")

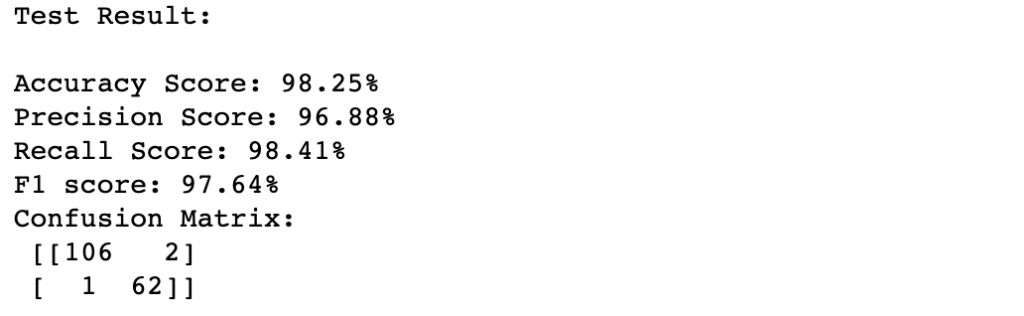

然後建立一個最簡單的xgboost來看看(然後發現default的模型表現已經超過前天tuning後的random forest了):

from xgboost import XGBClassifier

xg1 = XGBClassifier()

xg1=xg1.fit(x_train, y_train)

score(xg1, x_train, y_train, x_test, y_test, train=False)

XGBClassifier tuning重要的參數有:

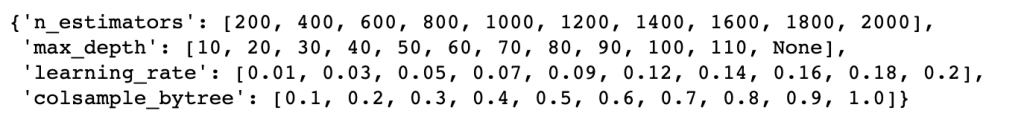

開始tuning,這裡我們一樣使用RandomizedSearchCV:

from sklearn.model_selection import RandomizedSearchCV

n_estimators = [int(x) for x in np.linspace(start=200, stop=2000, num=10)]

max_depth = [int(x) for x in np.linspace(10, 110, num=11)]

max_depth.append(None)

learning_rate=[round(float(x),2) for x in np.linspace(start=0.01, stop=0.2, num=10)]

colsample_bytree =[round(float(x),2) for x in np.linspace(start=0.1, stop=1, num=10)]

random_grid = {'n_estimators': n_estimators,

'max_depth': max_depth,

'learning_rate': learning_rate,

'colsample_bytree': colsample_bytree}

random_grid

xg4 = XGBClassifier(random_state=42)

#Random search of parameters, using 3 fold cross validation, search across 100 different combinations, and use all available cores

xg_random = RandomizedSearchCV(estimator = xg4, param_distributions=random_grid,

n_iter=100, cv=3, verbose=2, random_state=42, n_jobs=-1)

xg_random.fit(x_train,y_train)

xg_random.best_params_

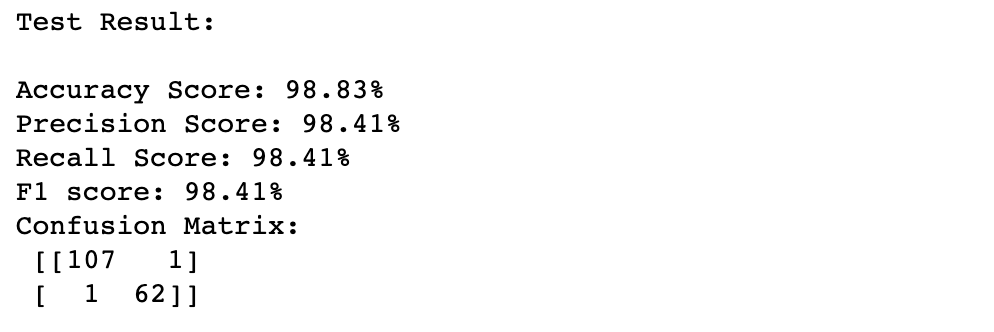

xg5 = XGBClassifier(colsample_bytree= 0.2, learning_rate=0.09, max_depth= 10, n_estimators=1200)

xg5=xg5.fit(x_train, y_train)

score(xg5, x_train, y_train, x_test, y_test, train=False)

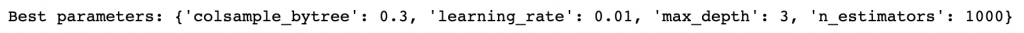

另外一個常見的tuning方法是使用GridSearch function,兩者的差異是grid search會使用所有的參數組合,而RandomizedSearchCV是在每個參數設定的分佈中隨機配置,這裡也放上grid search的方法給大家參考:

from sklearn.model_selection import GridSearchCV

params = { 'max_depth': [3,6,10],

'learning_rate': [0.01, 0.05, 0.1],

'n_estimators': [100, 500, 1000],

'colsample_bytree': [0.3, 0.7]}

xg2 = XGBClassifier(random_state=1)

clf = GridSearchCV(estimator=xg2,

param_grid=params,

scoring='neg_mean_squared_error',

verbose=1)

clf.fit(x_train, y_train)

print("Best parameters:", clf.best_params_)

xg3 = XGBClassifier(colsample_bytree= 0.3, learning_rate=0.01, max_depth= 3, n_estimators=1000)

xg3=xg3.fit(x_train, y_train)

score(xg3, x_train, y_train, x_test, y_test, train=False)

reference:

https://www.analyticsvidhya.com/blog/2016/03/complete-guide-parameter-tuning-xgboost-with-codes-python/

https://towardsdatascience.com/xgboost-fine-tune-and-optimize-your-model-23d996fab663

https://xgboost.readthedocs.io/en/latest/python/python_api.html#xgboost.XGBClassifier