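The previous four articles introduced gradient descent and used it to train neural networks. This article works through a few more examples to help us apply gradient descent more flexibly, including handwritten digit recognition, solving simultaneous equations, and logistic regression.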

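The previous article implemented a single-variable function and a two-variable regression. How do we handle handwritten digit recognition, where each image has 28x28 = 784 input variables? See the explanation below.

Example 1. Handwritten digit recognition with automatic differentiation.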

    # Handwritten digit recognition
    # Load the package
    import tensorflow as tf

    # Load the MNIST handwritten digit data
    mnist = tf.keras.datasets.mnist
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    # One-hot encode the labels, as CategoricalCrossentropy expects
    y_train = tf.one_hot(y_train, depth=10)
    y_test = tf.one_hot(y_test, depth=10)

    # Feature scaling: map pixel values from [0, 255] to [0, 1]
    x_train, x_test = x_train / 255.0, x_test / 255.0

    # Build the model
    model = tf.keras.models.Sequential([
        tf.keras.layers.Input((28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

    # Set the optimizer and the loss function.
    # The model already ends in softmax, so from_logits keeps its default (False).
    optimizer = tf.keras.optimizers.Adam()
    loss_fn = tf.keras.losses.CategoricalCrossentropy()

    # Train the model
    epochs = 5
    batch_size = 1000
    for epoch in range(epochs):
        for batch in range(x_train.shape[0] // batch_size):
            x_batch = x_train[batch * batch_size : (batch + 1) * batch_size]
            y_batch = y_train[batch * batch_size : (batch + 1) * batch_size]
            with tf.GradientTape() as tape:
                # Forward pass (training=True so that Dropout is active)
                pred = model(x_batch, training=True)
                # Calculate the loss
                loss = loss_fn(y_batch, pred)
            # Calculate gradients
            gradients = tape.gradient(loss, model.trainable_variables)
            # Update model weights
            optimizer.apply_gradients(zip(gradients, model.trainable_variables))
        # Report the loss of the last batch in this epoch
        print(f"Epoch {epoch+1}, Loss: {loss.numpy()}")

    # Evaluate the model
    model.compile(optimizer=optimizer, loss=loss_fn, metrics=['accuracy'])
    loss, accuracy = model.evaluate(x_test, y_test, verbose=False)
    print(f'loss={loss}, accuracy={accuracy}')
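To get a feel for the size of the optimization problem in Example 1, the following sketch tallies the trainable parameters of the model above and the number of gradient updates its training loop performs. It is plain Python and only restates numbers from the listing. The helper name `dense_param_count` is hypothetical, and the 60,000-image training split is the standard MNIST size, assumed here rather than read from the data.

```python
# Layer sizes taken from the Sequential model in Example 1.
input_pixels = 28 * 28   # Flatten turns each 28x28 image into 784 values
hidden_units = 128
num_classes = 10


def dense_param_count(n_inputs, n_units):
    """Hypothetical helper: weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units


# Flatten and Dropout have no trainable parameters, so only the Dense layers count.
hidden_params = dense_param_count(input_pixels, hidden_units)
output_params = dense_param_count(hidden_units, num_classes)
total_params = hidden_params + output_params

# Assumption: the standard MNIST training split of 60,000 images.
train_samples = 60_000
batch_size = 1000
epochs = 5
steps_per_epoch = train_samples // batch_size
total_updates = steps_per_epoch * epochs

print(f"trainable parameters: {total_params}")
print(f"updates per epoch: {steps_per_epoch}, total updates: {total_updates}")
```

Each update therefore adjusts roughly a hundred thousand values at once, and `tape.gradient` returns all of those partial derivatives in a single call.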

The benefits of using automatic differentiation are as follows:

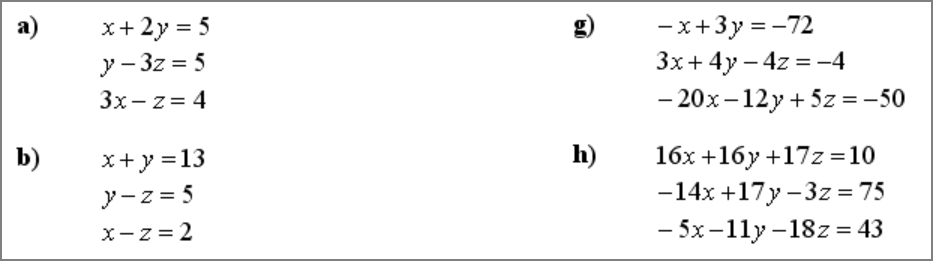

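Gradient descent is not limited to training neural networks. It can also solve other problems, such as systems of simultaneous equations and other machine learning algorithms. Let's start by implementing simultaneous equations.

Example 2. Solving simultaneous equations with automatic differentiation.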

The system of equations to solve is:

x + y = 16

10x + 25y = 250
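Written out, the code that follows turns this system into a minimization problem by summing the squared residuals of the two equations. The symbols $r_1$, $r_2$, and $L$ are notation introduced here for illustration; in the code they correspond to `y1`, `y2`, and `loss`.

```latex
\begin{aligned}
r_1 &= x + y - 16, \qquad r_2 = 10x + 25y - 250 \\
L(x, y) &= r_1^2 + r_2^2 \\
\frac{\partial L}{\partial x} &= 2 r_1 + 20 r_2, \qquad
\frac{\partial L}{\partial y} = 2 r_1 + 50 r_2
\end{aligned}
```

The loss is zero exactly when both equations hold, which for this system happens at x = 10, y = 6.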

    # Load packages
    import tensorflow as tf
    import numpy as np
    import random

    # Initialize x and y to arbitrary values
    x = tf.Variable(random.random())
    y = tf.Variable(random.random())

    # Gradient descent
    EPOCHS = 10000
    optimizer = tf.keras.optimizers.Adam(0.1)
    previous_loss = np.inf
    for i in range(EPOCHS):
        with tf.GradientTape() as tape:
            # Problem 1: residuals of the two equations
            y1 = x + y - 16
            y2 = 10*x + 25*y - 250

            # Problem 2
            # y1 = 2*x + 3*y - 9
            # y2 = x - y - 2

            # Problem 3
            # y1 = 2*x + 2 - (x-y)
            # y2 = 3*x + 2*y

            # Loss: sum of the squared residuals
            loss = y1*y1 + y2*y2

        # Calculate gradients
        dx, dy = tape.gradient(loss, [x, y])
        # Update x and y
        optimizer.apply_gradients([(dx, x), (dy, y)])

        # Stop early once the loss has stopped changing
        if abs(loss - previous_loss) < 10 ** -8:
            print(f'epochs={i+1}, stopped early!')
            break
        else:
            previous_loss = loss

    print(f'x={x.numpy()}')
    print(f'y={y.numpy()}')

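    # Verify the result with NumPy's linear solver
    # Load the package
    import numpy as np

    # Define A and B for the system A @ [x, y] = B
    A = np.array([[1, 1], [10, 25]])
    B = np.array([16, 250])
    print(B.reshape(2, 1))

    # np.linalg.solve: solve with linear algebra
    print('\nLinear algebra solution:')
    print(np.linalg.solve(A, B))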

As long as the loss function is defined flexibly, many problems can be solved with gradient descent.
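As an illustration of that idea without any framework, here is a minimal sketch that minimizes the same squared-residual loss from Example 2 using a hand-derived gradient and a plain fixed-step update. It is not the article's method: the article uses Adam with a learning rate of 0.1 and a random start, whereas this sketch assumes a start at (0, 0) and a step size of 0.001, since fixed steps above roughly 0.0014 diverge for this loss. The function names are hypothetical.

```python
def residuals(x, y):
    """Left-hand side minus right-hand side of each equation in Example 2."""
    r1 = x + y - 16
    r2 = 10 * x + 25 * y - 250
    return r1, r2


def loss_and_gradient(x, y):
    """Loss r1^2 + r2^2 and its partial derivatives, derived by hand."""
    r1, r2 = residuals(x, y)
    loss = r1 * r1 + r2 * r2
    dx = 2 * r1 + 20 * r2   # 2*r1*(dr1/dx) + 2*r2*(dr2/dx), with dr1/dx=1, dr2/dx=10
    dy = 2 * r1 + 50 * r2   # 2*r1*(dr1/dy) + 2*r2*(dr2/dy), with dr1/dy=1, dr2/dy=25
    return loss, dx, dy


def solve(learning_rate=0.001, steps=50_000):
    """Plain gradient descent with a fixed step size (assumed values, see lead-in)."""
    x, y = 0.0, 0.0
    for _ in range(steps):
        _, dx, dy = loss_and_gradient(x, y)
        x -= learning_rate * dx
        y -= learning_rate * dy
    return x, y


x, y = solve()
final_loss, _, _ = loss_and_gradient(x, y)
print(f"x={x:.6f}, y={y:.6f}, loss={final_loss:.3e}")
```

The small step size forces this version to run for tens of thousands of iterations, which is one reason an adaptive optimizer is attractive for a loss like this.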

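Many classic machine learning algorithms can be solved with gradient descent. The following example uses logistic regression to illustrate.

Example 3. Handwritten digit (MNIST) recognition with logistic regression.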

    # Source: https://stackoverflow.com/questions/56907971/logistic-regression-using-tensorflow-2-0
    # Load packages
    from tensorflow.keras.datasets import mnist
    from sklearn.model_selection import train_test_split
    import tensorflow as tf

    # Load the MNIST data and scale the features
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    x_train, x_test = x_train/255., x_test/255.

    # Split off a validation set (x_val and y_val are not used below),
    # then flatten the training and test images to 1-D vectors
    x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size=0.15)
    x_train = tf.reshape(x_train, shape=(-1, 784))
    x_test = tf.reshape(x_test, shape=(-1, 784))

    # Initialize the weights and biases to random values
    weights = tf.Variable(tf.random.normal(shape=(784, 10), dtype=tf.float64))
    biases = tf.Variable(tf.random.normal(shape=(10,), dtype=tf.float64))

    # Define y = wx + b; returns raw logits because softmax is applied inside the loss
    def logistic_regression(x):
        lr = tf.add(tf.matmul(x, weights), biases)
        #return tf.nn.sigmoid(lr)
        return lr

    # Define the cross-entropy loss
    def cross_entropy(y_true, y_pred):
        y_true = tf.one_hot(y_true, 10)  # one-hot encoding
        loss = tf.nn.softmax_cross_entropy_with_logits(labels=y_true, logits=y_pred)
        return tf.reduce_mean(loss)

    # Define the accuracy
    def accuracy(y_true, y_pred):
        y_true = tf.cast(y_true, dtype=tf.int32)
        preds = tf.cast(tf.argmax(y_pred, axis=1), dtype=tf.int32)
        preds = tf.equal(y_true, preds)
        return tf.reduce_mean(tf.cast(preds, dtype=tf.float32))

    # Compute the gradients of the loss with respect to the weights and biases
    def grad(x, y):
        with tf.GradientTape() as tape:
            y_pred = logistic_regression(x)
            loss_val = cross_entropy(y, y_pred)
        return tape.gradient(loss_val, [weights, biases])

    # Train the model
    n_batches = 10000
    learning_rate = 0.01
    batch_size = 128

    dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))
    dataset = dataset.repeat().shuffle(10000).batch(batch_size).prefetch(1)

    optimizer = tf.keras.optimizers.SGD(learning_rate)

    for batch_no, (batch_xs, batch_ys) in enumerate(dataset.as_numpy_iterator()):
        if batch_no >= n_batches:
            break
        gradients = grad(batch_xs, batch_ys)
        optimizer.apply_gradients(zip(gradients, [weights, biases]))

        if (batch_no+1) % 100 == 0:  # show training results every 100 batches
            y_pred = logistic_regression(batch_xs)
            loss = cross_entropy(batch_ys, y_pred)
            acc = accuracy(batch_ys, y_pred)
            print(f"Batch number: {batch_no+1}, loss: {loss}, accuracy: {acc}")

    # Evaluate the model on the test set
    y_pred = logistic_regression(x_test)
    loss = cross_entropy(y_test, y_pred)
    acc = accuracy(y_test, y_pred)
    print(f"accuracy: {acc}")
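To make explicit what the `cross_entropy` and `accuracy` functions in Example 3 compute, here is a dependency-free sketch for a tiny batch of three samples and three classes. The helper names and the sample logits are made up for illustration. It assumes integer class labels, so picking the true-class probability plays the role of the one-hot encoding step.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(labels, logits_batch):
    """Mean of -log(probability assigned to the true class) over the batch."""
    losses = []
    for label, logits in zip(labels, logits_batch):
        probs = softmax(logits)
        losses.append(-math.log(probs[label]))
    return sum(losses) / len(losses)


def accuracy_from_logits(labels, logits_batch):
    """Fraction of samples whose highest-scoring class equals the label."""
    hits = 0
    for label, logits in zip(labels, logits_batch):
        predicted = max(range(len(logits)), key=lambda k: logits[k])
        hits += int(predicted == label)
    return hits / len(labels)


# Made-up batch: the third sample is deliberately misclassified.
labels = [0, 2, 1]
logits_batch = [
    [2.0, 0.5, 0.1],
    [0.2, 0.1, 3.0],
    [1.5, 1.0, 0.3],
]

batch_loss = cross_entropy_from_logits(labels, logits_batch)
batch_acc = accuracy_from_logits(labels, logits_batch)
print(f"loss={batch_loss:.4f}, accuracy={batch_acc:.4f}")
```

Because the loss works on logits directly, `logistic_regression` in Example 3 can return `wx + b` without applying softmax or sigmoid itself.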

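This article showed how to solve simultaneous equations and logistic regression with gradient descent. The main message is that, with a little creativity, many problems can be solved this way. For example, style transfer in generative AI (Ghibli, The Simpsons, figurine styles, and so on) and generative adversarial networks (GANs) both work by defining a distinctive loss function that drives a neural network to generate images.

Attentive readers may also have noticed that both of these examples use a large number of training iterations. Can that be improved? In the next article we head into deeper water and look at how to adjust the learning rate dynamically and how to choose among optimizers, loss functions, activation functions, and so on, in order to improve model training.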

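Thoroughly Understanding the Core of Neural Networks -- Gradient Descent (1)

Thoroughly Understanding the Core of Neural Networks -- Gradient Descent (2)

Thoroughly Understanding the Core of Neural Networks -- Gradient Descent (3)

Thoroughly Understanding the Core of Neural Networks -- Gradient Descent (4)

Thoroughly Understanding the Core of Neural Networks -- Applications of Gradient Descent (5)

Gradient Descent (6) -- Dynamic Learning Rate Adjustment

Gradient Descent (7) -- Optimizers

Gradient Descent (8) -- Activation Functions

Gradient Descent (9) -- Loss Functions

Gradient Descent (10) -- Summary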