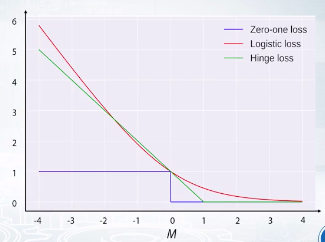

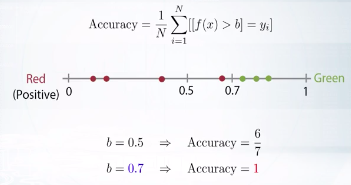

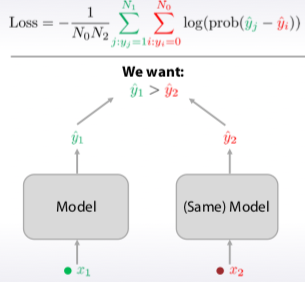

Loss function: a loss function measures how far the prediction f(x) is from the true value y.
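A minimal sketch of two common losses, assuming `preds` holds the predictions f(x) and `ys` the true values y (both names, and the sample numbers, are illustrative only). Each loss averages a per-sample gap between prediction and target:

```python
def mse(ys, preds):
    """Mean squared error: average of (f(x) - y)^2, so large gaps dominate."""
    return sum((p - y) ** 2 for y, p in zip(ys, preds)) / len(ys)


def mae(ys, preds):
    """Mean absolute error: average of |f(x) - y|, so every unit of gap counts equally."""
    return sum(abs(p - y) for y, p in zip(ys, preds)) / len(ys)


ys = [3.0, 5.0, 2.0]
preds = [2.5, 5.0, 4.0]
print(mse(ys, preds))  # (0.25 + 0.0 + 4.0) / 3
print(mae(ys, preds))  # (0.5 + 0.0 + 2.0) / 3
```

The single large miss (2 vs. 4) contributes 4 of the 4.25 squared-error total but only 2 of the 2.5 absolute-error total, which is why MSE reacts much more strongly to outliers than MAE.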

.Tree-based

XGBoost, LightGBM

.Linear models

sklearn.<>Regression

sklearn.SGDRegressor

Vowpal Wabbit (quantile loss)

.Neural nets

PyTorch, Keras, TF, etc.
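Vowpal Wabbit's quantile loss, listed above, is the pinball loss. A plain-Python sketch of the formula (an illustration only, not VW's implementation; `quantile_loss` and `tau` are names chosen here):

```python
def quantile_loss(ys, preds, tau):
    """Average pinball loss for a quantile level tau in (0, 1)."""
    total = 0.0
    for y, p in zip(ys, preds):
        diff = y - p
        # Under-prediction (y > p) is weighted by tau,
        # over-prediction (y < p) by (1 - tau).
        total += tau * diff if diff >= 0 else (tau - 1) * diff
    return total / len(ys)


ys = [3.0, 5.0, 2.0]
preds = [2.5, 5.0, 4.0]
print(quantile_loss(ys, preds, 0.5))  # half of the MAE for these values
print(quantile_loss(ys, preds, 0.9))  # under-prediction now costs 9x more than over-prediction
```

With `tau = 0.5` the loss is exactly half the MAE, so minimizing it pushes predictions toward the conditional median; this is how a quantile-loss learner can be used to optimize MAE. Other `tau` values target other quantiles.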

Screenshot from Coursera

How it works

Screenshot from Coursera

.Tree-based

XGBoost, LightGBM

.Neural nets

PyTorch, Keras, TF (not supported out of the box)

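Quadratic Weighted Kappa: unlike common statistics such as accuracy or MSE/RMSE, Quadratic Weighted Kappa measures the level of agreement between the true labels and the predictions. Each disagreement is weighted by the square of the distance between the predicted and the true rating, so predictions far from the correct answer are penalized much more heavily than near misses.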
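A minimal sketch of the standard QWK computation, assuming integer ratings in `0 .. n_classes-1` and at least two distinct ratings present (otherwise the denominator is zero). With observed matrix O, expected matrix E built from the two rating histograms, and weights w_ij = (i - j)^2 / (N - 1)^2, kappa is 1 - Σ w·O / Σ w·E. The function name is hypothetical; scikit-learn's `sklearn.metrics.cohen_kappa_score` with `weights="quadratic"` computes the same quantity.

```python
def quadratic_weighted_kappa(y_true, y_pred, n_classes):
    """QWK for integer ratings in 0 .. n_classes-1."""
    # Observed matrix: observed[i][j] counts samples with true rating i, predicted j.
    observed = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    n = len(y_true)
    # Marginal histograms of the true and predicted ratings.
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    num = 0.0
    den = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            # Quadratic weight: the cost of a disagreement grows with its squared distance.
            w = (i - j) ** 2 / (n_classes - 1) ** 2
            # Expected count if true and predicted ratings were independent.
            expected = hist_true[i] * hist_pred[j] / n
            num += w * observed[i][j]
            den += w * expected
    return 1.0 - num / den


y_true = [0, 1, 2, 3]
print(quadratic_weighted_kappa(y_true, [1, 1, 2, 3], 4))  # one miss by 1 class
print(quadratic_weighted_kappa(y_true, [3, 1, 2, 3], 4))  # one miss by 3 classes
```

Both prediction lists contain exactly one error, yet working through the formula by hand gives about 0.875 for the first and about 0.1 for the second: the quadratic weights punish the distant miss far more.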

Code for a soft kappa objective in XGBoost (Coursera notebook): https://eyusuwbavdctmvzkdnmwro.coursera-apps.org/notebooks/readonly/reading_materials/Metrics_video8_soft_kappa_xgboost.ipynb
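The notebook itself is not reproduced here. As an independent sketch: if the predictions p are continuous and the targets y are mean-centered, substituting these raw values into Quadratic Weighted Kappa gives the differentiable surrogate κ_soft = 2(y·p) / (y·y + p·p). Taking L = -κ_soft as the loss, the hypothetical functions `soft_kappa` and `soft_kappa_grad_hess` return the per-sample gradient and diagonal Hessian, i.e. the (grad, hess) pair a custom gradient-boosting objective needs. The surrogate and its derivatives are assumptions made for this sketch, not copied from the notebook.

```python
def soft_kappa(y, p):
    """Assumed differentiable kappa surrogate: 2 * (y . p) / (y . y + p . p)."""
    yp = sum(a * b for a, b in zip(y, p))
    norm = sum(a * a for a in y) + sum(b * b for b in p)
    return 2.0 * yp / norm


def soft_kappa_grad_hess(y, p):
    """Gradient and diagonal Hessian of L = -soft_kappa(y, p) with respect to each p_i."""
    s = sum(a * b for a, b in zip(y, p))                # S = y . p
    n = sum(a * a for a in y) + sum(b * b for b in p)   # N = y . y + p . p
    grad, hess = [], []
    for yi, pi in zip(y, p):
        # dL/dp_i = -2 y_i / N + 4 p_i S / N^2
        grad.append(-2.0 * yi / n + 4.0 * pi * s / n ** 2)
        # d2L/dp_i^2 = 8 y_i p_i / N^2 + 4 S / N^2 - 16 p_i^2 S / N^3
        hess.append(8.0 * yi * pi / n ** 2 + 4.0 * s / n ** 2 - 16.0 * pi ** 2 * s / n ** 3)
    return grad, hess


y = [1.0, -0.5, 0.2]   # already mean-centered targets would be used in practice
p = [0.8, -0.3, 0.5]
print(soft_kappa(y, p))
print(soft_kappa_grad_hess(y, p))
```

To try this with XGBoost, a wrapper `obj(preds, dtrain)` could call `soft_kappa_grad_hess(dtrain.get_label(), preds)`, convert the lists to NumPy arrays, and be passed as `xgb.train(params, dtrain, obj=obj)`. The targets would need centering first, and because this Hessian can be negative, clipping it to a small positive value may be necessary; neither step is shown here.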

Further reading

. Evaluation Metrics for Classification Problems: Quick Examples + References http://queirozf.com/entries/evaluation-metrics-for-classification-quick-examples-references

. Decision Trees: “Gini” vs. “Entropy” criteria https://www.garysieling.com/blog/sklearn-gini-vs-entropy-criteria

. Understanding ROC curves http://www.navan.name/roc/

. Learning to Rank using Gradient Descent -- original paper about pairwise method for AUC optimization http://icml.cc/2015/wp-content/uploads/2015/06/icml_ranking.pdf

. Overview of further developments of RankNet https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/MSR-TR-2010-82.pdf

. RankLib (implementations of the two papers above) https://sourceforge.net/p/lemur/wiki/RankLib/

. Learning to Rank Overview https://wellecks.wordpress.com/2015/01/15/learning-to-rank-overview

. Evaluation metrics for clustering http://nlp.uned.es/docs/amigo2007a.pdf
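The pairwise method for AUC optimization referenced in this list rests on a standard fact: ROC AUC equals the fraction of (positive, negative) pairs in which the positive example receives the higher score, with ties counted as half. A brute-force sketch of that pairwise view (the function name is hypothetical; it is O(P·N) and meant only for illustration):

```python
def pairwise_auc(y_true, scores):
    """AUC as the fraction of correctly ordered (positive, negative) pairs; ties count half."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    correct = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                correct += 1.0   # positive ranked above negative
            elif sp == sn:
                correct += 0.5   # tie: half credit
    return correct / (len(pos) * len(neg))


# 0.35 beats 0.1 but loses to 0.4; 0.8 beats both negatives -> 3 of 4 pairs.
print(pairwise_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

Because AUC only depends on the ordering of pairs, a pairwise loss that penalizes each misordered (positive, negative) pair is a natural differentiable proxy for it, which is the idea behind RankNet-style training.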