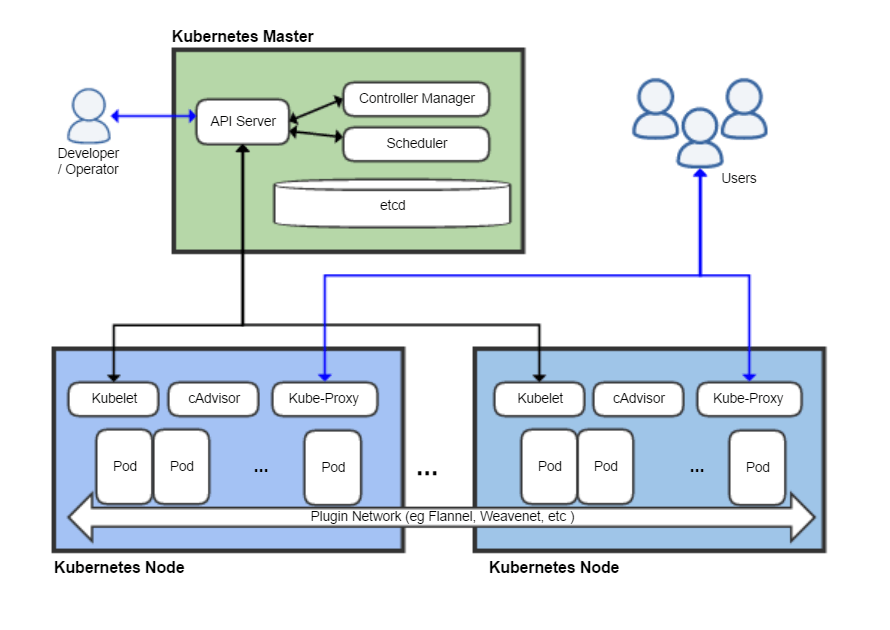

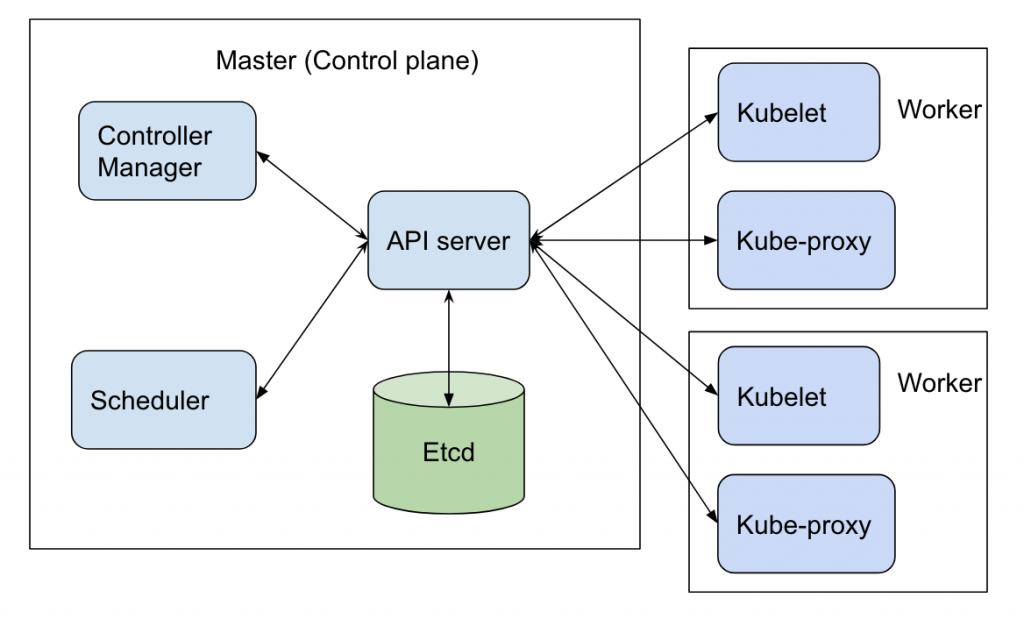

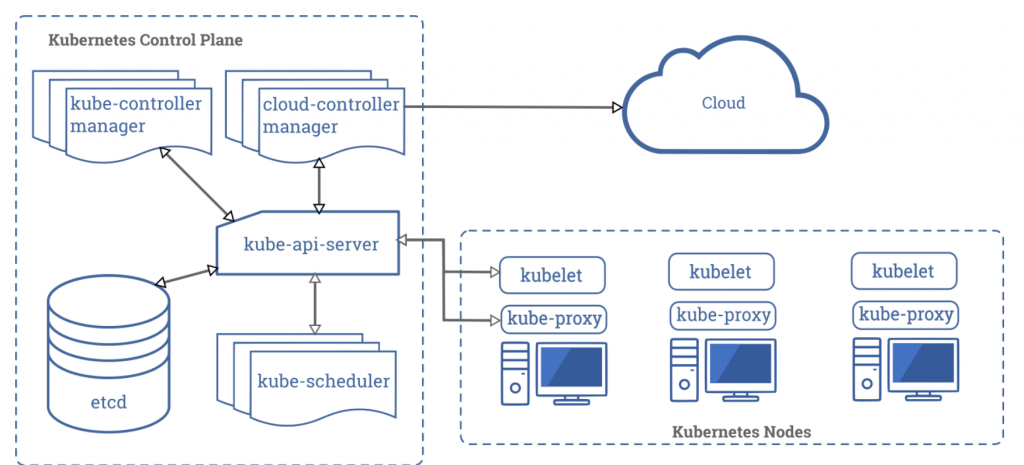

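As the previous chapters showed, Kubernetes has two kinds of Nodes: the Master Node, which makes decisions, and the Worker Node, which carries out the work. This chapter looks at the Master Node and its characteristics.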

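The Master Node manages the Worker Nodes (also called Slave Nodes or Kubernetes Nodes) by assigning and planning their work. It runs the following components to do this, and we can check their health with kubectl: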

```
$ kubectl get componentstatuses
NAME                 STATUS    MESSAGE             ERROR
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
controller-manager   Healthy   ok
scheduler            Healthy   ok
```
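Reading the table by eye works for four rows, but a script has to parse it. The sketch below assumes the whitespace-separated column layout shown above. It maps each component to its STATUS and lists any component that is not `Healthy`. The function names are hypothetical. Also note that the ComponentStatus API has been deprecated since Kubernetes v1.19. On newer clusters, the API server's `/livez` and `/readyz` endpoints are the preferred health checks.

```python
# Sample copied from the `kubectl get componentstatuses` output above.
SAMPLE = """\
NAME                 STATUS    MESSAGE             ERROR
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
controller-manager   Healthy   ok
scheduler            Healthy   ok
"""


def parse_componentstatuses(text: str) -> dict:
    """Map each component NAME to its STATUS column (hypothetical helper)."""
    rows = [line.split() for line in text.strip().splitlines() if line.strip()]
    # rows[0] is the NAME STATUS MESSAGE ERROR header, so skip it.
    return {row[0]: row[1] for row in rows[1:]}


def unhealthy(statuses: dict) -> list:
    """Names of components that do not report Healthy, sorted for stable output."""
    return sorted(name for name, status in statuses.items() if status != "Healthy")


statuses = parse_componentstatuses(SAMPLE)
print(unhealthy(statuses))  # []
```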

```
$ kubectl describe componentstatuses controller-manager
Name:         controller-manager
Namespace:
Labels:       <none>
Annotations:  <none>
API Version:  v1
Conditions:
  Message:  ok
  Status:   True
  Type:     Healthy
Kind:  ComponentStatus
Metadata:
  Creation Timestamp:  <nil>
  Self Link:           /api/v1/componentstatuses/controller-manager
Events:  <none>
```

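The Controller Manager runs the cluster's controller processes. Logically, each controller is a separate process. To reduce complexity, however, the controllers are all compiled into a single binary and run in a single process.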
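The idea of "many logically independent controllers, one process" can be modeled in a few lines. In this toy sketch, each controller is just an object with a `sync` method, and one manager runs them all against shared state. Every class, field, and method name here is hypothetical, and the behaviour is heavily simplified. The real kube-controller-manager is written in Go, runs each controller in its own goroutine, and reacts to watch events instead of a single pass.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical, heavily simplified cluster state shared by all controllers."""
    namespaces: set = field(default_factory=set)
    service_accounts: set = field(default_factory=set)  # {(namespace, name)}
    node_status: dict = field(default_factory=dict)     # {node: "Ready" or "NotReady"}
    unschedulable: set = field(default_factory=set)     # nodes new Pods should avoid


class ServiceAccountController:
    """Make sure every namespace has a 'default' service account."""

    def sync(self, cluster: Cluster) -> None:
        for ns in cluster.namespaces:
            cluster.service_accounts.add((ns, "default"))


class NodeController:
    """Flag nodes that stopped reporting Ready; unflag them when they recover."""

    def sync(self, cluster: Cluster) -> None:
        for node, status in cluster.node_status.items():
            if status == "Ready":
                cluster.unschedulable.discard(node)
            else:
                cluster.unschedulable.add(node)


class ControllerManager:
    """One process hosting several logically independent controllers."""

    def __init__(self, controllers):
        self.controllers = list(controllers)

    def run_once(self, cluster: Cluster) -> None:
        # Controllers never call each other; each one only reads and writes shared state.
        for controller in self.controllers:
            controller.sync(cluster)


cluster = Cluster(namespaces={"default", "dev"},
                  node_status={"node-1": "Ready", "node-2": "NotReady"})
manager = ControllerManager([ServiceAccountController(), NodeController()])
manager.run_once(cluster)
print(sorted(cluster.service_accounts))  # [('default', 'default'), ('dev', 'default')]
print(cluster.unschedulable)             # {'node-2'}
```

Because the controllers share nothing but the cluster state, adding another one is just another entry in the manager's list. The same property lets them be compiled into one binary without becoming entangled.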

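These controllers include:

- Node controller: notices and responds when nodes go down.
- Replication controller: maintains the correct number of Pods for every replication controller object in the system.
- Endpoints controller: populates the Endpoints objects (that is, joins Services and Pods).
- Service Account & Token controllers: create default accounts and API access tokens for new namespaces.

Worker nodes, replication, services, accounts, and so on will be covered in later chapters.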
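"Maintaining the correct number of Pods" is a reconcile step. The controller compares the desired replica count with the Pods that actually exist and computes the difference. Below is a minimal sketch of one such pass. The function name and the rule for choosing which Pods to delete are assumptions made for illustration.

```python
from typing import List, Tuple


def reconcile_replicas(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """One sync pass: return (how many Pods to create, which Pods to delete)."""
    missing = desired - len(running)
    if missing > 0:
        return missing, []
    # Which extras to delete is a policy choice; the real controller ranks
    # candidates (for example, preferring Pods that are not yet ready).
    # This sketch simply drops the tail of the list.
    return 0, running[desired:]


pods = ["web-a", "web-b", "web-c"]
pods.remove("web-b")                # a Pod dies, e.g. because its node failed
print(reconcile_replicas(3, pods))  # (1, [])         -> create one replacement
print(reconcile_replicas(1, pods))  # (0, ['web-c'])  -> scale down to one
```

Running this pass repeatedly drives the actual state toward the desired state. This is why a deleted Pod "comes back" and why scaling down removes Pods.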

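The Scheduler watches for newly created Pods that have not yet been assigned to a node and selects a Worker Node for each of them to run on. It weighs many factors in this decision, including hardware resources, software and policy constraints, and affinity and anti-affinity rules. Every Pod has its own requirements, such as how much CPU and memory it requests, or a rule like "never place this Pod on Node-1". Scheduling across a cluster is therefore far from trivial, which is why a dedicated component exists for it.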
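kube-scheduler picks a node in two broad phases. Filtering drops nodes that cannot run the Pod at all. Scoring then ranks the nodes that remain. The sketch below is a much-simplified model of that idea. Its scoring function is loosely modeled on a "least allocated" strategy that prefers the node left with the most free resources. The `excluded_nodes` field is a hypothetical stand-in for a rule like "never on Node-1", and all resource numbers are made up. The real scheduler is built from many plugins.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int      # millicores
    mem_capacity: int      # MiB
    cpu_used: int = 0
    mem_used: int = 0


@dataclass
class Pod:
    name: str
    cpu_request: int       # millicores
    mem_request: int       # MiB
    # Hypothetical stand-in for a "never on these nodes" constraint.
    excluded_nodes: set = field(default_factory=set)


def fits(pod: Pod, node: Node) -> bool:
    """Filtering: can this node run the Pod at all?"""
    return (node.cpu_used + pod.cpu_request <= node.cpu_capacity
            and node.mem_used + pod.mem_request <= node.mem_capacity
            and node.name not in pod.excluded_nodes)


def score(pod: Pod, node: Node) -> float:
    """Scoring: average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_capacity - node.cpu_used - pod.cpu_request) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_used - pod.mem_request) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node name, or None if no node fits (the Pod stays Pending)."""
    candidates = [n for n in nodes if fits(pod, n)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-1", cpu_capacity=4000, mem_capacity=8192),
    Node("node-2", cpu_capacity=2000, mem_capacity=4096, cpu_used=1500),
    Node("node-3", cpu_capacity=4000, mem_capacity=8192, cpu_used=1000),
]
print(schedule(Pod("web", 500, 512, excluded_nodes={"node-1"}), nodes))  # node-3
print(schedule(Pod("big", 8000, 1024), nodes))                            # None
```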

Tip: affinity comes in two kinds, Node affinity and Pod affinity. Affinity is itself a scheduling policy and will be covered in detail in a later chapter.
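As a preview of Node affinity, a required node-affinity rule lists `nodeSelectorTerms`, and each term holds `matchExpressions`. The terms are ORed together, while the expressions inside one term are ANDed. The sketch below evaluates such a rule against a node's labels for the `In`, `NotIn`, `Exists` and `DoesNotExist` operators. The real API also supports `Gt` and `Lt`, which are omitted here. The `disktype` label is only an example, while `kubernetes.io/hostname` is a well-known node label. The function names are hypothetical.

```python
def expr_matches(expr: dict, labels: dict) -> bool:
    """Evaluate one matchExpressions entry against a node's labels."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        # A node without the label also satisfies NotIn.
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not handled in this sketch: {op}")


def node_matches(node_selector_terms: list, labels: dict) -> bool:
    """Terms are ORed together; expressions inside one term are ANDed."""
    return any(
        all(expr_matches(e, labels) for e in term["matchExpressions"])
        for term in node_selector_terms
    )


# Roughly: "run on an SSD node, but never on node-1".
terms = [{"matchExpressions": [
    {"key": "disktype", "operator": "In", "values": ["ssd"]},
    {"key": "kubernetes.io/hostname", "operator": "NotIn", "values": ["node-1"]},
]}]
nodes = {
    "node-1": {"disktype": "ssd", "kubernetes.io/hostname": "node-1"},
    "node-2": {"disktype": "ssd", "kubernetes.io/hostname": "node-2"},
    "node-3": {"disktype": "hdd", "kubernetes.io/hostname": "node-3"},
}
print([n for n, labels in nodes.items() if node_matches(terms, labels)])  # ['node-2']
```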

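Every cluster runs one or more etcd members. etcd is a consistent and highly available key-value store that Kubernetes uses as the backing store for all cluster data. Because the entire cluster state lives there, make sure you have a backup plan for etcd's data.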
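To make "key-value backing store" concrete, the toy store below mimics a few etcd traits. Every write bumps a store-wide revision, reads can range over a key prefix, and the whole store can be snapshotted and restored. By default, kube-apiserver keeps objects under keys that start with `/registry` (configurable with its `--etcd-prefix` flag). The keys below follow that pattern, but the values are simplified placeholders. `TinyKV` is hypothetical, and its JSON "snapshot" only stands in for a real backup such as `etcdctl snapshot save`.

```python
import json


class TinyKV:
    """In-memory stand-in for etcd: every write bumps a store-wide revision."""

    def __init__(self):
        self.revision = 0
        self.data = {}  # key -> (value, revision of last modification)

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self.data[key] = (value, self.revision)
        return self.revision

    def get_prefix(self, prefix: str) -> dict:
        """Range read over all keys starting with prefix, in key order."""
        return {k: v for k, (v, _) in sorted(self.data.items()) if k.startswith(prefix)}

    def snapshot(self) -> str:
        """Serialize the whole store; a stand-in for a real etcd snapshot."""
        return json.dumps({"revision": self.revision, "data": self.data})

    @classmethod
    def restore(cls, blob: str) -> "TinyKV":
        state = json.loads(blob)
        kv = cls()
        kv.revision = state["revision"]
        kv.data = {k: tuple(v) for k, v in state["data"].items()}
        return kv


kv = TinyKV()
kv.put("/registry/pods/default/web-0", '{"kind": "Pod"}')
kv.put("/registry/pods/kube-system/coredns-0", '{"kind": "Pod"}')
kv.put("/registry/deployments/default/web", '{"kind": "Deployment"}')
print(list(kv.get_prefix("/registry/pods/")))
# ['/registry/pods/default/web-0', '/registry/pods/kube-system/coredns-0']

backup = kv.snapshot()
restored = TinyKV.restore(backup)
print(restored.revision)  # 3
```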

The API server is a central component of the Kubernetes Control Plane. It exposes the Kubernetes API and can be thought of as the front end of the Control Plane. Whenever we use kubectl, we are in fact talking to the API server. In summary, the API server has the following functions:

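With kubectl proxy, we can reach the API server from the local machine. Requesting the proxy's root path then lists the cluster's top-level API paths: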

```
$ kubectl proxy
Starting to serve on 127.0.0.1:8001

# in another terminal (or open the URL in a browser)
$ curl http://127.0.0.1:8001/
{
  "paths": [
    "/api",
    "/api/v1",
    "/apis",
    "/apis/",
    "/apis/admissionregistration.k8s.io",
    "/apis/admissionregistration.k8s.io/v1",
    "/apis/admissionregistration.k8s.io/v1beta1",
    "/apis/apiextensions.k8s.io",
    "/apis/apiextensions.k8s.io/v1",
    "/apis/apiextensions.k8s.io/v1beta1",
    "/apis/apiregistration.k8s.io",
    "/apis/apiregistration.k8s.io/v1",
    "/apis/apiregistration.k8s.io/v1beta1",
    "/apis/apps",
    "/apis/apps/v1",
    "/apis/authentication.k8s.io",
    "/apis/authentication.k8s.io/v1",
    "/apis/authentication.k8s.io/v1beta1",
    "/apis/authorization.k8s.io",
    "/apis/authorization.k8s.io/v1",
    "/apis/authorization.k8s.io/v1beta1",
    "/apis/autoscaling",
    "/apis/autoscaling/v1",
    "/apis/autoscaling/v2beta1",
    "/apis/autoscaling/v2beta2",
    "/apis/batch",
    "/apis/batch/v1",
    "/apis/batch/v1beta1",
    "/apis/certificates.k8s.io",
    "/apis/certificates.k8s.io/v1beta1",
    "/apis/cloud.google.com",
    "/apis/cloud.google.com/v1",
    "/apis/cloud.google.com/v1beta1",
    "/apis/coordination.k8s.io",
    "/apis/coordination.k8s.io/v1",
    "/apis/coordination.k8s.io/v1beta1",
    "/apis/discovery.k8s.io",
    "/apis/discovery.k8s.io/v1beta1",
    "/apis/extensions",
    "/apis/extensions/v1beta1",
    "/apis/metrics.k8s.io",
    "/apis/metrics.k8s.io/v1beta1",
    "/apis/migration.k8s.io",
    "/apis/migration.k8s.io/v1alpha1",
    "/apis/networking.gke.io",
    "/apis/networking.gke.io/v1beta1",
    "/apis/networking.gke.io/v1beta2",
    "/apis/networking.k8s.io",
    "/apis/networking.k8s.io/v1",
    "/apis/networking.k8s.io/v1beta1",
    "/apis/node.k8s.io",
    "/apis/node.k8s.io/v1beta1",
    "/apis/nodemanagement.gke.io",
    "/apis/nodemanagement.gke.io/v1alpha1",
    "/apis/policy",
    "/apis/policy/v1beta1",
    "/apis/rbac.authorization.k8s.io",
    "/apis/rbac.authorization.k8s.io/v1",
    "/apis/rbac.authorization.k8s.io/v1beta1",
    "/apis/scheduling.k8s.io",
    "/apis/scheduling.k8s.io/v1",
    "/apis/scheduling.k8s.io/v1beta1",
    "/apis/snapshot.storage.k8s.io",
    "/apis/snapshot.storage.k8s.io/v1beta1",
    "/apis/storage.k8s.io",
    "/apis/storage.k8s.io/v1",
    "/apis/storage.k8s.io/v1beta1",
    "/healthz",
    "/healthz/SSH Tunnel Check",
    "/healthz/autoregister-completion",
    "/healthz/etcd",
    "/healthz/log",
    "/healthz/ping",
    "/healthz/poststarthook/apiservice-openapi-controller",
    "/healthz/poststarthook/apiservice-registration-controller",
    "/healthz/poststarthook/apiservice-status-available-controller",
    "/healthz/poststarthook/bootstrap-controller",
    "/healthz/poststarthook/crd-informer-synced",
    "/healthz/poststarthook/generic-apiserver-start-informers",
    "/healthz/poststarthook/kube-apiserver-autoregistration",
    "/healthz/poststarthook/rbac/bootstrap-roles",
    "/healthz/poststarthook/scheduling/bootstrap-system-priority-classes",
    "/healthz/poststarthook/start-apiextensions-controllers",
    "/healthz/poststarthook/start-apiextensions-informers",
    "/healthz/poststarthook/start-cluster-authentication-info-controller",
    "/healthz/poststarthook/start-kube-aggregator-informers",
    "/healthz/poststarthook/start-kube-apiserver-admission-initializer",
    "/livez",
    "/livez/SSH Tunnel Check",
    "/livez/autoregister-completion",
    "/livez/etcd",
    "/livez/log",
    "/livez/ping",
    "/livez/poststarthook/apiservice-openapi-controller",
    "/livez/poststarthook/apiservice-registration-controller",
    "/livez/poststarthook/apiservice-status-available-controller",
    "/livez/poststarthook/bootstrap-controller",
    "/livez/poststarthook/crd-informer-synced",
    "/livez/poststarthook/generic-apiserver-start-informers",
    "/livez/poststarthook/kube-apiserver-autoregistration",
    "/livez/poststarthook/rbac/bootstrap-roles",
    "/livez/poststarthook/scheduling/bootstrap-system-priority-classes",
    "/livez/poststarthook/start-apiextensions-controllers",
    "/livez/poststarthook/start-apiextensions-informers",
    "/livez/poststarthook/start-cluster-authentication-info-controller",
    "/livez/poststarthook/start-kube-aggregator-informers",
    "/livez/poststarthook/start-kube-apiserver-admission-initializer",
    "/logs",
    "/metrics",
    "/openapi/v2",
    "/readyz",
    "/readyz/SSH Tunnel Check",
    "/readyz/autoregister-completion",
    "/readyz/etcd",
    "/readyz/informer-sync",
    "/readyz/log",
    "/readyz/ping",
    "/readyz/poststarthook/apiservice-openapi-controller",
    "/readyz/poststarthook/apiservice-registration-controller",
    "/readyz/poststarthook/apiservice-status-available-controller",
    "/readyz/poststarthook/bootstrap-controller",
    "/readyz/poststarthook/crd-informer-synced",
    "/readyz/poststarthook/generic-apiserver-start-informers",
    "/readyz/poststarthook/kube-apiserver-autoregistration",
    "/readyz/poststarthook/rbac/bootstrap-roles",
    "/readyz/poststarthook/scheduling/bootstrap-system-priority-classes",
    "/readyz/poststarthook/start-apiextensions-controllers",
    "/readyz/poststarthook/start-apiextensions-informers",
    "/readyz/poststarthook/start-cluster-authentication-info-controller",
    "/readyz/poststarthook/start-kube-aggregator-informers",
    "/readyz/poststarthook/start-kube-apiserver-admission-initializer",
    "/readyz/shutdown",
    "/version"
  ]
}
```
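The paths above follow a regular layout. The core (legacy) group is served under `/api/<version>`, and every named group such as `apps` or `batch` is served under `/apis/<group>/<version>`. Namespaced resources then add `namespaces/<namespace>/<resource>`. The sketch below groups the root listing by API group and builds resource paths from that layout. The helper names are hypothetical, and `fetch_root` assumes `kubectl proxy` is listening on its default `127.0.0.1:8001`. Without the proxy, `kubectl get --raw <path>` can request the same paths.

```python
import json
import urllib.request
from collections import defaultdict
from typing import Optional


def api_versions(root_doc: str) -> dict:
    """Map each API group to the versions listed in the JSON served at '/'."""
    groups = defaultdict(list)
    for path in json.loads(root_doc)["paths"]:
        parts = path.strip("/").split("/")
        if parts[0] == "api" and len(parts) == 2:
            groups["core"].append(parts[1])      # /api/<version>
        elif parts[0] == "apis" and len(parts) == 3:
            groups[parts[1]].append(parts[2])    # /apis/<group>/<version>
    return dict(groups)


def resource_path(group: str, version: str, resource: str,
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build a REST path; the core group ("") lives under /api, others under /apis."""
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


def fetch_root(url: str = "http://127.0.0.1:8001/") -> str:
    """Fetch the root document; assumes `kubectl proxy` is running locally."""
    with urllib.request.urlopen(url) as resp:
        return resp.read().decode()


sample = ('{"paths": ["/api", "/api/v1", "/apis/apps", "/apis/apps/v1", '
          '"/apis/batch/v1", "/apis/batch/v1beta1", "/healthz/etcd"]}')
print(api_versions(sample))
# {'core': ['v1'], 'apps': ['v1'], 'batch': ['v1', 'v1beta1']}
print(resource_path("apps", "v1", "deployments", namespace="default"))
# /apis/apps/v1/namespaces/default/deployments
```

With the proxy running, `api_versions(fetch_root())` summarizes the full listing shown above. Requesting a path such as `resource_path("", "v1", "pods", namespace="default")` through the proxy returns the Pods in the `default` namespace.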

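Finally, let's revisit the Kubernetes cluster architecture. The Master Node covered in this chapter plays the role of the cluster's Control Plane. It makes the decisions and handles the scheduling for everything in Kubernetes. The components communicate through the api-server, and all of the resulting data is stored in etcd.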
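One consequence of this architecture is that only the API server reads and writes etcd directly. The Scheduler and the controllers go through the API server like any other client. The toy simulation below shows that flow: a Pod is created through the API server, and the Scheduler lists the Pods with no node assigned and binds each one to the least-loaded node. All class and method names are hypothetical. The real Scheduler watches for Pods whose `spec.nodeName` is empty and records its decision through the Pod's binding subresource.

```python
class Etcd:
    """Stand-in for the etcd cluster: just a dict of key -> object."""

    def __init__(self):
        self.store = {}


class APIServer:
    """The only component in this sketch that touches etcd directly."""

    def __init__(self, etcd: Etcd):
        self._etcd = etcd

    def create(self, key: str, obj: dict) -> None:
        self._etcd.store[key] = dict(obj)

    def list_objects(self, prefix: str) -> dict:
        # Return copies so callers cannot mutate stored state behind the API's back.
        return {k: dict(v) for k, v in self._etcd.store.items() if k.startswith(prefix)}

    def bind(self, key: str, node: str) -> None:
        self._etcd.store[key]["nodeName"] = node


class Scheduler:
    """Works only through the API server: list unscheduled Pods, then bind them."""

    def __init__(self, api: APIServer, nodes: list):
        self.api = api
        self.nodes = nodes

    def run_once(self) -> None:
        pods = self.api.list_objects("/registry/pods/")
        load = {n: 0 for n in self.nodes}
        for pod in pods.values():
            if pod.get("nodeName") in load:
                load[pod["nodeName"]] += 1
        for key in sorted(pods):
            if pods[key].get("nodeName") is None:
                target = min(self.nodes, key=lambda n: load[n])  # least-loaded node
                self.api.bind(key, target)
                load[target] += 1


etcd = Etcd()
api = APIServer(etcd)
for name in ["web-0", "web-1", "web-2"]:
    # Roughly what a `kubectl apply` ends up causing inside the API server.
    api.create(f"/registry/pods/default/{name}", {"name": name})
Scheduler(api, ["node-1", "node-2"]).run_once()
print({v["name"]: v["nodeName"] for v in etcd.store.values()})
# {'web-0': 'node-1', 'web-1': 'node-2', 'web-2': 'node-1'}
```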

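This chapter introduced the basic principles and key components of the Master Node. I hope it gives readers who are new to Kubernetes a solid foundation. The next chapter will cover the Worker Node (Slave Node).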

https://kubernetes.io/docs/concepts/overview/components/