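Yesterday I put in an enormous amount of effort to walk you through the derivation of BGAN's generator loss function, and today we get to implement BGAN. Don't worry: once the loss function is defined, the coding part is just as simple.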

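In terms of implementation, BGAN differs from a regular GAN in only a few places:

- the generator is trained with the Boundary Loss;
- the activation function is Leaky ReLU.

As before, we use BGAN to generate images from the MNIST handwritten digit dataset, and this BGAN is again adapted from DCGAN.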

Apart from the Keras backend API (used for the custom loss), which is new, everything here is much the same as in DCGAN:

```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import Input, Dense, Reshape, Flatten, BatchNormalization, LeakyReLU, Conv2DTranspose, Conv2D
from tensorflow.keras.models import Model, save_model
from tensorflow.keras.optimizers import Adam
import tensorflow.keras.backend as K
import matplotlib.pyplot as plt
import numpy as np
import os
```

Data preprocessing follows the usual routine and is exactly the same:

```python
def load_data(self):
    (x_train, _), (_, _) = mnist.load_data()  # underscores mark data we don't use
    x_train = (x_train / 127.5) - 1  # normalize pixels to [-1, 1]
    x_train = x_train.reshape((-1, 28, 28, 1))
    return x_train
```
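As a quick sanity check, the pure-Python sketch below confirms the arithmetic behind this preprocessing. The helper names normalize and to_display are hypothetical. The (x / 127.5) - 1 mapping in load_data sends pixel values 0..255 into [-1, 1], which matches the generator's tanh output range. The (x + 1) / 2 step in sample maps values back to [0, 1] for display.

```python
# Hypothetical helpers mirroring the arithmetic in load_data() and sample()
def normalize(pixel):
    """Map a pixel value in [0, 255] to [-1, 1], as load_data() does."""
    return pixel / 127.5 - 1

def to_display(value):
    """Map a tanh-range value in [-1, 1] to [0, 1], as sample() does before imshow."""
    return (value + 1) / 2

# 0 is black, 127.5 is the midpoint, 255 is white
for p in (0, 127.5, 255):
    print(p, normalize(p), to_display(normalize(p)))
```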

The model initialization is no different either:

```python
class BGAN():
    def __init__(self, generator_lr, discriminator_lr):
        self.generator_lr = generator_lr
        self.discriminator_lr = discriminator_lr

        self.discriminator = self.build_discriminator()
        self.generator = self.build_generator()
        self.adversarial = self.build_adversarialmodel()

        self.gloss = []
        self.dloss = []

        if not os.path.exists('result/BGAN/imgs'):  # folder for the images produced during training
            os.makedirs('result/BGAN/imgs')  # create it here in case you forgot to
```

The only thing to watch here is that the adversarial model is compiled with the Boundary Loss.

Generator:

The generator is the same as DCGAN's.

```python
def build_generator(self):
    input_ = Input(shape=(100, ))

    x = Dense(7*7*32)(input_)
    x = BatchNormalization(momentum=0.8)(x)
    x = LeakyReLU(alpha=0.2)(x)
    x = Reshape((7, 7, 32))(x)

    x = Conv2DTranspose(128, kernel_size=4, strides=2, padding='same')(x)
    x = BatchNormalization(momentum=0.8)(x)
    x = LeakyReLU(alpha=0.2)(x)

    x = Conv2DTranspose(256, kernel_size=4, strides=2, padding='same')(x)
    x = BatchNormalization(momentum=0.8)(x)
    x = LeakyReLU(alpha=0.2)(x)

    out = Conv2DTranspose(1, kernel_size=4, strides=1, padding='same', activation='tanh')(x)

    model = Model(inputs=input_, outputs=out, name='Generator')
    model.summary()
    return model
```
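To see why this stack ends at a 28×28×1 image, the sketch below traces the spatial shape layer by layer. It assumes the Keras rule for Conv2DTranspose with padding='same' and no output_padding, which gives output size = input size × stride. The helper names are hypothetical.

```python
# Sketch: trace the generator's output shape, assuming Conv2DTranspose with
# padding='same' produces (input size * stride) along each spatial axis.
def transpose_same(size, stride):
    return size * stride

def generator_shapes():
    shapes = [("Dense", (7 * 7 * 32,))]
    h, c = 7, 32
    shapes.append(("Reshape", (h, h, c)))
    # (filters, stride) of the three Conv2DTranspose layers above
    for filters, stride in [(128, 2), (256, 2), (1, 1)]:
        h = transpose_same(h, stride)
        shapes.append(("Conv2DTranspose", (h, h, filters)))
    return shapes

for name, shape in generator_shapes():
    print(name, shape)
```

The two stride-2 layers do the 7 → 14 → 28 upsampling. The final stride-1 layer only reduces the channels to one grayscale channel.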

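Discriminator:

A discriminator that is too deep can easily make the training gradients explode, so I reduced the number of layers.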

```python
def build_discriminator(self):
    input_ = Input(shape = (28, 28, 1))

    x = Conv2D(256, kernel_size=4, strides=2, padding='same')(input_)
    x = LeakyReLU(alpha=0.2)(x)

    x = Conv2D(128, kernel_size=4, strides=2, padding='same')(x)
    x = LeakyReLU(alpha=0.2)(x)

    x = Flatten()(x)
    out = Dense(1, activation='sigmoid')(x)

    model = Model(inputs=input_, outputs=out, name='Discriminator')

    dis_optimizer = Adam(learning_rate=self.discriminator_lr, beta_1=0.5)
    model.compile(loss='binary_crossentropy',
                  optimizer=dis_optimizer,
                  metrics=['accuracy'])
    model.summary()
    return model
```
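A rough way to check the size of this shallower discriminator is to work out its shapes and parameter counts by hand. The sketch assumes the Keras rule for Conv2D with padding='same', which gives output size = ceil(input / stride), plus one bias per filter. Under those assumptions the totals should line up with what model.summary() prints. The helper names are hypothetical.

```python
import math

# Sketch: shapes and parameter count of the discriminator above, assuming
# Conv2D with padding='same' yields ceil(input / stride) per spatial axis.
def conv_same(size, stride):
    return math.ceil(size / stride)

def conv_params(kernel, in_ch, out_ch):
    # kernel*kernel*in_ch weights per filter, plus one bias per filter
    return kernel * kernel * in_ch * out_ch + out_ch

def discriminator_summary():
    h, ch, total = 28, 1, 0
    for filters in (256, 128):  # the two Conv2D layers, kernel 4, stride 2
        total += conv_params(4, ch, filters)
        h, ch = conv_same(h, 2), filters
    flat = h * h * ch
    total += flat + 1  # Dense(1): one weight per flattened feature plus a bias
    return h, flat, total

print(discriminator_summary())
```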

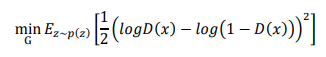

Boundary Loss: the Boundary Loss is also fairly simple to implement. Let's review the conclusion from yesterday.
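The formula below is read off the boundary_loss code that follows, so treat it as a reconstruction from that code rather than a copy of yesterday's derivation. D is the discriminator, G the generator, and z the noise sampled from a standard normal distribution. In the code, the expectation is approximated by the mean over a batch.

$$
\mathcal{L}_G = \frac{1}{2}\,\mathbb{E}_{z \sim p(z)}\Big[\big(\log D(G(z)) - \log\big(1 - D(G(z))\big)\big)^2\Big]
$$

The bracketed term is the log-odds of D(G(z)). It vanishes exactly when D(G(z)) = 1/2, that is, on the discriminator's decision boundary.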

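Next, let's write it as code:

Keras always calls a loss function as loss(y_true, y_pred) during training, so even though y_true isn't used here, it still has to appear in the function definition! y_pred is the discriminator D's verdict on the images produced by the generator inside the adversarial model.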

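```python
def boundary_loss(self, y_true, y_pred):
    # y_true is unused, but Keras passes (y_true, y_pred), so it must stay in the signature.
    # Clip so that log() stays finite if the sigmoid output saturates at 0 or 1.
    y_pred = K.clip(y_pred, K.epsilon(), 1 - K.epsilon())
    return 0.5 * K.mean((K.log(y_pred) - K.log(1 - y_pred)) ** 2)
```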
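To get a feel for what this loss rewards, here is a pure-Python sketch of the same formula applied to a list of discriminator outputs D(G(z)). The helper boundary_loss_py is hypothetical and is not the Keras version. An eps clip is added only so that log() stays finite. The loss is zero at 0.5 and symmetric around it, so the generator is pushed toward samples the discriminator cannot classify, which is where the name "boundary seeking" comes from.

```python
import math

# Hypothetical pure-Python version of the boundary loss for a list of
# discriminator outputs in [0, 1]; eps clipping keeps log() finite.
def boundary_loss_py(preds, eps=1e-7):
    total = 0.0
    for p in preds:
        p = min(max(p, eps), 1 - eps)
        total += (math.log(p) - math.log(1 - p)) ** 2
    return 0.5 * total / len(preds)

for d in (0.01, 0.25, 0.5, 0.75, 0.99):
    print(d, round(boundary_loss_py([d]), 4))
```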

Adversarial model:

The adversarial model uses boundary_loss as its loss; nothing else has changed.

```python
def build_adversarialmodel(self):
    noise_input = Input(shape=(100, ))
    generator_sample = self.generator(noise_input)

    self.discriminator.trainable = False  # freeze D while the generator is trained
    out = self.discriminator(generator_sample)
    model = Model(inputs=noise_input, outputs=out)

    adv_optimizer = Adam(learning_rate=self.generator_lr, beta_1=0.5)
    model.compile(loss=self.boundary_loss, optimizer=adv_optimizer)
    model.summary()
    return model
```
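The only change from DCGAN here is the loss passed to compile. The sketch below compares the two generator losses for a single discriminator output d = D(G(z)). The first is what a DCGAN-style adversarial model would get from binary_crossentropy with the valid label 1, which reduces to -log d, ignoring Keras' internal clipping. The second is the boundary loss used here. Both helper functions are hypothetical illustrations.

```python
import math

# Generator loss from binary_crossentropy with label 1, for one output d
def bce_with_valid_label(d):
    return -math.log(d)

# Boundary loss for one output d
def boundary(d):
    return 0.5 * (math.log(d) - math.log(1 - d)) ** 2

for d in (0.1, 0.5, 0.9, 0.99):
    print(f"d={d}: bce={bce_with_valid_label(d):.4f}  boundary={boundary(d):.4f}")
```

With binary cross-entropy the loss keeps shrinking as d approaches 1, so the generator is driven to fool D completely. The boundary loss bottoms out at d = 0.5 and rises again beyond it, so even though valid labels are still passed to train_on_batch, they are ignored.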

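Training step:

This part is also completely unchanged XD. Today feels pretty relaxed. After killing so many brain cells yesterday, a bit of balance is welcome.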

```python
def train(self, epochs, batch_size=128, sample_interval=50):
    # Prepare the training data
    x_train = self.load_data()

    # Prepare the training labels: real (1) and fake (0)
    valid = np.ones((batch_size, 1))
    fake = np.zeros((batch_size, 1))

    # Note: each "epoch" here is a single training step on one batch
    for epoch in range(epochs):
        # Randomly pick a batch of real images
        idx = np.random.randint(0, x_train.shape[0], batch_size)
        imgs = x_train[idx]

        # Sample noise from a standard normal distribution
        noise = np.random.normal(0, 1, (batch_size, 100))

        # Generate a batch of fake images
        gen_imgs = self.generator.predict(noise)

        # Train the discriminator to tell real images from fake ones
        d_loss_real = self.discriminator.train_on_batch(imgs, valid)
        d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)
        d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

        # Record the discriminator loss (index 0 is the loss, index 1 the accuracy)
        self.dloss.append(d_loss[0])

        # Train the generator through the adversarial model
        noise = np.random.normal(0, 1, (batch_size, 100))
        g_loss = self.adversarial.train_on_batch(noise, valid)

        # Record the generator loss
        self.gloss.append(g_loss)

        # Print this step's training info
        print(f"Epoch:{epoch} [D loss: {d_loss[0]}, acc: {100 * d_loss[1]:.2f}] [G loss: {g_loss}]")

        # Every sample_interval steps, generate and save images to record progress
        if epoch % sample_interval == 0:
            self.sample(epoch)

    self.save_data()
```
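Because each loop iteration is one batch rather than a full pass over MNIST, it helps to estimate how much data the run actually sees. The sketch assumes the standard 60,000-image MNIST training set. It gives an expected-value estimate only, since np.random.randint samples indices with replacement. Note that the discriminator sees two batches per iteration, one real and one fake. The helper names are hypothetical.

```python
# Sketch: rough data usage of the training loop above (hypothetical helpers).
def equivalent_passes(iterations, batch_size, dataset_size=60000):
    # expected number of passes over the real training images
    return iterations * batch_size / dataset_size

def num_saved_samples(iterations, sample_interval):
    # sample() runs whenever epoch % sample_interval == 0, for epoch in range(iterations)
    return len(range(0, iterations, sample_interval))

print(equivalent_passes(10000, 128))
print(num_saved_samples(10000, 200))
```

With the settings used later (10000 iterations, batch size 128, sample_interval 200), that is roughly 21 passes over the data and 50 saved image grids.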

Saving data is also unchanged.

Saving the loss history and the models (architecture and weights):

```python
def save_data(self):
    np.save(file='./result/BGAN/generator_loss.npy', arr=np.array(self.gloss))
    np.save(file='./result/BGAN/discriminator_loss.npy', arr=np.array(self.dloss))
    save_model(model=self.generator, filepath='./result/BGAN/Generator.h5')
    save_model(model=self.discriminator, filepath='./result/BGAN/Discriminator.h5')
    save_model(model=self.adversarial, filepath='./result/BGAN/Adversarial.h5')
```

Generating images with the model:

```python
def sample(self, epoch=None, num_images=25, save=True):
    r = int(np.sqrt(num_images))
    noise = np.random.normal(0, 1, (num_images, 100))
    gen_imgs = self.generator.predict(noise)
    gen_imgs = (gen_imgs + 1) / 2  # map the tanh output from [-1, 1] back to [0, 1]

    fig, axs = plt.subplots(r, r)
    count = 0
    for i in range(r):
        for j in range(r):
            axs[i, j].imshow(gen_imgs[count, :, :, 0], cmap='gray')
            axs[i, j].axis('off')
            count += 1

    if save:
        fig.savefig(f"./result/BGAN/imgs/{epoch}epochs.png")
    else:
        plt.show()
    plt.close()
```

The hyperparameters are set as follows:

| Parameter | Value |
|---|---|
| Generator learning rate | 0.0002 |
| Discriminator learning rate | 0.0002 |
| Batch size | 128 |
| Training iterations | 10000 |

```python
if __name__ == '__main__':
    gan = BGAN(generator_lr=0.0002, discriminator_lr=0.0002)
    gan.train(epochs=10000, batch_size=128, sample_interval=200)
    gan.sample(save=False)
```

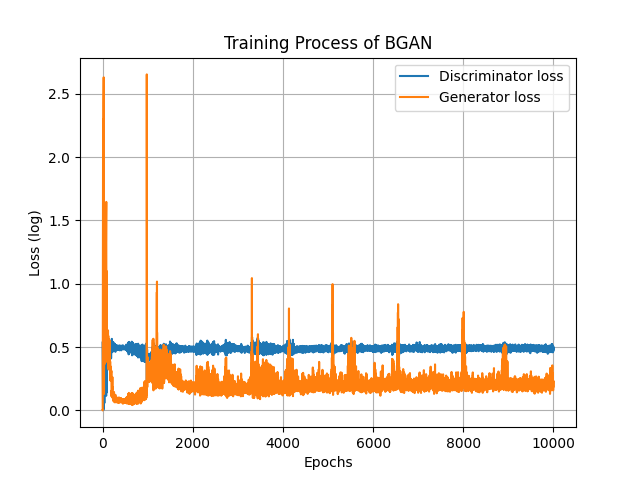

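The loss curves are shown below. The discriminator's loss stays stable throughout, while the generator gradually learns to produce images that the discriminator rates well.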
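The per-iteration losses saved by save_data are noisy. One option is to smooth them with a simple moving average before plotting. The sketch below is a hypothetical helper, and the window size of 100 is an arbitrary assumption. The commented usage assumes the .npy files written by save_data and requires numpy and matplotlib.

```python
# Hypothetical helper: simple moving average over a list of loss values.
def moving_average(values, window=100):
    if window < 1:
        raise ValueError("window must be >= 1")
    out, running = [], 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]  # drop the value that left the window
        out.append(running / min(i + 1, window))
    return out

# Possible usage with the files written by save_data():
#   import numpy as np
#   import matplotlib.pyplot as plt
#   g = np.load('./result/BGAN/generator_loss.npy')
#   plt.plot(moving_average(list(g)))
#   plt.show()
```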

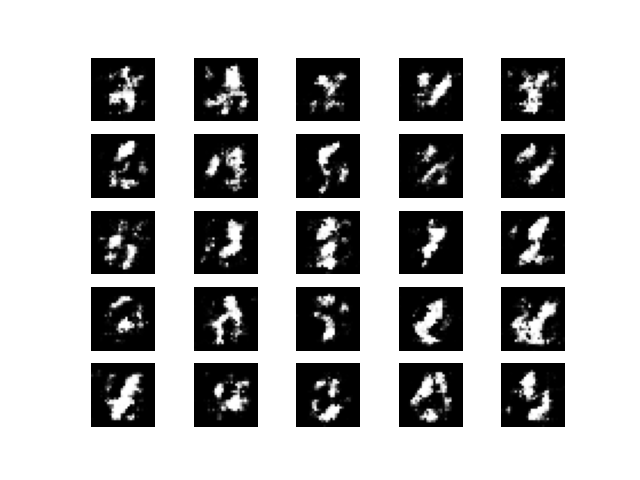

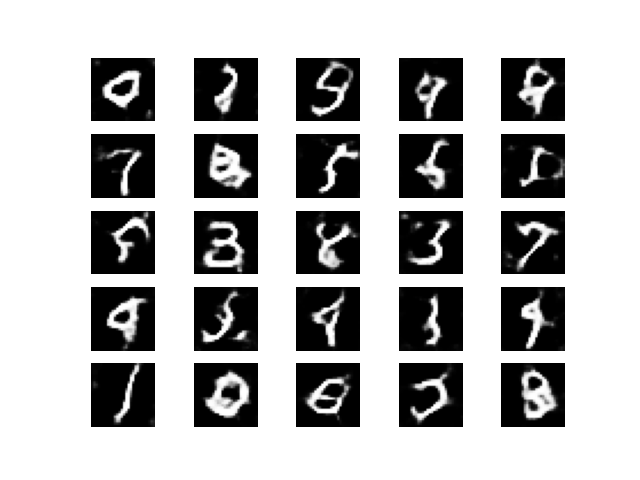

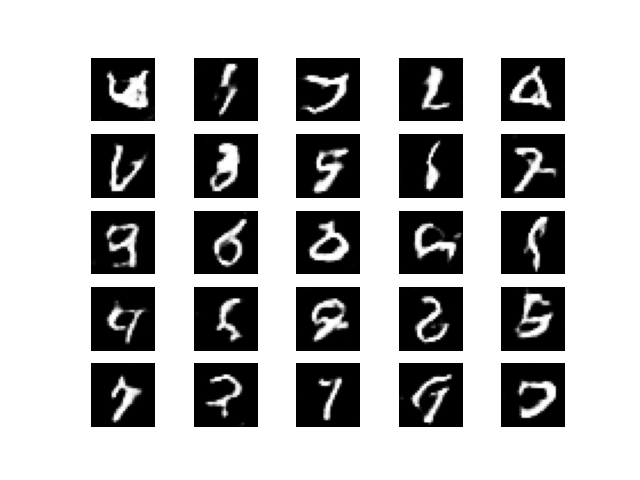

Here is how training progressed. Diversity seems to have improved, but the model also generates things that aren't digits at all XD

Epoch=200

Epoch=2000

Epoch=5000: turning the 0 into a triangle is... honestly pretty cute.

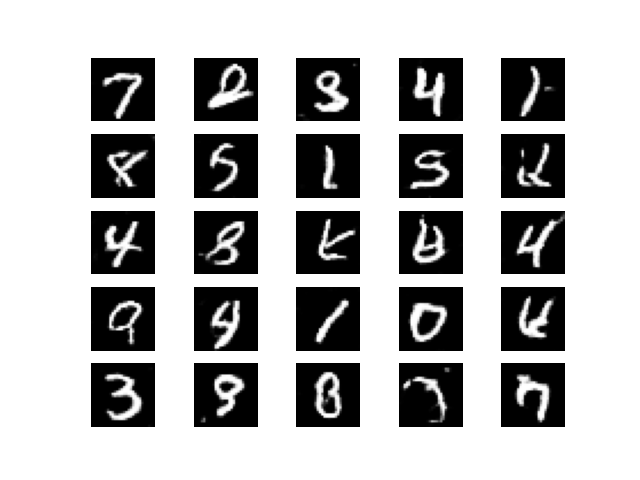

Epoch=10000: some images are recognizable as digits, but a lot of strange symbols show up too.

As usual, I've compiled the training process into an animation for you to enjoy:

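Today we implemented BGAN. The three GANs introduced over the past few days all take noise as input and generate images at random, so we have no control over what they produce. To generate images the way we want, we need to add conditional control, and I'll start covering how to add it tomorrow. I hope these days of implementation and theory have given you a better understanding of how GANs work. The models will get more and more complex from here, but also more and more fun!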

Complete code:

```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import Input, Dense, Reshape, Flatten, BatchNormalization, LeakyReLU, Conv2DTranspose, Conv2D
from tensorflow.keras.models import Model, save_model
from tensorflow.keras.optimizers import Adam
import tensorflow.keras.backend as K
import matplotlib.pyplot as plt
import numpy as np
import os


class BGAN():
    def __init__(self, generator_lr, discriminator_lr):
        self.generator_lr = generator_lr
        self.discriminator_lr = discriminator_lr

        self.discriminator = self.build_discriminator()
        self.generator = self.build_generator()
        self.adversarial = self.build_adversarialmodel()

        self.gloss = []
        self.dloss = []

        if not os.path.exists('result/BGAN/imgs'):  # folder for the images produced during training
            os.makedirs('result/BGAN/imgs')  # create it here in case you forgot to

    def load_data(self):
        (x_train, _), (_, _) = mnist.load_data()  # underscores mark data we don't use
        x_train = (x_train / 127.5) - 1  # normalize pixels to [-1, 1]
        x_train = x_train.reshape((-1, 28, 28, 1))
        return x_train

    def boundary_loss(self, y_true, y_pred):
        """
        Boundary seeking loss.
        Reference: https://wiseodd.github.io/techblog/2017/03/07/boundary-seeking-gan/
        """
        # Clip so that log() stays finite if the sigmoid output saturates at 0 or 1
        y_pred = K.clip(y_pred, K.epsilon(), 1 - K.epsilon())
        return 0.5 * K.mean((K.log(y_pred) - K.log(1 - y_pred)) ** 2)

    def build_generator(self):
        input_ = Input(shape=(100, ))

        x = Dense(7*7*32)(input_)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)
        x = Reshape((7, 7, 32))(x)

        x = Conv2DTranspose(128, kernel_size=4, strides=2, padding='same')(x)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)

        x = Conv2DTranspose(256, kernel_size=4, strides=2, padding='same')(x)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)

        out = Conv2DTranspose(1, kernel_size=4, strides=1, padding='same', activation='tanh')(x)

        model = Model(inputs=input_, outputs=out, name='Generator')
        model.summary()
        return model

    def build_discriminator(self):
        input_ = Input(shape = (28, 28, 1))

        x = Conv2D(256, kernel_size=4, strides=2, padding='same')(input_)
        x = LeakyReLU(alpha=0.2)(x)

        x = Conv2D(128, kernel_size=4, strides=2, padding='same')(x)
        x = LeakyReLU(alpha=0.2)(x)

        x = Flatten()(x)
        out = Dense(1, activation='sigmoid')(x)

        model = Model(inputs=input_, outputs=out, name='Discriminator')

        dis_optimizer = Adam(learning_rate=self.discriminator_lr, beta_1=0.5)
        model.compile(loss='binary_crossentropy',
                      optimizer=dis_optimizer,
                      metrics=['accuracy'])
        model.summary()
        return model

    def build_adversarialmodel(self):
        noise_input = Input(shape=(100, ))
        generator_sample = self.generator(noise_input)

        self.discriminator.trainable = False  # freeze D while the generator is trained
        out = self.discriminator(generator_sample)
        model = Model(inputs=noise_input, outputs=out)

        adv_optimizer = Adam(learning_rate=self.generator_lr, beta_1=0.5)
        model.compile(loss=self.boundary_loss, optimizer=adv_optimizer)
        model.summary()
        return model

    def train(self, epochs, batch_size=128, sample_interval=50):
        # Prepare the training data
        x_train = self.load_data()

        # Prepare the training labels: real (1) and fake (0)
        valid = np.ones((batch_size, 1))
        fake = np.zeros((batch_size, 1))

        # Note: each "epoch" here is a single training step on one batch
        for epoch in range(epochs):
            # Randomly pick a batch of real images
            idx = np.random.randint(0, x_train.shape[0], batch_size)
            imgs = x_train[idx]

            # Sample noise from a standard normal distribution
            noise = np.random.normal(0, 1, (batch_size, 100))

            # Generate a batch of fake images
            gen_imgs = self.generator.predict(noise)

            # Train the discriminator to tell real images from fake ones
            d_loss_real = self.discriminator.train_on_batch(imgs, valid)
            d_loss_fake = self.discriminator.train_on_batch(gen_imgs, fake)
            d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

            # Record the discriminator loss (index 0 is the loss, index 1 the accuracy)
            self.dloss.append(d_loss[0])

            # Train the generator through the adversarial model
            noise = np.random.normal(0, 1, (batch_size, 100))
            g_loss = self.adversarial.train_on_batch(noise, valid)

            # Record the generator loss
            self.gloss.append(g_loss)

            # Print this step's training info
            print(f"Epoch:{epoch} [D loss: {d_loss[0]}, acc: {100 * d_loss[1]:.2f}] [G loss: {g_loss}]")

            # Every sample_interval steps, generate and save images to record progress
            if epoch % sample_interval == 0:
                self.sample(epoch)

        self.save_data()

    def save_data(self):
        np.save(file='./result/BGAN/generator_loss.npy', arr=np.array(self.gloss))
        np.save(file='./result/BGAN/discriminator_loss.npy', arr=np.array(self.dloss))
        save_model(model=self.generator, filepath='./result/BGAN/Generator.h5')
        save_model(model=self.discriminator, filepath='./result/BGAN/Discriminator.h5')
        save_model(model=self.adversarial, filepath='./result/BGAN/Adversarial.h5')

    def sample(self, epoch=None, num_images=25, save=True):
        r = int(np.sqrt(num_images))
        noise = np.random.normal(0, 1, (num_images, 100))
        gen_imgs = self.generator.predict(noise)
        gen_imgs = (gen_imgs + 1) / 2  # map the tanh output from [-1, 1] back to [0, 1]

        fig, axs = plt.subplots(r, r)
        count = 0
        for i in range(r):
            for j in range(r):
                axs[i, j].imshow(gen_imgs[count, :, :, 0], cmap='gray')
                axs[i, j].axis('off')
                count += 1

        if save:
            fig.savefig(f"./result/BGAN/imgs/{epoch}epochs.png")
        else:
            plt.show()
        plt.close()


if __name__ == '__main__':
    gan = BGAN(generator_lr=0.0002, discriminator_lr=0.0002)
    gan.train(epochs=10000, batch_size=128, sample_interval=200)
    gan.sample(save=False)
```