Today we continue implementing the English-to-Chinese translation neural network.

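First, import the required modules and functions:

import pickle as pkl # needed below to load the saved sentence pairs

from tensorflow.keras.preprocessing import sequence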

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.utils import to_categorical

First, load the seq_pairs built yesterday and split them into English (source language) and Chinese (target language) sentences:

with open("data/eng-cn.pkl", "rb") as f:

    seq_pairs = pkl.load(f)

src_sentences = [pair[0] for pair in seq_pairs]

tgt_sentences = [pair[1] for pair in seq_pairs]

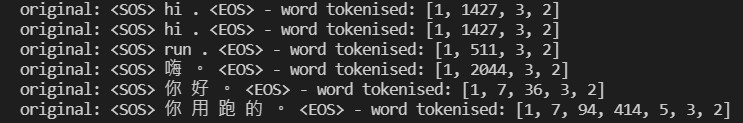
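As a small self-contained sketch of this load-and-split step, the snippet below writes a few made-up sentence pairs to a temporary pickle file, reads them back, and splits them. The sample pairs and the temporary path are assumptions for illustration only; the real data lives in data/eng-cn.pkl. It also assumes each pair stores the English sentence first and the space-segmented Chinese sentence second, as the code above implies.

```python
import os
import pickle as pkl
import tempfile

# Hypothetical sample pairs: [English, Chinese segmented by spaces]
sample_pairs = [
    ["hi .", "嗨 。"],
    ["i won !", "我 贏 了 。"],
    ["good night .", "晚安 。"],
]

# Round-trip through a temporary pickle file (a stand-in for data/eng-cn.pkl)
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "pairs.pkl")
    with open(path, "wb") as f:
        pkl.dump(sample_pairs, f)
    with open(path, "rb") as f:
        loaded_pairs = pkl.load(f)

# Split into source and target sentences; zip(*...) is an equivalent
# alternative to writing two separate list comprehensions
src_demo, tgt_demo = (list(column) for column in zip(*loaded_pairs))
print(src_demo)
print(tgt_demo)
```

Either way of splitting gives two lists of equal length, aligned by position.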

We create separate tokenisers for the Chinese and English sentences:

def create_tokeniser(sentences):

    # create a tokeniser fitted to the given sentences; only spaces are filtered out

    tokeniser = Tokenizer(filters = ' ')

    tokeniser.fit_on_texts(sentences)

    # convert the sentences to integer sequences once and reuse the result

    sequences = tokeniser.texts_to_sequences(sentences)

    # preview the first 3 sentences versus their word tokenised versions

    for sentence, tokens in zip(sentences[:3], sequences[:3]):

        print("original: {} - word tokenised: {}".format(sentence, tokens))

    return sequences, tokeniser

# word tokenise source and target sentences

src_word_tokenised, src_tokeniser = create_tokeniser(src_sentences)

tgt_word_tokenised, tgt_tokeniser = create_tokeniser(tgt_sentences)
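To make concrete what a fitted tokeniser roughly does, here is a pure-Python sketch of the idea behind fit_on_texts() and texts_to_sequences(): count the words, give more frequent words smaller indices starting from 1, then map each sentence to its indices. This is a simplified stand-in written for illustration, not Keras's actual implementation. The helper names fit_word_index and to_sequences and the sample sentences are hypothetical.

```python
from collections import Counter

def fit_word_index(sentences):
    """Build a word -> index map; more frequent words get smaller indices.

    Index 0 is deliberately left unused so it can serve as padding later.
    """
    counts = Counter()
    for sentence in sentences:
        counts.update(sentence.lower().split())
    # most_common() sorts by descending count; ties keep first-seen order
    return {word: i for i, (word, _) in enumerate(counts.most_common(), start=1)}

def to_sequences(sentences, word_index):
    """Replace each known word by its index; unknown words are skipped."""
    return [
        [word_index[w] for w in sentence.lower().split() if w in word_index]
        for sentence in sentences
    ]

demo_sentences = ["i like tea .", "i like coffee .", "tea is good ."]
demo_index = fit_word_index(demo_sentences)
demo_sequences = to_sequences(demo_sentences, demo_index)
print(demo_index)
print(demo_sequences)
```

In this toy corpus the full stop appears three times, so it receives index 1, and every sentence ends in 1 once tokenised.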

Next, collect the Chinese and English vocabularies and their sizes:

# source and target vocabulary dictionaries

src_vocab_dict = src_tokeniser.word_index

tgt_vocab_dict = tgt_tokeniser.word_index

src_vocab_size = len(src_vocab_dict) + 1 # 6819 tokens in total

tgt_vocab_size = len(tgt_vocab_dict) + 1 # 3574 tokens in total
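The + 1 is there because the word indices start at 1, which leaves index 0 free for the padding value used later. A tiny sketch with a made-up word index (demo_word_index and one_hot are hypothetical names) shows why anything indexed by token id needs len(word_index) + 1 slots:

```python
# Hypothetical word index in the same shape as tokeniser.word_index
demo_word_index = {".": 1, "i": 2, "like": 3, "tea": 4}

# Indices run from 1 to len(demo_word_index); 0 is kept for padding
demo_vocab_size = len(demo_word_index) + 1

def one_hot(token_id, size):
    """Return a one-hot list of length `size` with a 1 at `token_id`."""
    vector = [0] * size
    vector[token_id] = 1
    return vector

# The largest index only fits because of the extra slot
largest_id = max(demo_word_index.values())
print(demo_vocab_size)
print(one_hot(largest_id, demo_vocab_size))
```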

Then compute the maximum length of the token sequences:

src_max_seq_length = len(max(src_word_tokenised, key = len)) # 38

tgt_max_seq_length = len(max(tgt_word_tokenised, key = len)) # 46

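To build the training feature vectors X and the label vectors y, we first need to pad every sentence with 0s up to the maximum length. This is where the pad_sequences() function imported earlier comes in. Note that the padding argument must be set to "post" so that the zeros are appended at the end of each sequence (the default, "pre", would put them at the front):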

src_sentences_padded = pad_sequences(src_word_tokenised, maxlen = src_max_seq_length, padding = "post") # shape: (26388, 38)

tgt_sentences_padded = pad_sequences(tgt_word_tokenised, maxlen = tgt_max_seq_length, padding = "post") # shape: (26388, 46)

# add a trailing dimension of size 1

src_sentences_padded = src_sentences_padded.reshape(*src_sentences_padded.shape, 1) # shape: (26388, 38, 1)

tgt_sentences_padded = tgt_sentences_padded.reshape(*tgt_sentences_padded.shape, 1) # shape: (26388, 46, 1)
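As a rough pure-Python illustration of post-padding and the extra trailing dimension, the sketch below pads made-up token sequences to the longest length and then wraps each token id in its own list. The helper names pad_post and add_last_dim are hypothetical; they only imitate, on nested lists, what pad_sequences(..., padding = "post") and reshape(..., 1) do on arrays, and truncation of over-long sequences is not handled here.

```python
def pad_post(sequences, maxlen, value=0):
    """Append `value` to each sequence until it has `maxlen` items."""
    return [list(seq) + [value] * (maxlen - len(seq)) for seq in sequences]

def add_last_dim(padded):
    """Turn shape (n, maxlen) into (n, maxlen, 1) using nested lists."""
    return [[[token] for token in row] for row in padded]

demo_sequences = [[2, 3, 4, 1], [5, 1], [6]]

# Longest sequence length; equivalent to len(max(demo_sequences, key=len))
demo_max_len = max(len(seq) for seq in demo_sequences)

demo_padded = pad_post(demo_sequences, demo_max_len)
demo_3d = add_last_dim(demo_padded)
print(demo_padded)
print(demo_3d[1])
```

The zeros land after the real tokens, and each row keeps the same length once the trailing dimension is added.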

That's all for today. Tomorrow we'll continue building the dataset. Good night!